id,node_id,number,title,user,state,locked,assignee,milestone,comments,created_at,updated_at,closed_at,author_association,pull_request,body,repo,type,active_lock_reason,performed_via_github_app,reactions,draft,state_reason

267515678,MDU6SXNzdWUyNjc1MTU2Nzg=,3,"Make individual column values addressable, with smart content types",9599,open,0,,,1,2017-10-23T01:11:32Z,2017-12-10T03:11:58Z,,OWNER,,"Some SQLite databases embed images in columns. It would be cool if these had URLs.

/database-name-7sha256/table-name/compound-pk/column

/database-name-7sha256/table-name/compound-pk/column.json

/database-name-7sha256/table-name/compound-pk/column.png

/database-name-7sha256/table-name/compound-pk/column.gif

/database-name-7sha256/table-name/compound-pk/column.txt

The one without an explicit file extension auto-detects the correct extension.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/3/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

267542338,MDU6SXNzdWUyNjc1NDIzMzg=,13,Add a syntax highlighting SQL editor,9599,closed,0,,,1,2017-10-23T05:03:33Z,2017-11-15T02:04:51Z,2017-11-15T02:04:51Z,OWNER,,https://ace.c9.io/#nav=embedding looks like a good option,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/13/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

267707940,MDU6SXNzdWUyNjc3MDc5NDA=,14,Datasette Plugins,9599,closed,0,,,22,2017-10-23T15:15:28Z,2019-05-13T18:58:20Z,2019-05-13T18:58:19Z,OWNER,,"It would be neat if additional functionality could be opted-in to the system in the form of easy-to-add plugins, hosted as separate packages. First example: a Google Analytics plugin, which adds GA tracking code with your tracking ID to the web interface for your dataset.

This may be an opportunity to experiment with entry points: http://amir.rachum.com/blog/2017/07/28/python-entry-points/",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/14/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

267739593,MDU6SXNzdWUyNjc3Mzk1OTM=,18,See if I can get a websockets interface working,9599,closed,0,,,1,2017-10-23T16:46:41Z,2021-01-04T20:05:52Z,2021-01-04T20:05:48Z,OWNER,,"Since I am already running on Sanic, how hard would it be to add a websocket endpoint that lets you talk to sqlite interactively?

Could this be used to efficiently support streaming in answers to giant queries?",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/18/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

267857622,MDU6SXNzdWUyNjc4NTc2MjI=,25,Endpoint that returns SQL ready to be piped into DB,9599,closed,0,,,2,2017-10-24T00:19:26Z,2017-11-15T05:11:12Z,2017-11-15T05:11:11Z,OWNER,,It would be cool if I could figure out a way to generate both the create table statements and the inserts for an individual table or the entire database and then stream them down to the client.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/25/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

267886330,MDU6SXNzdWUyNjc4ODYzMzA=,27,Ability to plot a simple graph,9599,closed,0,,,3,2017-10-24T03:34:59Z,2018-07-10T17:52:41Z,2018-07-10T17:52:41Z,OWNER,,"Might be as simple as: pick the type of chart (bar, line) and then pick the column for the X axis and the column for the Y axis. Maybe also allow a pie chart. It’s up to the user to come up with SQL that gets the right values.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/27/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268078453,MDU6SXNzdWUyNjgwNzg0NTM=,30,Do something neat with foreign keys,9599,closed,0,,,1,2017-10-24T15:29:29Z,2017-11-14T18:29:08Z,2017-11-14T18:29:01Z,OWNER,,"https://www.sqlite.org/pragma.html#pragma_foreign_key_list

SQLite has robust support for introspecting foreign keys. I could use that to automatically link to the corresponding record from my tables.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/30/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268110769,MDU6SXNzdWUyNjgxMTA3Njk=,33,Use locust for benchmarking and load tests,9599,open,0,,,0,2017-10-24T17:00:09Z,2017-12-10T03:12:16Z,,OWNER,,"https://github.com/locustio/locust

Needed for #32 ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/33/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

268176505,MDU6SXNzdWUyNjgxNzY1MDU=,34,Support CSV export with a .csv extension,9599,closed,0,,,1,2017-10-24T20:34:43Z,2021-06-17T18:14:48Z,2018-05-28T20:45:34Z,OWNER,,"Maybe do this using streaming with multiple pagination SQL queries so we can support arbitrarily large exports.

How would this work against a view which doesn’t have an obvious efficient pagination mechanism? Maybe limit views to up to 1000 exported records?
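
A rough sketch of the streaming idea, assuming a table with an integer primary key called `id`. The helper name, the table and the page size are all made up for illustration and are not Datasette code. Each page is fetched with a keyset query (`where id > ?`), so memory use stays bounded however large the export is:

```python
import csv
import io
import sqlite3


def stream_csv(conn, table, pk='id', page_size=100):
    # Hypothetical helper: yields CSV text one page at a time.
    # Assumes table and pk are trusted identifiers, not user input.
    last_pk = None
    wrote_header = False
    while True:
        if last_pk is None:
            sql = f'select * from [{table}] order by [{pk}] limit ?'
            args = (page_size,)
        else:
            sql = f'select * from [{table}] where [{pk}] > ? order by [{pk}] limit ?'
            args = (last_pk, page_size)
        cursor = conn.execute(sql, args)
        rows = cursor.fetchall()
        if not rows:
            break
        names = [d[0] for d in cursor.description]
        buffer = io.StringIO()
        writer = csv.writer(buffer)
        if not wrote_header:
            writer.writerow(names)
            wrote_header = True
        writer.writerows(rows)
        yield buffer.getvalue()
        # Keyset pagination: remember the last primary key we saw.
        last_pk = rows[-1][names.index(pk)]
```

A view has no primary key to paginate on, which is why a hard row cap is a plausible fallback there.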

Relates to #5 ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/34/reactions"", ""total_count"": 2, ""+1"": 2, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268262480,MDU6SXNzdWUyNjgyNjI0ODA=,36,"date, year, month and day querystring lookups",9599,closed,0,,,3,2017-10-25T04:23:45Z,2018-05-28T17:30:53Z,2018-05-28T17:30:53Z,OWNER,,"- [ ] `?timestamp___date=2017-07-17` - return every item where the timestamp falls on that date

- [ ] `?timestamp___year=2017` - return every item where the timestamp falls within 2017

- [ ] `?timestamp___month=1` - return every item where the month component is January

- [ ] `?timestamp___day=10` - return every item where the day-of-the-month component is 10
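
One way these lookups could map onto SQL, as a sketch only. It assumes timestamps are stored as ISO 8601 text (which the SQLite `date()` and `strftime()` functions understand), and the `date_lookup` helper is a hypothetical name, not part of Datasette:

```python
import sqlite3

# strftime() format codes for the component lookups.
STRFTIME_FORMATS = {'year': '%Y', 'month': '%m', 'day': '%d'}


def date_lookup(column, lookup, value):
    # Returns a (where_clause, params) pair for one lookup.
    # The column name is assumed to be a trusted identifier.
    if lookup == 'date':
        return f'date([{column}]) = ?', [value]
    fmt = STRFTIME_FORMATS[lookup]
    # Cast to integer so that month=1 matches the text 01.
    return f'cast(strftime(?, [{column}]) as integer) = ?', [fmt, int(value)]


conn = sqlite3.connect(':memory:')
conn.execute('create table items (id integer primary key, timestamp text)')
conn.executemany(
    'insert into items (timestamp) values (?)',
    [('2017-07-17 10:00:00',), ('2017-01-10 09:30:00',), ('2016-01-05 12:00:00',)],
)
clause, params = date_lookup('timestamp', 'year', '2017')
rows = conn.execute(f'select id from items where {clause} order by id', params).fetchall()
```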

Follow on from #23 ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/36/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268453968,MDU6SXNzdWUyNjg0NTM5Njg=,37,Ability to serialize massive JSON without blocking event loop,9599,closed,0,,,2,2017-10-25T15:58:03Z,2020-05-30T17:29:20Z,2020-05-30T17:29:20Z,OWNER,,"We run the risk of someone attempting a select statement that returns thousands of rows and hence takes several seconds just to JSON encode the response, effectively blocking the event loop and pausing all other traffic.

The Twisted community have a solution for this, can we adapt that in some way? http://as.ynchrono.us/2010/06/asynchronous-json_18.html?m=1",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/37/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268462768,MDU6SXNzdWUyNjg0NjI3Njg=,38,Experiment with patterns for concurrent long running queries,9599,closed,0,,,5,2017-10-25T16:23:42Z,2018-05-28T20:47:31Z,2018-05-28T20:47:31Z,OWNER,,I want to understand how the system could perform under load with many concurrent long-running queries. Can we serve these without blocking the event loop?,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/38/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

268591332,MDU6SXNzdWUyNjg1OTEzMzI=,42,Homepage UI for editing metadata file,9599,closed,0,,,4,2017-10-26T00:22:03Z,2017-12-10T03:02:14Z,2017-12-10T03:02:14Z,OWNER,,"Since we are going to have a metadata file which sets the title/description/etc for each database, why not allow you to run the app in --dev mode which makes the homepage into a WYSIWYG editor that can save to that file format.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/42/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

269731374,MDU6SXNzdWUyNjk3MzEzNzQ=,44,?_group_count=country - return counts by specific column(s),9599,closed,0,,,7,2017-10-30T19:50:32Z,2018-04-26T15:09:58Z,2018-04-26T15:09:58Z,OWNER,,"Imagine if this:

https://stateless-datasets-jykibytogk.now.sh/flights-07d1283/airports.jsono?country__contains=gu&_group_count=country

Turned into this:

https://stateless-datasets-jykibytogk.now.sh/flights-07d1283?sql=select%20country,%20count(*)%20as%20group_count_country%20from%20airports%20where%20country%20like%20%27%gu%%27%20group%20by%20country%20order%20by%20group_count_country%20desc

This would involve introducing a new precedent of query string arguments that start with an _ having special meanings. While we're at it, could try adding _fields=x,y,z
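
A minimal sketch of the rewrite described above, assuming the table and column names are trusted identifiers. The `group_count_sql` helper is hypothetical and only illustrates the shape of the generated query:

```python
import sqlite3


def group_count_sql(table, group_column, where=None):
    # Builds the group-by query that _group_count=<column> would stand for.
    alias = f'group_count_{group_column}'
    sql = f'select [{group_column}], count(*) as [{alias}] from [{table}]'
    if where:
        sql += f' where {where}'
    sql += f' group by [{group_column}] order by [{alias}] desc'
    return sql


conn = sqlite3.connect(':memory:')
conn.execute('create table airports (id integer primary key, country text)')
conn.executemany(
    'insert into airports (country) values (?)',
    [('Guatemala',), ('Guatemala',), ('Guam',), ('France',)],
)
sql = group_count_sql('airports', 'country', where='country like :p')
counts = conn.execute(sql, {'p': '%gu%'}).fetchall()
```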

Tasks:

- [x] Get initial version working

- [ ] Refactor code to not just ""pretend to be a view""

- [ ] Get foreign key relationships expanded",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/44/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

271301468,MDU6SXNzdWUyNzEzMDE0Njg=,46,Dockerfile should build more recent SQLite with FTS5 and spatialite support,9599,closed,0,,,13,2017-11-05T18:16:22Z,2017-11-17T14:32:12Z,2017-11-17T14:32:12Z,OWNER,,"The SQLite bundled with Python 3 doesn't support the FTS5 search extension. It would be nice if the SQLite built by our Dockerfile could support as many modern SQLite features as possible.

https://web.archive.org/web/20170212034155/http://charlesleifer.com/blog/using-the-sqlite-json1-and-fts5-extensions-with-python/ has instructions on building a more recent SQLite and the pysqlite package. Our Dockerfile could carry out an updated version of this process.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/46/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

271831408,MDU6SXNzdWUyNzE4MzE0MDg=,47,Create neat example database,9599,closed,0,,,5,2017-11-07T13:29:38Z,2017-11-14T03:08:13Z,2017-11-14T03:08:13Z,OWNER,,How about data from open elections eg https://github.com/openelections/openelections-data-ca?files=1,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/47/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

272391665,MDU6SXNzdWUyNzIzOTE2NjU=,48,Switch to ujson,9599,closed,0,,,4,2017-11-08T23:50:29Z,2019-06-24T06:57:54Z,2019-06-24T06:57:43Z,OWNER,,"ujson is already a dependency of Sanic, and should be quite a bit faster.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/48/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273026602,MDU6SXNzdWUyNzMwMjY2MDI=,52,Solution for temporarily uploading DB so it can be built by docker,9599,closed,0,,,2,2017-11-10T18:55:25Z,2017-12-10T03:02:57Z,2017-12-10T03:02:57Z,OWNER,,For the `datasette publish` command I ideally need a way of uploading the specified DB to somewhere temporary on the internet so that when the Dockerfile is built by the final hosting location it can download that database as part of the build process.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/52/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273127443,MDU6SXNzdWUyNzMxMjc0NDM=,56,Easy way to block search engine crawling in robots.txt,9599,closed,0,,,1,2017-11-11T07:46:07Z,2018-05-28T20:50:25Z,2018-05-28T20:50:24Z,OWNER,,For people who don't want their datasets to be crawled by search engines.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/56/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273127694,MDU6SXNzdWUyNzMxMjc2OTQ=,57,Ship a Docker image of the whole thing,9599,closed,0,,,7,2017-11-11T07:51:28Z,2018-06-28T04:01:51Z,2018-06-28T04:01:38Z,OWNER,,"The generated Docker images can then just inherit from that. This will speed up deploys as no need to `pip install` anything.

- [x] Ship that image to Docker Hub

- [ ] Update the generated Dockerfile to use it",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/57/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273157085,MDU6SXNzdWUyNzMxNTcwODU=,59,datasette publish hyper,9599,closed,0,,,4,2017-11-11T16:27:26Z,2019-05-13T19:01:00Z,2019-05-13T19:00:44Z,OWNER,,"This is a bit tricky, because unlike Now there doesn't seem to be a way to tell Hyper to ""build this Dockerfile and deploy the resulting image"". They expect you to build a container and publish it to a registry instead.

https://docs.hyper.sh/Reference/CLI/load.html allows you to publish an image directly from a tarball, but that still leaves the challenge of creating that image. The nice thing about the Now integration is that you don't need to have Docker installed on your local machine.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/59/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273181020,MDU6SXNzdWUyNzMxODEwMjA=,64,Support for ?field__isnull=1 or similar,9599,closed,0,,,1,2017-11-11T22:26:52Z,2017-11-17T14:38:21Z,2017-11-17T14:38:21Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/64/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273296178,MDU6SXNzdWUyNzMyOTYxNzg=,73,_nocache=1 query string option for use with sort-by-random,9599,closed,0,,,2,2017-11-13T02:57:10Z,2018-05-28T17:25:15Z,2018-05-28T17:25:15Z,OWNER,,The one place where we wouldn’t want caching is if we have something which uses sort by random to return random items. We can offer a _nocache=1 querystring argument to support this.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/73/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273569068,MDU6SXNzdWUyNzM1NjkwNjg=,79,Add more detailed API documentation to the README,9599,closed,0,,,3,2017-11-13T20:36:21Z,2018-05-28T17:24:48Z,2018-05-28T17:24:48Z,OWNER,,"Need to document:

- [ ] The ?column__gt=4 style filter arguments for tables

- [ ] The ?sql= API, and how named parameters work

- [ ] How API pagination works

- [ ] How redirects and cache headers work",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/79/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273626815,MDU6SXNzdWUyNzM2MjY4MTU=,83,Individual row view is broken,9599,closed,0,,,0,2017-11-14T00:29:11Z,2017-11-14T00:45:34Z,2017-11-14T00:45:34Z,OWNER,,"https://parlgov.datasettes.com/parlgov-25f9855/viewcalc_parliament_composition/18

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/83/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273660425,MDU6SXNzdWUyNzM2NjA0MjU=,84,datasette package --metadata does not work with a relative path,9599,closed,0,,,0,2017-11-14T04:00:50Z,2017-11-15T05:18:35Z,2017-11-15T05:18:35Z,OWNER,," $ datasette package ~/parlgov-db/parlgov.db --metadata=~/parlgov-db/parlgov.json

Usage: datasette package [OPTIONS] FILES...

Error: Invalid value for ""-m"" / ""--metadata"": Could not open file: ~/parlgov-db/parlgov.json: No such file or directory

simonw-07542:~ simonw$ cd ~/parlgov-db/

simonw-07542:parlgov-db simonw$ datasette package ~/parlgov-db/parlgov.db --metadata=parlgov.json

Sending build context to Docker daemon 4.46MB

Step 1/7 : FROM python:3

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/84/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273709194,MDU6SXNzdWUyNzM3MDkxOTQ=,87,Configure Travis to release new tags to PyPI,9599,closed,0,,,1,2017-11-14T08:44:08Z,2018-07-10T17:49:13Z,2018-07-10T17:49:12Z,OWNER,,https://docs.travis-ci.com/user/deployment/pypi/,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/87/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273846123,MDU6SXNzdWUyNzM4NDYxMjM=,90,datasette publish heroku,9599,closed,0,,,8,2017-11-14T16:01:39Z,2017-12-10T03:06:34Z,2017-12-10T03:05:48Z,OWNER,,"Heroku has Docker container support so this should not be too hard:

https://devcenter.heroku.com/articles/container-registry-and-runtime

See also #59

This should work exactly like the existing “datasette publish now....” command except it would be “datasette publish heroku...”",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/90/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273878873,MDU6SXNzdWUyNzM4Nzg4NzM=,91,"Option to serve databases from a different prefix, serve regular content elsewhere",9599,closed,0,,,1,2017-11-14T17:32:46Z,2017-12-10T03:07:58Z,2017-12-10T03:07:53Z,OWNER,,"It would be useful if the databases themselves could be served from a prefix e.g.

datasette serve mydb.db --path-prefix=db

Now my database is at `http://localhost:8001/db/mydb-23423`

This would free up the rest of the URL namespace for other things. Maybe we could have an option to serve static content from a known folder e.g.

datasette serve mydb.db --path-prefix=db --root-content=~/my-project/static

Now a hit to `http://localhost:8001/news/` serves content from `~/my-project/static/news/index.html`

This would make it trivial to package up entire HTML/CSS/JS apps with one or more underlying SQLite databases. Running without `--cors` would be fine here because any JS apps would be hosted on the same origin.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/91/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273895344,MDU6SXNzdWUyNzM4OTUzNDQ=,92,Add --license --license_url --source --source_url --title arguments to datasette publish,9599,closed,0,,,0,2017-11-14T18:27:07Z,2017-11-15T05:04:41Z,2017-11-15T05:04:41Z,OWNER,,"I keep on using the `echo '{""source"": ""...""}' | datasette publish now --metadata=-` pattern, which suggests it makes sense for us to support these as optional arguments.

https://gist.github.com/simonw/9f8bf23b37a42d7628c4dcc4bba10253",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/92/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273998513,MDU6SXNzdWUyNzM5OTg1MTM=,95,Allow shorter time limits to be set using a ?_sql_time_limit_ms=20 query string limit,9599,closed,0,,,1,2017-11-15T01:02:16Z,2017-11-15T02:56:13Z,2017-11-15T02:56:13Z,OWNER,,This cannot be greater than the configured time limit.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/95/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274001453,MDU6SXNzdWUyNzQwMDE0NTM=,96,UI for editing named parameters,9599,closed,0,,,3,2017-11-15T01:19:21Z,2017-11-16T01:45:51Z,2017-11-16T01:33:38Z,OWNER,,"On any page displaying a custom query that includes named parameters, we should show HTML form fields for editing those parameters.

Eg the breed parameter on https://australian-dogs.now.sh/australian-dogs-3ba9628?sql=select+name%2C+count%28*%29+as+n+from+%28%0D%0A%0D%0Aselect+upper%28%22Animal+name%22%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2013%5D+where+Breed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28Animal_Name%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2014%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all+%0D%0A%0D%0Aselect+upper%28Animal_Name%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2015%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22AnimalName%22%29+as+name+from+%5BCity-of-Port-Adelaide-Enfield-Dog_Registrations_2016%5D+where+AnimalBreed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22Animal+Name%22%29+as+name+from+%5BMitcham-dog-registrations-2015%5D+where+Breed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22DOG_NAME%22%29+as+name+from+%5Bburnside-dog-registrations-2015%5D+where+DOG_BREED+like+%3Abreed%0D%0A%0D%0Aunion+all+%0D%0A%0D%0Aselect+upper%28%22Animal_Name%22%29+as+name+from+%5Bcity-of-playford-2015-dog-registration%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22Animal+Name%22%29+as+name+from+%5Bcity-of-prospect-dog-registration-details-2016%5D+where%22Breed+Description%22+like+%3Abreed%0D%0A%0D%0A%29+group+by+name+order+by+n+desc%3B&breed=pug",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/96/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274022950,MDU6SXNzdWUyNzQwMjI5NTA=,97,Link to JSON for the list of tables ,9599,closed,0,,,3,2017-11-15T03:29:05Z,2018-05-29T18:51:35Z,2018-05-28T20:57:21Z,OWNER,,https://twitter.com/yschimke/status/930606210855854080,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/97/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274023417,MDU6SXNzdWUyNzQwMjM0MTc=,98,Default to 127.0.0.1 not 0.0.0.0,9599,closed,0,,,0,2017-11-15T03:31:55Z,2017-11-15T05:08:54Z,2017-11-15T05:08:54Z,OWNER,,https://twitter.com/yschimke/status/930606210855854080,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/98/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274023625,MDU6SXNzdWUyNzQwMjM2MjU=,99,Start a change log,9599,closed,0,,,0,2017-11-15T03:33:21Z,2017-11-16T15:12:46Z,2017-11-16T15:12:45Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/99/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274264175,MDU6SXNzdWUyNzQyNjQxNzU=,102,datasette publish elasticbeanstalk,9599,closed,0,,,1,2017-11-15T18:48:31Z,2021-01-04T20:13:20Z,2021-01-04T20:13:19Z,OWNER,,"It looks like Elastic Beanstalk is the most convenient way to deploy a docker container to AWS without first deploying a cluster.

https://aws.amazon.com/blogs/devops/dockerizing-a-python-web-app/ looks helpful. We would need to automate the deployment with Boto: http://boto3.readthedocs.io/en/latest/reference/services/elasticbeanstalk.html",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/102/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274265878,MDU6SXNzdWUyNzQyNjU4Nzg=,103,datasette publish appengine,9599,closed,0,,,1,2017-11-15T18:54:18Z,2021-01-04T20:05:14Z,2021-01-04T20:05:14Z,OWNER,,"Similar approach to Heroku, discussed in #90

Looks like this could be pretty easy: https://cloud.google.com/appengine/docs/flexible/python/quickstart",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/103/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274314940,MDU6SXNzdWUyNzQzMTQ5NDA=,105,Consider data-package as a format for metadata,9599,closed,0,,,4,2017-11-15T21:43:34Z,2017-11-20T19:50:53Z,2017-11-20T19:50:53Z,OWNER,,http://frictionlessdata.io/specs/data-package/,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/105/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274315193,MDU6SXNzdWUyNzQzMTUxOTM=,106,Document how pagination works,9599,closed,0,,,1,2017-11-15T21:44:32Z,2019-06-24T06:42:33Z,2019-06-24T06:42:33Z,OWNER,,I made a start at that in this comment: https://news.ycombinator.com/item?id=15691926,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/106/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274374317,MDU6SXNzdWUyNzQzNzQzMTc=,108,"Include version in python code, output in template",9599,closed,0,,,0,2017-11-16T02:32:40Z,2017-11-16T15:30:04Z,2017-11-16T15:30:04Z,OWNER,,It would be useful if I could tell which version of datasette was running on a site. Embed version number and include it in maybe a tooltip on the “powered by datasette” link,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/108/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274378301,MDU6SXNzdWUyNzQzNzgzMDE=,109,Set up readthedocs,9599,closed,0,,,1,2017-11-16T02:58:01Z,2017-11-16T16:53:26Z,2017-11-16T16:13:56Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/109/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274578142,MDU6SXNzdWUyNzQ1NzgxNDI=,110,Add --load-extension option to datasette for loading extra SQLite extensions,9599,closed,0,,,2,2017-11-16T16:26:19Z,2017-11-16T18:38:30Z,2017-11-16T16:58:50Z,OWNER,,"This would allow users with extra SQLite extensions installed (like spatialite) to load them at runtime.

Inspired by this comment: https://github.com/simonw/datasette/issues/46#issuecomment-344810525",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/110/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274615452,MDU6SXNzdWUyNzQ2MTU0NTI=,111,Add “updated” to metadata,9599,open,0,,,12,2017-11-16T18:22:20Z,2021-09-21T22:48:27Z,,OWNER,,"To give an indication as to when the data was last updated.

This should be a field in the metadata that is then shown on the index page and in the footer, if it is set.

Also support setting it using an option to “datasette publish” and “datasette package” - which can either be a string or can be the magic string “today” to set it to today’s date:

datasette publish file.db --updated=today",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/111/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

274617240,MDU6SXNzdWUyNzQ2MTcyNDA=,112,Allow --load-extension to be set via environment variables,9599,closed,0,,,1,2017-11-16T18:28:31Z,2017-11-17T14:19:23Z,2017-11-17T14:17:27Z,OWNER,,"This will make it easier to package up datasette in a Docker container with a bunch of pre-compiled extensions without the user having to remember to include all of the options every time.

Click has a mechanism for this: http://click.pocoo.org/5/options/#multiple-values-from-environment-values",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/112/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/83/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273660425,MDU6SXNzdWUyNzM2NjA0MjU=,84,datasette package --metadata does not work with a relative path,9599,closed,0,,,0,2017-11-14T04:00:50Z,2017-11-15T05:18:35Z,2017-11-15T05:18:35Z,OWNER,," $ datasette package ~/parlgov-db/parlgov.db --metadata=~/parlgov-db/parlgov.json

Usage: datasette package [OPTIONS] FILES...

Error: Invalid value for ""-m"" / ""--metadata"": Could not open file: ~/parlgov-db/parlgov.json: No such file or directory

simonw-07542:~ simonw$ cd ~/parlgov-db/

simonw-07542:parlgov-db simonw$ datasette package ~/parlgov-db/parlgov.db --metadata=parlgov.json

Sending build context to Docker daemon 4.46MB

Step 1/7 : FROM python:3

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/84/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273709194,MDU6SXNzdWUyNzM3MDkxOTQ=,87,Configure Travis to release new tags to PyPI,9599,closed,0,,,1,2017-11-14T08:44:08Z,2018-07-10T17:49:13Z,2018-07-10T17:49:12Z,OWNER,,https://docs.travis-ci.com/user/deployment/pypi/,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/87/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273846123,MDU6SXNzdWUyNzM4NDYxMjM=,90,datasette publish heroku,9599,closed,0,,,8,2017-11-14T16:01:39Z,2017-12-10T03:06:34Z,2017-12-10T03:05:48Z,OWNER,,"Heroku has Docker container support so this should not be too hard:

https://devcenter.heroku.com/articles/container-registry-and-runtime

See also #59

This should work exactly like the existing “datasette publish now....” command except it would be “datasette publish heroku...”",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/90/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273878873,MDU6SXNzdWUyNzM4Nzg4NzM=,91,"Option to serve databases from a different prefix, serve regular content elsewhere",9599,closed,0,,,1,2017-11-14T17:32:46Z,2017-12-10T03:07:58Z,2017-12-10T03:07:53Z,OWNER,,"It would be useful if the databases themselves could be served from a prefix e.g.

datasette serve mydb.db --path-prefix=db

Now my database is at `http://localhost:8001/db/mydb-23423`

This would free up the rest of the URL namespace for other things. Maybe we could have an option to serve static content from a known folder e.g.

datasette serve mydb.db --path-prefix=db --root-content=~/my-project/static

Now a hit to `http://localhost:8001/news/` serves content from `~/my-project/static/news/index.html`

This would make it trivial to package up entire HTML/CSS/JS apps with one or more underlying SQLite databases. Running without `--cors` would be fine here because any JS apps would be hosted on the same origin.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/91/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273895344,MDU6SXNzdWUyNzM4OTUzNDQ=,92,Add --license --license_url --source --source_url --title arguments to datasette publish,9599,closed,0,,,0,2017-11-14T18:27:07Z,2017-11-15T05:04:41Z,2017-11-15T05:04:41Z,OWNER,,"I keep on using the `echo '{""source"": ""...""}' | datasette publish now --metadata=-` pattern, which suggests it makes sense for us to support these as optional arguments.

https://gist.github.com/simonw/9f8bf23b37a42d7628c4dcc4bba10253",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/92/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

273998513,MDU6SXNzdWUyNzM5OTg1MTM=,95,Allow shorter time limits to be set using a ?_sql_time_limit_ms=20 query string limit,9599,closed,0,,,1,2017-11-15T01:02:16Z,2017-11-15T02:56:13Z,2017-11-15T02:56:13Z,OWNER,,This cannot be greater than the configured time limit.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/95/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274001453,MDU6SXNzdWUyNzQwMDE0NTM=,96,UI for editing named parameters,9599,closed,0,,,3,2017-11-15T01:19:21Z,2017-11-16T01:45:51Z,2017-11-16T01:33:38Z,OWNER,,"On any page displaying a custom query that includes named parameters, we should show HTML form fields for editing those parameters.

Eg the breed parameter on https://australian-dogs.now.sh/australian-dogs-3ba9628?sql=select+name%2C+count%28*%29+as+n+from+%28%0D%0A%0D%0Aselect+upper%28%22Animal+name%22%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2013%5D+where+Breed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28Animal_Name%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2014%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all+%0D%0A%0D%0Aselect+upper%28Animal_Name%29+as+name+from+%5BAdelaide-City-Council-dog-registrations-2015%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22AnimalName%22%29+as+name+from+%5BCity-of-Port-Adelaide-Enfield-Dog_Registrations_2016%5D+where+AnimalBreed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22Animal+Name%22%29+as+name+from+%5BMitcham-dog-registrations-2015%5D+where+Breed+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22DOG_NAME%22%29+as+name+from+%5Bburnside-dog-registrations-2015%5D+where+DOG_BREED+like+%3Abreed%0D%0A%0D%0Aunion+all+%0D%0A%0D%0Aselect+upper%28%22Animal_Name%22%29+as+name+from+%5Bcity-of-playford-2015-dog-registration%5D+where+Breed_Description+like+%3Abreed%0D%0A%0D%0Aunion+all%0D%0A%0D%0Aselect+upper%28%22Animal+Name%22%29+as+name+from+%5Bcity-of-prospect-dog-registration-details-2016%5D+where%22Breed+Description%22+like+%3Abreed%0D%0A%0D%0A%29+group+by+name+order+by+n+desc%3B&breed=pug",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/96/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274022950,MDU6SXNzdWUyNzQwMjI5NTA=,97,Link to JSON for the list of tables ,9599,closed,0,,,3,2017-11-15T03:29:05Z,2018-05-29T18:51:35Z,2018-05-28T20:57:21Z,OWNER,,https://twitter.com/yschimke/status/930606210855854080,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/97/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274023417,MDU6SXNzdWUyNzQwMjM0MTc=,98,Default to 127.0.0.1 not 0.0.0.0,9599,closed,0,,,0,2017-11-15T03:31:55Z,2017-11-15T05:08:54Z,2017-11-15T05:08:54Z,OWNER,,https://twitter.com/yschimke/status/930606210855854080,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/98/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274023625,MDU6SXNzdWUyNzQwMjM2MjU=,99,Start a change log,9599,closed,0,,,0,2017-11-15T03:33:21Z,2017-11-16T15:12:46Z,2017-11-16T15:12:45Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/99/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274264175,MDU6SXNzdWUyNzQyNjQxNzU=,102,datasette publish elasticbeanstalk,9599,closed,0,,,1,2017-11-15T18:48:31Z,2021-01-04T20:13:20Z,2021-01-04T20:13:19Z,OWNER,,"It looks like Elastic Beanstalk is the most convenient way to deploy a docker container to AWS without first deploying a cluster.

https://aws.amazon.com/blogs/devops/dockerizing-a-python-web-app/ looks helpful. We would need to automate the deployment with Boto: http://boto3.readthedocs.io/en/latest/reference/services/elasticbeanstalk.html",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/102/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274265878,MDU6SXNzdWUyNzQyNjU4Nzg=,103,datasette publish appengine,9599,closed,0,,,1,2017-11-15T18:54:18Z,2021-01-04T20:05:14Z,2021-01-04T20:05:14Z,OWNER,,"Similar approach to Heroku, discussed in #90

Looks like this could be pretty easy: https://cloud.google.com/appengine/docs/flexible/python/quickstart",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/103/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274314940,MDU6SXNzdWUyNzQzMTQ5NDA=,105,Consider data-package as a format for metadata,9599,closed,0,,,4,2017-11-15T21:43:34Z,2017-11-20T19:50:53Z,2017-11-20T19:50:53Z,OWNER,,http://frictionlessdata.io/specs/data-package/,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/105/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274315193,MDU6SXNzdWUyNzQzMTUxOTM=,106,Document how pagination works,9599,closed,0,,,1,2017-11-15T21:44:32Z,2019-06-24T06:42:33Z,2019-06-24T06:42:33Z,OWNER,,I made a start at that in this comment: https://news.ycombinator.com/item?id=15691926,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/106/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274374317,MDU6SXNzdWUyNzQzNzQzMTc=,108,"Include version in python code, output in template",9599,closed,0,,,0,2017-11-16T02:32:40Z,2017-11-16T15:30:04Z,2017-11-16T15:30:04Z,OWNER,,It would be useful if I could tell which version of datasette was running on a site. Embed version number and include it in maybe a tooltip on the “powered by datasette” link,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/108/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274378301,MDU6SXNzdWUyNzQzNzgzMDE=,109,Set up readthedocs,9599,closed,0,,,1,2017-11-16T02:58:01Z,2017-11-16T16:53:26Z,2017-11-16T16:13:56Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/109/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274578142,MDU6SXNzdWUyNzQ1NzgxNDI=,110,Add --load-extension option to datasette for loading extra SQLite extensions,9599,closed,0,,,2,2017-11-16T16:26:19Z,2017-11-16T18:38:30Z,2017-11-16T16:58:50Z,OWNER,,"This would allow users with extra SQLite extensions installed (like spatialite) to load them at runtime.

Inspired by this comment: https://github.com/simonw/datasette/issues/46#issuecomment-344810525",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/110/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274615452,MDU6SXNzdWUyNzQ2MTU0NTI=,111,Add “updated” to metadata,9599,open,0,,,12,2017-11-16T18:22:20Z,2021-09-21T22:48:27Z,,OWNER,,"To give an indication as to when the data was last updated.

This should be a field in the metadata that is then shown on the index page and in the footer, if it is set.

Also support setting it using an option to “datasette publish” and “datasette package” - which can either be a string or can be the magic string “today” to set it to today’s date:

datasette publish file.db --updated=today",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/111/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

274617240,MDU6SXNzdWUyNzQ2MTcyNDA=,112,Allow --load-extension to be set via environment variables,9599,closed,0,,,1,2017-11-16T18:28:31Z,2017-11-17T14:19:23Z,2017-11-17T14:17:27Z,OWNER,,"This will make it easier to package up datasette in a Docker container with a bunch of pre-compiled extensions without the user having to remember to include all of the options every time.

Click has a mechanism for this: http://click.pocoo.org/5/options/#multiple-values-from-environment-values",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/112/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

274884209,MDU6SXNzdWUyNzQ4ODQyMDk=,116,Add documentation section about SQLite extensions,9599,closed,0,,,1,2017-11-17T14:36:30Z,2018-05-28T17:23:42Z,2018-05-28T17:23:41Z,OWNER,,,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/116/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275048699,MDExOlB1bGxSZXF1ZXN0MTUzNDMyMDQ1,118,Foreign key information on row and table pages,9599,closed,0,,,0,2017-11-18T03:13:27Z,2017-11-18T03:15:57Z,2017-11-18T03:15:50Z,OWNER,simonw/datasette/pulls/118,,107914493,pull,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/118/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",0,

275082158,MDU6SXNzdWUyNzUwODIxNTg=,119,"Build an ""export this data to google sheets"" plugin",9599,closed,0,,,1,2017-11-18T14:14:51Z,2020-06-04T18:46:40Z,2020-06-04T18:46:39Z,OWNER,,"Inspired by https://github.com/kren1/tosheets

It should be a plug-in because I'd like to keep all interactions with proprietary / non-open-source software encapsulated in plugins rather than shipped as part of core.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/119/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275087397,MDU6SXNzdWUyNzUwODczOTc=,120,Plugin that adds an authentication layer of some sort,9599,closed,0,,,4,2017-11-18T15:39:13Z,2020-03-16T18:48:06Z,2020-03-16T18:48:06Z,OWNER,,"Would allow people who want to host private data to do so.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/120/reactions"", ""total_count"": 7, ""+1"": 5, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 2, ""rocket"": 0, ""eyes"": 0}",,completed

275089535,MDU6SXNzdWUyNzUwODk1MzU=,121,?_json=foo&_json=bar query string argument ,9599,closed,0,,,4,2017-11-18T16:09:55Z,2018-05-31T13:48:12Z,2018-05-28T18:11:51Z,OWNER,,"Causes the specified columns in the output to be treated as JSON, and returned deserialized in the .json or .jsono response.
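
A minimal Python sketch of that behaviour, assuming each row is already a dictionary keyed by column name. The helper name `decode_json_columns` is hypothetical and not part of datasette; values that are not valid JSON are left untouched, which is one possible policy among several:

```python
import json


def decode_json_columns(row, json_columns):
    # Return a copy of the row with the named columns parsed as JSON.
    decoded = dict(row)
    for column in json_columns:
        value = decoded.get(column)
        if isinstance(value, str):
            try:
                decoded[column] = json.loads(value)
            except ValueError:
                # Not valid JSON: keep the original string as-is.
                pass
    return decoded


row = {'id': 1, 'tags': '[1, 2, 3]'}
print(decode_json_columns(row, ['tags']))
```

Here `json_columns` would hold the values collected from the repeated `_json` arguments, e.g. `['foo', 'bar']`.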

This will be particularly powerful when combined with https://sqlite.org/json1.html",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/121/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275092453,MDU6SXNzdWUyNzUwOTI0NTM=,122,"Redesign JSON output, ditch jsono, offer variants controlled by parameter instead",9599,closed,0,,,5,2017-11-18T16:52:28Z,2018-04-08T14:54:09Z,2018-04-08T14:54:09Z,OWNER,,"I want to support three variants for the rows output:

* a list of lists, with a columns key saying what they are

* a list of dictionaries

* a single dictionary where the keys are the primary keys of the rows and the values are the row dictionaries themselves

I also want to make the various bits of metadata opt-in - so you don't get the SQL statement unless you ask for it.

These output options should be controlled by query string arguments.
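
A rough Python sketch of the three row variants, assuming the columns and rows have already been fetched. The function name `shape_rows` and the option values `lists`, `dicts` and `object` are placeholders for illustration, not the final query string design:

```python
def shape_rows(columns, rows, shape, pk=None):
    # Variant 1: a list of lists, with a columns key saying what they are.
    if shape == 'lists':
        return {'columns': list(columns), 'rows': [list(r) for r in rows]}
    dicts = [dict(zip(columns, r)) for r in rows]
    # Variant 2: a list of dictionaries.
    if shape == 'dicts':
        return dicts
    # Variant 3: a single dictionary keyed by primary key.
    if shape == 'object':
        if pk is None:
            raise ValueError('a primary key column is required for this shape')
        return {d[pk]: d for d in dicts}
    raise ValueError('unknown shape: ' + repr(shape))


columns = ['id', 'name']
rows = [(1, 'Cleo'), (2, 'Pancakes')]
print(shape_rows(columns, rows, 'object', pk='id'))
```

A compound primary key would need a different key format for the third variant; that is left open here.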

I will set the .jsono URL to redirect to .json with the corresponding options. ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/122/reactions"", ""total_count"": 1, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 1, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275125561,MDU6SXNzdWUyNzUxMjU1NjE=,123,Datasette serve should accept paths/URLs to CSVs and other file formats,9599,open,0,,,9,2017-11-19T02:05:48Z,2021-07-19T00:04:32Z,,OWNER,,"This would remove the csvs-to-sqlite step which I end up using for almost everything.

I'm hesitant to introduce pandas as a required dependency though since it requires compiling numpy. Could build it so this option is only available if you have pandas installed.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/123/reactions"", ""total_count"": 1, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 1, ""rocket"": 0, ""eyes"": 0}",,

275125805,MDU6SXNzdWUyNzUxMjU4MDU=,124,Option to open readonly but not immutable,9599,closed,0,,,5,2017-11-19T02:11:03Z,2019-06-24T06:43:46Z,2019-06-24T06:43:46Z,OWNER,,Immutable assumes no other process can modify the file. An option to open readonly instead would enable other processes to update the file in place.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/124/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275135393,MDU6SXNzdWUyNzUxMzUzOTM=,125,Plot rows on a map with Leaflet and Leaflet.markercluster,9599,closed,0,,,2,2017-11-19T06:05:05Z,2018-04-26T15:14:31Z,2018-04-26T15:14:31Z,OWNER,,"https://github.com/Leaflet/Leaflet.markercluster would allow us to paginate-load in an enormous set of rows with latitude/longitude points, e.g. https://australian-dunnies.now.sh/

Here's a demo of it loading 50,000 markers: https://leaflet.github.io/Leaflet.markercluster/example/marker-clustering-realworld.50000.html - and it looks like it would be easy to support progress bars if we were iteratively loading 1,000 markers at a time using datasette pagination.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/125/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275159710,MDU6SXNzdWUyNzUxNTk3MTA=,128,"Every visualization should have an ""embed"" button",9599,open,0,,,0,2017-11-19T13:38:13Z,2019-05-13T18:33:51Z,,OWNER,,"At least for the first round of visualizations, any time you construct one using the UI the result should include an ""embed this"" button that returns source code to copy and paste

These examples should use unpkg.com (or similar) URLs with SRI hashes, e.g. https://www.srihash.org - and should load data from the datasette JSON API.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/128/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

275164558,MDU6SXNzdWUyNzUxNjQ1NTg=,129,Hide FTS-created tables by default on the database index page,9599,closed,0,,,2,2017-11-19T14:50:42Z,2017-11-22T20:22:02Z,2017-11-22T20:19:04Z,OWNER,,"SQLite databases that use FTS include a number of automatically generated tables, e.g.:

https://sf-trees-search.now.sh/sf-trees-search-a899b92

Of these, only the `Street_Tree_List` table is actually relevant to the user.

We can detect which tables are FTS tables by first finding the virtual tables:

sqlite> .headers on

sqlite> select * from sqlite_master where rootpage = 0;

type|name|tbl_name|rootpage|sql

table|Search|Search|0|CREATE VIRTUAL TABLE ""Street_Tree_List_fts"" USING FTS4 (""qAddress"", ""qCaretaker"", ""qSpecies"")

Then parsing the above to figure out which ones are USING FTS? - then assume that any table which starts with that `Street_Tree_List_fts` prefix was created to support search:

sqlite> select * from sqlite_master where type='table' and tbl_name like 'Street_Tree_List_fts%';

type|name|tbl_name|rootpage|sql

table|Search_content|Search_content|10355|CREATE TABLE 'Street_Tree_List_fts_content'(docid INTEGER PRIMARY KEY, 'c0qAddress', 'c1qCaretaker', 'c2qSpecies')

table|Search_segments|Search_segments|10356|CREATE TABLE 'Street_Tree_List_fts_segments'(blockid INTEGER PRIMARY KEY, block BLOB)

table|Search_segdir|Search_segdir|10357|CREATE TABLE 'Street_Tree_List_fts_segdir'(level INTEGER,idx INTEGER,start_block INTEGER,leaves_end_block INTEGER,end_block INTEGER,root BLOB,PRIMARY KEY(level, idx))

table|Search_docsize|Search_docsize|10359|CREATE TABLE 'Street_Tree_List_fts_docsize'(docid INTEGER PRIMARY KEY, size BLOB)

table|Search_stat|Search_stat|10360|CREATE TABLE 'Street_Tree_List_fts_stat'(id INTEGER PRIMARY KEY, value BLOB)
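
A minimal Python sketch of the detection described above, assuming the rows have already been read from `sqlite_master` as `(name, rootpage, sql)` tuples. The helper name `hidden_fts_tables` and the regular expression are illustrative assumptions rather than datasette code, and the sketch includes the virtual table itself alongside its supporting tables, which is a choice rather than something settled here:

```python
import re

# Matches the USING FTS3 / FTS4 / FTS5 clause of a CREATE VIRTUAL TABLE statement.
FTS_RE = re.compile(r'USING\s+FTS\d', re.IGNORECASE)


def hidden_fts_tables(rows):
    # rows: iterable of (name, rootpage, sql) tuples from sqlite_master.
    rows = list(rows)
    # Step 1: virtual tables have rootpage 0; keep the ones that use FTS.
    fts_names = [
        name for name, rootpage, sql in rows
        if rootpage == 0 and sql and FTS_RE.search(sql)
    ]
    # Step 2: any table whose name starts with an FTS table name is
    # assumed to exist only to support search.
    hidden = set()
    for name, _rootpage, _sql in rows:
        if any(name.startswith(fts) for fts in fts_names):
            hidden.add(name)
    return sorted(hidden)
```

With the standard library `sqlite3` module the rows could come from `conn.execute('select name, rootpage, sql from sqlite_master').fetchall()`.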

We won't hide these completely - instead, we'll default the database index view to not showing them with a message that says ""5 hidden tables"" and support ?_hidden=1 to display them.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/129/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275166078,MDU6SXNzdWUyNzUxNjYwNzg=,130,"Rename ""datasette build"" to ""datasette inspect""",9599,closed,0,,,0,2017-11-19T15:08:02Z,2017-12-07T16:57:58Z,2017-12-07T16:57:58Z,OWNER,,"This command introspects the databases and writes out a JSON summary.

I think I'd like to use `datasette build` for something more interesting, potentially duplicating functionality from https://github.com/simonw/csvs-to-sqlite

Since the internal method that does this is called `ds.inspect()` that seems like a reasonable replacement name for the command.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/130/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275166669,MDU6SXNzdWUyNzUxNjY2Njk=,131,UI support for running FTS searches,9599,closed,0,,,3,2017-11-19T15:16:20Z,2017-11-19T17:18:05Z,2017-11-19T17:00:12Z,OWNER,,"Here's an example query that searches all FTS indexed columns in a table: https://sf-trees-search.now.sh/sf-trees-search-a899b92?sql=select+*+from+Street_Tree_List+where+rowid+in+%28select+rowid+from+Street_Tree_List_fts+where+Street_Tree_List_fts+match+%27grove+london+dpw%27%29%0D%0A

And here's a query that searches a specific column: https://sf-trees-search.now.sh/sf-trees-search-a899b92?sql=select+*+from+Street_Tree_List+where+rowid+in+%28select+rowid+from+Street_Tree_List_fts+where+qSpecies+match+%27london%27%29%0D%0A

If we detect that a table has FTS enabled (which we can do by looking for it as a content table reference in another FTS table's create definition) we should add a search box to the table page which constructs this query - maybe using `?_search=XXX` in the query string?

To support search against specified columns, we can do `?_search__qSpecies=London` - not necessary, see comment below.

- [x] Detect if a table has a FTS index defined against it as a content= parameter

- [x] Decide what to do if there is more than one FTS index (maybe just pick the first one?)

- [x] Add the `?_search=` query string argument

- [x] Add the UI",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/131/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275228834,MDU6SXNzdWUyNzUyMjg4MzQ=,136,"""Reformat SQL"" button next to SQL editor textarea",9599,closed,0,,,0,2017-11-20T03:42:19Z,2019-10-14T03:46:13Z,2019-10-14T03:46:13Z,OWNER,,"Can use this:

https://github.com/zeroturnaround/sql-formatter

https://zeroturnaround.github.io/sql-formatter/

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/136/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275415799,MDU6SXNzdWUyNzU0MTU3OTk=,137,Ability to combine multiple SQL queries on a single graph,9599,open,0,,,1,2017-11-20T16:26:57Z,2019-05-13T18:33:51Z,,OWNER,,This would make visualizations significantly more powerful. The interesting challenge will be around the URL design. It would be useful to be able to combine either multiple explicit SQL queries or multiple queries based on the filter string parameters passed to one or more table views.,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/137/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

275476839,MDU6SXNzdWUyNzU0NzY4Mzk=,138,"Per-database and per-table metadata, probably using data-package",9599,closed,0,,,1,2017-11-20T19:50:10Z,2017-12-10T03:08:36Z,2017-12-10T03:08:26Z,OWNER,,"Ability to annotate databases and tables with extra metadata describing their purpose, providing source and licensing information and describing individual columns.

http://frictionlessdata.io/specs/data-package/ looks like a great format for this, see #105 ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/138/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275493851,MDU6SXNzdWUyNzU0OTM4NTE=,139,Build a visualization plugin for Vega,9599,closed,0,,,2,2017-11-20T20:47:41Z,2018-07-10T17:48:18Z,2018-07-10T17:48:18Z,OWNER,,"https://vega.github.io/vega/examples/population-pyramid/ for example looks pretty easy to hook up to Datasette.

Depends on #14 ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/139/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275755475,MDU6SXNzdWUyNzU3NTU0NzU=,140,Heatmap visualization plugin,9599,open,0,,,2,2017-11-21T15:34:23Z,2019-05-13T18:33:51Z,,OWNER,,Could use https://github.com/scottbedard/svelte-heatmap,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/140/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

275917760,MDU6SXNzdWUyNzU5MTc3NjA=,142,Show extra instructions with the interrupted,9599,closed,0,,,3,2017-11-22T01:44:29Z,2018-05-28T21:25:06Z,2018-05-28T21:24:35Z,OWNER,,"When you are using Datasette locally for ad-hoc analysis it can be frustrating to hit the time limit.

If you start it with the correct command line arguments you can disable that time limit. So how about we tell you how to do that anytime you hit the interrupted error provided you are accessing it from localhost.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/142/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

275939188,MDU6SXNzdWUyNzU5MzkxODg=,143,"Mechanism for ""suggested visualizations""",9599,closed,0,,,1,2017-11-22T04:10:25Z,2018-07-10T17:48:34Z,2018-07-10T17:48:34Z,OWNER,," Each visualization should have a way of deciding if it might be appropriate for the current view of data.

We can then offer a ""suggested visualizations"" prompt which shows previews.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/143/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

276192732,MDExOlB1bGxSZXF1ZXN0MTU0MjQ2ODE2,145,Fix pytest version conflict,9599,closed,0,,,0,2017-11-22T20:15:34Z,2017-11-22T20:17:54Z,2017-11-22T20:17:52Z,OWNER,simonw/datasette/pulls/145,"https://travis-ci.org/simonw/datasette/jobs/305929426

pkg_resources.VersionConflict: (pytest 3.2.1 (/home/travis/virtualenv/python3.5.3/lib/python3.5/site-packages),

Requirement.parse('pytest==3.2.3'))",107914493,pull,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/145/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",0,

276455748,MDU6SXNzdWUyNzY0NTU3NDg=,146,datasette publish gcloud,9599,closed,0,,,2,2017-11-23T18:55:03Z,2019-06-24T06:48:20Z,2019-06-24T06:48:20Z,OWNER,,"See also #103

It looks like you can start a Google Cloud VM with a ""docker container"" option - and the Google Cloud Registry is easy to push containers to. So it would be feasible to have `datasette publish gcloud ...` automatically build a container, push it to GCR, then start a new VM instance with it:

https://cloud.google.com/container-registry/docs/pushing-and-pulling

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/146/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

276704327,MDU6SXNzdWUyNzY3MDQzMjc=,150,_group_count= feature improvements,9599,closed,0,,,3,2017-11-24T22:06:18Z,2018-05-28T16:41:28Z,2018-05-28T16:41:28Z,OWNER,,"- [ ] The ""apply filters"" form should keep you on the _group_count= page

- [ ] Foreign key references should be expanded

- [ ] Page title should reflect the view you are on",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/150/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

276718605,MDU6SXNzdWUyNzY3MTg2MDU=,151,Set up a pattern portfolio,9599,closed,0,,,2,2017-11-25T02:09:49Z,2020-07-02T00:13:24Z,2020-05-03T03:13:16Z,OWNER,,"https://www.slideshare.net/nataliedowne/practical-maintainable-css/75

This will be a single page that demonstrates all of the different CSS styles and classes available to Datasette.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/151/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

279547886,MDU6SXNzdWUyNzk1NDc4ODY=,163,Document the querystring argument for setting a different time limit,9599,closed,0,,,2,2017-12-05T22:05:08Z,2021-03-23T02:44:33Z,2017-12-06T15:06:57Z,OWNER,,"http://datasette.readthedocs.io/en/latest/sql_queries.html#query-limits

Need to explain why this is useful too.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/163/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

280662866,MDExOlB1bGxSZXF1ZXN0MTU3MzY1ODEx,168,Upgrade to Sanic 0.7.0,9599,closed,0,,,1,2017-12-09T01:25:08Z,2017-12-09T03:00:34Z,2017-12-09T03:00:34Z,OWNER,simonw/datasette/pulls/168,,107914493,pull,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/168/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",0,

280896290,MDU6SXNzdWUyODA4OTYyOTA=,172,Show size of .db file next to download link,9599,closed,0,,,1,2017-12-11T05:12:46Z,2019-02-06T05:09:06Z,2019-02-06T05:00:36Z,OWNER,,"Size in bytes should be calculated by datasette inspect.

Template should display it in KB or MB or GB",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/172/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

281197863,MDU6SXNzdWUyODExOTc4NjM=,174,License/Source in footer should inherit from top level,9599,closed,0,,,1,2017-12-11T23:01:35Z,2018-08-11T17:46:51Z,2018-08-11T17:46:51Z,OWNER,,"The footer on https://vice-police-shootings.now.sh/vice-bc7c892/ViceNews_FullOISData does not show license and source information... but that Datasette has that information, it's just defined at the top level: https://vice-police-shootings.now.sh/

The footer for a row/table page should fall back on information for the database, and if there is none for the database it should fall back on the top-level metadata instead.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/174/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

288438570,MDU6SXNzdWUyODg0Mzg1NzA=,179,More metadata options for template authors ,9599,open,0,,,2,2018-01-14T20:51:04Z,2019-05-13T18:33:33Z,,OWNER,,See this thread on Twitter: https://twitter.com/simonw/status/952637152797458432,107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/179/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

309047460,MDU6SXNzdWUzMDkwNDc0NjA=,188,Ability to bundle metadata and templates inside the SQLite file,9599,open,0,,,4,2018-03-27T16:42:07Z,2020-12-04T17:18:34Z,,OWNER,,"One of the nicest qualities of SQLite as a data format is that you get a single file which you can then back up or share with other people.

Datasette breaks this a little once you start including custom metadata.json or template files and CSS.

It would be cool if there was an optional mechanism for baking that extra configuration into the SQLite file itself. That way entire datasette mini-applications (including canned queries and custom HTML and CSS) could be constructed as single .db files.

Since datasette configuration is all file-based, one way to achieve that would be to support a ""datasette_files"" table which, if present, is used to search for file contents by path.
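
A rough sketch of what such a lookup could look like. The schema (`path` and `contents` columns) and the function name `read_bundled_file` are assumptions made for illustration; nothing here is an existing Datasette API.

```python
import sqlite3


def read_bundled_file(conn, path):
    # Assumed schema: datasette_files(path text primary key, contents blob)
    try:
        row = conn.execute(
            'select contents from datasette_files where path = ?', (path,)
        ).fetchone()
    except sqlite3.OperationalError:
        # Raised when the table is missing, so the caller can fall back
        # to looking for the file on disk.
        return None
    return row[0] if row else None
```

Returning `None` both when the table is absent and when the path is not found keeps the feature optional: a database without the table behaves exactly as it does today.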

This is in line with the philosophy described by https://www.sqlite.org/appfileformat.html

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/188/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

309471814,MDU6SXNzdWUzMDk0NzE4MTQ=,189,Ability to sort (and paginate) by column,9599,closed,0,9599,,31,2018-03-28T18:04:51Z,2018-04-15T18:54:22Z,2018-04-09T05:16:02Z,OWNER,,"As requested in https://github.com/simonw/datasette/issues/185#issuecomment-376614973

I've previously avoided this for performance reasons: sort-by-column on a column without an index is likely to perform badly for hundreds of thousands of rows.

That's not a good enough reason to avoid the feature entirely though. A few options:

* Allow sort-by-column by default, give users the option to disable it for specific tables/columns

* Disallow sort-by-column by default, give users option (probably in `metadata.json`) to enable it for specific tables/columns

* Automatically detect if a column either has an index on it OR a table has fewer than X rows in it

We already have the mechanism in place to cut off SQL queries that take more than X seconds, so if someone DOES try to sort by a column that's too expensive it won't actually hurt anything - but it would be nice to not show people a ""sort"" option which is guaranteed to throw a timeout error.

The vast majority of datasette usage that I've seen so far is on smaller datasets where the performance penalties of sort-by-column are extremely unlikely to show up.
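
The third option above (only offer sorting when the column is indexed or the table is small) could be checked roughly like this. It is a sketch under stated assumptions: the helper name `column_is_cheap_to_sort` and the `max_rows` threshold are hypothetical, and `table` is assumed to be a trusted table name.

```python
import sqlite3


def column_is_cheap_to_sort(conn, table, column, max_rows=1000):
    # Hypothetical helper; table is assumed to be a trusted table name.
    for index in conn.execute('PRAGMA index_list([{}])'.format(table)).fetchall():
        index_name = index[1]
        for seqno, _cid, name in conn.execute(
            'PRAGMA index_info([{}])'.format(index_name)
        ).fetchall():
            # An index only helps ORDER BY if the column is its first key.
            if seqno == 0 and name == column:
                return True
    # Count at most max_rows rows so the check itself stays cheap.
    count = conn.execute(
        'select count(*) from (select 1 from [{}] limit ?)'.format(table),
        (max_rows,),
    ).fetchone()[0]
    return count < max_rows
```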

----

Still left to do:

- [x] UI that shows which sort order is currently being applied (in HTML and in JSON)

- [x] UI for applying a sort order (with rel=nofollow to avoid Google crawling it)

- [x] Sort column names should be escaped correctly in generated SQL

- [x] Validation that the selected sort order is a valid column

- [x] Throw error if user attempts to apply _sort AND _sort_desc at the same time

- [x] Ability to disable sorting (or sort only for specific columns) in metadata.json

- [x] Fix ""201 rows where sorted by sortable_with_nulls "" bug

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/189/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

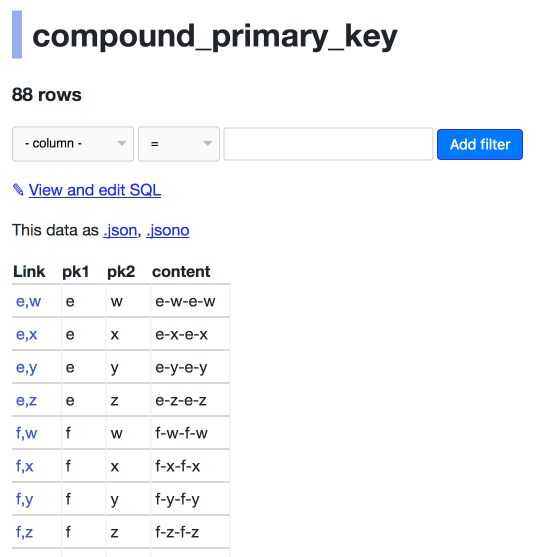

309558826,MDU6SXNzdWUzMDk1NTg4MjY=,190,Keyset pagination doesn't work correctly for compound primary keys,9599,closed,0,,,7,2018-03-28T22:45:06Z,2018-03-30T06:31:15Z,2018-03-30T06:26:28Z,OWNER,,"Consider https://datasette-issue-190-compound-pks.now.sh/compound-pks-9aafe8f/compound_primary_key

The next= link is to `d,v`:

https://datasette-issue-190-compound-pks.now.sh/compound-pks-9aafe8f/compound_primary_key?_next=d%2Cv

But that page starts with:

The next key in the sequence should be `d,w`. Also we should return the full a-z of the ones that start with the letter e - in this example we only return `e-w`, `e-x`, `e-y` and `e-z`",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/190/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed