id,node_id,number,title,user,state,locked,assignee,milestone,comments,created_at,updated_at,closed_at,author_association,pull_request,body,repo,type,active_lock_reason,performed_via_github_app,reactions,draft,state_reason

999902754,I_kwDOBm6k_c47mU4i,1473,base logo link visits `undefined` rather than href url,192568,open,0,,,2,2021-09-18T04:17:04Z,2021-09-19T00:45:32Z,,CONTRIBUTOR,,"I have two connected sites:

http://www.SaferOrToxic.org

(a Hugo website)

and:

http://disinfectants.SaferOrToxic.org/disinfectants/listN

(a datasette table page)

The latter is linked as ""The List"" in the former's menu.

(I'd love a prettier URL, but that's what I've got.)

On:

http://disinfectants.SaferOrToxic.org/disinfectants/listN

... all the other menu links should point back to:

https://www.SaferOrToxic.org

And they do!

But the logo, for some reason--though it has an href pointing to:

https://www.SaferOrToxic.org

Keeps going to this instead:

https://disinfectants.saferortoxic.org/disinfectants/undefined

What is causing that? How can I fix it?

In #1284 back in March, I was doing battle with the index.html template, in a still unresolved issue. (I wanted only a single table page at the root.)

But I thought, well, if I can't resolve that, at least I could just point the main website to the datasette page (""The List,"") and then have the List point back to the home website.

The menu hrefs to https://www.SaferOrToxic.org work just fine, exactly as they should, from the datasette page. Even the Home link works properly.

But the logo link keeps rewriting to: https://disinfectants.saferortoxic.org/disinfectants/undefined

This is the HTML:

```

![]() ```

Is this somehow related to cloudflare?

Or something in the datasette code?

I'm starting to think it's a cloudflare issue.

```

Is this somehow related to cloudflare?

Or something in the datasette code?

I'm starting to think it's a cloudflare issue.

![]() Can I at least rule out it being a datasette issue?

My repository is here:

https://github.com/mroswell/list-N

(BTW, I couldn't figure out how to reference a local image, either, on the datasette side, which is why I'm using the image from the www home page.)

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1473/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1006781949,I_kwDOBm6k_c48AkX9,1478,Documentation Request: Feature alternative ID instead of default ID,192568,open,0,,,0,2021-09-24T19:56:13Z,2021-09-25T16:18:54Z,,CONTRIBUTOR,,"My data already has an ID that comes from a federal agency.

Would love to have documentation on how to modify the template to:

- Remove the generated ID from the table

- Link the federal ID to the detail page

- and to ensure that the JSON file uses that as the ID. I'd be happy to include the database ID in the export, but not as a key.

I don't want to remove the ID from the database, though, because my experience with the federal agency is that data often has anomalies. I don't want all hell to break loose if they end up applying the same ID to multiple rows (which they haven't done yet). I just don't want it to display in the table or the data exports.

Perhaps this isn't a template issue, maybe more of a db manipulation...

Margie",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1478/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1023243105,I_kwDOBm6k_c48_XNh,1486,pipx installation instructions for plugins don't reference pipx inject,41546558,closed,0,,,0,2021-10-12T00:43:42Z,2021-10-13T21:09:11Z,2021-10-13T21:09:11Z,CONTRIBUTOR,,"The datasette [installation instructions](https://github.com/simonw/datasette/blob/main/docs/installation.rst) discuss how to install with pipx, how to upgrade with pipx, and how to upgrade plugins with pipx but do not mention how to install a plugin with pipx. You discussed this on your [blog](https://til.simonwillison.net/python/installing-upgrading-plugins-with-pipx) but looks like this didn't make it in when you updated the docs for pipx (#756).

I'll submit a PR shortly to fix this.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1486/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1015646369,I_kwDOBm6k_c48iYih,1480,Exceeding Cloud Run memory limits when deploying a 4.8G database,110420,open,0,,,9,2021-10-04T21:20:24Z,2022-10-07T04:39:10Z,,CONTRIBUTOR,,"When I try to deploy a 4.8G SQLite database to Google Cloud Run, I get this error message:

> Memory limit of 8192M exceeded with 8826M used. Consider increasing the memory limit, see https://cloud.google.com/run/docs/configuring/memory-limits

Unfortunately, the maximum amount of memory that can be allocated to an instance is 8192M.

Naively profiling the memory usage of running Datasette with this database locally on my MacBook shows the following memory usage (using Activity Monitor) when I just start up Datasette locally:

- Real Memory Size: 70.6 MB

- Virtual Memory Size: 4.51 GB

- Shared Memory Size: 2.5 MB

- Private Memory Size: 57.4 MB

I'm trying to understand if there's a query or other operation that gets run during container deployment that causes memory use to be so large and if this can be avoided somehow.

This is somewhat related to #1082, but on a different platform, so I decided to open a new issue.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1480/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1059549523,I_kwDOBm6k_c4_J3FT,1526,"Add to vercel.json, rather than overwriting it.",192568,closed,0,,,2,2021-11-22T00:47:12Z,2021-11-22T04:49:45Z,2021-11-22T04:13:47Z,CONTRIBUTOR,,"I'd like to be able to add to vercel.json. But Datasette overwrites whatever I put in that file. I originally reported this here:

https://github.com/simonw/datasette-publish-vercel/issues/51

In that case, I wanted to do a rewrite... and now I need to do 301 redirects (because we had to rename our site).

Can this be addressed?

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1526/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1060631257,I_kwDOBm6k_c4_N_LZ,1528,"Add new `""sql_file""` key to Canned Queries in metadata?",15178711,open,0,,,3,2021-11-22T21:58:01Z,2022-06-10T03:23:08Z,,CONTRIBUTOR,,"Currently for canned queries, you have to inline SQL in your `metadata.yaml` like so:

```yaml

databases:

fixtures:

queries:

neighborhood_search:

sql: |-

select neighborhood, facet_cities.name, state

from facetable

join facet_cities on facetable.city_id = facet_cities.id

where neighborhood like '%' || :text || '%'

order by neighborhood

title: Search neighborhoods

```

This works fine, but for a few reasons, I usually have my canned queries already written in separate `.sql` files. I'd like to instead re-use those instead of re-writing it.

So, I'd like to see a new `""sql_file""` key that works like so:

`metadata.yaml`:

```yaml

databases:

fixtures:

queries:

neighborhood_search:

sql_file: neighborhood_search.sql

title: Search neighborhoods

```

`neighborhood_search.sql`:

```sql

select neighborhood, facet_cities.name, state

from facetable

join facet_cities on facetable.city_id = facet_cities.id

where neighborhood like '%' || :text || '%'

order by neighborhood

```

Both of these would work in the exact same way, where Datasette would instead open + include `neighborhood_search.sql` on startup.

A few reasons why I'd like to keep my canned queries SQL separate from metadata.yaml:

- Keeping SQL in standalone SQL files means syntax highlighting and other text editor integrations in my code

- Multiline strings in yaml, while functional, are a tad cumbersome and are hard to edit

- Works well with other tools (can pipe `.sql` files into the `sqlite3` CLI, or use with other SQLite clients easier)

- Typically my canned queries are quite long compared to everything else in my metadata.yaml, so I'd love to separate it where possible

Let me know if this is a feature you'd like to see, I can try to send up a PR if this sounds right!",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1528/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1994857251,I_kwDOBm6k_c525xsj,2208,No suggested facets when a column named 'value' is included,198537,open,0,,,1,2023-11-15T14:11:17Z,2023-11-15T14:18:59Z,,CONTRIBUTOR,,"When a column named 'value' is included there are no suggested facets is shown as the query uses an alias of 'value'.

https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/datasette/facets.py#L168-L174

Currently the following is shown (from https://latest.datasette.io/fixtures/facetable)

When I add a column named 'value' only the JSON facets are processed.

I think that not using aliases could be a solution (except if someone wants to use a column named `count(*)` though this seems to be unlikely). I'll open a PR with that.

There is also a TODO with a similar question in the same file. I have not looked into that yet.

https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/datasette/facets.py#L512",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/2208/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

2028698018,I_kwDOBm6k_c5463mi,2213,feature request: gzip compression of database downloads,536941,open,0,,,1,2023-12-06T14:35:03Z,2023-12-06T15:05:46Z,,CONTRIBUTOR,,"At the bottom of database pages, datasette gives users the opportunity to download the underlying sqlite database. It would be great if that could be served gzip compressed.

this is similar to #1213, but for me, i don't need datasette to compress html and json because my CDN layer does it for me, however, cloudflare at least, will not compress a mimetype of ""application""

(see list of mimetype: https://developers.cloudflare.com/speed/optimization/content/brotli/content-compression/)",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/2213/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1089529555,I_kwDOBm6k_c5A8ObT,1581,"when hashed urls are turned on, the _memory db has improperly long-lived cache expiry",536941,closed,0,,,1,2021-12-28T00:05:48Z,2022-03-24T04:08:18Z,2022-03-24T04:08:18Z,CONTRIBUTOR,,"if hashed_urls are on, then a -000 suffix is added to the `_memory` database, and the cache settings are set just as if it was a normal hashed database.

in particular, this header is set:

`cache-control: max-age=31536000`

this is not appropriate because the `_memory-000` database isn't really hashed based on the contents of the databases (see #1561).

Either the cache-control header should be changed, or the _memory db should have a hash suffix that does depend on the contents of the databases.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1581/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1076388044,I_kwDOBm6k_c5AKGDM,1547,Writable canned queries fail to load custom templates,127565,closed,0,,7571612,6,2021-12-10T03:31:48Z,2022-01-13T22:27:59Z,2021-12-19T21:12:00Z,CONTRIBUTOR,,"I've created a canned query with `""write"": true` set. I've also created a custom template for it, but the template doesn't seem to be found. If I look in the HTML I see (`stock_exchange` is the db name):

``

My non-writeable canned queries pick up custom templates as expected, and if I look at their HTML I see the canned query name added to the templates considered (the canned query here is `date_search`):

``

So it seems like the writeable canned query is behaving differently for some reason. Is it an authentication thing? I'm using the built in `--root` authentication.

Thanks!

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1547/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1077620955,I_kwDOBm6k_c5AOzDb,1549,Redesign CSV export to improve usability,536941,open,0,,3268330,5,2021-12-11T19:02:12Z,2022-04-04T11:17:13Z,,CONTRIBUTOR,,"*Original title: Set content type for CSV so that browsers will attempt to download instead opening in the browser*

Right now, if the user clicks on the CSV related to a

Can I at least rule out it being a datasette issue?

My repository is here:

https://github.com/mroswell/list-N

(BTW, I couldn't figure out how to reference a local image, either, on the datasette side, which is why I'm using the image from the www home page.)

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1473/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1006781949,I_kwDOBm6k_c48AkX9,1478,Documentation Request: Feature alternative ID instead of default ID,192568,open,0,,,0,2021-09-24T19:56:13Z,2021-09-25T16:18:54Z,,CONTRIBUTOR,,"My data already has an ID that comes from a federal agency.

Would love to have documentation on how to modify the template to:

- Remove the generated ID from the table

- Link the federal ID to the detail page

- and to ensure that the JSON file uses that as the ID. I'd be happy to include the database ID in the export, but not as a key.

I don't want to remove the ID from the database, though, because my experience with the federal agency is that data often has anomalies. I don't want all hell to break loose if they end up applying the same ID to multiple rows (which they haven't done yet). I just don't want it to display in the table or the data exports.

Perhaps this isn't a template issue, maybe more of a db manipulation...

Margie",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1478/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1023243105,I_kwDOBm6k_c48_XNh,1486,pipx installation instructions for plugins don't reference pipx inject,41546558,closed,0,,,0,2021-10-12T00:43:42Z,2021-10-13T21:09:11Z,2021-10-13T21:09:11Z,CONTRIBUTOR,,"The datasette [installation instructions](https://github.com/simonw/datasette/blob/main/docs/installation.rst) discuss how to install with pipx, how to upgrade with pipx, and how to upgrade plugins with pipx but do not mention how to install a plugin with pipx. You discussed this on your [blog](https://til.simonwillison.net/python/installing-upgrading-plugins-with-pipx) but looks like this didn't make it in when you updated the docs for pipx (#756).

I'll submit a PR shortly to fix this.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1486/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1015646369,I_kwDOBm6k_c48iYih,1480,Exceeding Cloud Run memory limits when deploying a 4.8G database,110420,open,0,,,9,2021-10-04T21:20:24Z,2022-10-07T04:39:10Z,,CONTRIBUTOR,,"When I try to deploy a 4.8G SQLite database to Google Cloud Run, I get this error message:

> Memory limit of 8192M exceeded with 8826M used. Consider increasing the memory limit, see https://cloud.google.com/run/docs/configuring/memory-limits

Unfortunately, the maximum amount of memory that can be allocated to an instance is 8192M.

Naively profiling the memory usage of running Datasette with this database locally on my MacBook shows the following memory usage (using Activity Monitor) when I just start up Datasette locally:

- Real Memory Size: 70.6 MB

- Virtual Memory Size: 4.51 GB

- Shared Memory Size: 2.5 MB

- Private Memory Size: 57.4 MB

I'm trying to understand if there's a query or other operation that gets run during container deployment that causes memory use to be so large and if this can be avoided somehow.

This is somewhat related to #1082, but on a different platform, so I decided to open a new issue.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1480/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1059549523,I_kwDOBm6k_c4_J3FT,1526,"Add to vercel.json, rather than overwriting it.",192568,closed,0,,,2,2021-11-22T00:47:12Z,2021-11-22T04:49:45Z,2021-11-22T04:13:47Z,CONTRIBUTOR,,"I'd like to be able to add to vercel.json. But Datasette overwrites whatever I put in that file. I originally reported this here:

https://github.com/simonw/datasette-publish-vercel/issues/51

In that case, I wanted to do a rewrite... and now I need to do 301 redirects (because we had to rename our site).

Can this be addressed?

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1526/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1060631257,I_kwDOBm6k_c4_N_LZ,1528,"Add new `""sql_file""` key to Canned Queries in metadata?",15178711,open,0,,,3,2021-11-22T21:58:01Z,2022-06-10T03:23:08Z,,CONTRIBUTOR,,"Currently for canned queries, you have to inline SQL in your `metadata.yaml` like so:

```yaml

databases:

fixtures:

queries:

neighborhood_search:

sql: |-

select neighborhood, facet_cities.name, state

from facetable

join facet_cities on facetable.city_id = facet_cities.id

where neighborhood like '%' || :text || '%'

order by neighborhood

title: Search neighborhoods

```

This works fine, but for a few reasons, I usually have my canned queries already written in separate `.sql` files. I'd like to instead re-use those instead of re-writing it.

So, I'd like to see a new `""sql_file""` key that works like so:

`metadata.yaml`:

```yaml

databases:

fixtures:

queries:

neighborhood_search:

sql_file: neighborhood_search.sql

title: Search neighborhoods

```

`neighborhood_search.sql`:

```sql

select neighborhood, facet_cities.name, state

from facetable

join facet_cities on facetable.city_id = facet_cities.id

where neighborhood like '%' || :text || '%'

order by neighborhood

```

Both of these would work in the exact same way, where Datasette would instead open + include `neighborhood_search.sql` on startup.

A few reasons why I'd like to keep my canned queries SQL separate from metadata.yaml:

- Keeping SQL in standalone SQL files means syntax highlighting and other text editor integrations in my code

- Multiline strings in yaml, while functional, are a tad cumbersome and are hard to edit

- Works well with other tools (can pipe `.sql` files into the `sqlite3` CLI, or use with other SQLite clients easier)

- Typically my canned queries are quite long compared to everything else in my metadata.yaml, so I'd love to separate it where possible

Let me know if this is a feature you'd like to see, I can try to send up a PR if this sounds right!",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1528/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1994857251,I_kwDOBm6k_c525xsj,2208,No suggested facets when a column named 'value' is included,198537,open,0,,,1,2023-11-15T14:11:17Z,2023-11-15T14:18:59Z,,CONTRIBUTOR,,"When a column named 'value' is included there are no suggested facets is shown as the query uses an alias of 'value'.

https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/datasette/facets.py#L168-L174

Currently the following is shown (from https://latest.datasette.io/fixtures/facetable)

When I add a column named 'value' only the JSON facets are processed.

I think that not using aliases could be a solution (except if someone wants to use a column named `count(*)` though this seems to be unlikely). I'll open a PR with that.

There is also a TODO with a similar question in the same file. I have not looked into that yet.

https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/datasette/facets.py#L512",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/2208/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

2028698018,I_kwDOBm6k_c5463mi,2213,feature request: gzip compression of database downloads,536941,open,0,,,1,2023-12-06T14:35:03Z,2023-12-06T15:05:46Z,,CONTRIBUTOR,,"At the bottom of database pages, datasette gives users the opportunity to download the underlying sqlite database. It would be great if that could be served gzip compressed.

this is similar to #1213, but for me, i don't need datasette to compress html and json because my CDN layer does it for me, however, cloudflare at least, will not compress a mimetype of ""application""

(see list of mimetype: https://developers.cloudflare.com/speed/optimization/content/brotli/content-compression/)",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/2213/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1089529555,I_kwDOBm6k_c5A8ObT,1581,"when hashed urls are turned on, the _memory db has improperly long-lived cache expiry",536941,closed,0,,,1,2021-12-28T00:05:48Z,2022-03-24T04:08:18Z,2022-03-24T04:08:18Z,CONTRIBUTOR,,"if hashed_urls are on, then a -000 suffix is added to the `_memory` database, and the cache settings are set just as if it was a normal hashed database.

in particular, this header is set:

`cache-control: max-age=31536000`

this is not appropriate because the `_memory-000` database isn't really hashed based on the contents of the databases (see #1561).

Either the cache-control header should be changed, or the _memory db should have a hash suffix that does depend on the contents of the databases.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1581/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1076388044,I_kwDOBm6k_c5AKGDM,1547,Writable canned queries fail to load custom templates,127565,closed,0,,7571612,6,2021-12-10T03:31:48Z,2022-01-13T22:27:59Z,2021-12-19T21:12:00Z,CONTRIBUTOR,,"I've created a canned query with `""write"": true` set. I've also created a custom template for it, but the template doesn't seem to be found. If I look in the HTML I see (`stock_exchange` is the db name):

``

My non-writeable canned queries pick up custom templates as expected, and if I look at their HTML I see the canned query name added to the templates considered (the canned query here is `date_search`):

``

So it seems like the writeable canned query is behaving differently for some reason. Is it an authentication thing? I'm using the built in `--root` authentication.

Thanks!

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1547/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1077620955,I_kwDOBm6k_c5AOzDb,1549,Redesign CSV export to improve usability,536941,open,0,,3268330,5,2021-12-11T19:02:12Z,2022-04-04T11:17:13Z,,CONTRIBUTOR,,"*Original title: Set content type for CSV so that browsers will attempt to download instead opening in the browser*

Right now, if the user clicks on the CSV related to a table or a query, the response header for the content type is

""content-type: text/plain; charset=utf-8""

Most browsers will try to open a file with this content-type in the browser.

This is not what most people want to do, and lots of folks don't know that if they want to download the CSV and open it in the a spreadsheet program they next need to save the page through their browser.

It would be great if the response header could be something like

```

'Content-type: text/csv');

'Content-disposition: attachment;filename=MyVerySpecial.csv');

```

which would lead browsers to open a download dialog.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1549/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1078702875,I_kwDOBm6k_c5AS7Mb,1552,Allow to set `facets_array` in metadata (like current `facets`),3556,closed,0,,7571612,9,2021-12-13T16:00:44Z,2022-01-13T22:26:15Z,2021-12-16T18:47:48Z,CONTRIBUTOR,,"For now, you can set a `facets` value (array) in your metadata file but I couldn't find a way to set a `facets_array` in order to provide default facets for arrays (like tags). My use-case is to access to [that kind of view](https://latest.datasette.io/fixtures/facetable?_facet_array=tags) by default without URL's parameters as with other default facets.

_I'm new to datasette, and I'm willing to help with a PR if that is not already implemented and I missed it!_",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1552/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1079111498,I_kwDOBm6k_c5AUe9K,1553,if csv export is truncated in non streaming mode set informative response header,536941,open,0,,,3,2021-12-13T22:50:44Z,2021-12-16T19:17:28Z,,CONTRIBUTOR,,"streaming mode is currently not enabled for custom queries, so the queries will be truncated to max row limit.

it would be great if a response is truncated that an header signalling that was set in the header.

i need to write some pagination code for getting full results back for a custom query and it would make the code much better if i could reliably known when there is nothing more to limit/offset ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1553/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1082765654,I_kwDOBm6k_c5AibFW,1561,"add hash id to ""_memory"" url if hashed url mode is turned on and crossdb is also turned on",536941,closed,0,,,3,2021-12-17T00:45:12Z,2022-03-19T04:45:40Z,2022-03-19T04:45:40Z,CONTRIBUTOR,,"If hashed_url mode is turned on and crossdb is also turned on, then queries to _memory should have a hash_id.

One way that it could work is to have the _memory hash be a hash of all the individual databases.

Otherwise, crossdb queries can get quit out of data if using aggressive caching.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1561/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1105916061,I_kwDOBm6k_c5B6vCd,1601,Add KNN and data_licenses to hidden tables list,25778,closed,0,,,5,2022-01-17T14:19:57Z,2022-01-20T21:29:44Z,2022-01-20T04:38:54Z,CONTRIBUTOR,,"They're generated by Spatialite and not very interesting in most cases.

![]() ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1601/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1090810196,I_kwDOBm6k_c5BBHFU,1583,consider adding deletion step of cloudbuild artifacts to gcloud publish,536941,open,0,,,1,2021-12-30T00:33:23Z,2021-12-30T00:34:16Z,,CONTRIBUTOR,,"right now, as part of the the publish process images and other artifacts are stored to gcloud's cloud storage before being deployed to cloudrun.

after successfully deploying, it would be nice if the the script deleted these artifacts. otherwise, if you have regularly scheduled build process, you can end up paying to store lots of out of date artifacts.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1583/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1096536240,I_kwDOBm6k_c5BW9Cw,1586,run analyze on all databases as part of start up or publishing,536941,open,0,,,1,2022-01-07T17:52:34Z,2022-02-02T07:13:37Z,,CONTRIBUTOR,,"Running `analyze;` lets sqlite's query planner make *much* better use of any indices.

It might be nice if the analyze was run as part of the start up of ""serve"" or ""publish"".",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1586/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1108671952,I_kwDOBm6k_c5CFP3Q,1605,Scripted exports,25778,open,0,,,10,2022-01-19T23:45:55Z,2022-11-30T15:06:38Z,,CONTRIBUTOR,,"Posting this while I'm thinking about it: I mentioned at the end of [this thread](https://twitter.com/eyeseast/status/1483893011658551299) that I'm usually doing `datasette --get` to export canned queries.

I used to use a tool called [datafreeze](https://github.com/pudo/datafreeze) to do scripted exports, but that project looks dead now. The ergonomics of it are pretty nice, though, and the `Freezefile.yml` structure is actually not too far from Datasette's canned queries.

This is related to the idea for `datasette query` (#1356) but I think it's a distinct feature. It's most likely a plugin, but I want to raise it here because it's probably something other people have thought about.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1605/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1114147905,I_kwDOBm6k_c5CaIxB,1612,Move canned queries closer to the SQL input area,639012,closed,0,,3268330,5,2022-01-25T17:06:39Z,2022-03-19T04:04:49Z,2022-01-25T18:34:21Z,CONTRIBUTOR,,"*Original title: Consider placing example queries above the sql input?*

Hi! Have been enjoying deploying ad hoc datasettes for collaborators to pick over!

I keep finding myself manually ""fixing"" the database.html template so that the ""example queries"" (canned queries) appear directly *over* the sql box? So they are sorta more a suggestion for collaborators who aren't inclined to write their own queries?

My sense is any time I go to the trouble of writing canned queries my users should see 'em?

(( I have also considered a client-side reactive-ish option where selecting a query just places the raw SQL in the box and doesn't execute it, but this seems to end up being an inconvenience, rather than a teaching tool. ))

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1612/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1163369515,I_kwDOBm6k_c5FV5wr,1655,query result page is using 400mb of browser memory 40x size of html page and 400x size of csv data,536941,open,0,,,8,2022-03-09T00:56:40Z,2023-10-17T21:53:17Z,,CONTRIBUTOR,,"[this page](https://labordata.bunkum.us/opdr-8335ea3?sql=with+most_recent_lu+as+%28%0D%0A++select%0D%0A++++*%0D%0A++from%0D%0A++++%28%0D%0A++++++select%0D%0A++++++++*%0D%0A++++++from%0D%0A++++++++lm_data%0D%0A++++++order+by%0D%0A++++++++f_num%2C%0D%0A++++++++receive_date+desc%0D%0A++++%29+t%0D%0A++group+by%0D%0A++++f_num%0D%0A%29%0D%0Aselect%0D%0A++aff_abbr+%7C%7C+coalesce%28%27+local+%27+%7C%7C+desig_num%2C+%27+%27+%7C%7C+unit_name%29+as+abbr_local_name%2C%0D%0A++coalesce%28%0D%0A++++regexp_match%28%27%28.*%3F%29%28%2C%3F+AFL-CIO%24%29%27%2C+union_name%29%2C%0D%0A++++regexp_match%28%27%28.*%3F%29%28+IND%24%29%27%2C+union_name%29%2C%0D%0A++++union_name%0D%0A++%29+%7C%7C+coalesce%28%27+local+%27+%7C%7C+desig_num%2C+%27+%27+%7C%7C+unit_name%29+as+full_local_name%2C%0D%0A++*%0D%0Afrom%0D%0A++most_recent_lu%0D%0Awhere+%28desig_num+IS+NOT+NULL+OR+unit_name+IS+NOT+NULL%29+AND+desig_name+%21%3D+%27HQ%27%0D%0Alimit%0D%0A++5000+offset+0)

is using about 400 mb in firefox 97 on mac os x. if you download the html for the page, it's about 11mb and if you get the csv for the data its about 1mb.

it's using over a 1G on chrome 99.

i found this because, i was trying to figure out why editing the SQL was getting very slow.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1655/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

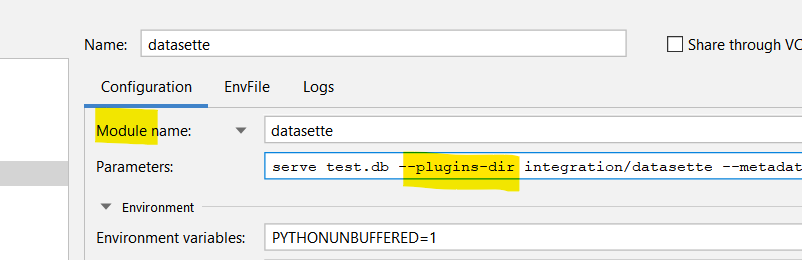

1181432624,I_kwDOBm6k_c5Gazsw,1688,[plugins][documentation] Is it possible to serve per-plugin static folders when writing one-off (single file) plugins?,9020979,closed,0,,,3,2022-03-26T01:17:44Z,2022-03-27T01:01:14Z,2022-03-26T21:34:47Z,CONTRIBUTOR,,"I'm trying to make a small plugin that depends on static assets, by following the guide [here](https://docs.datasette.io/en/stable/writing_plugins.html#writing-one-off-plugins). I made a `plugins/` directory with `datasette_nteract_data_explorer.py`.

I am trying to follow the example of `datasette_vega`, and serving static assets. I created a `statics/` directory within `plugins/` to serve my JS and CSS.

https://github.com/simonw/datasette-vega/blob/00de059ab1ef77394ba9f9547abfacf966c479c4/datasette_vega/__init__.py#L13

Unfortunately, datasette doesn't seem to be able to find my assets.

Input:

```bash

datasette ~/Library/Safari/History.db --plugins-dir=plugins/

```

Output:

I suspect this issue might go away if I move away from ""one-off"" plugin mode, but it's been a while since I created a new python package so I'm not sure how much work there is to go between ""one off"" and ""packaged for PyPI"". I'd like to try to avoid needing to repackage a new `tar.gz` file and or reinstall my library repeatedly when developing new python code.

1. Is there a way to serve a static assets when using the `plugins/` directory method instead of installing plugins as a new python package?

2. If not, is there a way I can work on developing a plugin without creating and repackaging tar.gz files after every change, or is that the recommended path?

Thanks for your help!

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1688/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1182227211,I_kwDOBm6k_c5Gd1sL,1692,[plugins][feature request]: Support additional script tag attributes when loading custom JS,9020979,open,0,,,2,2022-03-27T01:16:03Z,2022-03-30T06:14:51Z,,CONTRIBUTOR,,"## Motivation

- The build system for my new [plugin](https://github.com/hydrosquall/datasette-nteract-data-explorer) has two output JS files, one for browsers that support ES modules, one for browsers that don't. At present, I'm only passing one of them into Datasette.

- I'd like to specify the non-es-module script as a fallback for older browsers. I don't want to load it by default, because browsers will only need one, and it's heavy, so for now I'm only supporting modern browsers.

To be able to support legacy browsers without slowing down users with modern browsers, I would like to be able to set additional HTML attributes on the tag fallback script, `nomodule` and `defer`. My injected scripts should look something like this:

```html

```

## Proposal

To achieve this, I propose additional optional properties to the API accepted by the `extra_js_urls` hook and custom JS field the `metadata.json` [described here](https://docs.datasette.io/en/stable/custom_templates.html#custom-css-and-javascript).

Under this API, I'd write something like this to get the above HTML rendered in Datasette.

```json

{

""extra_js_urls"": [

{

""url"": ""/index.my-es-module-bundle.js"",

""module"": true,

},

{

""url"": ""/index.my-legacy-fallback-bundle.js"",

""nomodule"": """",

""defer"": true

}

]

}

```

## Resources

- [MDN on the script tag](https://developer.mozilla.org/en-US/docs/Web/HTML/Element/script)

- There may be other properties that could be added that are potentially valuable, like `async` or `referrerpolicy`, but I don't have an immediate need for those.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1692/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1193090967,I_kwDOBm6k_c5HHR-X,1699,Proposal: datasette query,25778,open,0,,,6,2022-04-05T12:36:43Z,2022-04-11T01:32:12Z,,CONTRIBUTOR,,"I started sketching out a plugin to add a `datasette query` subcommand to export data from the command line. This is based on discussions in #1356 and #1605. Before I get too far down this rabbit hole, I figure it's worth getting some feedback here (unless this should happen in `Discussions`). Here's what I'm thinking:

At its most basic, it will write the results of a query to STDOUT.

```sh

datasette query -d data.db 'select * from data' > results.json

```

This isn't much improvement over using [sqlite-utils](https://github.com/simonw/sqlite-utils). To make better use of datasette and its ecosystem, run `datasette query` using a canned query defined in a `metadata.yml` file.

For example, using the metadata file from [alltheplaces-datasette](https://github.com/eyeseast/alltheplaces-datasette/blob/main/metadata.yml):

```sh

cd alltheplaces-datasette

datasette query -d alltheplaces.db -m metadata.yml count_by_spider

```

That query would be good to get as CSV, and we can auto-discover metadata and databases in the current directory:

```sh

cd alltheplaces-datasette

datasette query count_by_spider -f csv

```

In this case, `count_by_spider` is a canned query defined on the `alltheplaces` database. If the same query is defined on multiple databases or its otherwise unclear which database `query` should use, pass the `-d` or `--database` option.

If a query takes parameters, I can pass them in at runtime, using the `--param` or `-p` option:

```sh

datasette query -d data.db -p value something 'select * from neighborhoods where some_column = :value'

```

I'm very interested in feedback on this, including whether it should be a plugin or in Datasette core. (I don't have a strong opinion about this, but I'm prototyping it as a plugin to start.)",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1699/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1198822563,I_kwDOBm6k_c5HdJSj,1706,"[feature] immutable mode for a directory, not just individual sqlite file",9020979,open,0,,,4,2022-04-10T00:50:57Z,2022-12-09T19:11:40Z,,CONTRIBUTOR,,"## Motivation

- I have a directory of sqlite databases

- I'd like to use immutable mode when opening them for better performance [docs](https://docs.datasette.io/en/0.54/performance.html#immutable-mode)

- Currently using this flag throws the following error

IsADirectoryError: [Errno 21] Is a directory: '/name-of-directory'

## Proposal

Immutable flag works for both single files and directories

datasette -i /folder-of-sqlite-files",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1706/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1200224939,I_kwDOBm6k_c5Hifqr,1707,[feature] expanded detail page,536941,open,0,,,1,2022-04-11T16:29:17Z,2022-04-11T16:33:00Z,,CONTRIBUTOR,,"Right now, if click on the detail page for a row you get the info for the row and links to related tables:

It would be very cool if there was an option to expand the rows of the related tables from within this detail view.

If you had that then datasette could fulfill a pretty common use case where you want to search for an entity and get a consolidate detail view about what you know about that entity.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1707/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1218133366,I_kwDOBm6k_c5Imz12,1728,Writable canned queries fail with useless non-error against immutable databases,127565,closed,0,,8303187,13,2022-04-28T03:10:34Z,2022-08-14T16:34:40Z,2022-08-14T16:34:40Z,CONTRIBUTOR,,"I've been banging my head against a wall for a while and would appreciate any pointers...

- I have a writeable canned query to update rows in the db.

- I'm using the github-oauth plugin for authentication.

- I have `allow` set on the query to accept my GitHub id and a GH organisation.

- Authentication seems to work as expected both locally and on Cloudrun -- viewing `/-/actor` gives the same result in both environments

- I can access the 'padlocked' canned query in both environments.

Everything seems to be the same, but the canned query works perfectly when run locally, and fails when I try it on Cloudrun. I'm redirected back to the canned query page and the db is not changed. There's nothing in the Cloudstor logs to indicate an error.

Any clues as to where I should be looking for the problem?",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1728/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1292368833,I_kwDOBm6k_c5NB_vB,1764,Keep track of config_dir in directory mode (for plugins),25778,closed,0,,,0,2022-07-03T16:57:49Z,2022-07-18T01:12:45Z,2022-07-18T01:12:45Z,CONTRIBUTOR,,"I started working on using `config_dir` with my [datasette-query-files plugin](https://github.com/eyeseast/datasette-query-files) and realized Datasette doesn't actually hold onto the `config_dir` argument. It gets used in `__init__` but then forgotten. It would be nice to be able to use it in plugins, though.

Here's the reference issue: https://github.com/eyeseast/datasette-query-files/issues/4

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1764/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1339663518,I_kwDOBm6k_c5P2aSe,1784,"Include ""entrypoint"" option on `--load-extension`?",15178711,closed,0,,,2,2022-08-16T00:22:57Z,2022-08-23T18:34:31Z,2022-08-23T18:34:31Z,CONTRIBUTOR,,"## Problem

SQLite extensions have the option to define multiple ""entrypoints"" in each loadable extension. For example, the upcoming version of `sqlite-lines` will have 2 entrypoints: the default `sqlite3_lines_init` (which SQLite will automatically guess for) and `sqlite3_lines_noread_init`. The `sqlite3_lines_noread_init` version omits functions that read from the filesystem, which is necessary for security purposes when running untrusted SQL (which Datasette does).

(Similar multiple entrypoints will also be added for sqlite-http).

The `--load-extension` flag, however, doesn't give the option to specify a different entrypoint, so the default one is always used.

## Proposal

I want there to be a new command line option of the `--load-extension` flag to specify a custom entrypoint like so:

```

datasette my.db \

--load-extension ./lines0 sqlite3_lines0_noread_init

```

Then, under the hood, this line of code:

https://github.com/simonw/datasette/blob/7af67b54b7d9bca43e948510fc62f6db2b748fa8/datasette/app.py#L562

Would look something like this:

```python

conn.execute(""SELECT load_extension(?, ?)"", [extension, entrypoint])

```

One potential problem: For backward compatibility, I'm not sure if Click allows cli flags to have variable number of options (""arity""). So I guess it could also use a `:` delimiter like `--static`:

```

datasette my.db \

--load-extension ./lines0:sqlite3_lines0_noread_init

```

Or maybe even a new flag name?

```

datasette my.db \

--load-extension-entrypoint ./lines0 sqlite3_lines0_noread_init

```

Personally I prefer the `:` option... and maybe even `--load-extension` -> `--load`? Definitely out of scope for this issue tho

```

datasette my.db \

--load./lines0:sqlite3_lines0_noread_init

```",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1784/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1334628400,I_kwDOBm6k_c5PjNAw,1779,google cloudrun updated their limits on maxscale based on memory and cpu count,536941,closed,0,,8303187,13,2022-08-10T13:27:21Z,2022-08-14T19:42:59Z,2022-08-14T17:07:34Z,CONTRIBUTOR,,"if you don't set an explicit limit on container scaling, then [google defaults to 100](https://cloud.google.com/run/docs/configuring/max-instances#limits)

google recently updated the [limits on container scaling](https://cloud.google.com/run/docs/configuring/max-instances#limits), such that if you set up datasette to use more memory or cpu, then you need to set the maxScale argument much smaller than 100.

would be nice if `datasette publish` could do this math for you and set the right maxScale.

[Log of an failing publish run](https://github.com/labordata/warehouse/runs/7764725972?check_suite_focus=true#step:8:332).

```

ERROR: (gcloud.run.deploy) spec.template.spec.containers[0].resources.limits.cpu: Invalid value specified for cpu. For the specified value, maxScale may not exceed 15.

Consider running your workload in a region with greater capacity, decreasing your requested cpu-per-instance, or requesting an increase in quota for this region if you are seeing sustained usage near this limit, see https://cloud.google.com/run/quotas. Your project may gain access to further scaling by adding billing information to your account.

Traceback (most recent call last):

File ""/home/runner/.local/bin/datasette"", line 8, in

sys.exit(cli())

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1128, in __call__

return self.main(*args, **kwargs)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1053, in main

rv = self.invoke(ctx)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1659, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1659, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1395, in invoke

return ctx.invoke(self.callback, **ctx.params)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 754, in invoke

return __callback(*args, **kwargs)

File ""/home/runner/.local/lib/python3.8/site-packages/datasette/publish/cloudrun.py"", line 160, in cloudrun

check_call(

File ""/usr/lib/python3.8/subprocess.py"", line 364, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command 'gcloud run deploy --allow-unauthenticated --platform=managed --image gcr.io/labordata/datasette warehouse --memory 8Gi --cpu 2' returned non-zero exit status 1.

```",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1779/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1377811868,I_kwDOBm6k_c5SH72c,1813,missing next and next_url in JSON responses from an instance deployed on Fly ,883348,closed,0,,,1,2022-09-19T11:32:34Z,2022-09-19T11:34:45Z,2022-09-19T11:34:45Z,CONTRIBUTOR,,"👋 thank you for an incredibly useful project!

I have noticed that my deployed instance on Fly does not include the `next` and `next_url` keys even for a truncated response :

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1601/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1090810196,I_kwDOBm6k_c5BBHFU,1583,consider adding deletion step of cloudbuild artifacts to gcloud publish,536941,open,0,,,1,2021-12-30T00:33:23Z,2021-12-30T00:34:16Z,,CONTRIBUTOR,,"right now, as part of the the publish process images and other artifacts are stored to gcloud's cloud storage before being deployed to cloudrun.

after successfully deploying, it would be nice if the the script deleted these artifacts. otherwise, if you have regularly scheduled build process, you can end up paying to store lots of out of date artifacts.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1583/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1096536240,I_kwDOBm6k_c5BW9Cw,1586,run analyze on all databases as part of start up or publishing,536941,open,0,,,1,2022-01-07T17:52:34Z,2022-02-02T07:13:37Z,,CONTRIBUTOR,,"Running `analyze;` lets sqlite's query planner make *much* better use of any indices.

It might be nice if the analyze was run as part of the start up of ""serve"" or ""publish"".",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1586/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1108671952,I_kwDOBm6k_c5CFP3Q,1605,Scripted exports,25778,open,0,,,10,2022-01-19T23:45:55Z,2022-11-30T15:06:38Z,,CONTRIBUTOR,,"Posting this while I'm thinking about it: I mentioned at the end of [this thread](https://twitter.com/eyeseast/status/1483893011658551299) that I'm usually doing `datasette --get` to export canned queries.

I used to use a tool called [datafreeze](https://github.com/pudo/datafreeze) to do scripted exports, but that project looks dead now. The ergonomics of it are pretty nice, though, and the `Freezefile.yml` structure is actually not too far from Datasette's canned queries.

This is related to the idea for `datasette query` (#1356) but I think it's a distinct feature. It's most likely a plugin, but I want to raise it here because it's probably something other people have thought about.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1605/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1114147905,I_kwDOBm6k_c5CaIxB,1612,Move canned queries closer to the SQL input area,639012,closed,0,,3268330,5,2022-01-25T17:06:39Z,2022-03-19T04:04:49Z,2022-01-25T18:34:21Z,CONTRIBUTOR,,"*Original title: Consider placing example queries above the sql input?*

Hi! Have been enjoying deploying ad hoc datasettes for collaborators to pick over!

I keep finding myself manually ""fixing"" the database.html template so that the ""example queries"" (canned queries) appear directly *over* the sql box? So they are sorta more a suggestion for collaborators who aren't inclined to write their own queries?

My sense is any time I go to the trouble of writing canned queries my users should see 'em?

(( I have also considered a client-side reactive-ish option where selecting a query just places the raw SQL in the box and doesn't execute it, but this seems to end up being an inconvenience, rather than a teaching tool. ))

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1612/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1163369515,I_kwDOBm6k_c5FV5wr,1655,query result page is using 400mb of browser memory 40x size of html page and 400x size of csv data,536941,open,0,,,8,2022-03-09T00:56:40Z,2023-10-17T21:53:17Z,,CONTRIBUTOR,,"[this page](https://labordata.bunkum.us/opdr-8335ea3?sql=with+most_recent_lu+as+%28%0D%0A++select%0D%0A++++*%0D%0A++from%0D%0A++++%28%0D%0A++++++select%0D%0A++++++++*%0D%0A++++++from%0D%0A++++++++lm_data%0D%0A++++++order+by%0D%0A++++++++f_num%2C%0D%0A++++++++receive_date+desc%0D%0A++++%29+t%0D%0A++group+by%0D%0A++++f_num%0D%0A%29%0D%0Aselect%0D%0A++aff_abbr+%7C%7C+coalesce%28%27+local+%27+%7C%7C+desig_num%2C+%27+%27+%7C%7C+unit_name%29+as+abbr_local_name%2C%0D%0A++coalesce%28%0D%0A++++regexp_match%28%27%28.*%3F%29%28%2C%3F+AFL-CIO%24%29%27%2C+union_name%29%2C%0D%0A++++regexp_match%28%27%28.*%3F%29%28+IND%24%29%27%2C+union_name%29%2C%0D%0A++++union_name%0D%0A++%29+%7C%7C+coalesce%28%27+local+%27+%7C%7C+desig_num%2C+%27+%27+%7C%7C+unit_name%29+as+full_local_name%2C%0D%0A++*%0D%0Afrom%0D%0A++most_recent_lu%0D%0Awhere+%28desig_num+IS+NOT+NULL+OR+unit_name+IS+NOT+NULL%29+AND+desig_name+%21%3D+%27HQ%27%0D%0Alimit%0D%0A++5000+offset+0)

is using about 400 mb in firefox 97 on mac os x. if you download the html for the page, it's about 11mb and if you get the csv for the data its about 1mb.

it's using over a 1G on chrome 99.

i found this because, i was trying to figure out why editing the SQL was getting very slow.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1655/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1181432624,I_kwDOBm6k_c5Gazsw,1688,[plugins][documentation] Is it possible to serve per-plugin static folders when writing one-off (single file) plugins?,9020979,closed,0,,,3,2022-03-26T01:17:44Z,2022-03-27T01:01:14Z,2022-03-26T21:34:47Z,CONTRIBUTOR,,"I'm trying to make a small plugin that depends on static assets, by following the guide [here](https://docs.datasette.io/en/stable/writing_plugins.html#writing-one-off-plugins). I made a `plugins/` directory with `datasette_nteract_data_explorer.py`.

I am trying to follow the example of `datasette_vega`, and serving static assets. I created a `statics/` directory within `plugins/` to serve my JS and CSS.

https://github.com/simonw/datasette-vega/blob/00de059ab1ef77394ba9f9547abfacf966c479c4/datasette_vega/__init__.py#L13

Unfortunately, datasette doesn't seem to be able to find my assets.

Input:

```bash

datasette ~/Library/Safari/History.db --plugins-dir=plugins/

```

Output:

I suspect this issue might go away if I move away from ""one-off"" plugin mode, but it's been a while since I created a new python package so I'm not sure how much work there is to go between ""one off"" and ""packaged for PyPI"". I'd like to try to avoid needing to repackage a new `tar.gz` file and or reinstall my library repeatedly when developing new python code.

1. Is there a way to serve a static assets when using the `plugins/` directory method instead of installing plugins as a new python package?

2. If not, is there a way I can work on developing a plugin without creating and repackaging tar.gz files after every change, or is that the recommended path?

Thanks for your help!

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1688/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1182227211,I_kwDOBm6k_c5Gd1sL,1692,[plugins][feature request]: Support additional script tag attributes when loading custom JS,9020979,open,0,,,2,2022-03-27T01:16:03Z,2022-03-30T06:14:51Z,,CONTRIBUTOR,,"## Motivation

- The build system for my new [plugin](https://github.com/hydrosquall/datasette-nteract-data-explorer) has two output JS files, one for browsers that support ES modules, one for browsers that don't. At present, I'm only passing one of them into Datasette.

- I'd like to specify the non-es-module script as a fallback for older browsers. I don't want to load it by default, because browsers will only need one, and it's heavy, so for now I'm only supporting modern browsers.

To be able to support legacy browsers without slowing down users with modern browsers, I would like to be able to set additional HTML attributes on the tag fallback script, `nomodule` and `defer`. My injected scripts should look something like this:

```html

```

## Proposal

To achieve this, I propose additional optional properties to the API accepted by the `extra_js_urls` hook and custom JS field the `metadata.json` [described here](https://docs.datasette.io/en/stable/custom_templates.html#custom-css-and-javascript).

Under this API, I'd write something like this to get the above HTML rendered in Datasette.

```json

{

""extra_js_urls"": [

{

""url"": ""/index.my-es-module-bundle.js"",

""module"": true,

},

{

""url"": ""/index.my-legacy-fallback-bundle.js"",

""nomodule"": """",

""defer"": true

}

]

}

```

## Resources

- [MDN on the script tag](https://developer.mozilla.org/en-US/docs/Web/HTML/Element/script)

- There may be other properties that could be added that are potentially valuable, like `async` or `referrerpolicy`, but I don't have an immediate need for those.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1692/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1193090967,I_kwDOBm6k_c5HHR-X,1699,Proposal: datasette query,25778,open,0,,,6,2022-04-05T12:36:43Z,2022-04-11T01:32:12Z,,CONTRIBUTOR,,"I started sketching out a plugin to add a `datasette query` subcommand to export data from the command line. This is based on discussions in #1356 and #1605. Before I get too far down this rabbit hole, I figure it's worth getting some feedback here (unless this should happen in `Discussions`). Here's what I'm thinking:

At its most basic, it will write the results of a query to STDOUT.

```sh

datasette query -d data.db 'select * from data' > results.json

```

This isn't much improvement over using [sqlite-utils](https://github.com/simonw/sqlite-utils). To make better use of datasette and its ecosystem, run `datasette query` using a canned query defined in a `metadata.yml` file.

For example, using the metadata file from [alltheplaces-datasette](https://github.com/eyeseast/alltheplaces-datasette/blob/main/metadata.yml):

```sh

cd alltheplaces-datasette

datasette query -d alltheplaces.db -m metadata.yml count_by_spider

```

That query would be good to get as CSV, and we can auto-discover metadata and databases in the current directory:

```sh

cd alltheplaces-datasette

datasette query count_by_spider -f csv

```

In this case, `count_by_spider` is a canned query defined on the `alltheplaces` database. If the same query is defined on multiple databases or its otherwise unclear which database `query` should use, pass the `-d` or `--database` option.

If a query takes parameters, I can pass them in at runtime, using the `--param` or `-p` option:

```sh

datasette query -d data.db -p value something 'select * from neighborhoods where some_column = :value'

```

I'm very interested in feedback on this, including whether it should be a plugin or in Datasette core. (I don't have a strong opinion about this, but I'm prototyping it as a plugin to start.)",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1699/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1198822563,I_kwDOBm6k_c5HdJSj,1706,"[feature] immutable mode for a directory, not just individual sqlite file",9020979,open,0,,,4,2022-04-10T00:50:57Z,2022-12-09T19:11:40Z,,CONTRIBUTOR,,"## Motivation

- I have a directory of sqlite databases

- I'd like to use immutable mode when opening them for better performance [docs](https://docs.datasette.io/en/0.54/performance.html#immutable-mode)

- Currently using this flag throws the following error

IsADirectoryError: [Errno 21] Is a directory: '/name-of-directory'

## Proposal

Immutable flag works for both single files and directories

datasette -i /folder-of-sqlite-files",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1706/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1200224939,I_kwDOBm6k_c5Hifqr,1707,[feature] expanded detail page,536941,open,0,,,1,2022-04-11T16:29:17Z,2022-04-11T16:33:00Z,,CONTRIBUTOR,,"Right now, if click on the detail page for a row you get the info for the row and links to related tables:

It would be very cool if there was an option to expand the rows of the related tables from within this detail view.

If you had that then datasette could fulfill a pretty common use case where you want to search for an entity and get a consolidate detail view about what you know about that entity.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1707/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,

1218133366,I_kwDOBm6k_c5Imz12,1728,Writable canned queries fail with useless non-error against immutable databases,127565,closed,0,,8303187,13,2022-04-28T03:10:34Z,2022-08-14T16:34:40Z,2022-08-14T16:34:40Z,CONTRIBUTOR,,"I've been banging my head against a wall for a while and would appreciate any pointers...

- I have a writeable canned query to update rows in the db.

- I'm using the github-oauth plugin for authentication.

- I have `allow` set on the query to accept my GitHub id and a GH organisation.

- Authentication seems to work as expected both locally and on Cloudrun -- viewing `/-/actor` gives the same result in both environments

- I can access the 'padlocked' canned query in both environments.

Everything seems to be the same, but the canned query works perfectly when run locally, and fails when I try it on Cloudrun. I'm redirected back to the canned query page and the db is not changed. There's nothing in the Cloudstor logs to indicate an error.

Any clues as to where I should be looking for the problem?",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1728/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1292368833,I_kwDOBm6k_c5NB_vB,1764,Keep track of config_dir in directory mode (for plugins),25778,closed,0,,,0,2022-07-03T16:57:49Z,2022-07-18T01:12:45Z,2022-07-18T01:12:45Z,CONTRIBUTOR,,"I started working on using `config_dir` with my [datasette-query-files plugin](https://github.com/eyeseast/datasette-query-files) and realized Datasette doesn't actually hold onto the `config_dir` argument. It gets used in `__init__` but then forgotten. It would be nice to be able to use it in plugins, though.

Here's the reference issue: https://github.com/eyeseast/datasette-query-files/issues/4

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1764/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1339663518,I_kwDOBm6k_c5P2aSe,1784,"Include ""entrypoint"" option on `--load-extension`?",15178711,closed,0,,,2,2022-08-16T00:22:57Z,2022-08-23T18:34:31Z,2022-08-23T18:34:31Z,CONTRIBUTOR,,"## Problem

SQLite extensions have the option to define multiple ""entrypoints"" in each loadable extension. For example, the upcoming version of `sqlite-lines` will have 2 entrypoints: the default `sqlite3_lines_init` (which SQLite will automatically guess for) and `sqlite3_lines_noread_init`. The `sqlite3_lines_noread_init` version omits functions that read from the filesystem, which is necessary for security purposes when running untrusted SQL (which Datasette does).

(Similar multiple entrypoints will also be added for sqlite-http).

The `--load-extension` flag, however, doesn't give the option to specify a different entrypoint, so the default one is always used.

## Proposal

I want there to be a new command line option of the `--load-extension` flag to specify a custom entrypoint like so:

```

datasette my.db \

--load-extension ./lines0 sqlite3_lines0_noread_init

```

Then, under the hood, this line of code:

https://github.com/simonw/datasette/blob/7af67b54b7d9bca43e948510fc62f6db2b748fa8/datasette/app.py#L562

Would look something like this:

```python

conn.execute(""SELECT load_extension(?, ?)"", [extension, entrypoint])

```

One potential problem: For backward compatibility, I'm not sure if Click allows cli flags to have variable number of options (""arity""). So I guess it could also use a `:` delimiter like `--static`:

```

datasette my.db \

--load-extension ./lines0:sqlite3_lines0_noread_init

```

Or maybe even a new flag name?

```

datasette my.db \

--load-extension-entrypoint ./lines0 sqlite3_lines0_noread_init

```

Personally I prefer the `:` option... and maybe even `--load-extension` -> `--load`? Definitely out of scope for this issue tho

```

datasette my.db \

--load./lines0:sqlite3_lines0_noread_init

```",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1784/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1334628400,I_kwDOBm6k_c5PjNAw,1779,google cloudrun updated their limits on maxscale based on memory and cpu count,536941,closed,0,,8303187,13,2022-08-10T13:27:21Z,2022-08-14T19:42:59Z,2022-08-14T17:07:34Z,CONTRIBUTOR,,"if you don't set an explicit limit on container scaling, then [google defaults to 100](https://cloud.google.com/run/docs/configuring/max-instances#limits)

google recently updated the [limits on container scaling](https://cloud.google.com/run/docs/configuring/max-instances#limits), such that if you set up datasette to use more memory or cpu, then you need to set the maxScale argument much smaller than 100.

would be nice if `datasette publish` could do this math for you and set the right maxScale.

[Log of an failing publish run](https://github.com/labordata/warehouse/runs/7764725972?check_suite_focus=true#step:8:332).

```

ERROR: (gcloud.run.deploy) spec.template.spec.containers[0].resources.limits.cpu: Invalid value specified for cpu. For the specified value, maxScale may not exceed 15.

Consider running your workload in a region with greater capacity, decreasing your requested cpu-per-instance, or requesting an increase in quota for this region if you are seeing sustained usage near this limit, see https://cloud.google.com/run/quotas. Your project may gain access to further scaling by adding billing information to your account.

Traceback (most recent call last):

File ""/home/runner/.local/bin/datasette"", line 8, in

sys.exit(cli())

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1128, in __call__

return self.main(*args, **kwargs)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1053, in main

rv = self.invoke(ctx)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1659, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1659, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 1395, in invoke

return ctx.invoke(self.callback, **ctx.params)

File ""/home/runner/.local/lib/python3.8/site-packages/click/core.py"", line 754, in invoke

return __callback(*args, **kwargs)

File ""/home/runner/.local/lib/python3.8/site-packages/datasette/publish/cloudrun.py"", line 160, in cloudrun

check_call(

File ""/usr/lib/python3.8/subprocess.py"", line 364, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command 'gcloud run deploy --allow-unauthenticated --platform=managed --image gcr.io/labordata/datasette warehouse --memory 8Gi --cpu 2' returned non-zero exit status 1.

```",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1779/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1377811868,I_kwDOBm6k_c5SH72c,1813,missing next and next_url in JSON responses from an instance deployed on Fly ,883348,closed,0,,,1,2022-09-19T11:32:34Z,2022-09-19T11:34:45Z,2022-09-19T11:34:45Z,CONTRIBUTOR,,"👋 thank you for an incredibly useful project!

I have noticed that my deployed instance on Fly does not include the `next` and `next_url` keys even for a truncated response :

![]() This is publically accessible here: `https://collectif-objets-datasette.fly.dev/collectif-objets.json?sql=select+*+from+mairies`

However when I run the dataset server locally with the same data I get these next keys for the exact same query:

This is publically accessible here: `https://collectif-objets-datasette.fly.dev/collectif-objets.json?sql=select+*+from+mairies`

However when I run the dataset server locally with the same data I get these next keys for the exact same query:

![]() I am wondering if I've missed some config or something specific to deployments on Fly.io?

I am running datasette v0.62, without any specific config :

- locally `poetry run datasette data/collectif-objets.sqlite`

- for the deploy : `poetry run datasette publish fly data/collectif-objets.sqlite`

as visible in [the Makefile](https://github.com/adipasquale/collectif-objets-datasette/blob/main/Makefile). _The very limited codebase is public but the sqlite db is not versioned yet because it is too large._",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1813/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1385026210,I_kwDOBm6k_c5SjdKi,1819,Preserve query on timeout,2182,closed,0,,,3,2022-09-25T13:32:31Z,2022-09-26T23:16:15Z,2022-09-26T23:06:06Z,CONTRIBUTOR,,"If a query hits the timeout it shows a message like:

> SQL query took too long. The time limit is controlled by the [sql_time_limit_ms](https://docs.datasette.io/en/stable/settings.html#sql-time-limit-ms) configuration option.

But the query is lost. Hitting the browser back button shows the query _before_ the one that errored.

It would be nice if the query that errored was preserved for more tweaking. This would make it similar to how ""invalid syntax"" works since #1346 / #619.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1819/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1400083043,I_kwDOBm6k_c5Tc5Jj,1834,inspect data is not used for caching database hash,536941,closed,0,,,0,2022-10-06T17:52:01Z,2022-10-06T20:06:21Z,2022-10-06T20:06:08Z,CONTRIBUTOR,,"When databases are loaded,

https://github.com/simonw/datasette/blob/cb1e093fd361b758120aefc1a444df02462389a3/datasette/app.py#L257-L260

there is nothing preventing the rehashing of the database for immutable databases.

https://github.com/simonw/datasette/blob/cb1e093fd361b758120aefc1a444df02462389a3/datasette/database.py#L50-L53

what i might expect is that relevant values of `inspect_data` get passed to the `Database` class to prevent re-hashing?

With data that is many gigs large, this is a significant start up time.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1834/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1400374908,I_kwDOBm6k_c5TeAZ8,1836,docker image is duplicating db files somehow,536941,open,0,,,13,2022-10-06T22:35:54Z,2022-10-08T16:56:51Z,,CONTRIBUTOR,,"if you look into the docker image created by docker publish, the `datasette inspect` line is duplicating the db files.

here's the result of the inspect command:

I am wondering if I've missed some config or something specific to deployments on Fly.io?

I am running datasette v0.62, without any specific config :

- locally `poetry run datasette data/collectif-objets.sqlite`

- for the deploy : `poetry run datasette publish fly data/collectif-objets.sqlite`

as visible in [the Makefile](https://github.com/adipasquale/collectif-objets-datasette/blob/main/Makefile). _The very limited codebase is public but the sqlite db is not versioned yet because it is too large._",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1813/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1385026210,I_kwDOBm6k_c5SjdKi,1819,Preserve query on timeout,2182,closed,0,,,3,2022-09-25T13:32:31Z,2022-09-26T23:16:15Z,2022-09-26T23:06:06Z,CONTRIBUTOR,,"If a query hits the timeout it shows a message like:

> SQL query took too long. The time limit is controlled by the [sql_time_limit_ms](https://docs.datasette.io/en/stable/settings.html#sql-time-limit-ms) configuration option.

But the query is lost. Hitting the browser back button shows the query _before_ the one that errored.

It would be nice if the query that errored was preserved for more tweaking. This would make it similar to how ""invalid syntax"" works since #1346 / #619.",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1819/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

1400083043,I_kwDOBm6k_c5Tc5Jj,1834,inspect data is not used for caching database hash,536941,closed,0,,,0,2022-10-06T17:52:01Z,2022-10-06T20:06:21Z,2022-10-06T20:06:08Z,CONTRIBUTOR,,"When databases are loaded,

https://github.com/simonw/datasette/blob/cb1e093fd361b758120aefc1a444df02462389a3/datasette/app.py#L257-L260

there is nothing preventing the rehashing of the database for immutable databases.

https://github.com/simonw/datasette/blob/cb1e093fd361b758120aefc1a444df02462389a3/datasette/database.py#L50-L53

what i might expect is that relevant values of `inspect_data` get passed to the `Database` class to prevent re-hashing?

With data that is many gigs large, this is a significant start up time.

",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1834/reactions"", ""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,completed

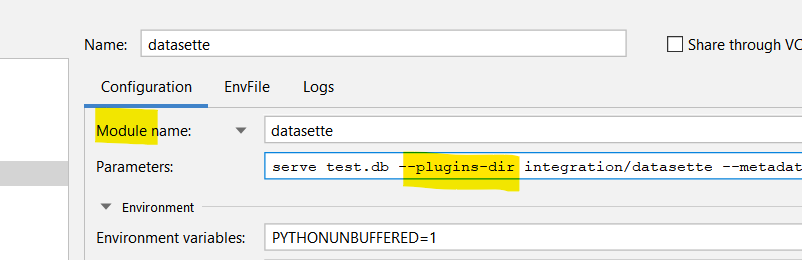

1400374908,I_kwDOBm6k_c5TeAZ8,1836,docker image is duplicating db files somehow,536941,open,0,,,13,2022-10-06T22:35:54Z,2022-10-08T16:56:51Z,,CONTRIBUTOR,,"if you look into the docker image created by docker publish, the `datasette inspect` line is duplicating the db files.

here's the result of the inspect command:

![]() ",107914493,issue,,,"{""url"": ""https://api.github.com/repos/simonw/datasette/issues/1836/reactions"", ""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",,