`.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",726094754,Add horizontal scrollbar to tables,

https://github.com/simonw/datasette/issues/1036#issuecomment-713226726,https://api.github.com/repos/simonw/datasette/issues/1036,713226726,MDEyOklzc3VlQ29tbWVudDcxMzIyNjcyNg==,9599,simonw,2020-10-21T01:04:25Z,2020-10-21T01:04:25Z,OWNER,"Extra security idea: a `blob_download_host` setting which can be used to indicate a host that should be used for downloads - for example `datasettestatic.com`. If this setting is populated then binary downloads are served from paths on that host only, and no other Datasette URLs from that host will be served.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/262#issuecomment-713208667,https://api.github.com/repos/simonw/datasette/issues/262,713208667,MDEyOklzc3VlQ29tbWVudDcxMzIwODY2Nw==,9599,simonw,2020-10-21T00:03:18Z,2020-10-21T00:03:18Z,OWNER,"I think I should prioritize the facets component of this, since that could have significant performance wins while also supporting `datasette-graphql`.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323658641,Add ?_extra= mechanism for requesting extra properties in JSON,

https://github.com/simonw/datasette/issues/262#issuecomment-713200782,https://api.github.com/repos/simonw/datasette/issues/262,713200782,MDEyOklzc3VlQ29tbWVudDcxMzIwMDc4Mg==,9599,simonw,2020-10-20T23:41:30Z,2020-10-20T23:41:30Z,OWNER,This is now blocking https://github.com/simonw/datasette-graphql/issues/61 because that issue needs a way to turn off suggested facets when retrieving the results of a table query.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323658641,Add ?_extra= mechanism for requesting extra properties in JSON,

https://github.com/simonw/datasette/issues/1034#issuecomment-713191819,https://api.github.com/repos/simonw/datasette/issues/1034,713191819,MDEyOklzc3VlQ29tbWVudDcxMzE5MTgxOQ==,9599,simonw,2020-10-20T23:12:58Z,2020-10-20T23:12:58Z,OWNER,"Enzo has a great solution here: https://twitter.com/enzo_mdd/status/1318685442976436226

> Or maybe an option for a url. This keeps the CSV small but allows scripts to download binary data as needed.

In #1036 I'm planning on adding a way for users to access BLOB data. I can include that URL in the CSV output.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1036#issuecomment-713186189,https://api.github.com/repos/simonw/datasette/issues/1036,713186189,MDEyOklzc3VlQ29tbWVudDcxMzE4NjE4OQ==,9599,simonw,2020-10-20T22:56:33Z,2020-10-20T22:56:33Z,OWNER,I think this plus the binary-CSV stuff in #1034 will justify a dedicated section of the documentation to talk about how Datasette handles binary BLOB columns.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/1036#issuecomment-713185871,https://api.github.com/repos/simonw/datasette/issues/1036,713185871,MDEyOklzc3VlQ29tbWVudDcxMzE4NTg3MQ==,9599,simonw,2020-10-20T22:55:36Z,2020-10-20T22:55:36Z,OWNER,I can also use a `Content-Disposition` header to force a download. I'm reasonably confident that the combination of `Content-Disposition` and `X-Content-Type-Options: nosniff` and `application/binary` will let me allow users to download the contents of arbitrary BLOB columns without any XSS risk.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/1036#issuecomment-713185173,https://api.github.com/repos/simonw/datasette/issues/1036,713185173,MDEyOklzc3VlQ29tbWVudDcxMzE4NTE3Mw==,9599,simonw,2020-10-20T22:53:41Z,2020-10-20T22:53:41Z,OWNER,"https://security.stackexchange.com/questions/12896/does-x-content-type-options-really-prevent-content-sniffing-attacks says:

> In Tangled Web Michal Zalewski says:

>

> > Refrain from using Content-Type: application/octet-stream and use application/binary instead, especially for unknown document types. Refrain from returning Content-Type: text/plain.

> >

> > For example, any code-hosting platform must exercise caution when returning executables or source archives as application/octet-stream, because there is a risk they may be misinterpreted as HTML and displayed inline.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/1036#issuecomment-713184374,https://api.github.com/repos/simonw/datasette/issues/1036,713184374,MDEyOklzc3VlQ29tbWVudDcxMzE4NDM3NA==,9599,simonw,2020-10-20T22:51:22Z,2020-10-20T22:51:22Z,OWNER,"From https://hackerone.com/reports/126197:

> archive.uber.com mirrors pypi. When downloading `.tar.gz` files from archive.uber.com, the MIME type is `application/octet-stream`. Injecting `` into the start of the `.tar.gz` causes an XSS in Internet Explorer due to MIME sniffing.

So you do have to be careful not to open accidental XSS holes with `application/octet-stream` thanks to (presumably older) versions of IE.

From that thread it looks like the solution is to add a `X-Content-Type-Options: nosniff` header.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/1036#issuecomment-713183306,https://api.github.com/repos/simonw/datasette/issues/1036,713183306,MDEyOklzc3VlQ29tbWVudDcxMzE4MzMwNg==,9599,simonw,2020-10-20T22:48:10Z,2020-10-20T22:48:10Z,OWNER,Twitter thread: https://twitter.com/dancow/status/1318681053347840005,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725996507,Make it possible to download BLOB data from the Datasette UI,

https://github.com/simonw/datasette/issues/1034#issuecomment-713176082,https://api.github.com/repos/simonw/datasette/issues/1034,713176082,MDEyOklzc3VlQ29tbWVudDcxMzE3NjA4Mg==,9599,simonw,2020-10-20T22:27:33Z,2020-10-20T22:27:33Z,OWNER,"This feels good to me - it's consistent with how other features in Datasette work, and it means users who need the binary data in CSV (for whatever reason) can get it if they want to.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1034#issuecomment-713175741,https://api.github.com/repos/simonw/datasette/issues/1034,713175741,MDEyOklzc3VlQ29tbWVudDcxMzE3NTc0MQ==,9599,simonw,2020-10-20T22:26:45Z,2020-10-20T22:26:45Z,OWNER,"> New idea: since binary in CSV doesn't make sense anyway, emulate Datasette's HTML UI default and output this:

>
> id,title,data
> 1,Some title,
> 2,Other title,
>
> Then allow users to add ?_base64=1 to the URL to get base64 instead

> https://twitter.com/simonw/status/1318679950635888641","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1034#issuecomment-713174690,https://api.github.com/repos/simonw/datasette/issues/1034,713174690,MDEyOklzc3VlQ29tbWVudDcxMzE3NDY5MA==,9599,simonw,2020-10-20T22:23:50Z,2020-10-20T22:23:50Z,OWNER,Or... default to `` and support a `?_base64=1` option which outputs in base64 instead.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1034#issuecomment-713174341,https://api.github.com/repos/simonw/datasette/issues/1034,713174341,MDEyOklzc3VlQ29tbWVudDcxMzE3NDM0MQ==,9599,simonw,2020-10-20T22:22:53Z,2020-10-20T22:23:14Z,OWNER,"An even easier option: do what the Datasette UI does and output `` for that CSV cell, as seen on https://latest.datasette.io/fixtures/binary_data","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1034#issuecomment-713172901,https://api.github.com/repos/simonw/datasette/issues/1034,713172901,MDEyOklzc3VlQ29tbWVudDcxMzE3MjkwMQ==,9599,simonw,2020-10-20T22:19:10Z,2020-10-20T22:20:28Z,OWNER,"I could go with the same format as `datasette-render-binary` but using `0x00` as the format for the hex bytes.

0x15 0x1C 0x02 0xC7 JFIF 0x00 0x01

Problem with this is that it's ambiguous: if the ASCII characters `0x15` occur in the text they will be indistinguishable from those hex bytes.
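
A minimal sketch of that ambiguity, assuming a hypothetical `render_binary()` helper that keeps printable ASCII and writes every other byte as `0xNN` (a guess at the format described here, not the code in `datasette-render-binary`):

```python
def render_binary(data):
    # Hypothetical renderer: printable ASCII runs stay as text, other bytes become 0xNN
    tokens = []
    run = ''
    for byte in data:
        if 0x21 <= byte <= 0x7E:
            run += chr(byte)
        else:
            if run:
                tokens.append(run)
                run = ''
            tokens.append('0x{:02X}'.format(byte))
    if run:
        tokens.append(run)
    return ' '.join(tokens)


one_byte = render_binary(b'\x15')     # a single byte with value 0x15
four_chars = render_binary(b'0x15')   # the four ASCII characters 0, x, 1, 5
print(one_byte == four_chars)
```

Both inputs render to the same text, so the output cannot be turned back into the original bytes.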

But since representing binary data in CSV fundamentally doesn't make sense I'm not sure if that really matters.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/741#issuecomment-713171742,https://api.github.com/repos/simonw/datasette/issues/741,713171742,MDEyOklzc3VlQ29tbWVudDcxMzE3MTc0Mg==,9599,simonw,2020-10-20T22:16:25Z,2020-10-20T22:16:25Z,OWNER,See also #992 which will rename `--config` to `--setting`.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",607223136,"Replace ""datasette publish --extra-options"" with ""--setting""",

https://github.com/simonw/datasette/issues/262#issuecomment-713170979,https://api.github.com/repos/simonw/datasette/issues/262,713170979,MDEyOklzc3VlQ29tbWVudDcxMzE3MDk3OQ==,9599,simonw,2020-10-20T22:14:37Z,2020-10-20T22:14:37Z,OWNER,"I think it's worth having a plugin hook for this - it can be same hook that is used internally. Maybe `register_extra` - it lets you return one or more `extra` implementations, each with a name and an async function that gets called.

Things like suggested facets will become `register_extra` hooks. Maybe actual facets too?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323658641,Add ?_extra= mechanism for requesting extra properties in JSON,

https://github.com/simonw/datasette/issues/262#issuecomment-713170284,https://api.github.com/repos/simonw/datasette/issues/262,713170284,MDEyOklzc3VlQ29tbWVudDcxMzE3MDI4NA==,9599,simonw,2020-10-20T22:13:01Z,2020-10-20T22:13:01Z,OWNER,In the documentation for `?_extra=` I think I'll emphasize the comma-separated version of it. Also: there will be `?_extra=` values which act as aliases for collection combinations - e.g. `?_extra=full` will toggle everything.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323658641,Add ?_extra= mechanism for requesting extra properties in JSON,

https://github.com/simonw/datasette/issues/782#issuecomment-712986115,https://api.github.com/repos/simonw/datasette/issues/782,712986115,MDEyOklzc3VlQ29tbWVudDcxMjk4NjExNQ==,9599,simonw,2020-10-20T16:28:46Z,2020-10-20T16:29:51Z,OWNER,"I think this all comes down to how the `?_extras=` mechanism works (see #262), as first hinted at in a30c5b220c15360d575e94b0e67f3255e120b916 (see commit message) when I added this long-forgotten undocumented feature: https://latest.datasette.io/fixtures/attraction_characteristic/2.json?_extras=foreign_key_tables

Extras need to be able to execute additional SQL, since that would solve the problem we have now where the expensive ""suggested facets"" code runs on all `.json` output even when its results are not being shown.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",627794879,Redesign default .json format,

https://github.com/simonw/datasette/issues/1035#issuecomment-712976314,https://api.github.com/repos/simonw/datasette/issues/1035,712976314,MDEyOklzc3VlQ29tbWVudDcxMjk3NjMxNA==,9599,simonw,2020-10-20T16:21:42Z,2020-10-20T16:21:42Z,OWNER,"Makes me question if `datasette.urls` should grow functionality equivalent to the other path and querystring manipulation methods in `datasette.utils`:

https://github.com/simonw/datasette/blob/66120a7a1cb592e8a21164cf537f62a4d7ab1dfc/datasette/utils/__init__.py#L216-L279","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725743755,"datasette.urls.table(..., format=""json"") argument",

https://github.com/simonw/datasette/issues/1035#issuecomment-712965574,https://api.github.com/repos/simonw/datasette/issues/1035,712965574,MDEyOklzc3VlQ29tbWVudDcxMjk2NTU3NA==,9599,simonw,2020-10-20T16:13:57Z,2020-10-20T16:13:57Z,OWNER,"That `renderers[key] = path_with_format(` is in a base class which can be used for both arbitrary queries, canned queries and the table view. I think that's OK, but it means that the `format=""json""` argument on `datasette.urls.table()` won't be used by Datasette internally, it will just be available for plugins.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725743755,"datasette.urls.table(..., format=""json"") argument",

https://github.com/simonw/datasette/issues/1035#issuecomment-712963959,https://api.github.com/repos/simonw/datasette/issues/1035,712963959,MDEyOklzc3VlQ29tbWVudDcxMjk2Mzk1OQ==,9599,simonw,2020-10-20T16:11:25Z,2020-10-20T16:11:25Z,OWNER,"Relevant code:

https://github.com/simonw/datasette/blob/091441a4449beae559a8c0d007376dc85d3aa624/datasette/utils/__init__.py#L681-L696

Only used here:

https://github.com/simonw/datasette/blob/091441a4449beae559a8c0d007376dc85d3aa624/datasette/views/base.py#L498-L502","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725743755,"datasette.urls.table(..., format=""json"") argument",

https://github.com/simonw/datasette/issues/1026#issuecomment-712962517,https://api.github.com/repos/simonw/datasette/issues/1026,712962517,MDEyOklzc3VlQ29tbWVudDcxMjk2MjUxNw==,9599,simonw,2020-10-20T16:09:12Z,2020-10-20T16:09:12Z,OWNER,"That `datasette.urls.table(""db"", ""table"") + "".json""` example is bad because if the table name contains a `.` it should be `?_format=json` instead.
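
A rough sketch of the rule, assuming a hypothetical standalone `table_path()` function (not Datasette's implementation) that ignores `base_url` and URL escaping:

```python
def table_path(database, table, format=None):
    # Hypothetical helper for illustration only
    path = '/{}/{}'.format(database, table)
    if format is None:
        return path
    if '.' in table:
        # A .json suffix would be ambiguous here, so use the querystring form
        return path + '?_format=' + format
    return path + '.' + format


print(table_path('db', 'table', format='json'))
print(table_path('db', 'my.table', format='json'))
```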

Maybe `.table()` should have a `format=""json""` option that knows how to do this.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722738988,How should datasette.client interact with base_url,

https://github.com/simonw/datasette/issues/1026#issuecomment-712959034,https://api.github.com/repos/simonw/datasette/issues/1026,712959034,MDEyOklzc3VlQ29tbWVudDcxMjk1OTAzNA==,9599,simonw,2020-10-20T16:03:33Z,2020-10-20T16:03:55Z,OWNER,"Reconsidering this: I think the `.get()` etc methods should automatically add the `base_url` prefix for you, since these APIs are only intended to make internal calls.

The clincher on this is when I went to add a section to the `datasette.client` documentation recommending you use `datasette.urls.path()` for every call to them that you make.

But there's a problem: to handle table name escaping users are likely to want to use `datasette.urls.table()` anyway, like this:

response = await datasette.client.get(datasette.urls.table(""db"", ""table"") + "".json"")

This risks adding the `base_url` prefix twice. Maybe the `.table()` method could return a string-like object that is marked as already having the `base_url` prefix added, so the `client.get()` method knows not to add it again.
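
A minimal sketch of that string-like marker, assuming a hypothetical `PrefixedPath` class and `with_base_url()` helper (neither exists in Datasette) and a made-up `/prefix/` value for `base_url`:

```python
class PrefixedPath(str):
    '''Hypothetical marker: a str that already carries the base_url prefix.'''

    def __add__(self, other):
        # Keep the marker when a suffix such as '.json' is appended
        return PrefixedPath(str.__add__(self, other))


def with_base_url(path, base_url='/prefix/'):
    # Hypothetical stand-in for what client.get() could do before a request
    if isinstance(path, PrefixedPath):
        return path  # already prefixed, do not add it again
    return PrefixedPath(base_url.rstrip('/') + '/' + path.lstrip('/'))


table_path = with_base_url('/db/table')  # roughly what urls.table() might return
json_path = table_path + '.json'
print(with_base_url(json_path))
```

Overriding `__add__` matters because plain `str` concatenation would otherwise return an unmarked `str`, and the prefix would then be added a second time.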

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722738988,How should datasette.client interact with base_url,

https://github.com/simonw/datasette/issues/991#issuecomment-712855389,https://api.github.com/repos/simonw/datasette/issues/991,712855389,MDEyOklzc3VlQ29tbWVudDcxMjg1NTM4OQ==,24740,furilo,2020-10-20T13:36:41Z,2020-10-20T13:36:41Z,NONE,"Here is one quick sketch (done in Figma :P) for an idea: a possible filter to switch between showing all tables from all databases, or grouping tables by database.

(the switch is interactive)

All tables: https://www.figma.com/proto/BjFrMroEtmVx6EeRjvSrox/Datasette-test?node-id=1%3A2&viewport=536%2C348%2C0.5&scaling=min-zoom

Grouped: https://www.figma.com/proto/BjFrMroEtmVx6EeRjvSrox/Datasette-test?node-id=3%3A974&viewport=536%2C348%2C0.5&scaling=min-zoom

When only 1 database: https://www.figma.com/proto/BjFrMroEtmVx6EeRjvSrox/Datasette-test?node-id=1%3A162&viewport=536%2C348%2C0.5&scaling=min-zoom

If this is useful, I can send some more suggestions/sketches.

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",714377268,Redesign application homepage,

https://github.com/simonw/datasette/issues/1023#issuecomment-712607608,https://api.github.com/repos/simonw/datasette/issues/1023,712607608,MDEyOklzc3VlQ29tbWVudDcxMjYwNzYwOA==,9599,simonw,2020-10-20T05:47:42Z,2020-10-20T05:47:42Z,OWNER,Requested alpha testers in https://github.com/simonw/datasette/issues/838#issuecomment-712604364,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722673818,Fix issues relating to base_url,

https://github.com/simonw/datasette/issues/1026#issuecomment-712607227,https://api.github.com/repos/simonw/datasette/issues/1026,712607227,MDEyOklzc3VlQ29tbWVudDcxMjYwNzIyNw==,9599,simonw,2020-10-20T05:46:44Z,2020-10-20T05:46:44Z,OWNER,We have a solution for this now: `datasette.urls` from #1033 can be used by plugins to assemble the correct URLs to pass to `.get()` and friends.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722738988,How should datasette.client interact with base_url,

https://github.com/simonw/datasette/issues/1023#issuecomment-712604541,https://api.github.com/repos/simonw/datasette/issues/1023,712604541,MDEyOklzc3VlQ29tbWVudDcxMjYwNDU0MQ==,9599,simonw,2020-10-20T05:39:44Z,2020-10-20T05:39:44Z,OWNER,Here's the alpha with most of this work ready for people to preview: https://github.com/simonw/datasette/releases/tag/0.51a0,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722673818,Fix issues relating to base_url,

https://github.com/simonw/datasette/issues/838#issuecomment-712604364,https://api.github.com/repos/simonw/datasette/issues/838,712604364,MDEyOklzc3VlQ29tbWVudDcxMjYwNDM2NA==,9599,simonw,2020-10-20T05:39:15Z,2020-10-20T05:39:15Z,OWNER,"OK, I've made a ton of improvements to how the `base_url` setting works - see tickets linked from #1023. I've just pushed out an alpha release with those changes in it: https://github.com/simonw/datasette/releases/tag/0.51a0

@tsibley @tballison @ChristopherWilks I'd really appreciate your help testing this alpha!

You can install it with:

pip install datasette==0.51a0

It should work with just `ProxyPass`, without needing the `ProxyPassReverse` setting.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",637395097,Incorrect URLs when served behind a proxy with base_url set,

https://github.com/simonw/datasette/issues/865#issuecomment-712597762,https://api.github.com/repos/simonw/datasette/issues/865,712597762,MDEyOklzc3VlQ29tbWVudDcxMjU5Nzc2Mg==,9599,simonw,2020-10-20T05:22:59Z,2020-10-20T05:22:59Z,OWNER,"OK, this is definitely working now.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",644582921,"base_url doesn't seem to work when adding criteria and clicking ""apply""",

https://github.com/simonw/datasette/issues/1025#issuecomment-712593790,https://api.github.com/repos/simonw/datasette/issues/1025,712593790,MDEyOklzc3VlQ29tbWVudDcxMjU5Mzc5MA==,9599,simonw,2020-10-20T05:12:36Z,2020-10-20T05:12:36Z,OWNER,"I'm going to leave the cookie-setting code as it is, with cookies defaulting to the top-level `""/""` path.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722724086,"Fix last remaining links to ""/"" that do not respect base_url",

https://github.com/simonw/datasette/issues/782#issuecomment-712590398,https://api.github.com/repos/simonw/datasette/issues/782,712590398,MDEyOklzc3VlQ29tbWVudDcxMjU5MDM5OA==,9599,simonw,2020-10-20T05:03:46Z,2020-10-20T05:04:09Z,OWNER,"OK, https://latest-with-plugins.datasette.io/ is running that now - e.g. https://latest-with-plugins.datasette.io/fixtures/roadside_attractions.json-preview or https://latest-with-plugins.datasette.io/fixtures/compound_three_primary_keys.json-preview

```json
{
  ""rows"": [
    {
      ""pk"": 1,
      ""name"": ""The Mystery Spot"",
      ""address"": ""465 Mystery Spot Road, Santa Cruz, CA 95065"",
      ""latitude"": 37.0167,
      ""longitude"": -122.0024
    },
    {
      ""pk"": 2,
      ""name"": ""Winchester Mystery House"",
      ""address"": ""525 South Winchester Boulevard, San Jose, CA 95128"",
      ""latitude"": 37.3184,
      ""longitude"": -121.9511
    },
    {
      ""pk"": 3,
      ""name"": ""Burlingame Museum of PEZ Memorabilia"",
      ""address"": ""214 California Drive, Burlingame, CA 94010"",
      ""latitude"": 37.5793,
      ""longitude"": -122.3442
    },
    {
      ""pk"": 4,
      ""name"": ""Bigfoot Discovery Museum"",
      ""address"": ""5497 Highway 9, Felton, CA 95018"",
      ""latitude"": 37.0414,
      ""longitude"": -122.0725
    }
  ],
  ""total"": 4,
  ""next_url"": null
}
```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",627794879,Redesign default .json format,

https://github.com/simonw/datasette/issues/782#issuecomment-712585921,https://api.github.com/repos/simonw/datasette/issues/782,712585921,MDEyOklzc3VlQ29tbWVudDcxMjU4NTkyMQ==,9599,simonw,2020-10-20T04:48:01Z,2020-10-20T04:48:01Z,OWNER,I'll update `datasette-json-preview` with that now.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",627794879,Redesign default .json format,

https://github.com/simonw/datasette/issues/782#issuecomment-712585687,https://api.github.com/repos/simonw/datasette/issues/782,712585687,MDEyOklzc3VlQ29tbWVudDcxMjU4NTY4Nw==,9599,simonw,2020-10-20T04:47:02Z,2020-10-20T04:47:12Z,OWNER,"Great point about CORS, I hadn't considered that.

I think I'm going to keep the `Link:` header (added in #1014) because I quite enjoy using it with GitHub and WordPress, but I'm not going to have it be the default way of doing pagination. For the default shape I'm now leaning towards this:

```json
{
  ""total"": 36,
  ""rows"": [{""id"": 1, ""name"": ""Cleo""}],
  ""next_url"": ""https://latest-with-plugins.datasette.io/fixtures/facetable.json?_next=5""
}
```
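
A client could page through that shape roughly like this. It is only a sketch: `iter_rows()` and `fetch_json` are hypothetical names, and two in-memory pages stand in for real HTTP responses:

```python
def iter_rows(first_url, fetch_json):
    # fetch_json is a hypothetical callable: URL in, decoded JSON dict out
    url = first_url
    while url:
        page = fetch_json(url)
        yield from page['rows']
        url = page.get('next_url')  # null (None) on the last page


# Two fake pages standing in for real responses
pages = {
    '/t.json': {'total': 3, 'rows': [{'id': 1}, {'id': 2}], 'next_url': '/t.json?_next=2'},
    '/t.json?_next=2': {'total': 3, 'rows': [{'id': 3}], 'next_url': None},
}
all_rows = list(iter_rows('/t.json', pages.__getitem__))
print(len(all_rows))
```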

So three keys: `total`, `rows` and `next_url`. Then extra keys can be added using `?_extra=` with various named bundles.","{""total_count"": 3, ""+1"": 3, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",627794879,Redesign default .json format,

https://github.com/simonw/datasette/issues/1034#issuecomment-712582699,https://api.github.com/repos/simonw/datasette/issues/1034,712582699,MDEyOklzc3VlQ29tbWVudDcxMjU4MjY5OQ==,9599,simonw,2020-10-20T04:36:04Z,2020-10-20T04:36:14Z,OWNER,Asked for ideas on Twitter: https://twitter.com/simonw/status/1318409558805467136,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

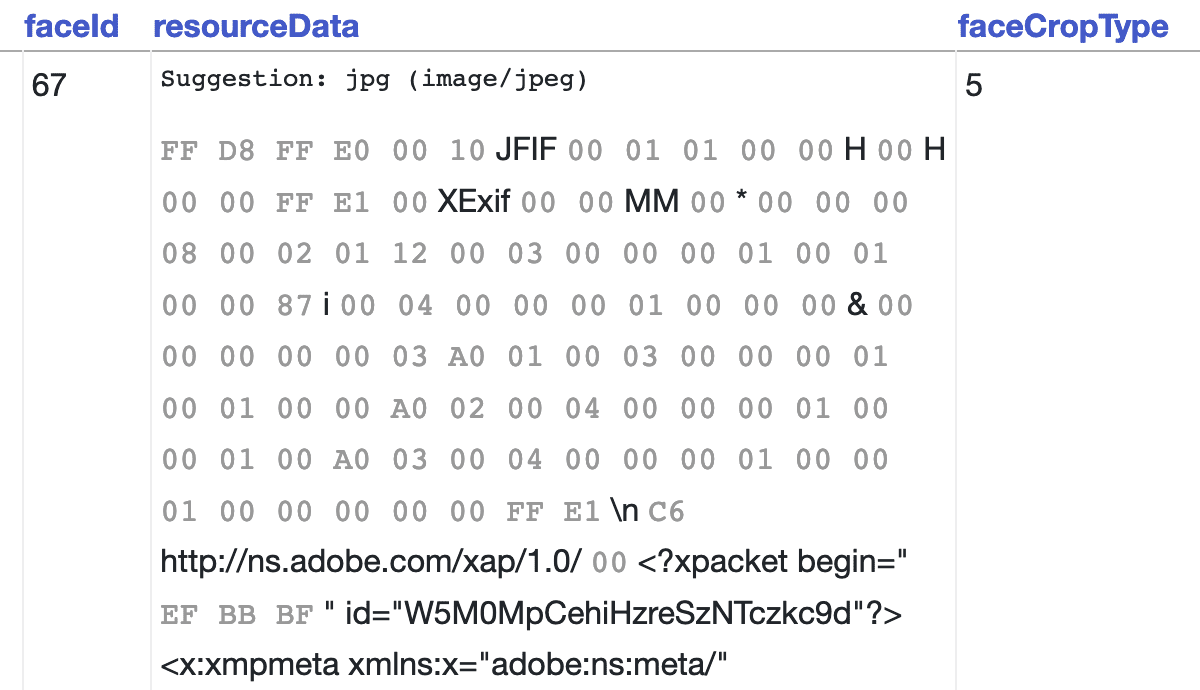

https://github.com/simonw/datasette/issues/1034#issuecomment-712581994,https://api.github.com/repos/simonw/datasette/issues/1034,712581994,MDEyOklzc3VlQ29tbWVudDcxMjU4MTk5NA==,9599,simonw,2020-10-20T04:33:28Z,2020-10-20T04:33:28Z,OWNER,"The [datasette-render-binary](https://github.com/simonw/datasette-render-binary) plugin does this, which I really like - but without the different coloured fonts I'm not sure how readable it would be as just plain text:

Really the goal here is to find the most human-friendly option, so that people looking at the output have a vague idea what's going on. That's why I'm not leaping at the chance to use base64.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1034#issuecomment-712580976,https://api.github.com/repos/simonw/datasette/issues/1034,712580976,MDEyOklzc3VlQ29tbWVudDcxMjU4MDk3Ng==,9599,simonw,2020-10-20T04:29:23Z,2020-10-20T04:29:23Z,OWNER,Most obvious option is base64. Any other potential solutions I'm missing?,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725184645,Better way of representing binary data in .csv output,

https://github.com/simonw/datasette/issues/1025#issuecomment-712579674,https://api.github.com/repos/simonw/datasette/issues/1025,712579674,MDEyOklzc3VlQ29tbWVudDcxMjU3OTY3NA==,9599,simonw,2020-10-20T04:24:10Z,2020-10-20T04:24:10Z,OWNER,"Changed my mind, `error.html` needs access to `urls` in order to link to its CSS file. Passing it after all (it already got passed `ds.config(""base_url"")` so `ds` was available previously).","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722724086,"Fix last remaining links to ""/"" that do not respect base_url",

https://github.com/simonw/datasette/issues/782#issuecomment-712569695,https://api.github.com/repos/simonw/datasette/issues/782,712569695,MDEyOklzc3VlQ29tbWVudDcxMjU2OTY5NQ==,222245,carlmjohnson,2020-10-20T03:45:48Z,2020-10-20T03:46:14Z,NONE,"I vote against headers. It has a lot of strikes against it: poor discoverability, new developers often don’t know how to use them, makes CORS harder, makes it hard to use eg with JQ, needs ad hoc specification for each bit of metadata, etc.

The only advantage of headers is that you don’t need to do .rows, but that’s actually good as a data validation step anyway—if .rows is missing assume there’s an error and do your error handling path instead of parsing the rest.","{""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",627794879,Redesign default .json format,

https://github.com/simonw/datasette/issues/1025#issuecomment-712481127,https://api.github.com/repos/simonw/datasette/issues/1025,712481127,MDEyOklzc3VlQ29tbWVudDcxMjQ4MTEyNw==,9599,simonw,2020-10-19T22:40:37Z,2020-10-20T01:21:36Z,OWNER,Was blocked on #904 - now unblocked.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722724086,"Fix last remaining links to ""/"" that do not respect base_url",

https://github.com/simonw/datasette/issues/1033#issuecomment-712529413,https://api.github.com/repos/simonw/datasette/issues/1033,712529413,MDEyOklzc3VlQ29tbWVudDcxMjUyOTQxMw==,9599,simonw,2020-10-20T01:21:12Z,2020-10-20T01:21:12Z,OWNER,Also refs #1023,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",725099777,datasette.urls.static_plugins(...) method,

https://github.com/simonw/datasette/issues/1025#issuecomment-712525557,https://api.github.com/repos/simonw/datasette/issues/1025,712525557,MDEyOklzc3VlQ29tbWVudDcxMjUyNTU1Nw==,9599,simonw,2020-10-20T01:07:02Z,2020-10-20T01:07:02Z,OWNER,"I fixed the `queries.html` one. I'm not going to fix these two:

```
datasette/templates/error.html: home
datasette/templates/patterns.html: home /
```

Because the `error.html` one does not get passed a context (which makes sense since an error has occurred) and the pattern portfolio doesn't need to link to anywhere in particular.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722724086,"Fix last remaining links to ""/"" that do not respect base_url",

https://github.com/simonw/datasette/issues/904#issuecomment-712524699,https://api.github.com/repos/simonw/datasette/issues/904,712524699,MDEyOklzc3VlQ29tbWVudDcxMjUyNDY5OQ==,9599,simonw,2020-10-20T01:04:12Z,2020-10-20T01:04:12Z,OWNER,Documentation is https://docs.datasette.io/en/latest/writing_plugins.html#building-urls-within-plugins and https://docs.datasette.io/en/latest/internals.html#internals-datasette-urls,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-712483066,https://api.github.com/repos/simonw/datasette/issues/904,712483066,MDEyOklzc3VlQ29tbWVudDcxMjQ4MzA2Ng==,9599,simonw,2020-10-19T22:46:48Z,2020-10-19T22:46:48Z,OWNER,"OK, I'm committing to `datasette.urls.table()` and friends, which will be available to (and documented for use in) plugins and will be exposed to templates as `{{ urls.table(...) }}`.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/1020#issuecomment-712482504,https://api.github.com/repos/simonw/datasette/issues/1020,712482504,MDEyOklzc3VlQ29tbWVudDcxMjQ4MjUwNA==,9599,simonw,2020-10-19T22:45:01Z,2020-10-19T22:45:01Z,OWNER,"I'm having trouble coming up with the syntax for this. Here's one option:

```python
response = await datasette.client.get(path, request=request)
```

But this feels confusing to me. We're not using the `request=` argument as a request - we're using it as a source of some default request values (the cookies and incoming headers, but not the path).

We're essentially combining that request with the other arguments passed to `.get()`.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",721068929,Method for datasette.client() to forward on authentication,

https://github.com/simonw/datasette/issues/1020#issuecomment-712482015,https://api.github.com/repos/simonw/datasette/issues/1020,712482015,MDEyOklzc3VlQ29tbWVudDcxMjQ4MjAxNQ==,9599,simonw,2020-10-19T22:43:24Z,2020-10-19T22:43:24Z,OWNER,"... unless I want to support authentication mechanisms that work based on incoming IP address instead, in which case there's an argument for copying more over from the incoming request.

Tailscale is a good example of a system where authentication based on IP address can actually work well, so this is worth doing. Also, there might be authentication mechanisms which work by setting a custom header on the incoming request (not to mention the `Authorization` header).","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",721068929,Method for datasette.client() to forward on authentication,

https://github.com/simonw/datasette/issues/1020#issuecomment-712481568,https://api.github.com/repos/simonw/datasette/issues/1020,712481568,MDEyOklzc3VlQ29tbWVudDcxMjQ4MTU2OA==,9599,simonw,2020-10-19T22:41:59Z,2020-10-19T22:41:59Z,OWNER,"It turns out this works just fine:

```python

response = await datasette.client.get(path, cookies=request.cookies)

```

So I don't need a mechanism for this. I'm going to add this to the documentation instead.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",721068929,Method for datasette.client() to forward on authentication,

https://github.com/simonw/datasette/issues/1028#issuecomment-712480866,https://api.github.com/repos/simonw/datasette/issues/1028,712480866,MDEyOklzc3VlQ29tbWVudDcxMjQ4MDg2Ng==,9599,simonw,2020-10-19T22:39:51Z,2020-10-19T22:39:51Z,OWNER,Documentation: https://docs.datasette.io/en/latest/spatialite.html,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723803777,--load-extension=spatialite shortcut,

https://github.com/simonw/datasette/issues/1032#issuecomment-712397537,https://api.github.com/repos/simonw/datasette/issues/1032,712397537,MDEyOklzc3VlQ29tbWVudDcxMjM5NzUzNw==,236498,saulpw,2020-10-19T19:37:55Z,2020-10-19T19:37:55Z,NONE,"python-dateutil is awesome, but it can only guess at one date at a time. So if you have a column of dates that are (presumably) in the same format, it can't use the full set of dates to deduce the format. Also, once it has parsed a date, you can't get the format it used, whether to parse or render other dates. These limitations prevent it from being a silver bullet for date parsing, though they're not enough for me to stop using it!","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712367285,https://api.github.com/repos/simonw/datasette/issues/1032,712367285,MDEyOklzc3VlQ29tbWVudDcxMjM2NzI4NQ==,9599,simonw,2020-10-19T18:39:32Z,2020-10-19T18:39:32Z,OWNER,https://github.com/digital-land/brownfield-land-collection/blob/a09ddf9960a6af59e72dc02448f7b645e59bf227/bin/harmonise.py#L217-L247 is a beautiful example of this problem.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712365439,https://api.github.com/repos/simonw/datasette/issues/1032,712365439,MDEyOklzc3VlQ29tbWVudDcxMjM2NTQzOQ==,9599,simonw,2020-10-19T18:35:50Z,2020-10-19T18:37:57Z,OWNER,"Maybe I should add `dateutil` as a dependency here?

`pendulum` and `arrow` both depend on it so it's pretty deeply embedded in the Python date ecosystem. Adding it as a dependency seems reasonable.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712365236,https://api.github.com/repos/simonw/datasette/issues/1032,712365236,MDEyOklzc3VlQ29tbWVudDcxMjM2NTIzNg==,9599,simonw,2020-10-19T18:35:25Z,2020-10-19T18:35:25Z,OWNER,"Since I'm dealing with tables of data I usually have a whole column of examples, so heuristics that check for numbers-greater-than-12 could actually work well for many cases.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,
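A minimal sketch of the numbers-greater-than-12 heuristic described above, applied to a whole column of slash-separated dates. The function name `guess_day_month_order` and its return values are hypothetical, chosen only for illustration; this is not Datasette code. It returns None when the column is ambiguous or contradictory, so the caller can still show the user which format was assumed.

```python
from collections import Counter


def guess_day_month_order(values):
    # Count how many values can only be day-first or only month-first.
    votes = Counter()
    for value in values:
        parts = value.strip().split('/')
        if len(parts) != 3 or not all(p.isdigit() for p in parts):
            continue
        first, second = int(parts[0]), int(parts[1])
        if first > 12 and 1 <= second <= 12:
            # A first number above 12 cannot be a month
            votes['dd/mm'] += 1
        elif second > 12 and 1 <= first <= 12:
            # A second number above 12 cannot be a month
            votes['mm/dd'] += 1
    if votes['dd/mm'] and not votes['mm/dd']:
        return 'dd/mm'
    if votes['mm/dd'] and not votes['dd/mm']:
        return 'mm/dd'
    # No decisive value, or the column mixes both formats
    return None
```

A single decisive value is enough to settle the column, which is why having the whole column available helps compared with parsing one date at a time.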

https://github.com/simonw/datasette/issues/1032#issuecomment-712364532,https://api.github.com/repos/simonw/datasette/issues/1032,712364532,MDEyOklzc3VlQ29tbWVudDcxMjM2NDUzMg==,9599,simonw,2020-10-19T18:34:10Z,2020-10-19T18:34:10Z,OWNER,Lots of great example date data in https://biglocal.datasettes.com/COVID_WARN_Notices,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712364317,https://api.github.com/repos/simonw/datasette/issues/1032,712364317,MDEyOklzc3VlQ29tbWVudDcxMjM2NDMxNw==,9599,simonw,2020-10-19T18:33:42Z,2020-10-19T18:33:42Z,OWNER,"Related challenge: timezones. I think I'll punt on those for the moment and just concentrate on dates, but I should keep them in mind.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712363825,https://api.github.com/repos/simonw/datasette/issues/1032,712363825,MDEyOklzc3VlQ29tbWVudDcxMjM2MzgyNQ==,9599,simonw,2020-10-19T18:32:43Z,2020-10-19T18:32:43Z,OWNER,"Horrible thought: I bet there is data out there that uses more than one date format in the same table! So this needs to be exposed as a visible per-column setting.

Column action menu can help here, although it's not yet the full solution because it isn't yet visible on mobile.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/1032#issuecomment-712363344,https://api.github.com/repos/simonw/datasette/issues/1032,712363344,MDEyOklzc3VlQ29tbWVudDcxMjM2MzM0NA==,9599,simonw,2020-10-19T18:31:46Z,2020-10-19T18:31:46Z,OWNER,"As always with dates, the challenge is America. `mm/dd/yy` is indistinguishable from the more sensible `dd/mm/yy`. It's crucially important to visibly tell people which format you assumed, otherwise all kinds of weird invisible bugs can manifest.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724878151,Bring date parsing into Datasette core,

https://github.com/simonw/datasette/issues/904#issuecomment-712355877,https://api.github.com/repos/simonw/datasette/issues/904,712355877,MDEyOklzc3VlQ29tbWVudDcxMjM1NTg3Nw==,9599,simonw,2020-10-19T18:17:22Z,2020-10-19T18:17:22Z,OWNER,I quite like `datasette.urls.table()` but I'm not sure why.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-712355706,https://api.github.com/repos/simonw/datasette/issues/904,712355706,MDEyOklzc3VlQ29tbWVudDcxMjM1NTcwNg==,9599,simonw,2020-10-19T18:17:03Z,2020-10-19T18:17:03Z,OWNER,"Options:

- `datasette.urls.instance()` (or `datasette.urls.root()`), `datasette.urls.table()` etc

- `datasette.url_for.instance()`...

- `datasette.url.instance()`...

- `datasette.root_url()`, `datasette.table_url()`...","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-712354600,https://api.github.com/repos/simonw/datasette/issues/904,712354600,MDEyOklzc3VlQ29tbWVudDcxMjM1NDYwMA==,9599,simonw,2020-10-19T18:15:03Z,2020-10-19T18:15:39Z,OWNER,"Related: #1026 (How should datasette.client interact with base_url)

Also this comment from https://github.com/simonw/datasette/issues/943#issuecomment-675752436

> One thing to consider here: Datasette's table and database name escaping rules can be a little bit convoluted.

>

> If a plugin wants to get back the first five rows of a table, it will need to construct a URL `/dbname/tablename?_size=5` - but it will need to know how to turn the database and table names into the correctly escaped `dbname` and `tablename` values.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-712324077,https://api.github.com/repos/simonw/datasette/issues/904,712324077,MDEyOklzc3VlQ29tbWVudDcxMjMyNDA3Nw==,9599,simonw,2020-10-19T17:39:38Z,2020-10-19T17:39:38Z,OWNER,"If I do these methods I think this should be available on the `datasette` object too, as an internal API for plugins to use to construct redirects and suchlike.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-710487083,https://api.github.com/repos/simonw/datasette/issues/904,710487083,MDEyOklzc3VlQ29tbWVudDcxMDQ4NzA4Mw==,9599,simonw,2020-10-16T19:34:10Z,2020-10-19T17:39:04Z,OWNER,"Alternatively, I could expose a single object that knows how to construct all kinds of URLs. Something like this:

```

{{ urls.instance() }}

{{ urls.database(database_name) }}

{{ urls.table(database_name, table_name) }}

etc

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/1027#issuecomment-712320103,https://api.github.com/repos/simonw/datasette/issues/1027,712320103,MDEyOklzc3VlQ29tbWVudDcxMjMyMDEwMw==,9599,simonw,2020-10-19T17:35:04Z,2020-10-19T17:35:04Z,OWNER,Still need to configure proxying though. https://www.netnea.com/cms/apache-tutorial-9_setting-up-a-reverse-proxy/,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722758132,Add documentation on serving Datasette behind a proxy using base_url,

https://github.com/simonw/datasette/issues/991#issuecomment-712317638,https://api.github.com/repos/simonw/datasette/issues/991,712317638,MDEyOklzc3VlQ29tbWVudDcxMjMxNzYzOA==,9599,simonw,2020-10-19T17:30:56Z,2020-10-19T17:30:56Z,OWNER,https://biglocal.datasettes.com/ is one of my larger Datasettes in terms of number of databases.,"{""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",714377268,Redesign application homepage,

https://github.com/dogsheep/dogsheep-beta/issues/29#issuecomment-712266834,https://api.github.com/repos/dogsheep/dogsheep-beta/issues/29,712266834,MDEyOklzc3VlQ29tbWVudDcxMjI2NjgzNA==,9599,simonw,2020-10-19T16:01:23Z,2020-10-19T16:01:23Z,MEMBER,Might just be a documented pattern for how to configure this in YAML templates.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724759588,Add search highlighting snippets,

https://github.com/simonw/datasette/pull/1030#issuecomment-711407607,https://api.github.com/repos/simonw/datasette/issues/1030,711407607,MDEyOklzc3VlQ29tbWVudDcxMTQwNzYwNw==,22429695,codecov[bot],2020-10-18T19:31:31Z,2020-10-19T08:01:51Z,NONE,"# [Codecov](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=h1) Report

> Merging [#1030](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=desc) into [main](https://codecov.io/gh/simonw/datasette/commit/568bd7bbf590861687db8c318f3d8cfcd1dfb47a?el=desc) will **decrease** coverage by `0.10%`.

> The diff coverage is `68.75%`.

```diff

@@            Coverage Diff             @@
##             main    #1030      +/-   ##
==========================================
- Coverage   84.63%   84.53%   -0.11%
==========================================
  Files          28       28
  Lines        3892     3905      +13
==========================================
+ Hits         3294     3301       +7
- Misses        598      604       +6

```

| [Impacted Files](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [datasette/utils/\_\_init\_\_.py](https://codecov.io/gh/simonw/datasette/pull/1030/diff?src=pr&el=tree#diff-ZGF0YXNldHRlL3V0aWxzL19faW5pdF9fLnB5) | `93.35% <68.75%> (-0.79%)` | :arrow_down: |
| [datasette/views/index.py](https://codecov.io/gh/simonw/datasette/pull/1030/diff?src=pr&el=tree#diff-ZGF0YXNldHRlL3ZpZXdzL2luZGV4LnB5) | `96.49% <0.00%> (-1.76%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=footer). Last update [568bd7b...e082533](https://codecov.io/gh/simonw/datasette/pull/1030?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723982480,Make `package` command deal with a configuration directory argument,

https://github.com/simonw/datasette/pull/1031#issuecomment-711792622,https://api.github.com/repos/simonw/datasette/issues/1031,711792622,MDEyOklzc3VlQ29tbWVudDcxMTc5MjYyMg==,22429695,codecov[bot],2020-10-19T07:57:17Z,2020-10-19T07:57:17Z,NONE,"# [Codecov](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=h1) Report

> Merging [#1031](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=desc) into [main](https://codecov.io/gh/simonw/datasette/commit/568bd7bbf590861687db8c318f3d8cfcd1dfb47a?el=desc) will **decrease** coverage by `0.02%`.

> The diff coverage is `n/a`.

```diff

@@            Coverage Diff             @@
##             main    #1031      +/-   ##
==========================================
- Coverage   84.63%   84.60%   -0.03%
==========================================
  Files          28       28
  Lines        3892     3892
==========================================
- Hits         3294     3293       -1
- Misses        598      599       +1

```

| [Impacted Files](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [datasette/cli.py](https://codecov.io/gh/simonw/datasette/pull/1031/diff?src=pr&el=tree#diff-ZGF0YXNldHRlL2NsaS5weQ==) | `74.22% <ø> (ø)` | |
| [datasette/views/index.py](https://codecov.io/gh/simonw/datasette/pull/1031/diff?src=pr&el=tree#diff-ZGF0YXNldHRlL3ZpZXdzL2luZGV4LnB5) | `96.49% <0.00%> (-1.76%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=footer). Last update [568bd7b...7e7eaa4](https://codecov.io/gh/simonw/datasette/pull/1031?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",724369025,Fallback to databases in inspect-data.json when no -i options are passed,

https://github.com/dogsheep/github-to-sqlite/issues/50#issuecomment-711569063,https://api.github.com/repos/dogsheep/github-to-sqlite/issues/50,711569063,MDEyOklzc3VlQ29tbWVudDcxMTU2OTA2Mw==,9599,simonw,2020-10-19T05:01:29Z,2020-10-19T05:01:29Z,MEMBER,"Demo of `--accept`:

    github-to-sqlite get /repos/simonw/datasette/readme --accept 'application/vnd.github.VERSION.html'

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",703218756,Commands for making authenticated API calls,

https://github.com/dogsheep/dogsheep-beta/issues/28#issuecomment-711089647,https://api.github.com/repos/dogsheep/dogsheep-beta/issues/28,711089647,MDEyOklzc3VlQ29tbWVudDcxMTA4OTY0Nw==,9599,simonw,2020-10-17T22:43:13Z,2020-10-17T22:43:13Z,MEMBER,"Since my personal Dogsheep uses Datasette authentication, I'm going to need to pass through cookies. https://github.com/simonw/datasette/issues/1020 will solve that in the future but for now I need to solve it explicitly.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723861683,Switch to using datasette.client,

https://github.com/dogsheep/healthkit-to-sqlite/issues/11#issuecomment-711083698,https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/11,711083698,MDEyOklzc3VlQ29tbWVudDcxMTA4MzY5OA==,572,jarib,2020-10-17T21:39:15Z,2020-10-17T21:39:15Z,NONE,Nice! Works perfectly. Thanks for the quick response and great tooling in general.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723838331,export.xml file name varies with different language settings,

https://github.com/dogsheep/healthkit-to-sqlite/issues/11#issuecomment-711081703,https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/11,711081703,MDEyOklzc3VlQ29tbWVudDcxMTA4MTcwMw==,9599,simonw,2020-10-17T21:18:35Z,2020-10-17T21:18:35Z,MEMBER,"OK, if you upgrade to the just-released 1.0 this should work (it worked against my Spanish export).","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723838331,export.xml file name varies with different language settings,

https://github.com/dogsheep/healthkit-to-sqlite/issues/11#issuecomment-711079760,https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/11,711079760,MDEyOklzc3VlQ29tbWVudDcxMTA3OTc2MA==,9599,simonw,2020-10-17T21:00:05Z,2020-10-17T21:00:05Z,MEMBER,Checking for either `

""

TEMPLATE = """"""

{}

"""""".strip()

EN_MEDIA_SCRIPT = """"""
Array.from(document.querySelectorAll('en-media')).forEach(el => {
    let hash = el.getAttribute('hash');
    let type = el.getAttribute('type');
    let path = `/evernote/resources_data/${hash}.json?_shape=array`;
    fetch(path).then(r => r.json()).then(rows => {
        let b64 = rows[0].data.encoded;
        let data = `data:${type};base64,${b64}`;
        el.innerHTML = `![]() `;
    });
});
""""""

@hookimpl
def render_cell(value, table):
    if not table:
        # Don't render content from arbitrary SQL queries, could be XSS hole
        return
    if not value or not isinstance(value, str):
        return
    value = value.strip()
    if value.startswith(START) and value.endswith(END):
        trimmed = value[len(START) : -len(END)]
        trimmed = trimmed.split("">"", 1)[1]
        # Replace those horrible double newlines
        trimmed = trimmed.replace(""

"", ""

"")
        return jinja2.Markup(TEMPLATE.format(trimmed))

@hookimpl
def extra_body_script():
    return EN_MEDIA_SCRIPT

```

It works!

It does however demonstrate that Evernote's ""clip this webpage"" feature means there is a LOT of weird HTML that can get into a note. It looks like they've filtered out the scripts but I wouldn't bet on it - they certainly don't filter out many of the inline styles. So running Bleach is almost certainly a good idea.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",718938889,Figure out how to display images from tags inline in Datasette,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710461468,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710461468,MDEyOklzc3VlQ29tbWVudDcxMDQ2MTQ2OA==,9599,simonw,2020-10-16T19:18:19Z,2020-10-16T19:18:19Z,OWNER,"Reconsidering: #89 was a feature request that relates to this, so maybe this is worth implementing after all.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/89#issuecomment-710460242,https://api.github.com/repos/simonw/sqlite-utils/issues/89,710460242,MDEyOklzc3VlQ29tbWVudDcxMDQ2MDI0Mg==,9599,simonw,2020-10-16T19:17:27Z,2020-10-16T19:17:50Z,OWNER,"I came up with potential syntax for that here: https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710393550 - based on how `table.extract(...)` works:

```python

fresh_db.table(""tree"", extracts=[Extract(
    columns=(""CommonName"", ""LatinName""),
    table=""Species"",
    fk_column=""species_id"",
    rename={""CommonName"": ""name"", ""LatinName"": ""latin""}
)])

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",573578548,Ability to customize columns used by extracts= feature,

https://github.com/simonw/sqlite-utils/issues/187#issuecomment-710456981,https://api.github.com/repos/simonw/sqlite-utils/issues/187,710456981,MDEyOklzc3VlQ29tbWVudDcxMDQ1Njk4MQ==,9599,simonw,2020-10-16T19:15:13Z,2020-10-16T19:15:13Z,OWNER,This is a duplicate of #79.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723460107,Maybe: Utility method / CLI tool for initializing SpatiaLite,

https://github.com/simonw/sqlite-utils/issues/187#issuecomment-710440853,https://api.github.com/repos/simonw/sqlite-utils/issues/187,710440853,MDEyOklzc3VlQ29tbWVudDcxMDQ0MDg1Mw==,9599,simonw,2020-10-16T19:04:19Z,2020-10-16T19:04:19Z,OWNER,I split this off from #136.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",723460107,Maybe: Utility method / CLI tool for initializing SpatiaLite,

https://github.com/simonw/sqlite-utils/issues/136#issuecomment-710428802,https://api.github.com/repos/simonw/sqlite-utils/issues/136,710428802,MDEyOklzc3VlQ29tbWVudDcxMDQyODgwMg==,9599,simonw,2020-10-16T18:56:47Z,2020-10-16T18:56:47Z,OWNER,"To keep the code cleaner, I'm tempted to support this instead:

    --load-extension=spatialite

Where `spatialite` is a special shortcut value that triggers a search for that module in known locations.

Users could still load a module in a file called `spatialite` in the current directory using:

    --load-extension=./spatialite

In fact, `--load-extension=spatialite` could handle that case too by always checking for a file called `spatialite` before attempting to search for it in known locations.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",683812642,--load-extension=spatialite shortcut option,
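The lookup order proposed above can be sketched roughly as follows. The function name `resolve_extension`, the `exists` parameter and the two candidate paths are assumptions made for illustration; the comment above does not say which locations would be searched.

```python
import os

# Hypothetical search locations, for illustration only
KNOWN_SPATIALITE_PATHS = (
    '/usr/lib/x86_64-linux-gnu/mod_spatialite.so',
    '/usr/local/lib/mod_spatialite.dylib',
)


def resolve_extension(value, known_paths=KNOWN_SPATIALITE_PATHS, exists=os.path.exists):
    # A file with that exact name always wins, so a local file called
    # spatialite (or ./spatialite) keeps working
    if exists(value):
        return value
    # Only the special shortcut value triggers the search
    if value == 'spatialite':
        for candidate in known_paths:
            if exists(candidate):
                return candidate
    # Nothing matched: hand the value back unchanged and let the
    # eventual load attempt report the error
    return value
```

Passing `exists` as a parameter is only there so the lookup order can be exercised without touching the real filesystem.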

https://github.com/simonw/sqlite-utils/issues/69#issuecomment-710405658,https://api.github.com/repos/simonw/sqlite-utils/issues/69,710405658,MDEyOklzc3VlQ29tbWVudDcxMDQwNTY1OA==,9599,simonw,2020-10-16T18:42:48Z,2020-10-16T18:42:48Z,OWNER,"Did some work on this for #134 in 7e9aad7e1c09d1cf80d0b4d17d6157212a4b857d

I still need to add `--load-extension` to other CLI methods, see #137. Closing this issue in favour of that one.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",534507142,Feature request: enable extensions loading,

https://github.com/simonw/sqlite-utils/issues/48#issuecomment-710402331,https://api.github.com/repos/simonw/sqlite-utils/issues/48,710402331,MDEyOklzc3VlQ29tbWVudDcxMDQwMjMzMQ==,9599,simonw,2020-10-16T18:41:06Z,2020-10-16T18:41:06Z,OWNER,I could use this demo from JupyterCon 2020 https://gist.github.com/simonw/656c21b5800d5e4624dec9930f00e093,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",471818939,"Jupyter notebook demo of the library, launchable on Binder",

https://github.com/simonw/sqlite-utils/issues/58#issuecomment-710399593,https://api.github.com/repos/simonw/sqlite-utils/issues/58,710399593,MDEyOklzc3VlQ29tbWVudDcxMDM5OTU5Mw==,9599,simonw,2020-10-16T18:39:31Z,2020-10-16T18:39:31Z,OWNER,I don't think this is valuable enough to justify adding to the library - especially since you can execute FTS search against views by joining to an FTS table built against an underlying table.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",488293926,Support enabling FTS on views,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710397574,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710397574,MDEyOklzc3VlQ29tbWVudDcxMDM5NzU3NA==,9599,simonw,2020-10-16T18:38:21Z,2020-10-16T18:38:21Z,OWNER,"I'm not going to implement this. I'll leave `extracts=...` as it is right now, suitable for quick simple single-column operations on input, but if users want to do something more complicated involving multiple columns they should use the `table.extract()` method after the initial insert instead.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710395444,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710395444,MDEyOklzc3VlQ29tbWVudDcxMDM5NTQ0NA==,9599,simonw,2020-10-16T18:37:10Z,2020-10-16T18:37:10Z,OWNER,"But this begins to feel too complicated, given that `table.extract()` can already be used to achieve the same thing.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710393550,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710393550,MDEyOklzc3VlQ29tbWVudDcxMDM5MzU1MA==,9599,simonw,2020-10-16T18:35:57Z,2020-10-16T18:36:39Z,OWNER,"If I want to support that most complicated example, I think the option to pass an `Extract()` object to `extracts=` is the best way to do it:

```python

fresh_db.table(""tree"", extracts=[Extract(
    columns=(""CommonName"", ""LatinName""),
    table=""Species"",
    fk_column=""species_id"",
    rename={""CommonName"": ""name"", ""LatinName"": ""latin""}
)])

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710390915,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710390915,MDEyOklzc3VlQ29tbWVudDcxMDM5MDkxNQ==,9599,simonw,2020-10-16T18:34:26Z,2020-10-16T18:34:50Z,OWNER,"Here's the most complex example of `.extract()`:

```python

db[""Trees""].extract(
    [""CommonName"", ""LatinName""],
    table=""Species"",
    fk_column=""species_id"",
    rename={""CommonName"": ""name"", ""LatinName"": ""latin""}
)

```

Resulting in:

```sql

CREATE TABLE [Species] (
   [id] INTEGER PRIMARY KEY,
   [name] TEXT,
   [latin] TEXT
)

```

From https://sqlite-utils.readthedocs.io/en/stable/python-api.html#extracting-columns-into-a-separate-table","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710364942,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710364942,MDEyOklzc3VlQ29tbWVudDcxMDM2NDk0Mg==,9599,simonw,2020-10-16T18:18:48Z,2020-10-16T18:18:48Z,OWNER,"I think there is. It's a nice existing feature, and I don't think adding tuple support to it would be a huge lift.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710363789,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710363789,MDEyOklzc3VlQ29tbWVudDcxMDM2Mzc4OQ==,9599,simonw,2020-10-16T18:18:05Z,2020-10-16T18:18:05Z,OWNER,I wonder if there's value in extending the `extracts=` option at all given the existence of `table.extract()`.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710359724,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710359724,MDEyOklzc3VlQ29tbWVudDcxMDM1OTcyNA==,9599,simonw,2020-10-16T18:15:31Z,2020-10-16T18:15:31Z,OWNER,"Using a tuple would work:

```python

fresh_db.table(""tree"", extracts=[(""common_name"", ""latin_name"")])

```

Or to define a custom name:

```python

fresh_db.table(""tree"", extracts={(""common_name"", ""latin_name""): ""names""})

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/49#issuecomment-710346830,https://api.github.com/repos/simonw/sqlite-utils/issues/49,710346830,MDEyOklzc3VlQ29tbWVudDcxMDM0NjgzMA==,9599,simonw,2020-10-16T18:08:52Z,2020-10-16T18:09:21Z,OWNER,"The new `.extract()` method can handle multiple columns:

https://github.com/simonw/sqlite-utils/blob/2c541fac352632e23e40b0d21e3f233f7a744a57/tests/test_extract.py#L70-L87","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",472115381,extracts= should support multiple-column extracts,

https://github.com/simonw/sqlite-utils/issues/182#issuecomment-710258736,https://api.github.com/repos/simonw/sqlite-utils/issues/182,710258736,MDEyOklzc3VlQ29tbWVudDcxMDI1ODczNg==,9599,simonw,2020-10-16T17:20:41Z,2020-10-16T17:20:41Z,OWNER,Documentation: https://sqlite-utils.readthedocs.io/en/latest/cli.html#inserting-csv-or-tsv-data,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",711649325,"Better handling of encodings other than utf-8 for ""sqlite-utils insert""",

https://github.com/simonw/sqlite-utils/issues/186#issuecomment-710198162,https://api.github.com/repos/simonw/sqlite-utils/issues/186,710198162,MDEyOklzc3VlQ29tbWVudDcxMDE5ODE2Mg==,9599,simonw,2020-10-16T16:41:00Z,2020-10-16T16:41:00Z,OWNER,"Failing test:

```python

def test_extract_null_values(fresh_db):
    fresh_db[""species""].insert({""id"": 1, ""species"": ""Wolf""}, pk=""id"")
    fresh_db[""individuals""].insert_all(
        [
            {""id"": 10, ""name"": ""Terriana"", ""species"": ""Fox""},
            {""id"": 11, ""name"": ""Spenidorm"", ""species"": None},
            {""id"": 12, ""name"": ""Grantheim"", ""species"": ""Wolf""},
            {""id"": 13, ""name"": ""Turnutopia"", ""species"": None},
            {""id"": 14, ""name"": ""Wargal"", ""species"": ""Wolf""},
        ],
        pk=""id"",
    )
    fresh_db[""individuals""].extract(""species"")
    assert fresh_db[""species""].schema == (
        ""CREATE TABLE [species] (\n""
        "" [id] INTEGER PRIMARY KEY,\n""
        "" [species] TEXT\n""
        "")""
    )
    assert fresh_db[""individuals""].schema == (
        'CREATE TABLE ""individuals"" (\n'
        "" [id] INTEGER PRIMARY KEY,\n""
        "" [name] TEXT,\n""
        "" [species_id] INTEGER,\n""
        "" FOREIGN KEY(species_id) REFERENCES species(id)\n""
        "")""
    )
    assert list(fresh_db[""species""].rows) == [
        {""id"": 1, ""species"": ""Wolf""},
        {""id"": 2, ""species"": ""Fox""},
    ]
    assert list(fresh_db[""individuals""].rows) == [
        {""id"": 10, ""name"": ""Terriana"", ""species_id"": 2},
        {""id"": 11, ""name"": ""Spenidorm"", ""species_id"": None},
        {""id"": 12, ""name"": ""Grantheim"", ""species_id"": 1},
        {""id"": 13, ""name"": ""Turnutopia"", ""species_id"": None},
        {""id"": 14, ""name"": ""Wargal"", ""species_id"": 1},
    ]

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722816436,.extract() shouldn't extract null values,

https://github.com/simonw/sqlite-utils/issues/182#issuecomment-710178871,https://api.github.com/repos/simonw/sqlite-utils/issues/182,710178871,MDEyOklzc3VlQ29tbWVudDcxMDE3ODg3MQ==,9599,simonw,2020-10-16T16:27:39Z,2020-10-16T16:28:14Z,OWNER,"The file is opened for me by `click.File()`, which also handles things like `-` for stdin. But I need to be able to switch the encoding used to read from it based on the `--encoding` option.

I think the way to do that is to open the file in binary mode and then wrap it in a codec reader:

```python

fp = codecs.getreader(encoding)(fp)

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",711649325,"Better handling of encodings other than utf-8 for ""sqlite-utils insert""",

https://github.com/simonw/sqlite-utils/issues/186#issuecomment-709706260,https://api.github.com/repos/simonw/sqlite-utils/issues/186,709706260,MDEyOklzc3VlQ29tbWVudDcwOTcwNjI2MA==,9599,simonw,2020-10-16T03:17:02Z,2020-10-16T03:17:17Z,OWNER,Actually I think this should be an option to `.extract()` which controls if nulls are extracted or left alone. Maybe called `extract_nulls=True/False`.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722816436,.extract() shouldn't extract null values,

https://github.com/simonw/sqlite-utils/issues/186#issuecomment-709706065,https://api.github.com/repos/simonw/sqlite-utils/issues/186,709706065,MDEyOklzc3VlQ29tbWVudDcwOTcwNjA2NQ==,9599,simonw,2020-10-16T03:16:22Z,2020-10-16T03:16:22Z,OWNER,Either way I think I'm going to need to add some SQL which uses `where a = b or (a is null and b is null)`.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722816436,.extract() shouldn't extract null values,

https://github.com/simonw/sqlite-utils/issues/186#issuecomment-709705885,https://api.github.com/repos/simonw/sqlite-utils/issues/186,709705885,MDEyOklzc3VlQ29tbWVudDcwOTcwNTg4NQ==,9599,simonw,2020-10-16T03:15:39Z,2020-10-16T03:15:39Z,OWNER,The alternative solution here would be that a single `null` value DOES get extracted. To implement this I would need to add some logic that uses `is null` instead of `=`.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722816436,.extract() shouldn't extract null values,

https://github.com/simonw/sqlite-utils/issues/186#issuecomment-709705624,https://api.github.com/repos/simonw/sqlite-utils/issues/186,709705624,MDEyOklzc3VlQ29tbWVudDcwOTcwNTYyNA==,9599,simonw,2020-10-16T03:14:39Z,2020-10-16T03:14:39Z,OWNER,"How should this work with extractions covering multiple columns?

If there's a single column then it makes sense that a `null` value would not be extracted into the lookup table, but would instead stay as `null`.

For a multiple column extraction, provided at least one of those columns is not null, it should map to a record in the lookup table. Only if ALL of the extracted columns are null should the lookup value stay null.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722816436,.extract() shouldn't extract null values,

https://github.com/simonw/datasette/issues/1027#issuecomment-709647525,https://api.github.com/repos/simonw/datasette/issues/1027,709647525,MDEyOklzc3VlQ29tbWVudDcwOTY0NzUyNQ==,9599,simonw,2020-10-15T23:49:51Z,2020-10-15T23:51:39Z,OWNER,"I'll install Apache on macOS to figure this out using https://formulae.brew.sh/formula/httpd

`brew install httpd` output this at the end:

```

==> httpd

DocumentRoot is /usr/local/var/www.

The default ports have been set in /usr/local/etc/httpd/httpd.conf to 8080 and in

/usr/local/etc/httpd/extra/httpd-ssl.conf to 8443 so that httpd can run without sudo.

To have launchd start httpd now and restart at login:

brew services start httpd

Or, if you don't want/need a background service you can just run:

apachectl start

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722758132,Add documentation on serving Datasette behind a proxy using base_url,

https://github.com/simonw/datasette/issues/1027#issuecomment-709646865,https://api.github.com/repos/simonw/datasette/issues/1027,709646865,MDEyOklzc3VlQ29tbWVudDcwOTY0Njg2NQ==,9599,simonw,2020-10-15T23:47:08Z,2020-10-15T23:47:08Z,OWNER,It should cover both nginx and Apache. nginx config is here: https://github.com/simonw/datasette/issues/1024#issuecomment-709598324,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722758132,Add documentation on serving Datasette behind a proxy using base_url,

https://github.com/simonw/datasette/issues/1026#issuecomment-709636372,https://api.github.com/repos/simonw/datasette/issues/1026,709636372,MDEyOklzc3VlQ29tbWVudDcwOTYzNjM3Mg==,9599,simonw,2020-10-15T23:09:34Z,2020-10-15T23:09:34Z,OWNER,"I'm inclined to say that internal requests should ignore `base_url` - since that seems like the right thing for plugins that need to access default Datasette APIs.

The one catch here is plugins that might want to proxy the current incoming URL for some reason - where that incoming `request.path` could include the `base_url`.

Actually those should be fine - because it will have been stripped off earlier:

https://github.com/simonw/datasette/blob/4f7c0ebd85ccd8c1853d7aa0147628f7c1b749cc/datasette/app.py#L963-L968","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722738988,How should datasette.client interact with base_url,

https://github.com/simonw/datasette/issues/904#issuecomment-709635276,https://api.github.com/repos/simonw/datasette/issues/904,709635276,MDEyOklzc3VlQ29tbWVudDcwOTYzNTI3Ng==,9599,simonw,2020-10-15T23:05:54Z,2020-10-15T23:05:54Z,OWNER,Could have `instance_url()` take an optional path argument which is then turned into the correct path.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-709635021,https://api.github.com/repos/simonw/datasette/issues/904,709635021,MDEyOklzc3VlQ29tbWVudDcwOTYzNTAyMQ==,9599,simonw,2020-10-15T23:05:11Z,2020-10-15T23:05:11Z,OWNER,"I think this should be a family of functions:

- `instance_url()` - the root URL of the instance (usually `/` unless `base_url` is set)

- `database_url(database_name)` - already got this

- `table_url(database_name, table_name)`

- `row_url(database_name, table_name, row)` - not sure about this one. The idea would be for `row` to be correctly turned into a URL by introspecting the primary keys for that table, then pulling those values out of the SQLite `row` object. Might not be necessary though.
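
A rough sketch of how that family could fit together, assuming each function is handed a plain `base_url` string instead of reading it from a `datasette` instance. The `base_url` and `pks` parameters, the percent-encoding and the comma-joined primary key path are all illustrative guesses here, not the current implementation:

```python
from urllib.parse import quote


def instance_url(base_url='/'):
    # Root URL of the instance, normalised to start and end with a slash.
    prefix = base_url.strip('/')
    if not prefix:
        return '/'
    return '/' + prefix + '/'


def database_url(database_name, base_url='/'):
    return instance_url(base_url) + quote(database_name, safe='')


def table_url(database_name, table_name, base_url='/'):
    return database_url(database_name, base_url) + '/' + quote(table_name, safe='')


def row_url(database_name, table_name, row, pks, base_url='/'):
    # pks is a hypothetical list of primary key column names, standing in
    # for introspecting the table; row is any mapping of column to value.
    pk_path = ','.join(quote(str(row[pk]), safe='') for pk in pks)
    return table_url(database_name, table_name, base_url) + '/' + pk_path
```

Building each function on top of the previous one means `base_url` only has to be handled in one place, in `instance_url()`.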

I also need a way for plugins to link to e.g. `/-/configure-fts` - or even `/-/configure-fts/database-name/table-name`. What should that look like?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-709634261,https://api.github.com/repos/simonw/datasette/issues/904,709634261,MDEyOklzc3VlQ29tbWVudDcwOTYzNDI2MQ==,9599,simonw,2020-10-15T23:02:43Z,2020-10-15T23:02:43Z,OWNER,"Here's the current implementation of `database_url` - on the `BaseView` class, but only because it needs access to a `datasette` instance (to read `base_url`):

https://github.com/simonw/datasette/blob/8f97b9b58e77f82fef1f10e9c9f6754b993544b6/datasette/views/base.py#L102-L108","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/904#issuecomment-709633823,https://api.github.com/repos/simonw/datasette/issues/904,709633823,MDEyOklzc3VlQ29tbWVudDcwOTYzMzgyMw==,9599,simonw,2020-10-15T23:01:13Z,2020-10-15T23:01:13Z,OWNER,Tracking ticket: #1023,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",663228985,"datasette.urls.table() / .instance() / .database() methods for constructing URLs, also exposed to templates",

https://github.com/simonw/datasette/issues/988#issuecomment-709633762,https://api.github.com/repos/simonw/datasette/issues/988,709633762,MDEyOklzc3VlQ29tbWVudDcwOTYzMzc2Mg==,9599,simonw,2020-10-15T23:01:01Z,2020-10-15T23:01:01Z,OWNER,This is a dupe of https://github.com/simonw/datasette/issues/904,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",713209404,Mechanism for plugins to construct URLs that respect base_url,

https://github.com/simonw/datasette/issues/865#issuecomment-709633080,https://api.github.com/repos/simonw/datasette/issues/865,709633080,MDEyOklzc3VlQ29tbWVudDcwOTYzMzA4MA==,9599,simonw,2020-10-15T22:58:51Z,2020-10-15T22:58:51Z,OWNER,"It looks like there are places where Datasette might return a redirect that doesn't take `base_url` into account - I'm planning on fixing those here, after which I think `ProxyPassReverse` should no longer be necessary. https://github.com/simonw/datasette/issues/1025#issuecomment-709632136","{""total_count"": 1, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 1, ""rocket"": 0, ""eyes"": 0}",644582921,"base_url doesn't seem to work when adding criteria and clicking ""apply""",

https://github.com/simonw/datasette/issues/900#issuecomment-709632765,https://api.github.com/repos/simonw/datasette/issues/900,709632765,MDEyOklzc3VlQ29tbWVudDcwOTYzMjc2NQ==,9599,simonw,2020-10-15T22:57:55Z,2020-10-15T22:57:55Z,OWNER,"I believe this particular bug has been fixed, based on my testing here: https://github.com/simonw/datasette/issues/1024#issuecomment-709622973

Please re-open the ticket if you are still experiencing it.

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",661605489,Some links don't honor base_url,

https://github.com/simonw/datasette/issues/1025#issuecomment-709632314,https://api.github.com/repos/simonw/datasette/issues/1025,709632314,MDEyOklzc3VlQ29tbWVudDcwOTYzMjMxNA==,9599,simonw,2020-10-15T22:56:25Z,2020-10-15T22:56:34Z,OWNER,"That `utils/asgi.py` line is the default path for setting cookies. That should likely take `base_url` into account too:

https://github.com/simonw/datasette/blob/4f7c0ebd85ccd8c1853d7aa0147628f7c1b749cc/datasette/utils/asgi.py#L331-L342","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",722724086,"Fix last remaining links to ""/"" that do not respect base_url",

https://github.com/simonw/datasette/issues/1025#issuecomment-709632136,https://api.github.com/repos/simonw/datasette/issues/1025,709632136,MDEyOklzc3VlQ29tbWVudDcwOTYzMjEzNg==,9599,simonw,2020-10-15T22:55:44Z,2020-10-15T22:55:44Z,OWNER,"It looks like there are also some generated redirect responses that don't take `base_url` into account:

```