issue_comments

32 rows where "created_at" is on date 2022-03-05 sorted by updated_at descending

user 4

- simonw 29

- eyeseast 1

- zaneselvans 1

- codecov[bot] 1

| id | html_url | issue_url | node_id | user | created_at | updated_at ▲ | author_association | body | reactions | issue | performed_via_github_app |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1059823151 | https://github.com/simonw/datasette/pull/1648#issuecomment-1059823151 | https://api.github.com/repos/simonw/datasette/issues/1648 | IC_kwDOBm6k_c4_K54v | codecov[bot] 22429695 | 2022-03-05T19:56:41Z | 2022-03-07T15:38:08Z | NONE | Codecov Report

```diff
@@ Coverage Diff @@
main #1648 +/-
==========================================
+ Coverage 92.03% 92.05% +0.02%
```

| Impacted Files | Coverage Δ | |
|---|---|---|
| datasette/url_builder.py | | |

Continue to review full report at Codecov.

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Use dash encoding for table names and row primary keys in URLs 1160432941 | |

| 1059854864 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059854864 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_LBoQ | simonw 9599 | 2022-03-05T23:59:05Z | 2022-03-05T23:59:05Z | OWNER | OK, for that percentage thing: the Python core implementation of URL percentage escaping deliberately ignores two of the characters we want to escape:

I'm going to try borrowing and modifying the core of the Python implementation: https://github.com/python/cpython/blob/6927632492cbad86a250aa006c1847e03b03e70b/Lib/urllib/parse.py#L795-L814

```python
class _Quoter(dict):
    """A mapping from bytes numbers (in range(0,256)) to strings.

    String values are percent-encoded byte values, unless the key < 128, and
    in either of the specified safe set, or the always safe set.
    """
    # Keeps a cache internally, via __missing__, for efficiency (lookups
    # of cached keys don't call Python code at all).
    def __init__(self, safe):
        """safe: bytes object."""
        self.safe = _ALWAYS_SAFE.union(safe)
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059853526 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059853526 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_LBTW | simonw 9599 | 2022-03-05T23:49:59Z | 2022-03-05T23:49:59Z | OWNER | I want to try regular percentage encoding, except that it also encodes both the Should check what it does with emoji too. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059851259 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059851259 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_LAv7 | simonw 9599 | 2022-03-05T23:35:47Z | 2022-03-05T23:35:59Z | OWNER | This comment from glyph got me thinking:

What happens if a table name includes a I should consider |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059850369 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059850369 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_LAiB | simonw 9599 | 2022-03-05T23:28:56Z | 2022-03-05T23:28:56Z | OWNER | Lots of great conversations about the dash encoding implementation on Twitter: https://twitter.com/simonw/status/1500228316309061633 @dracos helped me figure out a simpler regex: https://twitter.com/dracos/status/1500236433809973248

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059836599 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059836599 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_K9K3 | simonw 9599 | 2022-03-05T21:52:10Z | 2022-03-05T21:52:10Z | OWNER | Blogged about this here: https://simonwillison.net/2022/Mar/5/dash-encoding/ |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059823119 | https://github.com/simonw/datasette/issues/1647#issuecomment-1059823119 | https://api.github.com/repos/simonw/datasette/issues/1647 | IC_kwDOBm6k_c4_K54P | simonw 9599 | 2022-03-05T19:56:27Z | 2022-03-05T19:56:27Z | OWNER | Updated this TIL with extra patterns I figured out: https://til.simonwillison.net/sqlite/ld-preload |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Test failures with SQLite 3.37.0+ due to column affinity case 1160407071 | |

| 1059822391 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059822391 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_K5s3 | simonw 9599 | 2022-03-05T19:50:12Z | 2022-03-05T19:50:12Z | OWNER | I'm going to move this work to a PR. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059822151 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059822151 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_K5pH | simonw 9599 | 2022-03-05T19:48:35Z | 2022-03-05T19:48:35Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | ||

| 1059821674 | https://github.com/simonw/datasette/issues/1647#issuecomment-1059821674 | https://api.github.com/repos/simonw/datasette/issues/1647 | IC_kwDOBm6k_c4_K5hq | simonw 9599 | 2022-03-05T19:44:32Z | 2022-03-05T19:44:32Z | OWNER | I thought I'd need to introduce https://dirty-equals.helpmanual.io/types/string/ to help write tests for this, but I think I've found a good alternative that doesn't need a new dependency. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Test failures with SQLite 3.37.0+ due to column affinity case 1160407071 | |

| 1059819628 | https://github.com/simonw/datasette/issues/1647#issuecomment-1059819628 | https://api.github.com/repos/simonw/datasette/issues/1647 | IC_kwDOBm6k_c4_K5Bs | simonw 9599 | 2022-03-05T19:28:54Z | 2022-03-05T19:28:54Z | OWNER | OK, using that trick worked for testing this: Then inside that container: For each version of SQLite I wanted to test I needed to figure out the tarball URL - for example, for Then to build it (the After some trial and error I proved that those tests passed with 3.36.0:

3.37.0

```
cd /tmp
wget https://www.sqlite.org/src/tarball/bd41822c/SQLite-bd41822c.tar.gz
tar -xzvf SQLite-bd41822c.tar.gz
cd SQLite-bd41822c
CPPFLAGS="-DSQLITE_ENABLE_FTS3 -DSQLITE_ENABLE_FTS3_PARENTHESIS -DSQLITE_ENABLE_RTREE=1" ./configure
make
cd /tmp/datasette
LD_PRELOAD=/tmp/SQLite-bd41822c/.libs/libsqlite3.so pytest tests/test_internals_database.py
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Test failures with SQLite 3.37.0+ due to column affinity case 1160407071 | |

| 1059807598 | https://github.com/simonw/datasette/issues/1647#issuecomment-1059807598 | https://api.github.com/repos/simonw/datasette/issues/1647 | IC_kwDOBm6k_c4_K2Fu | simonw 9599 | 2022-03-05T18:06:56Z | 2022-03-05T18:08:00Z | OWNER | Had a look through the commits in https://github.com/sqlite/sqlite/compare/version-3.37.2...version-3.38.0 but couldn't see anything obvious that might have caused this. Really wish I had a good mechanism for running the test suite against different SQLite versions! May have to revisit this old trick: https://til.simonwillison.net/sqlite/ld-preload |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Test failures with SQLite 3.37.0+ due to column affinity case 1160407071 | |

| 1059804577 | https://github.com/simonw/datasette/issues/1647#issuecomment-1059804577 | https://api.github.com/repos/simonw/datasette/issues/1647 | IC_kwDOBm6k_c4_K1Wh | simonw 9599 | 2022-03-05T17:49:46Z | 2022-03-05T17:49:46Z | OWNER | My best guess is that this is an undocumented change in SQLite 3.38 - I get that test failure with that SQLite version. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Test failures with SQLite 3.37.0+ due to column affinity case 1160407071 | |

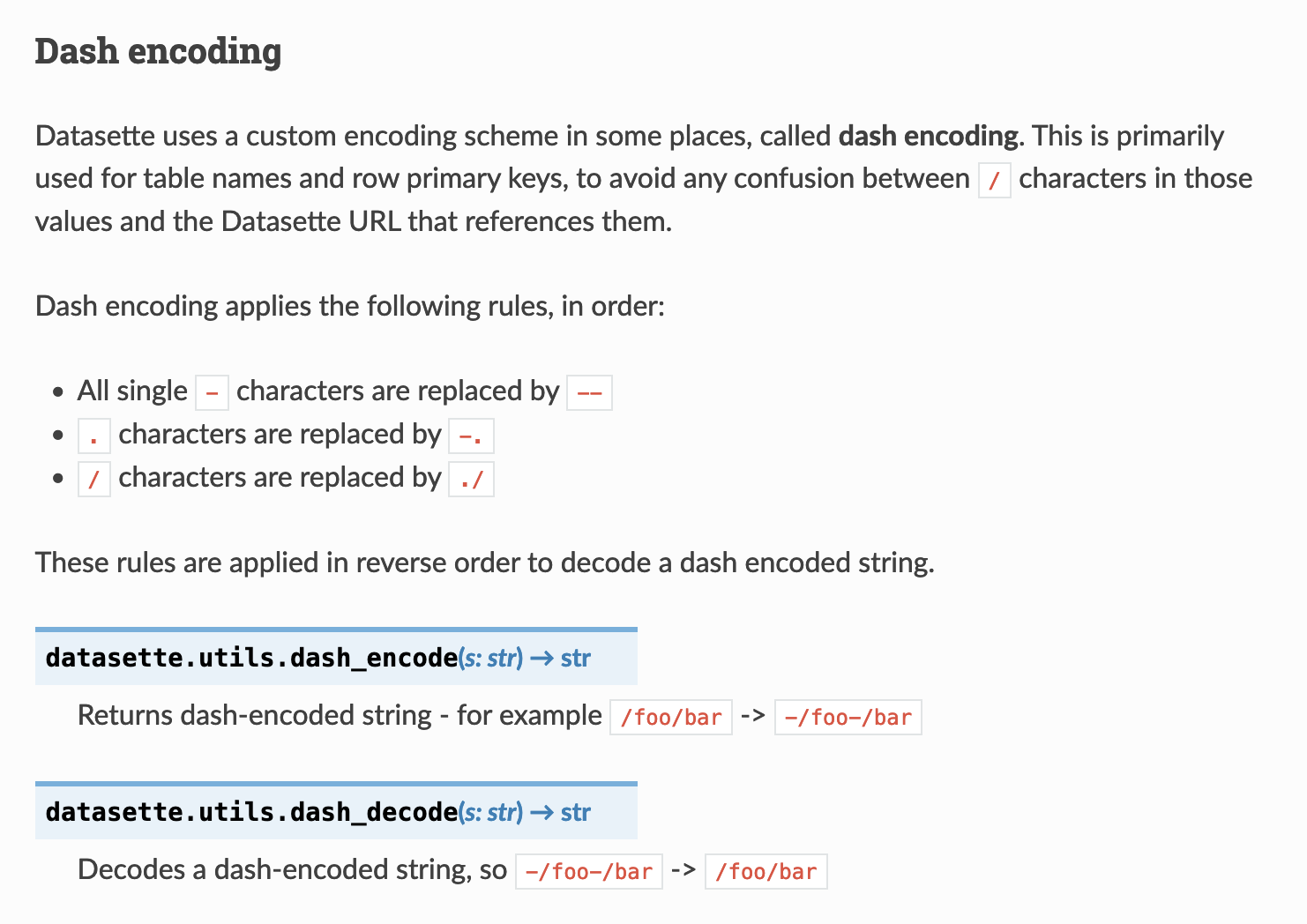

| 1059802318 | https://github.com/simonw/datasette/issues/1439#issuecomment-1059802318 | https://api.github.com/repos/simonw/datasette/issues/1439 | IC_kwDOBm6k_c4_K0zO | simonw 9599 | 2022-03-05T17:34:33Z | 2022-03-05T17:34:33Z | OWNER | Wrote documentation:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rethink how .ext formats (v.s. ?_format=) works before 1.0 973139047 | |

| 1059652834 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059652834 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KQTi | zaneselvans 596279 | 2022-03-05T02:14:40Z | 2022-03-05T02:14:40Z | NONE | We do a lot of |

{

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059652538 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059652538 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KQO6 | simonw 9599 | 2022-03-05T02:13:17Z | 2022-03-05T02:13:17Z | OWNER |

Shows a DataFrame |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059651306 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059651306 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KP7q | simonw 9599 | 2022-03-05T02:10:49Z | 2022-03-05T02:10:49Z | OWNER | I could teach |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059651056 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059651056 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KP3w | simonw 9599 | 2022-03-05T02:09:38Z | 2022-03-05T02:09:38Z | OWNER | OK, so reading results from existing How about writing a DataFrame to a database table? That feels like it could be a lot more useful. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059650190 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059650190 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KPqO | simonw 9599 | 2022-03-05T02:04:43Z | 2022-03-05T02:04:54Z | OWNER | To be honest, I'm having second thoughts about this now mainly because the idiom for turning a generator of dicts into a DataFrame is SO simple:

|

{

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059649803 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059649803 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KPkL | simonw 9599 | 2022-03-05T02:02:41Z | 2022-03-05T02:02:41Z | OWNER | It looks like the existing

```
...
import pandas as pd
pd.read_sql_query(db.conn, "select * from articles")
ImportError: Using URI string without sqlalchemy installed.
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059649213 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059649213 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KPa9 | simonw 9599 | 2022-03-05T02:00:10Z | 2022-03-05T02:00:10Z | OWNER | Requested feedback on Twitter here: https://twitter.com/simonw/status/1499927075930578948 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059649193 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059649193 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KPap | simonw 9599 | 2022-03-05T02:00:02Z | 2022-03-05T02:00:02Z | OWNER | Yeah, I imagine there are plenty of ways to do this with Pandas already - I'm opportunistically looking for a way to provide better integration with the rest of the Pandas situation from the work I've done in Might be that this isn't worth doing at all. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059647114 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059647114 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KO6K | eyeseast 25778 | 2022-03-05T01:54:24Z | 2022-03-05T01:54:24Z | CONTRIBUTOR | I haven't tried this, but it looks like Pandas has a method for this: https://pandas.pydata.org/docs/reference/api/pandas.read_sql_query.html |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059646645 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059646645 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KOy1 | simonw 9599 | 2022-03-05T01:53:10Z | 2022-03-05T01:53:10Z | OWNER | I'm not an experienced enough Pandas user to know if this design is right or not. I'm going to leave this open for a while and solicit some feedback. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059646543 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059646543 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KOxP | simonw 9599 | 2022-03-05T01:52:47Z | 2022-03-05T01:52:47Z | OWNER | I built a prototype of that second option and it looks pretty good:

Here's the

```python
from .db import Database as _Database, Table as _Table, View as _View
import pandas as pd
from typing import (
    Iterable,
    Union,
    Optional,
)


class Database(_Database):
    def query(
        self, sql: str, params: Optional[Union[Iterable, dict]] = None
    ) -> pd.DataFrame:
        return pd.DataFrame(super().query(sql, params))


class PandasQueryable:
    def rows_where(
        self,
        where: str = None,
        where_args: Optional[Union[Iterable, dict]] = None,
        order_by: str = None,
        select: str = "*",
        limit: int = None,
        offset: int = None,
    ) -> pd.DataFrame:
        return pd.DataFrame(
            super().rows_where(
                where,
                where_args,
                order_by=order_by,
                select=select,
                limit=limit,
                offset=offset,
            )
        )


class Table(PandasQueryable, _Table):
    pass


class View(PandasQueryable, _View):
    pass
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059646247 | https://github.com/simonw/sqlite-utils/issues/412#issuecomment-1059646247 | https://api.github.com/repos/simonw/sqlite-utils/issues/412 | IC_kwDOCGYnMM4_KOsn | simonw 9599 | 2022-03-05T01:51:03Z | 2022-03-05T01:51:03Z | OWNER | I considered two ways of doing this. First, have methods such as Second, have a compatibility class that is imported separately such as:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Optional Pandas integration 1160182768 | |

| 1059638778 | https://github.com/simonw/datasette/issues/1640#issuecomment-1059638778 | https://api.github.com/repos/simonw/datasette/issues/1640 | IC_kwDOBm6k_c4_KM36 | simonw 9599 | 2022-03-05T01:19:00Z | 2022-03-05T01:19:00Z | OWNER | The reason I implemented it like this was to support things like the Here's the code that hooks it up to the URL resolver: Which uses this function: One option here would be to support a workaround that looks something like this: The URL routing code could then look out for that It's a bit of a kludge, but it would be pretty straightforward to implement. Would that work for you @broccolihighkicks? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Support static assets where file length may change, e.g. logs 1148725876 | |

| 1059636420 | https://github.com/simonw/datasette/issues/1640#issuecomment-1059636420 | https://api.github.com/repos/simonw/datasette/issues/1640 | IC_kwDOBm6k_c4_KMTE | simonw 9599 | 2022-03-05T01:13:26Z | 2022-03-05T01:13:26Z | OWNER | Hah, this is certainly unexpected. It looks like this is the code in question: https://github.com/simonw/datasette/blob/a6ff123de5464806441f6a6f95145c9a83b7f20b/datasette/utils/asgi.py#L259-L266 You're right: it assumes that the file it is serving won't change length while it is serving it. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Support static assets where file length may change, e.g. logs 1148725876 | |

| 1059635969 | https://github.com/simonw/datasette/issues/1642#issuecomment-1059635969 | https://api.github.com/repos/simonw/datasette/issues/1642 | IC_kwDOBm6k_c4_KMMB | simonw 9599 | 2022-03-05T01:11:17Z | 2022-03-05T01:11:17Z | OWNER |

Neither does Closing this as can't reproduce. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Dependency issue with asgiref and uvicorn 1152072027 | |

| 1059634688 | https://github.com/simonw/datasette/issues/1645#issuecomment-1059634688 | https://api.github.com/repos/simonw/datasette/issues/1645 | IC_kwDOBm6k_c4_KL4A | simonw 9599 | 2022-03-05T01:06:08Z | 2022-03-05T01:06:08Z | OWNER | It sounds like you can work around this with Varnish configuration for the moment, but I'm going to bump this up the list of things to fix - it's particularly relevant now as I'd like to get a solution in place before Datasette 1.0, since it's likely to be beneficial to plugins and hence should be part of the stable, documented plugin interface. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Sensible `cache-control` headers for static assets, including those served by plugins 1154399841 | |

| 1059634412 | https://github.com/simonw/datasette/issues/1645#issuecomment-1059634412 | https://api.github.com/repos/simonw/datasette/issues/1645 | IC_kwDOBm6k_c4_KLzs | simonw 9599 | 2022-03-05T01:04:53Z | 2022-03-05T01:04:53Z | OWNER | The existing |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Sensible `cache-control` headers for static assets, including those served by plugins 1154399841 | |

| 1059633902 | https://github.com/simonw/datasette/issues/1645#issuecomment-1059633902 | https://api.github.com/repos/simonw/datasette/issues/1645 | IC_kwDOBm6k_c4_KLru | simonw 9599 | 2022-03-05T01:03:06Z | 2022-03-05T01:03:06Z | OWNER | I agree: this is bad. Ideally, content served from

Datasette half-implemented the first of these: if you view source on https://latest.datasette.io/ you'll see it links to I had forgotten I had implemented this! Here is how it is calculated: So |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Sensible `cache-control` headers for static assets, including those served by plugins 1154399841 |
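Note on comment 1059854864 (issue 1439): the percent-encoding behaviour it describes can be checked with the standard library. The comment's own list of the two characters is missing from this export, so using `.` and `-` below is an assumption based on the dash-encoding discussion. The sketch only shows that `urllib.parse.quote()` leaves those characters unescaped even when `safe=""` is passed.

```python
from urllib.parse import quote

# Assumption: "." and "-" stand in for the characters the comment refers to.
samples = ["table.csv", "my-table", "a/b", "50%"]

# safe="" asks quote() to escape everything it is willing to escape,
# but letters, digits and "_.-~" are always left alone.
encoded = {s: quote(s, safe="") for s in samples}

for original, result in encoded.items():
    print(f"{original!r} -> {result!r}")
```

Only `/` and `%` change in this run, which is why a custom encoder is needed if `.` and `-` must also be escaped.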
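Note on comment 1059819628 (issue 1647): when running tests with `LD_PRELOAD` pointing at a freshly built `libsqlite3.so`, it can help to confirm which SQLite version Python actually loaded before trusting the test results. A minimal sketch using only the standard library; the helper name `loaded_sqlite_version` is hypothetical.

```python
import sqlite3


def loaded_sqlite_version() -> str:
    """Return the version of the SQLite library this Python process is using."""
    conn = sqlite3.connect(":memory:")
    try:
        # sqlite_version() is answered by the loaded library itself.
        return conn.execute("select sqlite_version()").fetchone()[0]
    finally:
        conn.close()


print(loaded_sqlite_version())
```

Running this script with and without the `LD_PRELOAD` variable set should print different versions if the preload took effect.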
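Note on comment 1059636420 (issue 1640): the problem described there is a declared length that no longer matches the file once it grows. The following is an illustrative sketch, not Datasette's code and not the workaround proposed in the thread: it reads the size once and then never sends more than that many bytes. The names `read_fixed_length` and `serve_snapshot` are hypothetical.

```python
import os
from typing import BinaryIO, Iterator, Tuple


def read_fixed_length(
    fp: BinaryIO, length: int, chunk_size: int = 4096
) -> Iterator[bytes]:
    """Yield at most `length` bytes from fp, even if the file has grown."""
    remaining = length
    while remaining > 0:
        chunk = fp.read(min(chunk_size, remaining))
        if not chunk:
            break  # file was truncated; fewer bytes than declared are sent
        remaining -= len(chunk)
        yield chunk


def serve_snapshot(path: str) -> Tuple[int, bytes]:
    """Return (declared length, body) for the file as it was at stat time."""
    size = os.path.getsize(path)
    with open(path, "rb") as fp:
        return size, b"".join(read_fixed_length(fp, size))
```

Bytes appended after the size was read are simply left for the next request, so the body never exceeds the declared length.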
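Note on comment 1059633902 (issue 1645): the calculation it refers to is missing from this export, so the following is only a sketch of the general technique of adding a content hash to a static asset URL so that far-future cache headers are safe. The helper name, the choice of SHA-256, the six-character length and the example URL are all assumptions, not Datasette's actual implementation.

```python
import hashlib
from pathlib import Path


def asset_url_with_hash(path: Path, url: str, length: int = 6) -> str:
    """Append a short hash of the file contents to a static asset URL."""
    # Hypothetical helper: any change to the file produces a new URL,
    # so caches keyed on the full URL never serve stale content.
    digest = hashlib.sha256(path.read_bytes()).hexdigest()[:length]
    return f"{url}?{digest}"
```

For example, `asset_url_with_hash(Path("static/app.css"), "/-/static/app.css")` would return the URL with a six-character query string that changes whenever the stylesheet changes.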

CREATE TABLE [issue_comments] (

[html_url] TEXT,

[issue_url] TEXT,

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[created_at] TEXT,

[updated_at] TEXT,

[author_association] TEXT,

[body] TEXT,

[reactions] TEXT,

[issue] INTEGER REFERENCES [issues]([id])

, [performed_via_github_app] TEXT);

CREATE INDEX [idx_issue_comments_issue]

ON [issue_comments] ([issue]);

CREATE INDEX [idx_issue_comments_user]

ON [issue_comments] ([user]);

issue 7