issue_comments

46 rows where "updated_at" is on date 2022-03-21 sorted by updated_at descending



| id | html_url | issue_url | node_id | user | created_at | updated_at | author_association | body | reactions | issue | performed_via_github_app |
|---|---|---|---|---|---|---|---|---|---|---|---|

| 1074479932 | https://github.com/simonw/datasette/issues/339#issuecomment-1074479932 | https://api.github.com/repos/simonw/datasette/issues/339 | IC_kwDOBm6k_c5AC0M8 | simonw 9599 | 2022-03-21T22:22:34Z | 2022-03-21T22:22:34Z | OWNER | Closing this as obsolete since Datasette no longer uses Sanic. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Expose SANIC_RESPONSE_TIMEOUT config option in a sensible way 340396247 | |

| 1074479768 | https://github.com/simonw/datasette/issues/276#issuecomment-1074479768 | https://api.github.com/repos/simonw/datasette/issues/276 | IC_kwDOBm6k_c5AC0KY | simonw 9599 | 2022-03-21T22:22:20Z | 2022-03-21T22:22:20Z | OWNER | I'm closing this issue because this is now solved by a number of neat plugins:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Handle spatialite geometry columns better 324835838 | |

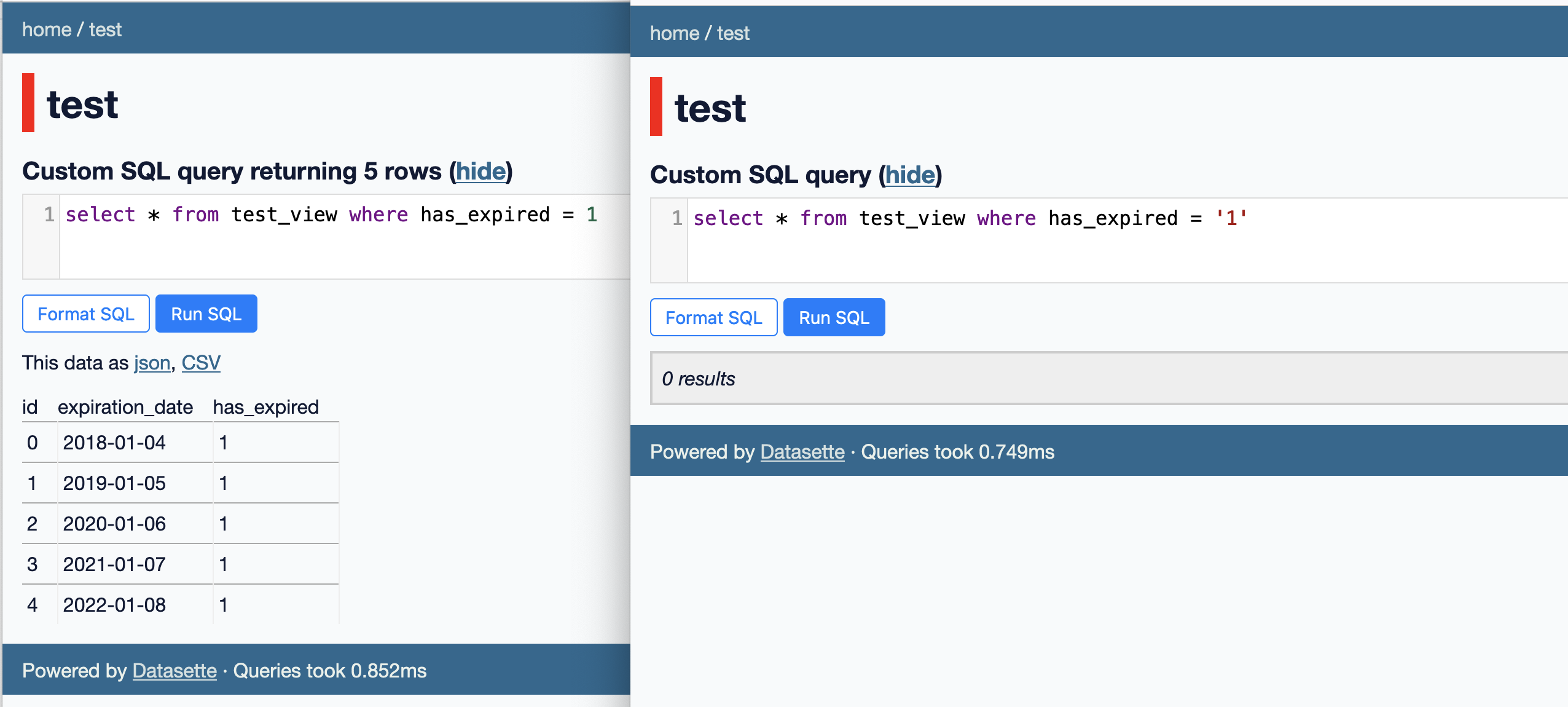

| 1074478299 | https://github.com/simonw/datasette/issues/1671#issuecomment-1074478299 | https://api.github.com/repos/simonw/datasette/issues/1671 | IC_kwDOBm6k_c5ACzzb | simonw 9599 | 2022-03-21T22:20:26Z | 2022-03-21T22:20:26Z | OWNER | Thinking about options for fixing this... The following query works fine:

If someone clicks on "View and edit SQL" from a filtered table page I don't want them to have to wonder why that But... for querying views, the So one fix would be to get the SQL generating logic to use casts like this any time it is operating against a view. An even better fix would be to detect which columns in a view come from a table and which ones might not, and only use casts for the columns that aren't definitely from a table. The trick I was exploring here might be able to help with that: - #1293 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Filters fail to work correctly against calculated numeric columns returned by SQL views because type affinity rules do not apply 1174655187 | |

| 1074470568 | https://github.com/simonw/datasette/issues/1671#issuecomment-1074470568 | https://api.github.com/repos/simonw/datasette/issues/1671 | IC_kwDOBm6k_c5ACx6o | simonw 9599 | 2022-03-21T22:11:14Z | 2022-03-21T22:12:49Z | OWNER | I wonder if this will be a problem with generated columns, or with SQLite strict tables? My hunch is that strict tables will continue to work without any changes, because https://www.sqlite.org/stricttables.html says nothing about their impact on comparison operations. I should test this to make absolutely sure though. Generated columns have a type, so my hunch is they will continue to work fine too. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Filters fail to work correctly against calculated numeric columns returned by SQL views because type affinity rules do not apply 1174655187 | |

| 1074468450 | https://github.com/simonw/datasette/issues/1671#issuecomment-1074468450 | https://api.github.com/repos/simonw/datasette/issues/1671 | IC_kwDOBm6k_c5ACxZi | simonw 9599 | 2022-03-21T22:08:35Z | 2022-03-21T22:10:00Z | OWNER | Relevant section of the SQLite documentation: 3.2. Affinity Of Expressions:

In your example, Then 4.2. Type Conversions Prior To Comparison fills in the rest:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Filters fail to work correctly against calculated numeric columns returned by SQL views because type affinity rules do not apply 1174655187 | |

| 1074465536 | https://github.com/simonw/datasette/issues/1671#issuecomment-1074465536 | https://api.github.com/repos/simonw/datasette/issues/1671 | IC_kwDOBm6k_c5ACwsA | simonw 9599 | 2022-03-21T22:04:31Z | 2022-03-21T22:04:31Z | OWNER | Oh this is fascinating! I replicated the bug (thanks for the steps to reproduce) and it looks like this is down to the following:

Against views, This doesn't happen against tables because of SQLite's type affinity mechanism, which handles the type conversion automatically. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Filters fail to work correctly against calculated numeric columns returned by SQL views because type affinity rules do not apply 1174655187 | |
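The affinity behaviour described in these comments can be reproduced with Python's standard `sqlite3` module. This is a minimal sketch, assuming a made-up table `t` and a view `v` whose `calc` column is an expression (`n * 1`) and therefore carries no type affinity; the filter value is bound as a string, the way a value taken from a query string would be. The `cast(? as integer)` variant is only one illustration of a cast-based fix, not necessarily the SQL Datasette ends up generating.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript(
    """
    create table t (id integer primary key, n integer);
    insert into t (n) values (3), (7), (12);
    -- n * 1 is an expression, so this view column has no type affinity
    create view v as select id, n * 1 as calc from t;
    """
)

value = "7"  # filter values arrive as strings, e.g. from ?calc=7

# Table column: INTEGER affinity converts the text '7' to 7 before comparing
from_table = db.execute("select n from t where n = ?", [value]).fetchall()

# View column: neither side has affinity, so integer 7 never equals text '7'
from_view = db.execute("select calc from v where calc = ?", [value]).fetchall()

# An explicit cast on the parameter restores the expected match
from_view_cast = db.execute(
    "select calc from v where calc = cast(? as integer)", [value]
).fetchall()

print(from_table, from_view, from_view_cast)
```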

| 1074459746 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074459746 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACvRi | simonw 9599 | 2022-03-21T21:55:45Z | 2022-03-21T21:55:45Z | OWNER | I'm going to change the original logic to set n=1 for times that are |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |
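For context on what `n` controls: Python's `sqlite3.Connection.set_progress_handler(handler, n)` asks SQLite to call `handler` roughly every `n` virtual machine instructions, and a non-zero return value interrupts the running statement. The sketch below is a hypothetical `time_limit` context manager written for illustration only (it is not Datasette's actual implementation); the choice between `n=1` and `n=1000` is left as a parameter.

```python
import sqlite3
import time
from contextlib import contextmanager


@contextmanager
def time_limit(conn, ms, n=1000):
    """Hypothetical helper: abort any statement on conn that runs past ms milliseconds."""
    deadline = time.perf_counter() + ms / 1000

    def handler():
        # Returning a non-zero value tells SQLite to interrupt the statement
        return 1 if time.perf_counter() >= deadline else 0

    # SQLite invokes handler roughly every n VM instructions: a smaller n
    # checks the clock more often but adds more per-instruction overhead
    conn.set_progress_handler(handler, n)
    try:
        yield
    finally:
        # Passing None removes the handler again
        conn.set_progress_handler(None, n)


conn = sqlite3.connect(":memory:")
endless = """
with recursive counter(x) as (select 0 union all select x + 1 from counter)
select count(*) from counter
"""

aborted = False
try:
    with time_limit(conn, 20):
        conn.execute(endless).fetchall()
except sqlite3.OperationalError as ex:
    aborted = True
    print("Query aborted:", ex)
```

An interrupted query surfaces in Python as `sqlite3.OperationalError`, so callers can catch that and report a timeout.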

| 1074458506 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074458506 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACu-K | simonw 9599 | 2022-03-21T21:53:47Z | 2022-03-21T21:53:47Z | OWNER | Oh interesting, it turns out there is ONE place in the code that sets the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074454687 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074454687 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACuCf | simonw 9599 | 2022-03-21T21:48:02Z | 2022-03-21T21:48:02Z | OWNER | Here's another microbenchmark that measures how many nanoseconds it takes to run 1,000 VM operations:

```python
import sqlite3
import time

db = sqlite3.connect(":memory:")

i = 0
out = []


def count():
    global i
    i += 1000
    out.append((i, time.perf_counter_ns()))


db.set_progress_handler(count, 1000)

print("Start:", time.perf_counter_ns())
rows = db.execute("""
with recursive counter(x) as (
  select 0
    union
  select x + 1 from counter
)
select * from counter limit 10000;
""").fetchall()
print("End:", time.perf_counter_ns())

print()
print("So how long does it take to execute 1000 ops?")

prev_time_ns = None
for i, time_ns in out:
    if prev_time_ns is not None:
        print(time_ns - prev_time_ns, "ns")
    prev_time_ns = time_ns
```

```
So how long does it take to execute 1000 ops?
47290 ns
49573 ns
48226 ns
45674 ns
53238 ns
47313 ns
52346 ns
48689 ns
47092 ns
87596 ns
69999 ns
52522 ns
52809 ns
53259 ns
52478 ns
53478 ns
65812 ns
```

87596ns is 0.087596ms, so even checking every 1000 ops is easily fine-grained enough to capture differences of less than 0.1ms. If anything I could bump that default 1000 up, and I can definitely eliminate the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074446576 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074446576 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACsDw | simonw 9599 | 2022-03-21T21:38:27Z | 2022-03-21T21:38:27Z | OWNER | OK here's a microbenchmark script:

```python
import sqlite3
import timeit

db = sqlite3.connect(":memory:")
db_with_progress_handler_1 = sqlite3.connect(":memory:")
db_with_progress_handler_1000 = sqlite3.connect(":memory:")

db_with_progress_handler_1.set_progress_handler(lambda: None, 1)
db_with_progress_handler_1000.set_progress_handler(lambda: None, 1000)


def execute_query(db):
    cursor = db.execute("""
    with recursive counter(x) as (
      select 0
        union
      select x + 1 from counter
    )
    select * from counter limit 10000;
    """)
    list(cursor.fetchall())


print("Without progress_handler")
print(timeit.timeit(lambda: execute_query(db), number=100))

print("progress_handler every 1000 ops")
print(timeit.timeit(lambda: execute_query(db_with_progress_handler_1000), number=100))

print("progress_handler every 1 op")
print(timeit.timeit(lambda: execute_query(db_with_progress_handler_1), number=100))
```

So running every 1000 ops makes almost no difference at all, but running every single op is a 3.2x performance degradation. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074439309 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074439309 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACqSN | simonw 9599 | 2022-03-21T21:28:58Z | 2022-03-21T21:28:58Z | OWNER | David Raymond solved it there: https://sqlite.org/forum/forumpost/330c8532d8a88bcd

Sure enough, adding that gets the VM steps number up to 190,007 which is close enough that I'm happy. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074378472 | https://github.com/simonw/datasette/issues/1676#issuecomment-1074378472 | https://api.github.com/repos/simonw/datasette/issues/1676 | IC_kwDOBm6k_c5ACbbo | simonw 9599 | 2022-03-21T20:18:10Z | 2022-03-21T20:18:10Z | OWNER | Maybe there is a better name for this method that helps emphasize its cascading nature. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Reconsider ensure_permissions() logic, can it be less confusing? 1175690070 | |

| 1074347023 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074347023 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACTwP | simonw 9599 | 2022-03-21T19:48:59Z | 2022-03-21T19:48:59Z | OWNER | Posed a question about that here: https://sqlite.org/forum/forumpost/de9ff10fa7 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074341924 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074341924 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACSgk | simonw 9599 | 2022-03-21T19:42:08Z | 2022-03-21T19:42:08Z | OWNER | Here's the Python-C implementation of It calls https://www.sqlite.org/c3ref/progress_handler.html says:

So maybe VM-steps and virtual machine instructions are different things? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074337997 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074337997 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACRjN | simonw 9599 | 2022-03-21T19:37:08Z | 2022-03-21T19:37:08Z | OWNER | This is weird:

```python
import sqlite3

db = sqlite3.connect(":memory:")

i = 0


def count():
    global i
    i += 1


db.set_progress_handler(count, 1)

db.execute("""
with recursive counter(x) as (
  select 0
    union
  select x + 1 from counter
)
select * from counter limit 10000;
""")

print(i)
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |
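One Python-side detail that affects any count taken this way: for a `SELECT`, the standard library `sqlite3` module's `execute()` only steps the statement until the first row is available, and the remaining VM instructions run as rows are fetched. The sketch below compares progress-handler counts with and without `fetchall()`; the helper name `count_vm_steps` is made up for illustration.

```python
import sqlite3

QUERY = """
with recursive counter(x) as (
  select 0
    union
  select x + 1 from counter
)
select * from counter limit 10000;
"""


def count_vm_steps(fetch_all):
    """Hypothetical helper: count progress-handler calls (n=1) while running QUERY."""
    db = sqlite3.connect(":memory:")
    calls = 0

    def handler():
        nonlocal calls
        calls += 1
        return 0  # zero means "carry on"

    db.set_progress_handler(handler, 1)
    cursor = db.execute(QUERY)
    if fetch_all:
        cursor.fetchall()  # step through every remaining row
    cursor.close()
    db.close()
    return calls


print("execute() only:        ", count_vm_steps(fetch_all=False))
print("execute() + fetchall():", count_vm_steps(fetch_all=True))
```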

| 1074332718 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074332718 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACQQu | simonw 9599 | 2022-03-21T19:31:10Z | 2022-03-21T19:31:10Z | OWNER | How long does it take for SQLite to execute 1000 opcodes anyway? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074332325 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074332325 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACQKl | simonw 9599 | 2022-03-21T19:30:44Z | 2022-03-21T19:30:44Z | OWNER | So it looks like even for facet suggestion |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074331743 | https://github.com/simonw/datasette/issues/1679#issuecomment-1074331743 | https://api.github.com/repos/simonw/datasette/issues/1679 | IC_kwDOBm6k_c5ACQBf | simonw 9599 | 2022-03-21T19:30:05Z | 2022-03-21T19:30:05Z | OWNER | https://github.com/simonw/datasette/blob/1a7750eb29fd15dd2eea3b9f6e33028ce441b143/datasette/app.py#L118-L122 sets it to 50ms for facet suggestion but that's not going to pass

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research: how much overhead does the n=1 time limit have? 1175854982 | |

| 1074321862 | https://github.com/simonw/datasette/issues/1660#issuecomment-1074321862 | https://api.github.com/repos/simonw/datasette/issues/1660 | IC_kwDOBm6k_c5ACNnG | simonw 9599 | 2022-03-21T19:19:01Z | 2022-03-21T19:19:01Z | OWNER | I've simplified this a ton now. I'm going to keep working on this in the long-term but I think this issue can be closed. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor and simplify Datasette routing and views 1170144879 | |

| 1074302559 | https://github.com/simonw/datasette/issues/1678#issuecomment-1074302559 | https://api.github.com/repos/simonw/datasette/issues/1678 | IC_kwDOBm6k_c5ACI5f | simonw 9599 | 2022-03-21T19:04:03Z | 2022-03-21T19:04:03Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Make `check_visibility()` a documented API 1175715988 | ||

| 1074287177 | https://github.com/simonw/datasette/issues/1660#issuecomment-1074287177 | https://api.github.com/repos/simonw/datasette/issues/1660 | IC_kwDOBm6k_c5ACFJJ | simonw 9599 | 2022-03-21T18:51:42Z | 2022-03-21T18:51:42Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor and simplify Datasette routing and views 1170144879 | |

| 1074256603 | https://github.com/simonw/sqlite-utils/issues/417#issuecomment-1074256603 | https://api.github.com/repos/simonw/sqlite-utils/issues/417 | IC_kwDOCGYnMM5AB9rb | blaine 9954 | 2022-03-21T18:19:41Z | 2022-03-21T18:19:41Z | NONE | That makes sense; just a little hint that points folks towards doing the right thing might be helpful! fwiw, the reason I was using jq in the first place was just a quick way to extract one attribute from an actual JSON array. When I initially imported it, I got a table with a bunch of embedded JSON values, rather than a native table, because each array entry had two attributes, one with the data I actually wanted. Not sure how common a use-case this is, though (and easily fixed, aside from the jq weirdness!) |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

insert fails on JSONL with whitespace 1175744654 | |
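For the use case described above (pulling one attribute out of each entry in a JSON array before inserting), the same transformation can be done in Python, writing one compact JSON document per line. This is a minimal sketch; the `name`/`data` keys and the sample input are hypothetical.

```python
import json

# Hypothetical input: a JSON array where each entry wraps the record we want
raw = """
[
  {"name": "first", "data": {"id": 1, "title": "One"}},
  {"name": "second", "data": {"id": 2, "title": "Two"}}
]
"""

records = [entry["data"] for entry in json.loads(raw)]

# json.dumps() without indent= keeps each record on a single line,
# which is what newline-delimited JSON requires
ndjson = "\n".join(json.dumps(record) for record in records) + "\n"
print(ndjson, end="")
```

The output has exactly one document per line, which is the shape `sqlite-utils insert` expects when given `--nl`.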

| 1074243540 | https://github.com/simonw/sqlite-utils/issues/417#issuecomment-1074243540 | https://api.github.com/repos/simonw/sqlite-utils/issues/417 | IC_kwDOCGYnMM5AB6fU | simonw 9599 | 2022-03-21T18:08:03Z | 2022-03-21T18:08:03Z | OWNER | I've not really thought about standards as much here as I should. It looks like there are two competing specs for newline-delimited JSON! http://ndjson.org/ is the one I've been using in

https://jsonlines.org/ is the other one. It is slightly less clear, but it does say this:

My interpretation of both of these is that newlines in the middle of a JSON object shouldn't be allowed. So what's The The thing I like about newline-delimited JSON is that it's really trivial to parse - loop through each line, run it through Unless someone has written a robust Python implementation of a |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

insert fails on JSONL with whitespace 1175744654 | |
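The "trivial to parse" property is easy to see in code: newline-delimited JSON needs nothing more than a loop over lines. Pretty-printed output, with objects spread over several lines, needs a different approach; one standard-library option is `json.JSONDecoder.raw_decode()`, which reports where each document ends. Both functions below are illustrative sketches with made-up names (`iter_ndjson`, `iter_concatenated_json`), not what `sqlite-utils` implements.

```python
import json


def iter_ndjson(text):
    """Newline-delimited JSON: every non-blank line is one complete document."""
    for line in text.splitlines():
        if line.strip():  # tolerate blank lines
            yield json.loads(line)


def iter_concatenated_json(text):
    """Whitespace-separated JSON documents, which may span multiple lines."""
    decoder = json.JSONDecoder()
    idx = 0
    while True:
        # raw_decode() does not skip leading whitespace, so do it here
        while idx < len(text) and text[idx] in " \t\r\n":
            idx += 1
        if idx == len(text):
            return
        # raw_decode() returns the document and the index where it ended
        document, end = decoder.raw_decode(text[idx:])
        idx += end
        yield document


pretty = '{\n  "id": 1\n}\n{\n  "id": 2\n}\n'
print(list(iter_concatenated_json(pretty)))
```

Feeding `pretty` to `iter_ndjson()` fails on the first line (`{`), which is the same kind of failure reported in this issue.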

| 1074184240 | https://github.com/simonw/datasette/issues/1677#issuecomment-1074184240 | https://api.github.com/repos/simonw/datasette/issues/1677 | IC_kwDOBm6k_c5ABsAw | simonw 9599 | 2022-03-21T17:20:17Z | 2022-03-21T17:20:17Z | OWNER | This is weirdly different from how |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Remove `check_permission()` from `BaseView` 1175694248 | |

| 1074180312 | https://github.com/simonw/datasette/issues/1676#issuecomment-1074180312 | https://api.github.com/repos/simonw/datasette/issues/1676 | IC_kwDOBm6k_c5ABrDY | simonw 9599 | 2022-03-21T17:16:45Z | 2022-03-21T17:16:45Z | OWNER | When looking at this code earlier I assumed that the following would check each permission in turn and fail if any of them failed:

If that is indeed the right abstraction, I need to work to make the documentation as clear as possible. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Reconsider ensure_permissions() logic, can it be less confusing? 1175690070 | |
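To make the "cascading" behaviour concrete, here is a minimal sketch, assuming these semantics: checks run in order, the first one that returns an explicit `True` or `False` decides the outcome, and `None` means "no opinion, ask the next check". The names `cascading_check` and `Forbidden` are hypothetical stand-ins, not Datasette's API.

```python
from typing import Callable, Iterable, Optional


class Forbidden(Exception):
    """Hypothetical stand-in for a permission-denied error."""


def cascading_check(checks: Iterable[Callable[[], Optional[bool]]]) -> None:
    """Run checks in order; the first explicit True/False wins.

    - True: access granted, later checks are skipped
    - False: access denied, raise Forbidden
    - None: no opinion, move on to the next check
    If every check returns None, fall through silently.
    """
    for check in checks:
        result = check()
        if result is True:
            return
        if result is False:
            raise Forbidden("Permission denied")


# Granted by the second check; the deny in the third is never evaluated
cascading_check([lambda: None, lambda: True, lambda: False])
```

The surprising part is in that usage line: a list of checks reads like "all of these must pass", but it behaves like "the first explicit answer wins".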

| 1074178865 | https://github.com/simonw/datasette/issues/1676#issuecomment-1074178865 | https://api.github.com/repos/simonw/datasette/issues/1676 | IC_kwDOBm6k_c5ABqsx | simonw 9599 | 2022-03-21T17:15:27Z | 2022-03-21T17:15:27Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Reconsider ensure_permissions() logic, can it be less confusing? 1175690070 | ||

| 1074177827 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074177827 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABqcj | simonw 9599 | 2022-03-21T17:14:31Z | 2022-03-21T17:14:31Z | OWNER | Updated documentation: https://github.com/simonw/datasette/blob/e627510b760198ccedba9e5af47a771e847785c9/docs/internals.rst#await-ensure_permissionsactor-permissions

That's pretty hard to understand! I'm going to open a separate issue to reconsider if this is a useful enough abstraction given how confusing it is. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074161523 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074161523 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABmdz | simonw 9599 | 2022-03-21T16:59:55Z | 2022-03-21T17:00:03Z | OWNER | Also calling that function |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074158890 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074158890 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABl0q | simonw 9599 | 2022-03-21T16:57:15Z | 2022-03-21T16:57:15Z | OWNER | Idea: A new |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074156779 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074156779 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABlTr | simonw 9599 | 2022-03-21T16:55:08Z | 2022-03-21T16:56:02Z | OWNER | One benefit of the current design of I could return an object which evaluates to |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074143209 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074143209 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABh_p | simonw 9599 | 2022-03-21T16:46:05Z | 2022-03-21T16:46:05Z | OWNER | The other difference though is that |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074142617 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074142617 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABh2Z | simonw 9599 | 2022-03-21T16:45:27Z | 2022-03-21T16:45:27Z | OWNER | Though at that point So maybe

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074141457 | https://github.com/simonw/datasette/issues/1675#issuecomment-1074141457 | https://api.github.com/repos/simonw/datasette/issues/1675 | IC_kwDOBm6k_c5ABhkR | simonw 9599 | 2022-03-21T16:44:09Z | 2022-03-21T16:44:09Z | OWNER | A slightly odd thing about these methods is that they either fail silently or they raise a Maybe they should instead return |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Extract out `check_permissions()` from `BaseView` 1175648453 | |

| 1074136176 | https://github.com/simonw/datasette/issues/1660#issuecomment-1074136176 | https://api.github.com/repos/simonw/datasette/issues/1660 | IC_kwDOBm6k_c5ABgRw | simonw 9599 | 2022-03-21T16:38:46Z | 2022-03-21T16:38:46Z | OWNER | I'm going to refactor this stuff out and document it so it can be easily used by plugins: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor and simplify Datasette routing and views 1170144879 | |

| 1074019047 | https://github.com/simonw/datasette/issues/526#issuecomment-1074019047 | https://api.github.com/repos/simonw/datasette/issues/526 | IC_kwDOBm6k_c5ABDrn | simonw 9599 | 2022-03-21T15:09:56Z | 2022-03-21T15:09:56Z | OWNER | I should research how much overhead creating a new connection costs; it may be that an easy way to solve this is to create a dedicated connection for the query and then close that connection at the end. |

{

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Stream all results for arbitrary SQL and canned queries 459882902 | |

| 1074017633 | https://github.com/simonw/datasette/issues/1177#issuecomment-1074017633 | https://api.github.com/repos/simonw/datasette/issues/1177 | IC_kwDOBm6k_c5ABDVh | simonw 9599 | 2022-03-21T15:08:51Z | 2022-03-21T15:08:51Z | OWNER | Related: - #1062 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to stream all rows as newline-delimited JSON 780153562 | |

| 1073468996 | https://github.com/simonw/sqlite-utils/issues/415#issuecomment-1073468996 | https://api.github.com/repos/simonw/sqlite-utils/issues/415 | IC_kwDOCGYnMM4_-9ZE | simonw 9599 | 2022-03-21T04:14:42Z | 2022-03-21T04:14:42Z | OWNER | I can fix this like so:

```
% sqlite-utils convert demo.db demo foo '{"foo": "bar"}' --multi --dry-run
abc
 --- becomes:
{"foo": "bar"}

Would affect 1 row
```

|

{

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 1,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Convert with `--multi` and `--dry-run` flag does not work 1171599874 | |

| 1073463375 | https://github.com/simonw/sqlite-utils/issues/415#issuecomment-1073463375 | https://api.github.com/repos/simonw/sqlite-utils/issues/415 | IC_kwDOCGYnMM4_-8BP | simonw 9599 | 2022-03-21T04:02:36Z | 2022-03-21T04:02:36Z | OWNER | Thanks for the really clear steps to reproduce! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Convert with `--multi` and `--dry-run` flag does not work 1171599874 | |

| 1073456222 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073456222 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-6Re | simonw 9599 | 2022-03-21T03:45:52Z | 2022-03-21T03:45:52Z | OWNER | Needs tests and documentation. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073456155 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073456155 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-6Qb | simonw 9599 | 2022-03-21T03:45:37Z | 2022-03-21T03:45:37Z | OWNER | Prototype:

```diff
diff --git a/sqlite_utils/cli.py b/sqlite_utils/cli.py
index 8255b56..0a3693e 100644
--- a/sqlite_utils/cli.py
+++ b/sqlite_utils/cli.py
@@ -2583,7 +2583,11 @@ def generate_convert_help():
     """
     ).strip()
     recipe_names = [
-        n for n in dir(recipes) if not n.startswith("_") and n not in ("json", "parser")
+        n
+        for n in dir(recipes)
+        if not n.startswith("_")
+        and n not in ("json", "parser")
+        and callable(getattr(recipes, n))
     ]
     for name in recipe_names:
         fn = getattr(recipes, name)
diff --git a/sqlite_utils/recipes.py b/sqlite_utils/recipes.py
index 6918661..569c30d 100644
--- a/sqlite_utils/recipes.py
+++ b/sqlite_utils/recipes.py
@@ -1,17 +1,38 @@
 from dateutil import parser
 import json
 
+IGNORE = object()
+SET_NULL = object()
 
-def parsedate(value, dayfirst=False, yearfirst=False):
+
+def parsedate(value, dayfirst=False, yearfirst=False, errors=None):
     "Parse a date and convert it to ISO date format: yyyy-mm-dd"
-    return (
-        parser.parse(value, dayfirst=dayfirst, yearfirst=yearfirst).date().isoformat()
-    )
+    try:
+        return (
+            parser.parse(value, dayfirst=dayfirst, yearfirst=yearfirst)
+            .date()
+            .isoformat()
+        )
+    except parser.ParserError:
+        if errors is IGNORE:
+            return value
+        elif errors is SET_NULL:
+            return None
+        else:
+            raise
 
 
-def parsedatetime(value, dayfirst=False, yearfirst=False):
+def parsedatetime(value, dayfirst=False, yearfirst=False, errors=None):
     "Parse a datetime and convert it to ISO datetime format: yyyy-mm-ddTHH:MM:SS"
-    return parser.parse(value, dayfirst=dayfirst, yearfirst=yearfirst).isoformat()
+    try:
+        return parser.parse(value, dayfirst=dayfirst, yearfirst=yearfirst).isoformat()
+    except parser.ParserError:
+        if errors is IGNORE:
+            return value
+        elif errors is SET_NULL:
+            return None
+        else:
+            raise
 
 
 def jsonsplit(value, delimiter=",", type=str):
```
|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073455905 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073455905 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-6Mh | simonw 9599 | 2022-03-21T03:44:47Z | 2022-03-21T03:45:00Z | OWNER | This is quite nice:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073453370 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073453370 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-5k6 | simonw 9599 | 2022-03-21T03:41:06Z | 2022-03-21T03:41:06Z | OWNER | I'm going to try the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073453230 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073453230 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-5iu | simonw 9599 | 2022-03-21T03:40:37Z | 2022-03-21T03:40:37Z | OWNER | I think the options here should be:

These need to be indicated by parameters to the Some design options:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073451659 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073451659 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-5KL | simonw 9599 | 2022-03-21T03:35:01Z | 2022-03-21T03:35:01Z | OWNER | I confirmed that if it fails for any value ALL values are left alone, since it runs in a transaction. Here's the code that does that: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |
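The all-or-nothing behaviour can be illustrated with the standard library alone: if a conversion raises part-way through a batch of `UPDATE`s inside a transaction, the rollback leaves every row untouched. This sketch only mimics that behaviour; the `to_iso()` converter and the `dates` table are hypothetical, and this is not the sqlite-utils code being referred to.

```python
import sqlite3
from datetime import datetime


def to_iso(value):
    # Hypothetical converter: raises ValueError for anything it cannot parse
    return datetime.strptime(value, "%d/%m/%Y").date().isoformat()


db = sqlite3.connect(":memory:")
db.execute("create table dates (id integer primary key, d text)")
db.executemany(
    "insert into dates (d) values (?)",
    [("21/03/2022",), ("not a date",), ("22/03/2022",)],
)
db.commit()

failed = False
try:
    # The connection context manager commits on success and rolls back
    # if an exception escapes the block
    with db:
        for row_id, value in db.execute("select id, d from dates").fetchall():
            db.execute("update dates set d = ? where id = ?", [to_iso(value), row_id])
except ValueError:
    failed = True

rows = db.execute("select d from dates order by id").fetchall()
print(failed, rows)
```

The first row was converted before the second one failed, yet after the rollback it still holds its original value.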

| 1073450588 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073450588 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-45c | simonw 9599 | 2022-03-21T03:32:58Z | 2022-03-21T03:32:58Z | OWNER | Then I ran this to convert |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 | |

| 1073448904 | https://github.com/simonw/sqlite-utils/issues/416#issuecomment-1073448904 | https://api.github.com/repos/simonw/sqlite-utils/issues/416 | IC_kwDOCGYnMM4_-4fI | simonw 9599 | 2022-03-21T03:28:12Z | 2022-03-21T03:30:37Z | OWNER | Generating a test database using a pattern from https://www.geekytidbits.com/date-range-table-sqlite/

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Options for how `r.parsedate()` should handle invalid dates 1173023272 |
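The linked article builds a date-range table with a recursive CTE. Here is a minimal version of that pattern as a sketch; the `dates` table name, the `d` column and the date range are made up, and the exact SQL from the article is not reproduced.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    """
    create table dates as
    with recursive dates_cte(d) as (
        select date('2022-01-01')
        union all
        -- keep adding one day until the end of the range is reached
        select date(d, '+1 day') from dates_cte where d < '2022-01-10'
    )
    select d from dates_cte
    """
)
rows = [row[0] for row in db.execute("select d from dates order by d")]
print(len(rows), rows[0], rows[-1])
```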


```sql
CREATE TABLE [issue_comments] (
   [html_url] TEXT,
   [issue_url] TEXT,
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [created_at] TEXT,
   [updated_at] TEXT,
   [author_association] TEXT,
   [body] TEXT,
   [reactions] TEXT,
   [issue] INTEGER REFERENCES [issues]([id])
, [performed_via_github_app] TEXT);
CREATE INDEX [idx_issue_comments_issue]
    ON [issue_comments] ([issue]);
CREATE INDEX [idx_issue_comments_user]
    ON [issue_comments] ([user]);
```
