from pluggy import HookimplMarker

ModuleNotFoundError: No module named 'pluggy'

```

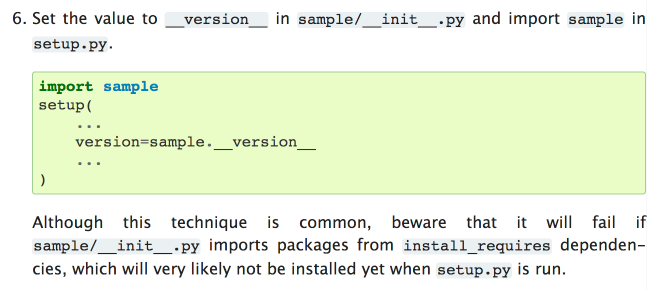

Looks like I've run into point 6 on https://packaging.python.org/guides/single-sourcing-package-version/ :

",17608,

381455054,"I think Vega-Lite is the way to go here: https://vega.github.io/vega-lite/

I've been playing around with it and Datasette with some really positive initial results:

https://vega.github.io/editor/#/gist/vega-lite/simonw/89100ce80573d062d70f780d10e5e609/decada131575825875c0a076e418c661c2adb014/vice-shootings-gender-race-by-department.vl.json

https://vega.github.io/editor/#/gist/vega-lite/simonw/5f69fbe29380b0d5d95f31a385f49ee4/7087b64df03cf9dba44a5258a606f29182cb8619/trees-san-francisco.vl.json",17608,

381456434,"The easiest way to implement this in Python 2 would be `execfile(...)` - but that was removed in Python 3. According to https://stackoverflow.com/a/437857/6083 `2to3` replaces that with this, which ensures the filename is associated with the code for debugging purposes:

```

with open(""somefile.py"") as f:

code = compile(f.read(), ""somefile.py"", 'exec')

exec(code, global_vars, local_vars)

```

Implementing it this way would force this kind of plugin to be self-contained in a single file. I think that's OK: if you want a more complex plugin you can use the standard pluggy-powered setuptools mechanism to build it.",17608,

381462005,This needs unit tests. I also need to manually test the `datasette package` and `datesette publish` commands.,17608,

381478217,"Here's the result of running:

datasette publish now fivethirtyeight.db \

--plugins-dir=plugins/ --title=""FiveThirtyEight"" --branch=plugins-dir

https://datasette-phjtvzwwzl.now.sh/fivethirtyeight-2628db9?sql=select+convert_units%28100%2C+%27m%27%2C+%27ft%27%29

Where `plugins/pint_plugin.py` contains the following:

```

from datasette import hookimpl

import pint

ureg = pint.UnitRegistry()

@hookimpl

def prepare_connection(conn):

def convert_units(amount, from_, to_):

""select convert_units(100, 'm', 'ft');""

return (amount * ureg(from_)).to(to_).to_tuple()[0]

conn.create_function('convert_units', 3, convert_units)

```",17608,

381478253,"This worked as well:

datasette package fivethirtyeight.db \

--plugins-dir=plugins/ --title=""FiveThirtyEight"" --branch=plugins-dir

",17608,

381481990,Added unit tests in 33c6bcadb962457be6b0c7f369826b404e2bcef5,17608,

381482407,"Here's the result of running this:

datasette publish heroku fivethirtyeight.db \

--plugins-dir=plugins/ --title=""FiveThirtyEight"" --branch=plugins-dir

https://intense-river-24599.herokuapp.com/fivethirtyeight-2628db9?sql=select+convert_units%28100%2C+%27m%27%2C+%27ft%27%29",17608,

381483301,I think this is a good improvement. If you fix the tests I'll merge it.,17608,

381488049,"I think this is pretty hard. @coleifer has done some work in this direction, including https://github.com/coleifer/pysqlite3 which ports the standalone pysqlite module to Python 3. ",17608,

381490361,"Packaging JS and CSS in a pip installable wheel is fiddly but possible. http://peak.telecommunity.com/DevCenter/PythonEggs#accessing-package-resources

from pkg_resources import resource_string

foo_config = resource_string(__name__, 'foo.conf')",17608,

381491707,This looks like a good example: https://github.com/funkey/nyroglancer/commit/d4438ab42171360b2b8e9020f672846dd70c8d80,17608,

381602005,I don't think it should be too difficult... you can look at what @ghaering did with pysqlite (and similarly what I copied for pysqlite3). You would theoretically take an amalgamation build of Sqlite (all code in a single .c and .h file). The `AmalgamationLibSqliteBuilder` class detects the presence of this amalgamated source file and builds a statically-linked pysqlite.,17608,

381611738,I should check if it's possible to have two template registration function plugins in a single plugin module. If it isn't maybe I should use class plugins instead of module plugins.,17608,

381612585,`resource_stream` returns a file-like object which may be better for serving from Sanic.,17608,

381621338,"Annoyingly, the following only results in the last of the two `prepare_connection` hooks being registered:

```

from datasette import hookimpl

import pint

import random

ureg = pint.UnitRegistry()

@hookimpl

def prepare_connection(conn):

def convert_units(amount, from_, to_):

""select convert_units(100, 'm', 'ft');""

return (amount * ureg(from_)).to(to_).to_tuple()[0]

conn.create_function('convert_units', 3, convert_units)

@hookimpl

def prepare_connection(conn):

conn.create_function('random_integer', 2, random.randint)

```",17608,

381622793,"I think that's OK. The two plugins I've implemented so far (`prepare_connection` and `prepare_jinja2_environment`) both make sense if they can only be defined once-per-plugin. For the moment I'll assume I can define future hooks to work well with the same limitation.

The syntactic sugar idea in #220 can help here too.",17608,

381643173,"Yikes, definitely a bug.",17608,

381644355,"So there are two tricky problems to solve here:

* I need a way of encoding `null` into that `_next=` that is unambiguous from the string `None` or `null`. This means introducing some kind of escaping mechanism in those strings. I already use URL encoding as part of the construction of those components here, maybe that can help here?

* I need to figure out what the SQL should be for the ""next"" set of results if the previous value was null. Thankfully we use the primary key as a tie-breaker so this shouldn't be impossible.",17608,

381645274,"Relevant code:

https://github.com/simonw/datasette/blob/904f1c75a3c17671d25c53b91e177c249d14ab3b/datasette/app.py#L828-L832",17608,

381645973,"I could use `$null` as a magic value that means None. Since I'm applying `quote_plus()` to actual values, any legit strings that look like this will be encoded as `%24null`:

```

>>> urllib.parse.quote_plus('$null')

'%24null'

```",17608,

381648053,"I think the correct SQL is this: https://datasette-issue-189-demo-3.now.sh/salaries-7859114-7859114?sql=select+rowid%2C+*+from+%5B2017+Maryland+state+salaries%5D%0D%0Awhere+%28middle_initial+is+not+null+or+%28middle_initial+is+null+and+rowid+%3E+%3Ap0%29%29%0D%0Aorder+by+middle_initial+limit+101&p0=391

```

select rowid, * from [2017 Maryland state salaries]

where (middle_initial is not null or (middle_initial is null and rowid > :p0))

order by middle_initial limit 101

```

Though this will also need to be taken into account for #198 ",17608,

381649140,But what would that SQL look like for `_sort_desc`?,17608,

381649437,"Here's where that SQL gets constructed at the moment:

https://github.com/simonw/datasette/blob/10a34f995c70daa37a8a2aa02c3135a4b023a24c/datasette/app.py#L761-L771",17608,

381738137,"Tests now fixed, honest. The failing test on Travis looks like an intermittent sqlite failure which should resolve itself on a retry...",17608,

381763651,"Ah, I had no idea you could bind python functions into sqlite!

I think the primary purpose of this issue has been served now - I'm going to close this and create a new issue for the only bit of this that hasn't been touched yet, which is (optionally) exposing units in the JSON API.",17608,

381777108,This could also help workaround the current predicament that a single plugin can only define one prepare_connection hook.,17608,

381786522,"Weird... tests are failing in Travis, despite passing on my local machine. https://travis-ci.org/simonw/datasette/builds/367423706",17608,

381788051,Still failing. This is very odd.,17608,

381794744,I'm reverting this out of master until I can figure out why the tests are failing.,17608,

381798786,"Here's the test that's failing:

https://github.com/simonw/datasette/blob/59a3aa859c0e782aeda9a515b1b52c358e8458a2/tests/test_api.py#L437-L470

I got Travis to spit out the `fetched` and `expected` variables.

`expected` has 201 items in it and is identical to what I get on my local laptop.

`fetched` has 250 items in it, so it's clearly different from my local environment.

I've managed to replicate the bug in production! I created a test database like this:

python tests/fixtures.py sortable.db

Then deployed that database like so:

datasette publish now sortable.db \

--extra-options=""--page_size=50"" --branch=debug-travis-issue-216

And... if you click ""next"" on this page https://datasette-issue-216-pagination.now.sh/sortable-5679797/sortable?_sort_desc=sortable_with_nulls five times you get back 250 results, when you should only get back 201.",17608,

381799267,"The version that I deployed which exhibits the bug is running SQLite `3.8.7.1` - https://datasette-issue-216-pagination.now.sh/sortable-5679797?sql=select+sqlite_version%28%29

The version that I have running locally which does NOT exhibit the bug is running SQLite `3.23.0`",17608,

381799408,"... which is VERY surprising, because `3.23.0` only came out on 2nd April this year: https://www.sqlite.org/changes.html - I have no idea how I came to be running that version on my laptop.",17608,

381801302,"This is the SQL that returns differing results in production and on my laptop: https://datasette-issue-216-pagination.now.sh/sortable-5679797?sql=select+%2A+from+sortable+where+%28sortable_with_nulls+is+null+and+%28%28pk1+%3E+%3Ap0%29%0A++or%0A%28pk1+%3D+%3Ap0+and+pk2+%3E+%3Ap1%29%29%29+order+by+sortable_with_nulls+desc+limit+51&p0=b&p1=t

```

select * from sortable where (sortable_with_nulls is null and ((pk1 > :p0)

or

(pk1 = :p0 and pk2 > :p1))) order by sortable_with_nulls desc limit 51

```

I think that `order by sortable_with_nulls desc` bit is at fault - the primary keys should be included in that order by as well.

Sure enough, changing the query to this one returns the same results across both environments:

```

select * from sortable where (sortable_with_nulls is null and ((pk1 > :p0)

or

(pk1 = :p0 and pk2 > :p1))) order by sortable_with_nulls desc, pk1, pk2 limit 51

```",17608,

381803157,Fixed!,17608,

381809998,I just shipped Datasette 0.19 with where I'm at so far: https://github.com/simonw/datasette/releases/tag/0.19,17608,

381905593,"I've added another commit which puts classes a class on each `` by default with its column name, and I've also made the PK column bold.

Unfortunately the tests are still failing on 3.6, which is weird. I can't reproduce locally...",17608,

382038613,"I figured out the recipe for bundling static assets in a plugin: https://github.com/simonw/datasette-plugin-demos/commit/26c5548f4ab7c6cc6d398df17767950be50d0edf (and then `python3 setup.py bdist_wheel`)

Having done that, I ran `pip install ../datasette-plugin-demos/dist/datasette_plugin_demos-0.2-py3-none-any.whl` from my Datasette virtual environment and then did the following:

```

>>> import pkg_resources

>>> pkg_resources.resource_stream(

... 'datasette_plugin_demos', 'static/plugin.js'

... ).read()

b""alert('hello');\n""

>>> pkg_resources.resource_filename(

... 'datasette_plugin_demos', 'static/plugin.js'

... )

'..../venv/lib/python3.6/site-packages/datasette_plugin_demos/static/plugin.js'

>>> pkg_resources.resource_string(

... 'datasette_plugin_demos', 'static/plugin.js'

... )

b""alert('hello');\n""

```",17608,

382048582,"One possible option: let plugins bundle their own `static/` directory and then register themselves with Datasette, then have `/-/static-plugins/name-of-plugin/...` serve files from that directory.",17608,

382069980,"Even if we automatically serve ALL `static/` content from installed plugins, we'll still need them to register which files need to be linked to from `extra_css_urls` and `extra_js_urls`",17608,

382205189,"I managed to get a better error message out of that test. The server is returning this (but only on Python 3.6, not on Python 3.5 - and only in Travis, not in my local environment):

```{'error': 'interrupted', 'ok': False, 'status': 400, 'title': 'Invalid SQL'}```

https://travis-ci.org/simonw/datasette/jobs/367929134",17608,

382210976,"OK, aaf59db570ab7688af72c08bb5bc1edc145e3e07 should mean that the tests pass when I merge that.",17608,

382256729,I added a mechanism for plugins to serve static files and define custom CSS and JS URLs in #214 - see new documentation on http://datasette.readthedocs.io/en/latest/plugins.html#static-assets and http://datasette.readthedocs.io/en/latest/plugins.html#extra-css-urls,17608,

382408128,"Demo:

datasette publish now sortable.db --install datasette-plugin-demos --branch=master

Produced this deployment, with both the `random_integer()` function and the static file from https://github.com/simonw/datasette-plugin-demos/tree/0.2

https://datasette-issue-223.now.sh/-/static-plugins/datasette_plugin_demos/plugin.js

https://datasette-issue-223.now.sh/sortable-4bbaa6f?sql=select+random_integer%280%2C+10%29

",17608,

382409989,"Tested on Heroku as well.

datasette publish heroku sortable.db --install datasette-plugin-demos --branch=master

https://morning-tor-45944.herokuapp.com/-/static-plugins/datasette_plugin_demos/plugin.js

https://morning-tor-45944.herokuapp.com/sortable-4bbaa6f?sql=select+random_integer%280%2C+10%29",17608,

382413121,"And tested `datasette package` - this time exercising the ability to pass more than one `--install` option:

```

$ datasette package sortable.db --branch=master --install requests --install datasette-plugin-demos

Sending build context to Docker daemon 125.4kB

Step 1/7 : FROM python:3

---> 79e1dc9af1c1

Step 2/7 : COPY . /app

---> 6e8e40bce378

Step 3/7 : WORKDIR /app

Removing intermediate container 7cdc9ab20d09

---> f42258c2211f

Step 4/7 : RUN pip install https://github.com/simonw/datasette/archive/master.zip requests datasette-plugin-demos

---> Running in a0f17cec08a4

Collecting ...

Removing intermediate container a0f17cec08a4

---> beea84e73271

Step 5/7 : RUN datasette inspect sortable.db --inspect-file inspect-data.json

---> Running in 4daa28792348

Removing intermediate container 4daa28792348

---> c60312d21b99

Step 6/7 : EXPOSE 8001

---> Running in fa728468482d

Removing intermediate container fa728468482d

---> 8f219a61fddc

Step 7/7 : CMD [""datasette"", ""serve"", ""--host"", ""0.0.0.0"", ""sortable.db"", ""--cors"", ""--port"", ""8001"", ""--inspect-file"", ""inspect-data.json""]

---> Running in cd4eaeb2ce9e

Removing intermediate container cd4eaeb2ce9e

---> 066e257c7c44

Successfully built 066e257c7c44

(venv) datasette $ docker run -p 8081:8001 066e257c7c44

Serve! files=('sortable.db',) on port 8001

[2018-04-18 14:40:18 +0000] [1] [INFO] Goin' Fast @ http://0.0.0.0:8001

[2018-04-18 14:40:18 +0000] [1] [INFO] Starting worker [1]

[2018-04-18 14:46:01 +0000] - (sanic.access)[INFO][1:7]: GET http://localhost:8081/-/static-plugins/datasette_plugin_demos/plugin.js 200 16

``` ",17608,

382616527,"No need to use `PackageLoader` after all, we can use the same mechanism we used for the static path:

https://github.com/simonw/datasette/blob/b55809a1e20986bb2e638b698815a77902e8708d/datasette/utils.py#L694-L695",17608,

382808266,"Maybe this should have a second argument indicating which codepath was being handled. That way plugins could say ""only inject this extra context variable on the row page"".",17608,

382924910,"Hiding tables with the `idx_` prefix should be good enough here, since false positives aren't very harmful.",17608,

382958693,"A better way to do this would be with many different plugin hooks, one for each view.",17608,

382959857,"Plus a generic prepare_context() hook called in the common render method.

prepare_context_table(), prepare_context_row() etc

Arguments are context, request, self (hence can access self.ds)

",17608,

382964794,"What if the context needs to make await calls?

One possible option: plugins can either manipulate the context in place OR they can return an awaitable. If they do that, the caller will await it.",17608,

382966604,Should this differentiate between preparing the data to be sent back as JSON and preparing the context for the template?,17608,

382967238,Maybe prepare_table_data() vs prepare_table_context(),17608,

383109984,Refs #229,17608,

383139889,"I released everything we have so far in [Datasette 0.20](https://github.com/simonw/datasette/releases/tag/0.20) and built and released an example plugin, [datasette-cluster-map](https://pypi.org/project/datasette-cluster-map/). Here's my blog entry about it: https://simonwillison.net/2018/Apr/20/datasette-plugins/",17608,

383140111,Here's a link demonstrating my new plugin: https://datasette-cluster-map-demo.now.sh/polar-bears-455fe3a/USGS_WC_eartags_output_files_2009-2011-Status,17608,

383252624,Thanks!,17608,

383315348,"I could also have an `""autodetect"": false` option for that plugin to turn off autodetecting entirely.

Would be useful if the plugin didn't append its JavaScript in pages that it wasn't used for - that might require making the `extra_js_urls()` hook optionally aware of the columns and table and metadata.",17608,

383398182,"```{

""databases"": {

""database1"": {

""tables"": {

""example_table"": {

""label_column"": ""name""

}

}

}

}

}

```",17608,

383399762,Docs here: http://datasette.readthedocs.io/en/latest/metadata.html#specifying-the-label-column-for-a-table,17608,

383410146,"I built this wrong: my implementation is looking for the `label_column` on the table-being-displayed, but it should be looking for it on the table-the-foreign-key-links-to.",17608,

383727973,"There might also be something clever we can do here with PRAGMA statements: https://stackoverflow.com/questions/14146881/limit-the-maximum-amount-of-memory-sqlite3-uses

And https://www.sqlite.org/pragma.html",17608,

383764533,The `resource` module in he standard library has the ability to set limits on memory usage for the current process: https://pymotw.com/2/resource/,17608,

384362028,"On further thought: this is actually only an issue for immutable deployments to platforms like Zeit Now and Heroku.

As such, adding it to `datasette serve` feels clumsy. Maybe `datasette publish` should instead gain the ability to optionally install an extra mechanism that periodically pulls a fresh copy of `metadata.json` from a URL.",17608,

384500327,"```

{

""databases"": {

""database1"": {

""tables"": {

""example_table"": {

""hidden"": true

}

}

}

}

}

```",17608,

384503873,Documentation: http://datasette.readthedocs.io/en/latest/metadata.html#hiding-tables,17608,

384512192,Documentation: http://datasette.readthedocs.io/en/latest/json_api.html#special-table-arguments,17608,

384675792,"Docs now live at http://datasette.readthedocs.io/

I still need to document a few more parts of the API before closing this.",17608,

384676488,Remaining work for this is tracked in #150,17608,

384678319,"I shipped this last week as the first plugin: https://simonwillison.net/2018/Apr/20/datasette-plugins/

Demo: https://datasette-cluster-map-demo.datasettes.com/polar-bears-455fe3a/USGS_WC_eartags_output_files_2009-2011-Status

Plugin: https://github.com/simonw/datasette-cluster-map",17608,

386309928,Demo: https://datasette-versions-and-shape-demo.now.sh/-/versions,17608,

386310149,"Demos:

* https://datasette-versions-and-shape-demo.now.sh/sf-trees-02c8ef1/qSpecies.json?_shape=array

* https://datasette-versions-and-shape-demo.now.sh/sf-trees-02c8ef1/qSpecies.json?_shape=object

* https://datasette-versions-and-shape-demo.now.sh/sf-trees-02c8ef1/qSpecies.json?_shape=arrays

* https://datasette-versions-and-shape-demo.now.sh/sf-trees-02c8ef1/qSpecies.json?_shape=objects",17608,

386357645,"Even better: use `plugin_manager.list_plugin_distinfo()` from pluggy to get back a list of tuples, the second item in each tuple is a `pkg_resources.DistInfoDistribution` with a `.version` attribute.",17608,

386692333,Demo: https://datasette-plugins-and-max-size-demo.now.sh/-/plugins,17608,

386692534,Demo: https://datasette-plugins-and-max-size-demo.now.sh/sf-trees/Street_Tree_List.json?_size=max,17608,

386840307,Documented here: http://datasette.readthedocs.io/en/latest/json_api.html#special-table-arguments,17608,

386840806,"Demo:

datasette publish now ../datasettes/san-francisco/sf-film-locations.db --branch=master --name datasette-column-search-demo

https://datasette-column-search-demo.now.sh/sf-film-locations/Film_Locations_in_San_Francisco?_search_Locations=justin",17608,

386879509,"We can solve this using the `sqlite_timelimit(conn, 20)` helper, which can tell SQLite to give up after 20ms. We can wrap that around the following SQL:

select distinct COLUMN from TABLE limit 21;

Then we look at the number of rows returned. If it's 21 or more we know that this table had more than 21 distinct values, so we'll treat it as ""unlimited"". Likewise, if the SQL times out before 20ms is up we will skip this introspection.",17608,

386879840,"Here's a quick demo of that exploration: https://datasette-distinct-column-values.now.sh/-/inspect

Example output:

```

{

""antiquities-act/actions_under_antiquities_act"": {

""columns"": [

""current_name"",

""states"",

""original_name"",

""current_agency"",

""action"",

""date"",

""year"",

""pres_or_congress"",

""acres_affected""

],

""count"": 344,

""distinct_values_by_column"": {

""acres_affected"": null,

""action"": null,

""current_agency"": [

""NPS"",

""State of Montana"",

""BLM"",

""State of Arizona"",

""USFS"",

""State of North Dakota"",

""NPS, BLM"",

""State of South Carolina"",

""State of New York"",

""FWS"",

""FWS, NOAA"",

""NPS, FWS"",

""NOAA"",

""BLM, USFS"",

""NOAA, FWS""

],

""current_name"": null,

""date"": null,

""original_name"": null,

""pres_or_congress"": null,

""states"": null,

""year"": null

},

""foreign_keys"": {

""incoming"": [],

""outgoing"": []

},

""fts_table"": null,

""hidden"": false,

""label_column"": null,

""name"": ""antiquities-act/actions_under_antiquities_act"",

""primary_keys"": []

}

}

```",17608,

386879878,If I'm going to expand column introspection in this way it would be useful to also capture column type information.,17608,

388360255,"Do you have an example I can look at?

I think I have a possible route for fixing this, but it's pretty tricky (it involves adding a full SQL statement parser, but that's needed for some other potential improvements as well).

In the meantime, is this causing actual errors for you or is it more of an inconvenience (form fields being displayed that don't actually do anything)?

Another potential solution here could be to allow canned queries to optionally declare their parameters in metadata.json",17608,

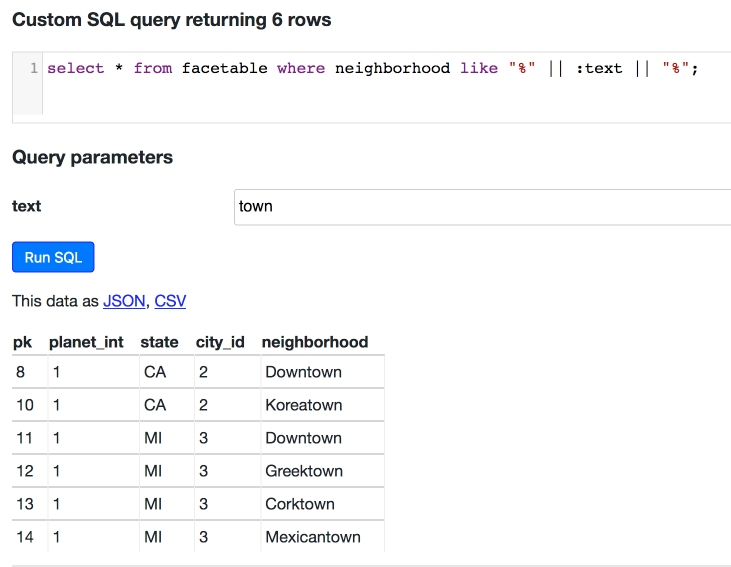

388367027,"An example deployment @ https://datasette-zkcvlwdrhl.now.sh/simplestreams-270f20c/cloudimage?content_id__exact=com.ubuntu.cloud%3Areleased%3Adownload

It is not causing errors, more of an inconvenience. I have worked around it using a `like` query instead. ",17608,

388497467,"Got it, this seems to trigger the problem: https://datasette-zkcvlwdrhl.now.sh/simplestreams-270f20c?sql=select+*+from+cloudimage+where+%22content_id%22+%3D+%22com.ubuntu.cloud%3Areleased%3Adownload%22+order+by+id+limit+10",17608,

388525357,Facet counts will be generated by extra SQL queries with their own aggressive time limit.,17608,

388550742,http://datasette.readthedocs.io/en/latest/full_text_search.html,17608,

388587855,Adding some TODOs to the original description (so they show up as a todo progress bar),17608,

388588011,Initial documentation: http://datasette.readthedocs.io/en/latest/facets.html,17608,

388588998,"A few demos:

* https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9/college-majors%2Fall-ages?_facet=Major_category

* https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9/congress-age%2Fcongress-terms?_facet=chamber&_facet=state&_facet=party&_facet=incumbent

* https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9/bechdel%2Fmovies?_facet=binary&_facet=test",17608,

388589072,"I need to decide how to display these. They currently look like this:

https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9/congress-age%2Fcongress-terms?_facet=chamber&_facet=state&_facet=party&_facet=incumbent&state=MO

",17608,

388625703,"I'm still seeing intermittent Python 3.5 failures due to dictionary ordering differences.

https://travis-ci.org/simonw/datasette/jobs/378356802

```

> assert expected_facet_results == facet_results

E AssertionError: assert {'city': [{'c...alue': 'MI'}]} == {'city': [{'co...alue': 'MI'}]}

E Omitting 1 identical items, use -vv to show

E Differing items:

E {'city': [{'count': 4, 'toggle_url': '_facet=state&_facet=city&state=MI&city=Detroit', 'value': 'Detroit'}]} != {'city': [{'count': 4, 'toggle_url': 'state=MI&_facet=state&_facet=city&city=Detroit', 'value': 'Detroit'}]}

E Use -v to get the full diff

```

To solve these cleanly I need to be able to run Python 3.5 on my local laptop rather than relying on Travis every time.",17608,

388626721,"I managed to get Python 3.5.0 running on my laptop using [pyenv](https://github.com/pyenv/pyenv). Here's the incantation I used:

```

# Install pyenv using homebrew (turns out I already had it)

brew install pyenv

# Check which versions of Python I have installed

pyenv versions

# Install Python 3.5.0

pyenv install 3.5.0

# Figure out where pyenv has been installing things

pyenv root

# Check I can run my newly installed Python 3.5.0

/Users/simonw/.pyenv/versions/3.5.0/bin/python

# Use it to create a new virtualenv

/Users/simonw/.pyenv/versions/3.5.0/bin/python -mvenv venv35

source venv35/bin/activate

# Install datasette into that virtualenv

python setup.py install

```",17608,

388626804,"Unfortunately, running `python setup.py test` on my laptop using Python 3.5.0 in that virtualenv results in a flow of weird Sanic-related errors:

```

File ""/Users/simonw/Dropbox/Development/datasette/venv35/lib/python3.5/site-packages/sanic-0.7.0-py3.5.egg/sanic/testing.py"", line 16, in _local_request

import aiohttp

File ""/Users/simonw/Dropbox/Development/datasette/.eggs/aiohttp-2.3.2-py3.5-macosx-10.13-x86_64.egg/aiohttp/__init__.py"", line 6, in

from .client import * # noqa

File ""/Users/simonw/Dropbox/Development/datasette/.eggs/aiohttp-2.3.2-py3.5-macosx-10.13-x86_64.egg/aiohttp/client.py"", line 13, in

from yarl import URL

File ""/Users/simonw/Dropbox/Development/datasette/.eggs/yarl-1.2.4-py3.5-macosx-10.13-x86_64.egg/yarl/__init__.py"", line 11, in

from .quoting import _Quoter, _Unquoter

File ""/Users/simonw/Dropbox/Development/datasette/.eggs/yarl-1.2.4-py3.5-macosx-10.13-x86_64.egg/yarl/quoting.py"", line 3, in

from typing import Optional, TYPE_CHECKING, cast

ImportError: cannot import name 'TYPE_CHECKING'

```",17608,

388627281,"https://github.com/rtfd/readthedocs.org/issues/3812#issuecomment-373780860 suggests Python 3.5.2 may have the fix.

Yup, that worked:

```

pyenv install 3.5.2

rm -rf venv35

/Users/simonw/.pyenv/versions/3.5.2/bin/python -mvenv venv35

source venv35/bin/activate

# Not sure why I need this in my local environment but I do:

pip install datasette_plugin_demos

python setup.py test

```

This is now giving me the same test failure locally that I am seeing in Travis.",17608,

388628966,"Running specific tests:

```

venv35/bin/pip install pytest beautifulsoup4 aiohttp

venv35/bin/pytest tests/test_utils.py

```",17608,

388645828,I may be able to run the SQL for all of the facet counts in one go using a WITH CTE query - will have to microbenchmark this to make sure it is worthwhile: https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9?sql=with+blah+as+%28select+*+from+%5Bcollege-majors%2Fall-ages%5D%29%0D%0Aselect+*+from+%28select+%22Major_category%22%2C+Major_category%2C+count%28*%29+as+n+from%0D%0Ablah+group+by+Major_category+order+by+n+desc+limit+10%29%0D%0Aunion+all%0D%0Aselect+*+from+%28select+%22Major_category2%22%2C+Major_category%2C+count%28*%29+as+n+from%0D%0Ablah+group+by+Major_category+order+by+n+desc+limit+10%29,17608,

388684356,"I just landed pull request #257 - I haven't refactored the tests, I may do that later if it looks worthwhile.",17608,

388686463,It would be neat if there was a mechanism for calculating aggregates per facet - e.g. calculating the sum() of specific columns against each facet result on https://datasette-facets-demo.now.sh/fivethirtyeight-2628db9/nba-elo%2Fnbaallelo?_facet=lg_id&_facet=fran_id&lg_id=ABA&_facet=team_id,17608,

388784063,"Can I get facets working across many2many relationships?

This would be fiendishly useful, but the querystring and `metadata.json` syntax is non-obvious.",17608,

388784787,"To decide which facets to suggest: for each column, is the unique value count less than the number of rows matching the current query or is it less than 20 (if we are showing more than 20 rows)?

Maybe only do this if there are less than ten non-float columns. Or always try for foreign keys and booleans, then if there are none of those try indexed text and integer fields, then finally try non-indexed text and integer fields but only if there are less than ten.",17608,

388797919,"For M2M to work we will need a mechanism for applying IN queries to the table view, so you can select multiple M2M filters. Maybe this would work:

?_m2m_category=123&_m2m_category=865",17608,

388987044,This work is now happening in the facets branch. Closing this in favor of #255.,17608,

389145872,Activity has now moved to this branch: https://github.com/simonw/datasette/commits/suggested-facets,17608,

389147608,"New demo (published with `datasette publish now --branch=suggested-facets fivethirtyeight.db sf-trees.db --name=datastte-suggested-facets-demo`): https://datasette-suggested-facets-demo.now.sh/fivethirtyeight-2628db9/comic-characters%2Fmarvel-wikia-data

After turning on a couple of suggested facets... https://datasette-suggested-facets-demo.now.sh/fivethirtyeight-2628db9/comic-characters%2Fmarvel-wikia-data?_facet=SEX&_facet=ID

",17608,

389386142,"The URL does persist across deployments already, in that you can use the URL without the hash and it will redirect to the current location. Here's an example of that: https://san-francisco.datasettes.com/sf-trees/Street_Tree_List.json

This also works if you attempt to hit the incorrect hash, e.g. if you have deployed a new version of the database with an updated hash. The old hash will redirect, e.g. https://san-francisco.datasettes.com/sf-trees-c4b972c/Street_Tree_List.json

If you serve Datasette from a HTTP/2 proxy (I've been using Cloudflare for this) you won't even have to pay the cost of the redirect - Datasette sends a `Link: ; rel=preload` header with those redirects, which causes Cloudflare to push out the redirected source as part of that HTTP/2 request. You can fire up the Chrome DevTools to watch this happen.

https://github.com/simonw/datasette/blob/2b79f2bdeb1efa86e0756e741292d625f91cb93d/datasette/views/base.py#L91

All of that said... I'm not at all opposed to this feature. For consistency with other Datasette options (e.g. `--cors`) I'd prefer to do this as an optional argument to the `datasette serve` command - something like this:

datasette serve mydb.db --no-url-hash",17608,

389386919,"I updated that demo to demonstrate the new foreign key label expansions: https://datasette-suggested-facets-demo.now.sh/sf-trees-02c8ef1/Street_Tree_List?_facet=qLegalStatus

",17608,

389397457,Maybe `suggested_facets` should only be calculated for the HTML view.,17608,

389536870,"The principle benefit provided by the hash URLs is that Datasette can set a far-future cache expiry header on every response. This is particularly useful for JavaScript API work as it makes fantastic use of the browser's cache. It also means that if you are serving your API from behind a caching proxy like Cloudflare you get a fantastic cache hit rate.

An option to serve without persistent hashes would also need to turn off the cache headers.

Maybe the option should support both? If you hit a page with the hash in the URL you still get the cache headers, but hits to the URL without the hash serve uncashed content directly.",17608,

389546040,"Latest demo - now with multiple columns: https://datasette-suggested-facets-demo.now.sh/sf-trees-02c8ef1/Street_Tree_List?_facet=qCaretaker&_facet=qCareAssistant&_facet=qLegalStatus

",17608,

389562708,"This is now landed in master, ready for the next release.",17608,

389563719,The underlying mechanics for the `_extras` mechanism described in #262 may help with this.,17608,

389566147,"An official demo instance of Datasette dedicated to this use-case would be useful, especially if it was automatically deployed by Travis for every commit to master that passes the tests.

Maybe there should be a permanent version of it deployed for each released version too?",17608,

389570841,"At the most basic level, this will work based on an extension. Most places you currently put a `.json` extension should also allow a `.csv` extension.

By default this will return the exact results you see on the current page (default max will remain 1000).

## Streaming all records

Where things get interested is *streaming mode*. This will be an option which returns ALL matching records as a streaming CSV file, even if that ends up being millions of records.

I think the best way to build this will be on top of the existing mechanism used to efficiently implement keyset pagination via `_next=` tokens.

## Expanding foreign keys

For tables with foreign key references it would be useful if the CSV format could expand those references to include the labels from `label_column` - maybe via an additional `?_expand=1` option.

When expanding each foreign key column will be shown twice:

rowid,city_id,city_id_label,state",17608,

389572201,"This will likely be implemented in the `BaseView` class, which needs to know how to spot the `.csv` extension, call the underlying JSON generating function and then return the `columns` and `rows` as correctly formatted CSV.

https://github.com/simonw/datasette/blob/9959a9e4deec8e3e178f919e8b494214d5faa7fd/datasette/views/base.py#L201-L207

This means it will take ALL arguments that are available to the `.json` view. It may ignore some (e.g. `_facet=` makes no sense since CSV tables don't have space to show the facet results).

In streaming mode, things will behave a little bit differently - in particular, if `_stream=1` then `_next=` will be forbidden.

It can't include a length header because we don't know how many bytes it will be

CSV output will throw an error if the endpoint doesn't have rows and columns keys eg `/-/inspect.json`

So the implementation...

- looks for the `.csv` extension

- internally fetches the `.json` data instead

- If no `_stream` it just transposes that JSON to CSV with the correct content type header

- If `_stream=1` - checks for `_next=` and throws an error if it was provided

- Otherwise... fetch first page and emit CSV header and first set of rows

- Then start async looping, emitting more CSV rows and following the `_next=` internal reference until done

I like that this takes advantage of efficient pagination. It may not work so well for views which use offset/limit though.

It won't work at all for custom SQL because custom SQL doesn't support _next= pagination. That's fine.

For views... easiest fix is to cut off after first X000 records. That seems OK. View JSON would need to include a property that the mechanism can identify.",17608,

389579363,I started a thread on Twitter discussing various CSV output dialects: https://twitter.com/simonw/status/996783395504979968 - I want to pick defaults which will work as well as possible for whatever tools people might be using to consume the data.,17608,

389579762,"> I basically want someone to tell me which arguments I can pass to Python's csv.writer() function that will result in the least complaints from people who try to parse the results :)

https://twitter.com/simonw/status/996786815938977792",17608,

389592566,Let's provide a CSV Dialect definition too: https://frictionlessdata.io/specs/csv-dialect/ - via https://twitter.com/drewdaraabrams/status/996794915680997382,17608,

389608473,"There are some code examples in this issue which should help with the streaming part: https://github.com/channelcat/sanic/issues/1067

Also https://github.com/channelcat/sanic/blob/master/docs/sanic/streaming.md#response-streaming",17608,

389626715,"> I’d recommend using the Windows-1252 encoding for maximum compatibility, unless you have any characters not in that set, in which case use UTF8 with a byte order mark. Bit of a pain, but some progams (eg various versions of Excel) don’t read UTF8.

**frankieroberto** https://twitter.com/frankieroberto/status/996823071947460616

> There is software that consumes CSV and doesn't speak UTF8!? Huh. Well I can't just use Windows-1252 because I need to support the full UTF8 range of potential data - maybe I should support an optional ?_encoding=windows-1252 argument

**simonw** https://twitter.com/simonw/status/996824677245857793",17608,

389702480,Idea: `?_extra=sqllog` could output a lot of every individual SQL statement that was executed in order to generate the page - useful for seeing how foreign key expansion and faceting actually works.,17608,

389893810,Idea: add a `supports_csv = False` property to `BaseView` and over-ride it to `True` just on the view classes that should support CSV (Table and Row). Slight subtlety: the `DatabaseView` class only supports CSV in the `custom_sql()` path. Maybe that needs to be refactored a bit.,17608,

389894382,"I should definitely sanity check if the `_next=` route really is the most efficient way to build this. It may turn out that iterating over a SQLite cursor with a million rows in it is super-efficient and would provide much more reliable performance (plus solve the problem for retrieving full custom SQL queries where we can't do keyset pagination).

Problem here is that we run SQL queries in a thread pool. A query that returns millions of rows would presumably tie up a SQL thread until it has finished, which could block the server. This may be a reason to stick with `_next=` keyset pagination - since it ensures each SQL thread yields back again after each 1,000 rows.",17608,

389989015,"This is a departure from how Datasette has been designed so far, and it may turn out that it's not feasible or it requires too many philosophical changes to be worthwhile.

If we CAN do it though it would mean Datasette could stay running pointed at a directory on disk and new SQLite databases could be dropped into that directory by another process and served directly as they become available.",17608,

389989615,"From https://www.sqlite.org/c3ref/open.html

> **immutable**: The immutable parameter is a boolean query parameter that indicates that the database file is stored on read-only media. When immutable is set, SQLite assumes that the database file cannot be changed, even by a process with higher privilege, and so the database is opened read-only and all locking and change detection is disabled. Caution: Setting the immutable property on a database file that does in fact change can result in incorrect query results and/or SQLITE_CORRUPT errors. See also: SQLITE_IOCAP_IMMUTABLE.

So this would probably have to be a new mode, `datasette serve --detect-db-changes`, which no longer opens in immutable mode. Or maybe current behavior becomes not-the-default and you opt into it with `datasette serve --immutable`",17608,

390105147,I'm going to add a `/-/limits` page that shows the current limits.,17608,

390105943,Docs: http://datasette.readthedocs.io/en/latest/limits.html#default-facet-size,17608,

390250253,"Shouldn't [versioneer](https://github.com/warner/python-versioneer) do that?

E.g. 0.21+2.g1076c97

You'd need to install via `pip install git+https://github.com/simow/datasette.git` though, this does a temp git clone.",17608,

390433040,Could also support these as optional environment variables - `DATASETTE_NAMEOFSETTING`,17608,

390496376,http://datasette.readthedocs.io/en/latest/config.html,17608,

390577711,"Excellent, I was not aware of the auto redirect to the new hash. My bad

This solves my use case.

I do agree that your suggested --no-url-hash approach is much neater. I will investigate ",17608,

390689406,"I've changed my mind about the way to support external connectors aside of SQLite and I'm working in a more simple style that respects the original Datasette, i.e. less refactoring. I present you [a version of Datasette wich supports other database connectors](https://github.com/jsancho-gpl/datasette/tree/external-connectors) and [a Datasette connector for HDF5/PyTables files](https://github.com/jsancho-gpl/datasette-pytables).",17608,

390707183,"This is definitely a big improvement.

I'd like to refactor the unit tests that cover .inspect() too - currently they are a huge ugly blob at the top of test_api.py",17608,

390707760,"This probably needs to be in a plugin simply because getting Spatialite compiled and installed is a bit of a pain.

It's a great opportunity to expand the plugin hooks in useful ways though.",17608,

390795067,"Well, we do have the capability to detect spatialite so my intention certainly wasn't to require it.

I can see the advantage of having it as a plugin but it does touch a number of points in the code. I think I'm going to attack this by refactoring the necessary bits and seeing where that leads (which was my plan anyway).

I think my main concern is - if I add certain plugin hooks for this, is anything else ever going to use them? I'm not sure I have an answer to that question yet, either way.",17608,

390804333,"We should merge this before refactoring the tests though, because that way we don't couple the new tests to the verification of this change.",17608,

390991640,For SpatiaLite this example may be useful - though it's building 4.3.0 and not 4.4.0: https://github.com/terranodo/spatialite-docker/blob/master/Dockerfile,17608,

390993397,"Useful GitHub code search: https://github.com/search?utf8=✓&q=%22libspatialite-4.4.0%22+%22RC0%22&type=Code

",17608,

390993861,If we can't get `import sqlite3` to load the latest version but we can get `import pysqlite3` to work that's fine too - I can teach Datasette to import the best available version.,17608,

390999055,This shipped in Datasette 0.22. Here's my blog post about it: https://simonwillison.net/2018/May/20/datasette-facets/,17608,

391000659,"Right now the plugin stuff is early enough that I'd like to get as many potential plugin hooks as possible crafted out A much easier to judge if they should be added as actual hooks if we have a working branch prototype of them.

Some kind of mechanism for custom column display is already needed - eg there are columns where I want to say ""render this as markdown"" or ""URLify any links in this text"" - or even ""use this date format"" or ""add commas to this integer"".

You can do it with a custom template but a lower-level mechanism would be nicer. ",17608,

391003285,"That looks great. I don't think it's possible to derive the current commit version from the .zip downloaded directly from GitHub, so needing to pip install via git+https feels reasonable to me.",17608,

391011268,"I think I can do this almost entirely within my existing BaseView class structure.

First, decouple the async data() methods by teaching them to take a querystring object as an argument instead of a Sanic request object. The get() method can then send that new object instead of a request.

Next teach the base class how to obey the ASGI protocol.

I should be able to get support for both Sanic and uvicorn/daphne working in the same codebase, which will make it easy to compare their performance. ",17608,

391025841,"The other reason I mention plugins is that I have an idea to outlaw JavaScript entirely from Datasette core and instead encourage ALL JavaScript functionality to move into plugins.right now that just means CodeMirror. I may set up some of those plugins (like CodeMirror) as default dependencies so you get them from ""pip install datasette"".

I like the neatness of saying that core Datasette is a very simple JSON + HTML application, then encouraging people to go completely wild with JavaScript in the plugins.",17608,

391030083,See also #278,17608,

391050113,"Yup, I'll have a think about it. My current thoughts are for spatialite we'll need to hook into the following places:

* Inspection, so we can detect which columns are geometry columns. (We also currently ignore spatialite tables during inspection, it may be worth moving that to the plugin as well.)

* After data load, so we can convert WKB into the correct intermediate format for display. The alternative here is to alter the select SQL itself and get spatialite to do this conversion, but that strikes me as a bit more complex and possibly not as useful.

* HTML rendering.

* Querying?

The rendering and querying hooks could also potentially be used to move the units support into a plugin.",17608,

391055490,"This is fantastic!

I think I prefer the aesthetics of just ""0.22"" for the version string if it's a tagged release with no additional changes - does that work?

I'd like to continue to provide a tuple that can be imported from the version.py module as well, as seen here:

https://github.com/simonw/datasette/blob/558d9d7bfef3dd633eb16389281b67d42c9bdeef/datasette/version.py#L1

Presumably we can generate that from the versioneer string?

",17608,

391059008,"```python

>>> import sqlite3

>>> sqlite3.sqlite_version

'3.23.1'

>>>

```

running the above in the container seems to show 3.23.1 too so maybe we don't need pysqlite3 at all?",17608,

391073009,"> I think I prefer the aesthetics of just ""0.22"" for the version string if it's a tagged release with no additional changes - does that work?

Yes! That's the default versioneer behaviour.

> I'd like to continue to provide a tuple that can be imported from the version.py module as well, as seen here:

Should work now, it can be a two (for a tagged version), three or four items tuple.

```

In [2]: datasette.__version__

Out[2]: '0.12+292.ga70c2a8.dirty'

In [3]: datasette.__version_info__

Out[3]: ('0', '12+292', 'ga70c2a8', 'dirty')

```",17608,

391073267,"Sorry, just realised you rely on `version` being a module ...",17608,

391076239,This looks amazing! Can't wait to try this out this evening.,17608,

391076458,Yeah let's try this without pysqlite3 and see if we still get the correct version.,17608,

391077700,"Alright, that should work now -- let me know if you would prefer any different behaviour.",17608,

391141391,"I'm going to clean this up for consistency tomorrow morning so hold off

merging until then please

On Tue, May 22, 2018 at 6:34 PM, Simon Willison

wrote:

> Yeah let's try this without pysqlite3 and see if we still get the correct

> version.

>

> —

> You are receiving this because you authored the thread.

> Reply to this email directly, view it on GitHub

> , or mute

> the thread

>

> .

>

",17608,

391190497,"I grabbed just your Dockerfile and built it like this:

docker build . -t datasette

Once it had built, I ran it like this:

docker run -p 8001:8001 -v `pwd`:/mnt datasette \

datasette -p 8001 -h 0.0.0.0 /mnt/fixtures.db \

--load-extension=/usr/local/lib/mod_spatialite.so

(The fixtures.db file is created by running `python tests/fixtures.py fixtures.db`)

Then I visited http://localhost:8001/-/versions and I got this:

{

""datasette"": {

""version"": ""0+unknown""

},

""python"": {

""full"": ""3.6.3 (default, Dec 12 2017, 06:37:05) \n[GCC 6.3.0 20170516]"",

""version"": ""3.6.3""

},

""sqlite"": {

""extensions"": {

""json1"": null,

""spatialite"": ""4.4.0-RC0""

},

""fts_versions"": [

""FTS4"",

""FTS3""

],

""version"": ""3.23.1""

}

}

Fantastic! I'm getting SQLite `3.23.1` and SpatiaLite `4.4.0-RC0`",17608,

391290271,"Running:

```bash

docker run -p 8001:8001 -v `pwd`:/mnt datasette \

datasette -p 8001 -h 0.0.0.0 /mnt/fixtures.db \

--load-extension=/usr/local/lib/mod_spatialite.so

```

is now returning FTS5 enabled in the versions output:

```json

{

""datasette"": {

""version"": ""0.22""

},

""python"": {

""full"": ""3.6.5 (default, May 5 2018, 03:07:21) \n[GCC 6.3.0 20170516]"",

""version"": ""3.6.5""

},

""sqlite"": {

""extensions"": {

""json1"": null,

""spatialite"": ""4.4.0-RC0""

},

""fts_versions"": [

""FTS5"",

""FTS4"",

""FTS3""

],

""version"": ""3.23.1""

}

}

```

The old query didn't work because specifying `(t TEXT)` caused an error",17608,

391354237,@r4vi any objections to me merging this?,17608,

391355030,"No objections;

It's good to go @simonw

On Wed, 23 May 2018, 14:51 Simon Willison, wrote:

> @r4vi any objections to me merging this?

>

> —

> You are receiving this because you were mentioned.

> Reply to this email directly, view it on GitHub

> , or mute

> the thread

>

> .

>

",17608,

391355099,Confirmed fixed: https://fivethirtyeight-datasette-mipwbeadvr.now.sh/fivethirtyeight-5de27e3/nba-elo%2Fnbaallelo?_facet=lg_id&_next=100 ,17608,

391437199,Thank you very much! This is most excellent.,17608,

391437462,I'm afraid I just merged #280 which means this no longer applies. You're very welcome to see if you can further optimize the new Dockerfile though.,17608,

391504199,"I'm not keen on anything that modifies the SQLite file itself on startup - part of the datasette contract is that it should work with any SQLite file you throw at it without having any side-effects.

A neat thing about SQLite is that because everything happens in the same process there's very little additional overhead involved in executing extra SQL queries - even if we ran a query-per-row to transform data in one specific column it shouldn't add more than a few ms to the total page load time (whereas with MySQL all of the extra query overhead would kill us).",17608,

391504757,"That said, it looks like we may be able to use a library like https://github.com/geomet/geomet to run the conversion from WKB entirely in Python space.",17608,

391505930,"> I'm not keen on anything that modifies the SQLite file itself on startup

Ah I didn't mean that - I meant altering the SELECT query to fetch the data so that it ran a spatialite function to transform that specific column.

I think that's less useful as a general-purpose plugin hook though, and it's not that hard to parse the WKB in Python (my default approach would be to use [shapely](https://github.com/Toblerity/Shapely), which is great, but geomet looks like an interesting pure-python alternative).",17608,

391583528,"The challenge here is which database should be the ""default"" database. The first database attached to SQLite is treated as the default - if no database is specified in a query, that's the database that queries will be executed against.

Currently, each database URL in Datasette (e.g. https://san-francisco.datasettes.com/sf-film-locations-84594a7 v.s. https://san-francisco.datasettes.com/sf-trees-ebc2ad9 ) gets its own independent connection, and all queries within that base URL run against that database.

If we're going to attach multiple databases to the same connection, how do we set which database gets to be the default?

The easiest thing to do here will be to have a special database (maybe which is turned off by default and can be enabled using `datasette serve --enable-cross-database-joins` or similar) which attaches to ALL the databases. Perhaps it starts as an in-memory database, maybe at `/memory`?

",17608,

391584112,"I built a very rough prototype of this to prove it could work. It's deployed here - and here's an example of a query that joins across two different databases:

https://datasette-cross-database-joins-prototype.now.sh/memory?sql=select+fivethirtyeight.%5Blove-actually%2Flove_actually_adjacencies%5D.rowid%2C%0D%0Afivethirtyeight.%5Blove-actually%2Flove_actually_adjacencies%5D.actors%2C%0D%0A%5Bgoogle-trends%5D.%5B20150430_UKDebate%5D.city%0D%0Afrom+fivethirtyeight.%5Blove-actually%2Flove_actually_adjacencies%5D%0D%0Ajoin+%5Bgoogle-trends%5D.%5B20150430_UKDebate%5D%0D%0A++on+%5Bgoogle-trends%5D.%5B20150430_UKDebate%5D.rowid+%3D+fivethirtyeight.%5Blove-actually%2Flove_actually_adjacencies%5D.rowid

```

select fivethirtyeight.[love-actually/love_actually_adjacencies].rowid,

fivethirtyeight.[love-actually/love_actually_adjacencies].actors,

[google-trends].[20150430_UKDebate].city

from fivethirtyeight.[love-actually/love_actually_adjacencies]

join [google-trends].[20150430_UKDebate]

on [google-trends].[20150430_UKDebate].rowid = fivethirtyeight.[love-actually/love_actually_adjacencies].rowid

```

I deployed it like this:

datasette publish now --branch=cross-database-joins fivethirtyeight.db google-trends.db --name=datasette-cross-database-joins-prototype

",17608,

391584366,"I used some pretty ugly hacks, like faking an entire `.inspect()` block for the `:memory:` database just to get past the errors I was seeing. To ship this as a feature it will need quite a bit of code refactoring to make those hacks unnecessary.

https://github.com/simonw/datasette/blob/7a3040f5782375373b2b66e5969bc2c49b3a6f0e/datasette/views/database.py#L18-L26",17608,

391584527,Rather than stealing the `/memory` namespace for this it would be nicer if these cross-database joins could be executed at the very top-level URL of the Datasette instance - `https://example.com/?sql=...`,17608,

391752218,Most of the time Datasette is used with just a single database file. So maybe it makes sense for this option to be turned on by default and to ALWAYS be available on the Datasette instance homepage unless the user has explicitly disabled it.,17608,

391752425,"This would make Datasett's SQL features a lot more instantly obvious to people who land on a homepage, which is probably a good thing.",17608,

391752629,"Should this support canned queries too? I think it should, though that raises interesting questions regarding their URL structure.",17608,

391752882,Another option: give this the `/-/all` URL namespace.,17608,

391754506,"Giving it `/all/` would be easier since that way the existing URL routes (including canned queries) would all work... but I would have to teach it NOT to expect a database content hash on that URL.

Or maybe it should still have a content hash (to enable far-future cache expiry headers on query results) but the hash should be constructed out of all of the other database hashes concatenated together.

That way the URLs would be `/all-5de27e3` and `/all-5de27e3/canned-query-name`

Only downside: this would make it impossible to have a database file with the name `all.db`. I think that's probably an OK trade-off. You could turn the feature off with a config flag if you really want to use that filename (for whatever reason).

How about `/-all-5de27e3/` instead to avoid collisions?",17608,

391755300,On the `/-all-5de27e3` page we can show the regular https://fivethirtyeight.datasettes.com/fivethirtyeight-5de27e3 interface but instead of the list of tables we can show a list of attached databases plus some help text showing how to construct a cross-database join.,17608,

391756841,"For an example query that pre-populates that textarea... maybe a UNION that pulls the first 10 rows from the first table of each of the first two databases?

```

select * from (select rowid, actors from fivethirtyeight.[love-actually/love_actually_adjacencies] limit 10)

union all

select * from (select rowid, city from [google-trends].[20150430_UKDebate] limit 10)

```

https://datasette-cross-database-joins-prototype.now.sh/memory?sql=select+*+from+%28select+rowid%2C+actors+from+fivethirtyeight.%5Blove-actually%2Flove_actually_adjacencies%5D+limit+10%29%0D%0A+++union+all%0D%0Aselect+*+from+%28select+rowid%2C+city+from+%5Bgoogle-trends%5D.%5B20150430_UKDebate%5D+limit+10%29",17608,

391765706,I'm not crazy about the `enable_` prefix on these.,17608,

391765973,This will also give us a mechanism for turning on and off the cross-database joins feature from #283,17608,

391766420,"Maybe `allow_sql`, `allow_facet` and `allow_download`",17608,

391768302,I like `/-/all-5de27e3` for this (with `/-/all` redirecting to the correct hash),17608,

391771202,"So the lookup priority order should be:

* table level in metadata

* database level in metadata

* root level in metadata

* `--config` options passed to `datasette serve`

* `DATASETTE_X` environment variables",17608,

391771658,It feels slightly weird continuing to call it `metadata.json` as it starts to grow support for config options (which already started with the `units` and `facets` keys) but I can live with that.,17608,

391912392,`allow_sql` should only affect the `?sql=` parameter and whether or not the form is displayed. You should still be able to use and execute canned queries even if this option is turned off.,17608,

391950691,"Demo:

datasette publish now --branch=master fixtures.db \

--source=""#284 Demo"" \

--source_url=""https://github.com/simonw/datasette/issues/284"" \

--extra-options ""--config allow_sql:off --config allow_facet:off --config allow_download:off"" \

--name=datasette-demo-284

now alias https://datasette-demo-284-jogjwngegj.now.sh datasette-demo-284.now.sh

https://datasette-demo-284.now.sh/

Note the following:

* https://datasette-demo-284.now.sh/fixtures-fda0fea has no SQL input textarea

* https://datasette-demo-284.now.sh/fixtures-fda0fea has no database download link

* https://datasette-demo-284.now.sh/fixtures-fda0fea.db returns 403 forbidden

* https://datasette-demo-284.now.sh/fixtures-fda0fea?sql=select%20*%20from%20sqlite_master throws error 400

* https://datasette-demo-284.now.sh/fixtures-fda0fea/facetable shows no suggested facets

* https://datasette-demo-284.now.sh/fixtures-fda0fea/facetable?_facet=city_id throws error 400",17608,

392118755,"Thinking about this further, maybe I should embrace ASGI turtles-all-the-way-down and teach each datasette view class to take a scope to the constructor and act entirely as an ASGI component. Would be a nice way of diving deep into ASGI and I can add utility helpers for things like querystring evaluation as I need them.",17608,

392121500,"A few extra thoughts:

* Some users may want to opt out of this. We could have `--config version_in_hash:false`

* should this affect the filename for the downloadable copy of the SQLite database? Maybe that should stay as just the hash of the contents, but that's a fair bit more complex

* What about users who stick with the same version of datasette but deploy changes to their custom templates - how can we help them cache bust? Maybe with `--config cache_version:2`",17608,

392121743,This is also a great excuse to finally write up some detailed documentation on Datasette's caching strategy,17608,

392121905,See also #286,17608,

392212119,"This should detect any table which can be linked to the current table via some other table, based on the other table having a foreign key to them both.

These join tables could be arbitrarily complicated. They might have foreign keys to more than two other tables, maybe even multiple foreign keys to the same column.

Ideally M2M defection would catch all of these cases. Maybe the resulting inspect data looks something like this:

```

""artists"": {

...

""m2m"": [{

""other_table"": ""festivals"",

""through"": ""performances"",

""our_fk"": ""artist_id"",

""other_fk"": ""performance_id""

}]

```

Let's ignore compound primary keys: we k it detect m2m relationships where the join table has foreign keys to a single primary key on the other two tables.",17608,

392214791,"We may need to derive a usable name for each of these relationships that can be used in eg querystring parameters.

The name of the join table is a reasonable choice here. Say the join table is called `event_tags` - the querystring for returning all events that are tagged `badger` could be `/db/events?_m2m_event_tags__tag=badger` perhaps?

But what if `event_tags` has more than one foreign key back to `events`? Might need to specify the column in `events` that is referred back to by `event_tags` somehow in that case.",17608,

392279508,Related: I started the documentation for using SpatiaLite with Datasette here: https://datasette.readthedocs.io/en/latest/spatialite.html,17608,

392279644,"I've been thinking a bit about modifying the SQL select statement used for the table view recently. I've run into a few examples of SQLite database that slow to a crawl when viewed with datasette because the rows are too big, so there's definitely scope for supporting custom select clauses (avoiding some columns, showing length(colname) for others).",17608,

392288531,"This might also be an opportunity to support an __in= operator - though that's an odd one as it acts equivalent to an OR whereas every other parameter is combined with an AND

UPDATE 15th April 2019: I implemented `?column__in=` in a different way, see #433 ",17608,

392288990,An example of a query where you might want to use this option: https://fivethirtyeight.datasettes.com/fivethirtyeight-5de27e3?sql=select+rowid%2C+*+from+%5Balcohol-consumption%2Fdrinks%5D+order+by+random%28%29+limit+1,17608,

392291605,Documented here https://datasette.readthedocs.io/en/latest/json_api.html#special-table-arguments and here: https://datasette.readthedocs.io/en/latest/config.html#default-cache-ttl,17608,

392291716,Demo: hit refresh on https://fivethirtyeight.datasettes.com/fivethirtyeight-5de27e3?sql=select+rowid%2C+*+from+%5Balcohol-consumption%2Fdrinks%5D+order+by+random%28%29+limit+1&_ttl=0,17608,

392296758,Docs: https://datasette.readthedocs.io/en/latest/json_api.html#different-shapes,17608,

392297392,"I ran a very rough micro-benchmark on the new `num_sql_threads` config option.

datasette --config num_sql_threads:1 fivethirtyeight.db

Then

ab -n 100 -c 10 'http://127.0.0.1:8011/fivethirtyeight-2628db9/twitter-ratio%2Fsenators'

| Number of threads | Requests/second |

|---|---|

| 1 | 4.57 |

| 3 | 9.77 |

| 10 | 13.53 |

| 20 | 15.24

| 50 | 8.21 |

",17608,

392297508,Documentation: http://datasette.readthedocs.io/en/latest/config.html#num-sql-threads,17608,

392302406,"My first attempt at this was to have plugins depend on each other - so there would be a `datasette-leaflet` plugin which adds Leaflet to the page, and the `datasette-cluster-map` and `datasette-leaflet-geojson` plugins would depend on that plugin.

I tried this and it didn't work, because it turns out the order in which plugins are loaded isn't predictable. `datasette-cluster-map` ended up adding it's script link before Leaflet had been loaded by `datasette-leaflet`, resulting in JavaScript errors.",17608,

392302416,For the moment then I'm going with a really simple solution: when iterating through `extra_css_urls` and `extra_js_urls` de-dupe by URL and avoid outputting the same link twice.,17608,

392302456,The big gap in this solution is conflicting versions: I don't yet have a story for what happens if two plugins attempt to load different versions of Leaflet. ,17608,

392305776,These plugin config options should be exposed to JavaScript as `datasette.config.plugins`,17608,

392316250,It looks like we can use the `geometry_columns` table to introspect which columns are SpatiaLite geometries. It includes a `geometry_type` integer which is documented here: https://www.gaia-gis.it/fossil/libspatialite/wiki?name=switching-to-4.0,17608,

392316306,Relevant to this ticket: I've been playing with a plugin that automatically renders any GeoJSON cells as leaflet maps: https://github.com/simonw/datasette-leaflet-geojson,17608,

392316673,Open question: how should this affect the row page? Just because columns were hidden on the table page doesn't necessarily mean they should be hidden on the row page as well. ,17608,

392316701,I could certainly see people wanting different custom column selects for the row page compared to the table page.,17608,

392338130,"Here's my first sketch at a metadata format for this:

* `columns`: optional list of columns to include - if missing, shows all

* `column_selects`: dictionary mapping column names to alternative select clauses

`column_selects` can also invent new keys and use them to create derived columns. These new keys will be selected at the end of the list of columns UNLESS they are mentioned in `columns`, in which case that sequence will define the order.

Can you facet by things that are customized using `column_selects`? Yes, and let's try running suggested facets against those columns as well.

```

{

""databases"": {

""databasename"": {

""tables"": {

""tablename"": {

""columns"": [

""id"", ""name"", ""size""

],

""column_selects"": {

""name"": ""upper(name)"",

""geo_json"": ""AsGeoJSON(Geometry)""

}

""row_columns"": [...]

""row_column_selects"": {...}

}

```

The `row_columns` and `row_column_selects` properties work the same as the `column*` ones, except they are applied on the row page instead.

If omitted, the `column*` ones will be used on the row page as well.

If you want the row page to switch back to Datasette's default behaviour you can set `""row_columns"": [], ""row_column_selects"": {}`.",17608,

392342269,"Here's the metadata I tried against that first working prototype:

```

{

""databases"": {

""timezones"": {

""tables"": {

""timezones"": {

""columns"": [""PK_UID""],

""column_selects"": {

""upper_tzid"": ""upper(tzid)"",

""Geometry"": ""AsGeoJSON(Geometry)""

}

}

}

},

""wtr"": {

""tables"": {

""license_frequency"": {

""columns"": [""id"", ""license"", ""tx_rx"", ""frequency""],

""column_selects"": {

""latitude"": ""Y(Geometry)"",

""longitude"": ""X(Geometry)""

}

}

}

}

}

}

```

Run using this:

datasette timezones.db wtr.db \

--reload --debug --load-extension=/usr/local/lib/mod_spatialite.dylib \

-m column-metadata.json --config sql_time_limit_ms:10000

Usefully, the `--reload` flag detects changes to the `metadata.json` file as well as Datasette's own Python code.",17608,

392342947,I'd still like to be able to over-ride this using querystring arguments.,17608,

392343690,"Turns out it's actually possible to pull data from other tables using the mechanism in the prototype:

```

{

""databases"": {

""wtr"": {

""tables"": {

""license"": {

""column_selects"": {

""count"": ""(select count(*) from license_frequency where license_frequency.license = license.id)""

}

}

}

}

}

}

```

Performance using this technique is pretty terrible though:

",17608,

392343839,"The more efficient way of doing this kind of count would be to provide a mechanism which can also add extra fragments to a `GROUP BY` clause used for the `SELECT`.

Or... how about a mechanism similar to Django's `prefetch_related` which lets you define extra queries that will be called with a list of primary keys (or values from other columns) and used to populate a new column? A little unconventional but could be extremely useful and efficient.

Related to that: since the per-query overhead in SQLite is tiny, could even define an extra query to be run once-per-row before returning results.",17608,

392345062,There needs to be a way to turn this off and return to Datasette default bahviour. Maybe a `?_raw=1` querystring parameter for the table view.,17608,

392350495,"Querystring design:

* `?_column=a&_column=b` - equivalent of `""columns"": [""a"", ""b""]` in `metadata.json`

* `?_select_nameupper=upper(name)` - equivalent of `""column_selects"": {""nameupper"": ""upper(name)""}`",17608,

392350568,"If any `?_column=` parameters are provided the metadata version is completely ignored.

",17608,

392350980,"Should `?_raw=1` also turn off foreign key expansions? No, we will eventually provide a separate mechanism for that (or leave it to nerds who care to figure out using JSON or CSV export).",17608,

392568047,Closing this as obsolete since we have facets now.,17608,

392574208,"I'm handling this as separate documentation sections instead, e.g. http://datasette.readthedocs.io/en/latest/spatialite.html",17608,

392574358,Closing this as obsolete in favor of other issues [tagged documentation](https://github.com/simonw/datasette/labels/documentation).,17608,

392574415,I implemented this as `?_ttl=0` in #289 ,17608,

392575160,"I've changed my mind about this.

""Select every record on the 3rd day of the month"" doesn't strike me as an actually useful feature.

""Select every record in 2018 / in May 2018 / on 1st May 2018"", if you are using the SQLite-preferred datestring format, are already supported using LIKE queries (or the startswith filter):

* https://fivethirtyeight.datasettes.com/fivethirtyeight/inconvenient-sequel%2Fratings?timestamp__startswith=2017

* https://fivethirtyeight.datasettes.com/fivethirtyeight/inconvenient-sequel%2Fratings?timestamp__startswith=2017-08

* https://fivethirtyeight.datasettes.com/fivethirtyeight/inconvenient-sequel%2Fratings?timestamp__startswith=2017-08-29

",17608,

392575448,"This shouldn't be a comma-separated list, it should be an argument you can pass multiple times to better match #255 and #292

Maybe `?_json=foo&_json=bar`

",17608,

392580715,"Oops, that commit should have referenced #121 ",17608,

392580902,"Implemented in 76d11eb768e2f05f593c4d37a25280c0fcdf8fd6

Documented here: http://datasette.readthedocs.io/en/latest/json_api.html#special-json-arguments",17608,

392600866,"This is an accidental duplicate, work is now taking place in #266",17608,

392601114,I think the way Datasette executes SQL queries in a thread pool introduced in #45 is a good solution for this ticket.,17608,

392601478,I'm going to close this as WONTFIX for the moment. Once Plugins #14 grows the ability to add extra URL paths and views someone who needs this could build it as a plugin instead.,17608,

392602334,"The `/.json` endpoint is more of an implementation detail of the homepage at this point. A better, documented ( http://datasette.readthedocs.io/en/stable/introspection.html#inspect ) endpoint for finding all of the databases and tables is https://parlgov.datasettes.com/-/inspect.json",17608,

392602558,I'll have the error message display a link to the documentation.,17608,

392605574,"

",17608,

392606044,"The other major limitation of APSW is its treatment of unicode: https://rogerbinns.github.io/apsw/types.html - it tells you that it is your responsibility to ensure that TEXT columns in your SQLite database are correctly encoded.

Since Datasette is designed to work against ANY SQLite database that someone may have already created, I see that as a show-stopping limitation.

Thanks to https://github.com/coleifer/sqlite-vtfunc I now have a working mechanism for virtual tables (I've even built a demo plugin with them - https://github.com/simonw/datasette-sql-scraper ) which was the main thing that interested me about APSW.

I'm going to close this as WONTFIX - I think Python's built-in `sqlite3` is good enough, and is now so firmly embedded in the project that making it pluggable would be more trouble than it's worth.",17608,

392606418,"> It could also be useful to allow users to import a python file containing custom functions that can that be loaded into scope and made available to custom templates.

That's now covered by the plugins mechanism - you can create plugins that define custom template functions: http://datasette.readthedocs.io/en/stable/plugins.html#prepare-jinja2-environment-env",17608,

392815673,"I'm coming round to the idea that this should be baked into Datasette core - see above referenced issues for some of the explorations I've been doing around this area.

Datasette should absolutely work without SpatiaLite, but it's such a huge bonus part of the SQLite ecosystem that I'm happy to ship features that take advantage of it without being relegated to plugins.

I'm also becoming aware that there aren't really that many other interesting loadable extensions for SQLite. If SpatiaLite was one of dozens I'd feel that a rule that ""anything dependent on an extension lives in a plugin"" would make sense, but as it stands I think 99% of the time the only loadable extensions people will be using will be SpatiaLite and json1 (and json1 is available in the amalgamation anyway).

",17608,

392822050,"I don't know how it happened, but I've somehow got myself into a state where my local SQLite for Python 3 on OS X is `3.23.1`:

```

~ $ python3

Python 3.6.5 (default, Mar 30 2018, 06:41:53)

[GCC 4.2.1 Compatible Apple LLVM 9.0.0 (clang-900.0.39.2)] on darwin

Type ""help"", ""copyright"", ""credits"" or ""license"" for more information.

>>> import sqlite3

>>> sqlite3.connect(':memory:').execute('select sqlite_version()').fetchall()

[('3.23.1',)]

>>>

```

Maybe I did something in homebrew that changed this? I'd love to understand what exactly I did to get to this state.",17608,

392825746,"I haven't had time to look further into this, but if doing this as a plugin results in useful hooks then I think we should do it that way. We could always require the plugin as a standard dependency.

I think this is going to result in quite a bit of refactoring anyway so it's a good time to add hooks regardless.

On the other hand, if we have to add lots of specialist hooks for it then maybe it's worth integrating into the core.",17608,

392828475,"Python standard-library SQLite dynamically links against the system sqlite3. So presumably you installed a more up-to-date sqlite3 somewhere on your `LD_LIBRARY_PATH`.

To compile a statically-linked pysqlite you need to include an amalgamation in the project root when building the extension. Read the relevant setup.py.",17608,

392831543,"I ran an informal survey on twitter and most people were on 3.21 - https://twitter.com/simonw/status/1001487546289815553

Maybe this is from upgrading to the latest OS X release.",17608,

392840811,"Since #275 will allow configs to be overridden at the table and database level it also makes sense to expose a completely evaluated list of configs at:

* `/dbname/-/config`

* `/dbname/tablename/-/config`

Similar to https://fivethirtyeight.datasettes.com/-/config",17608,

392890045,"Just about to ask for this! Move this page https://github.com/simonw/datasette/wiki/Datasettes

into a datasette, with some concept of versioning as well.",17608,

392895733,Do you have an existing example with views?,17608,

392917380,Creating URLs using concatenation as seen in `('https://twitter.com/' || user) as user_url` is likely to have all sorts of useful applications for ad-hoc analysis.,17608,

392918311,Should the `tablename` ones also work for views and canned queries? Probably not.,17608,

392969173,The more time I spend with SpatiaLite the more convinced I am that this should be default behavior. There's nothing useful about the binary Geometry representation - it's not even valid WKB. I'm on board with WKT as the default display in HTML and GeoJSON as the default for `.json`,17608,

393003340,Funny you should mention that... I'm planning on doing that as part of the official Datasette website at some point soon. A Datasette instance that lists other Datasette instances feels pleasingly appropriate.,17608,