{"id": 273775212, "node_id": "MDU6SXNzdWUyNzM3NzUyMTI=", "number": 88, "title": "Add NHS England Hospitals example to wiki", "user": {"value": 15543, "label": "tomdyson"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 4, "created_at": "2017-11-14T12:29:10Z", "updated_at": "2021-03-22T23:46:36Z", "closed_at": "2017-11-14T22:54:06Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "https://nhs-england-hospitals.now.sh\r\n\r\nand an associated map visualisation:\r\n\r\nhttp://run.plnkr.co/preview/cj9zlf1qc0003414y90ajkwpk/\r\n\r\nDatasette is wonderful!\r\n\r\n", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/88/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 275814941, "node_id": "MDU6SXNzdWUyNzU4MTQ5NDE=", "number": 141, "title": "datasette publish can fail if /tmp is on a different device", "user": {"value": 21148, "label": "jacobian"}, "state": "closed", "locked": 0, "assignee": null, "milestone": {"value": 2949431, "label": "Custom templates edition"}, "comments": 5, "created_at": "2017-11-21T18:28:05Z", "updated_at": "2020-04-29T03:27:54Z", "closed_at": "2017-12-08T16:06:36Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "`datasette publish` uses hard links to avoid copying the db into a tmp directory. This can fail if `/tmp` is on another device, because hardlinks can't cross devices. You'll see something like this:\r\n\r\n```\r\n$ datasette publish heroku whatever.db\r\n...\r\nOSError: [Errno 18] Invalid cross-device link: '/mnt/c/Users/jacob/c/datasette/whatever.db' -> '/tmp/tmpvxq2yof6/whatever.db'\r\n```\r\n[In my case this is failing because I'm on a Windows machine, using WSL, so my code's on a different virtual filesystem from the Linux subsystem, Because Reasons.]\r\n\r\nI'm not sure if it's possible to detect this (can you figure out which device `/tmp` is on?), or what the fallback should be (soft link? copy?).", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/141/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 286938589, "node_id": "MDU6SXNzdWUyODY5Mzg1ODk=", "number": 177, "title": "Publishing to Heroku - metadata file not uploaded?", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2018-01-09T01:04:31Z", "updated_at": "2018-01-25T16:45:32Z", "closed_at": "2018-01-25T16:45:32Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Trying to run *datasette* (version 0.14) on Heroku with a `metadata.json` doesn't seem to be picking up the `metadata.json` file? \r\n\r\nOn a Mac with dodgy `tar` support:\r\n\r\n```\r\n \u25b8 Couldn't detect GNU tar. Builds could fail due to decompression errors\r\n \u25b8 See\r\n \u25b8 https://devcenter.heroku.com/articles/platform-api-deploying-slugs#create-slug-archive\r\n \u25b8 Please install it, or specify the '--tar' option\r\n \u25b8 Falling back to node's built-in compressor\r\n```\r\n\r\nCould that be causing the issue?\r\n\r\nAlso, I'm not seeing custom query links anywhere obvious when I run the metadata file with a local *datasette* server?\r\n\r\n", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/177/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 291639118, "node_id": "MDU6SXNzdWUyOTE2MzkxMTg=", "number": 183, "title": "Custom Queries - escaping strings", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2018-01-25T16:49:13Z", "updated_at": "2019-06-24T06:45:07Z", "closed_at": "2019-06-24T06:45:07Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "If a SQLite table column name contains spaces, they are usually referred to in double quotes:\r\n\r\n`SELECT * FROM mytable WHERE \"gappy column name\"=\"my value\";`\r\n\r\nIn the JSON metadata file, this is passed by escaping the double quotes:\r\n\r\n`\"queries\": {\"my query\": \"SELECT * FROM mytable WHERE \\\"gappy column name\\\"=\\\"my value\\\";\"}`\r\n\r\nWhen specifying a custom query in `metadata.json` using double quotes, these are then rendered in the *datasette* query box using single quotes:\r\n\r\n`SELECT * FROM mytable WHERE 'gappy column name'='my value';`\r\n\r\nwhich does not work.\r\n\r\nAlternatively, a valid custom query can be passed using backticks (\\`) to quote the column name and single (unescaped) quotes for the matched value:\r\n\r\n``\"queries\": {\"my query\": \"SELECT * FROM mytable WHERE `gappy column name`='my value';\"}``\r\n", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/183/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 313837303, "node_id": "MDU6SXNzdWUzMTM4MzczMDM=", "number": 203, "title": "Support for units", "user": {"value": 45057, "label": "russss"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 10, "created_at": "2018-04-12T18:24:28Z", "updated_at": "2018-04-16T21:59:17Z", "closed_at": "2018-04-16T21:59:17Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "It would be nice to be able to attach a unit to a column in the metadata, and have it rendered with that unit (and SI prefix) when it's displayed.\r\n\r\nIt would also be nice to support entering the prefixes in variables when querying.\r\n\r\nWith my radio licensing app I've put all frequencies in Hz. It's easy enough to special-case the row rendering to add the SI prefixes, but it's pretty unusable when querying by that field.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/203/reactions\", \"total_count\": 1, \"+1\": 1, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 314834783, "node_id": "MDU6SXNzdWUzMTQ4MzQ3ODM=", "number": 219, "title": "Expose units in the JSON API?", "user": {"value": 45057, "label": "russss"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2018-04-16T22:04:25Z", "updated_at": "2018-04-16T22:04:25Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "From #203: it would be nice for the JSON API to (optionally) return columns rendered with units in them - if, for example, you're consuming the JSON to render the rows on a map.\r\n\r\nI'm not entirely sure how useful this will be though - at the moment my map queries are custom SQL queries (a few have joins in, the rest might be fetching large amounts of data so it makes sense to limit columns fetched). Perhaps the SQL function is a better approach in general.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/219/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 324835838, "node_id": "MDU6SXNzdWUzMjQ4MzU4Mzg=", "number": 276, "title": "Handle spatialite geometry columns better", "user": {"value": 45057, "label": "russss"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 21, "created_at": "2018-05-21T08:46:55Z", "updated_at": "2022-03-21T22:22:20Z", "closed_at": "2022-03-21T22:22:20Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "I'd like to see spatialite geometry columns rendered more sensibly - at the moment they come through as well-known-binary unless you use custom SQL, and WKB isn't of much use to anyone on the web.\r\n\r\nIn HTML: they should be shown either as simple lat/long (if it's just a point, for example), or as a sensible placeholder if they're more complex geometries.\r\n\r\nIn JSON: they should be GeoJSON geometries, (which means they can be automatically fed into a leaflet map with no further messing around).\r\n\r\nIn CSV: they should be WKT.\r\n\r\nI briefly wondered if this should go into a plugin, but I suspect it needs hooking in at a deeper level than the plugin architecture will support any time soon.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/276/reactions\", \"total_count\": 1, \"+1\": 1, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 336924199, "node_id": "MDU6SXNzdWUzMzY5MjQxOTk=", "number": 330, "title": "Limit text display in cells containing large amounts of text", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 4, "created_at": "2018-06-29T09:15:22Z", "updated_at": "2018-07-24T04:53:20Z", "closed_at": "2018-07-10T16:20:48Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "The default preview of a database shows all columns (is the row count limited?) which is fine in many cases but can take a long time to load / offer a large overhead if the table is a SpatiaLite table containing geometry columns that include large shapefiles.\r\n\r\nWould it make sense to have a setting that can limit the amount of text displayed in any given cell in the table preview, or (less useful?) suppress (with notification) the display of overlong columns unless enabled by the user?\r\n\r\nAn issue then arises if a user does want to see all the text in a cell:\r\n\r\n 1) for a particular cell;\r\n 2) for every cell in the table;\r\n 3) for all cells in a particular column or columns\r\n\r\n(I haven't checked but what if a column contains e.g. raw image data? Does this display as raw data? Or can this be rendered in a context aware way as an image preview? I guess a custom template would be one way to do that?)", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/330/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 336936010, "node_id": "MDU6SXNzdWUzMzY5MzYwMTA=", "number": 331, "title": "Datasette throws error when loading spatialite db without extension loaded", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2018-06-29T09:51:14Z", "updated_at": "2022-01-20T21:29:40Z", "closed_at": "2018-07-10T15:13:36Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "When starting datasette on a SpatialLite database *without* loading the SpatiaLite extension (using eg `--load-extension=/usr/local/lib/mod_spatialite.dylib`) an error is thrown and the server fails to start:\r\n\r\n```\r\ndatasette -p 8003 adminboundaries.db \r\nServe! files=('adminboundaries.db',) on port 8003\r\nTraceback (most recent call last):\r\n File \"/Users/ajh59/anaconda3/bin/datasette\", line 11, in <module>\r\n sys.exit(cli())\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/click/core.py\", line 722, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/click/core.py\", line 697, in main\r\n rv = self.invoke(ctx)\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/click/core.py\", line 1066, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/click/core.py\", line 895, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/click/core.py\", line 535, in invoke\r\n return callback(*args, **kwargs)\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/datasette/cli.py\", line 552, in serve\r\n ds.inspect()\r\n File \"/Users/ajh59/anaconda3/lib/python3.6/site-packages/datasette/app.py\", line 273, in inspect\r\n \"tables\": inspect_tables(conn, self.metadata.get(\"databases\", {}).get(name, {}))\r\n File 
\"/Users/ajh59/anaconda3/lib/python3.6/site-packages/datasette/inspect.py\", line 79, in inspect_tables\r\n \"PRAGMA table_info({});\".format(escape_sqlite(table))\r\nsqlite3.OperationalError: no such module: VirtualSpatialIndex\r\n``` \r\n\r\nIt would be nice to trap this and return a message saying something like:\r\n\r\n```\r\nIt looks like you're trying to load a SpatiaLite database? Make sure you load in the SpatiaLite extension when starting datasette.\r\n\r\nRead more: https://datasette.readthedocs.io/en/latest/spatialite.html\r\n```\r\n\r\n", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/331/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 341228846, "node_id": "MDU6SXNzdWUzNDEyMjg4NDY=", "number": 343, "title": "Render boolean fields better by default", "user": {"value": 45057, "label": "russss"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2018-07-14T11:10:29Z", "updated_at": "2018-07-14T14:17:14Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "These show up as 0 or 1 because sqlite. I think Yes/No would be fine in most cases?", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/343/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 341229113, "node_id": "MDU6SXNzdWUzNDEyMjkxMTM=", "number": 344, "title": "datasette publish heroku fails without name provided", "user": {"value": 45057, "label": "russss"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2018-07-14T11:15:56Z", "updated_at": "2018-07-14T13:00:48Z", "closed_at": "2018-07-14T13:00:48Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "It fails with the following JSON traceback if the `-n` option isn't provided, despite the fact that the command line help says that's not needed for heroku publishes.\r\n\r\n<details>\r\n\r\n```\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/datasette\", line 11, in <module>\r\n sys.exit(cli())\r\n File \"/usr/local/lib/python3.6/site-packages/click/core.py\", line 722, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/usr/local/lib/python3.6/site-packages/click/core.py\", line 697, in main\r\n rv = self.invoke(ctx)\r\n File \"/usr/local/lib/python3.6/site-packages/click/core.py\", line 1066, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/usr/local/lib/python3.6/site-packages/click/core.py\", line 895, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/usr/local/lib/python3.6/site-packages/click/core.py\", line 535, in invoke\r\n return callback(*args, **kwargs)\r\n File \"/usr/local/lib/python3.6/site-packages/datasette/cli.py\", line 265, in publish\r\n app_name = json.loads(create_output)[\"name\"]\r\n File \"/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/json/__init__.py\", line 354, in loads\r\n return _default_decoder.decode(s)\r\n File \"/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/json/decoder.py\", line 339, in decode\r\n obj, end = self.raw_decode(s, idx=_w(s, 0).end())\r\n File 
\"/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/json/decoder.py\", line 357, in raw_decode\r\n raise JSONDecodeError(\"Expecting value\", s, err.value) from None\r\njson.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)\r\n```\r\n\r\n</details>", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/344/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 369716228, "node_id": "MDU6SXNzdWUzNjk3MTYyMjg=", "number": 366, "title": "Default built image size over Zeit Now 100MiB limit", "user": {"value": 416374, "label": "gfrmin"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2018-10-12T21:27:17Z", "updated_at": "2018-11-05T06:23:32Z", "closed_at": "2018-11-05T06:23:32Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Using `datasette publish now` with no other custom options on a small (43KB) sqlite database leads to the error \"The built image size (373.5M) exceeds the 100MiB limit\". I think this is because of a recent Zeit change: https://github.com/zeit/now-cli/issues/1523", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/366/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

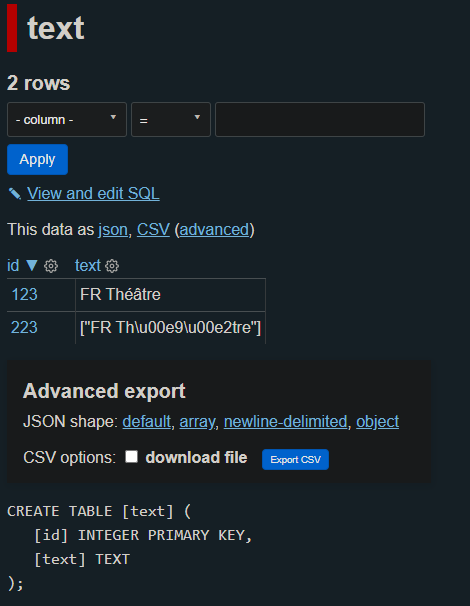

{"id": 374953006, "node_id": "MDU6SXNzdWUzNzQ5NTMwMDY=", "number": 369, "title": "Interface should show same JSON shape options for custom SQL queries", "user": {"value": 416374, "label": "gfrmin"}, "state": "open", "locked": 0, "assignee": null, "milestone": {"value": 3268330, "label": "Datasette 1.0"}, "comments": 2, "created_at": "2018-10-29T10:39:15Z", "updated_at": "2020-05-30T17:24:06Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "At the moment the page returning a custom SQL query shows the JSON and CSV APIs, but not the multiple JSON shapes. However, adding the `_shape` parameter to the JSON API URL manually still works, so perhaps there should be consistency in the interface by having the same \"Advanced Export\" box for custom SQL queries.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/369/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 377155320, "node_id": "MDU6SXNzdWUzNzcxNTUzMjA=", "number": 370, "title": "Integration with JupyterLab", "user": {"value": 82988, "label": "psychemedia"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 4, "created_at": "2018-11-04T13:57:13Z", "updated_at": "2022-09-29T08:17:47Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "I just watched a demo video for the [JupyterLab Chart Editor](https://www.crowdcast.io/e/introducing-JupyterLab-Chart-Editor/) which wraps the plotly chart editor app in a JupyterLab panel and lets you open a plotly chart JSON file in that editor. Essentially, it pops an HTML app into a panel in JupyterLab, and I think registers the app as a file viewer for a particular file type. (I'm not completely taken by it, tbh, because it means you can do irreproducible things to the chart definition file, but that's another issue).\r\n\r\nJupyterLab extensions can also open files from a dialogue as the iframe/html previewer shows: https://github.com/timkpaine/jupyterlab_iframe.\r\n\r\nThis made me wonder about what `datasette` integration with JupyterLab might do.\r\n\r\nFor example, by right-clicking on a CSV file (for which there is already a CSV table view) in the file browser, offer a *View / Run as datasette* file viewer option that will:\r\n\r\n- run the CSV file through `csvs-to-sqlite`;\r\n- launch the `datasette` server and display the `datasette` view in a JupyterLab panel.\r\n\r\n(? Create a new SQLite db for each CSV file and launch each datasette view on a new port? Or have a JupyterLab (session?) SQLite db that stores all `datasette` viewed CSVs and runs on a single port?) 
\r\n\r\nAs a freebie, the `datasette` API would allow you to run efficient SQL queries against the file eg using `pandas.read_sql()` queries in a notebook in the same space.\r\n\r\nRelated:\r\n\r\n- [JupyterLab extensions docs](https://jupyterlab.readthedocs.io/en/stable/user/extensions.html)\r\n- a [cookiecutter for writing JupyterLab extensions using Javascript](https://github.com/jupyterlab/extension-cookiecutter-js)\r\n- a [cookiecutter for writing JupyterLab extensions using Typescript](https://github.com/jupyterlab/extension-cookiecutter-ts)\r\n- tutorial: [Let\u2019s Make an xkcd JupyterLab Extension](https://jupyterlab.readthedocs.io/en/stable/developer/xkcd_extension_tutorial.html)", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/370/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 377156339, "node_id": "MDU6SXNzdWUzNzcxNTYzMzk=", "number": 371, "title": "datasette publish digitalocean plugin", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2018-11-04T14:07:41Z", "updated_at": "2021-01-04T20:14:28Z", "closed_at": "2021-01-04T20:14:28Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Provide support for launching `datasette` on Digital Ocean.\r\n\r\nExample: [Deploy Docker containers into Digital Ocean](https://blog.machinebox.io/deploy-machine-box-in-digital-ocean-385265fbeafd).\r\n\r\nDigital Ocean also has a preconfigured VM running Docker that can be launched from the command line via the Digital Ocean API: [Docker One-Click Application](https://www.digitalocean.com/docs/one-clicks/docker/).\r\n\r\nRelated:\r\n- Launching containers in Digital Ocean servers running docker: [How To Provision and Manage Remote Docker Hosts with Docker Machine on Ubuntu 16.04](https://www.digitalocean.com/community/tutorials/how-to-provision-and-manage-remote-docker-hosts-with-docker-machine-on-ubuntu-16-04)\r\n- [How To Use Doctl, the Official DigitalOcean Command-Line Client](https://www.digitalocean.com/community/tutorials/how-to-use-doctl-the-official-digitalocean-command-line-client)", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/371/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 377166793, "node_id": "MDU6SXNzdWUzNzcxNjY3OTM=", "number": 372, "title": "Docker build tools", "user": {"value": 82988, "label": "psychemedia"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2018-11-04T16:02:35Z", "updated_at": "2018-11-04T16:02:35Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "In terms of small pieces lightly joined, I note that there are several tools starting to appear for building generating Dockerfiles and building Docker containers from simpler components such as `requirements.txt` files.\r\n\r\nIf plugin/extensions builders want to include additional packages, then things like incremental builds of composable builds that add additional items into a base `datasette` container may be required.\r\n\r\nExamples of Dockerfile generators / container builders:\r\n\r\n- [openshift/source-to-image (s2i)](https://github.com/openshift/source-to-image)\r\n- [jupyter/repo2docker](https://github.com/jupyter/repo2docker)\r\n- [stencila/dockter](https://github.com/stencila/dockter)\r\n\r\nDiscussions / threads (via Binderhub gitter) on:\r\n- [why `repo2docker` not `s2i`](http://words.yuvi.in/post/why-not-s2i/)\r\n- [why `dockter` not `repo2docker`](https://twitter.com/choldgraf/status/1058499607309647872)\r\n- [composability in `s2i`](https://trello.com/c/AexIVZNf/1008-8-composable-builds-builds-evg)\r\n\r\nRelates to things like:\r\n\r\n- https://github.com/simonw/datasette/pull/280", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/372/reactions\", \"total_count\": 2, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 2, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 377266351, "node_id": "MDU6SXNzdWUzNzcyNjYzNTE=", "number": 373, "title": "Views should be shown on root/index page along with tables", "user": {"value": 416374, "label": "gfrmin"}, "state": "closed", "locked": 0, "assignee": null, "milestone": {"value": 4305096, "label": "0.28"}, "comments": 1, "created_at": "2018-11-05T06:28:41Z", "updated_at": "2019-05-16T00:29:22Z", "closed_at": "2019-05-16T00:29:22Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "At the moment the number of views is given on a datasette \"homepage\", but not links to any views themselves", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/373/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 398559195, "node_id": "MDU6SXNzdWUzOTg1NTkxOTU=", "number": 400, "title": "datasette publish cloudrun plugin", "user": {"value": 10352819, "label": "rprimet"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2019-01-12T14:35:11Z", "updated_at": "2019-05-03T16:57:35Z", "closed_at": "2019-05-03T16:57:35Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Google announced that they may launch a simple service for running Docker containers (previously serverless containers, now called \"cloud run\" -- link to alpha [here](https://services.google.com/fb/forms/serverlesscontainers/)). If/when this happens, it might be a good fit for publishing datasettes? (at least using the current version, manually publishing a datasette seems relatively painless).", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/400/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 415575624, "node_id": "MDU6SXNzdWU0MTU1NzU2MjQ=", "number": 414, "title": "datasette requires specific version of Click", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2019-02-28T11:24:59Z", "updated_at": "2019-03-15T04:42:13Z", "closed_at": "2019-03-15T04:42:13Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Is `datasette` beholden to version `click==6.7`?\r\n\r\nCurrent release is at 7.0. Can the requirement be liberalised, eg to `>=6.7`?", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/414/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 432870248, "node_id": "MDU6SXNzdWU0MzI4NzAyNDg=", "number": 431, "title": "Datasette doesn't reload when database file changes", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2019-04-13T16:50:43Z", "updated_at": "2019-05-02T05:13:55Z", "closed_at": "2019-05-02T05:13:54Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "My understanding of the `--reload` option was that if the database file changed `datasette` would automatically reload.\r\n\r\nI'm running on a Mac and from the `datasette` UI queries don't seem to be picking up data in a newly changed db (I checked the db timestamp - it certainly updated).\r\n\r\nI was also expecting to see some sort of log statement in the datasette logging to say that it had detected a file change and restarted, but don't see anything there?\r\n\r\nWill try to check on an Ubuntu box when I get a chance to see if this is a Mac thing.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/431/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 438200529, "node_id": "MDU6SXNzdWU0MzgyMDA1Mjk=", "number": 438, "title": "Plugins are loaded when running pytest", "user": {"value": 45057, "label": "russss"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2019-04-29T08:25:58Z", "updated_at": "2019-05-02T05:09:18Z", "closed_at": "2019-05-02T05:09:11Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "If I have a datasette plugin installed on my system, its hooks are called when running the main datasette tests. This is probably undesirable, especially with the inspect hook in #437, as the plugin may rely on inspected state that the tests don't know about.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/438/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 438259941, "node_id": "MDU6SXNzdWU0MzgyNTk5NDE=", "number": 440, "title": "Plugin hook for additional data export formats", "user": {"value": 45057, "label": "russss"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2019-04-29T11:01:39Z", "updated_at": "2019-05-01T23:01:57Z", "closed_at": "2019-05-01T23:01:57Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "It would be nice to have a simple way for plugins to provide additional data export formats. Might require a bit of work on the internals. I can work around this at a lower level with the `prepare_sanic` hook from #437 in the mean time.\r\n\r\nI guess plugins should be able to register a function which takes a row or list of rows and returns the rendered data. They'll also need to provide a file extension and probably a Content-Type.\r\n\r\nDatasette could then automatically include this format in the list of export formats on each page.\r\n\r\nLooks like this is related to #119.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/440/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 442327592, "node_id": "MDU6SXNzdWU0NDIzMjc1OTI=", "number": 456, "title": "Installing installs the tests package", "user": {"value": 7725188, "label": "hellerve"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2019-05-09T16:35:16Z", "updated_at": "2020-07-24T20:39:54Z", "closed_at": "2020-07-24T20:39:54Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Because `setup.py` uses `find_packages` and `tests` is on the top-level, `pip install datasette` will install a top-level package called `tests`, which is probably not desired behavior.\r\n\r\nThe offending line is here:\r\nhttps://github.com/simonw/datasette/blob/bfa2ae0d16d39bb82dbe4da4f3fdc3c7f6257418/setup.py#L40\r\n\r\nAnd only `pip uninstall datasette` with a conflicting package would warn you by default; apparently another package had the same problem, which is why I get this message when uninstalling:\r\n\r\n```\r\n$ pip uninstall datasette\r\nUninstalling datasette-0.27:\r\n Would remove:\r\n /usr/local/bin/datasette\r\n /usr/local/lib/python3.7/site-packages/datasette-0.27.dist-info/*\r\n /usr/local/lib/python3.7/site-packages/datasette/*\r\n /usr/local/lib/python3.7/site-packages/tests/*\r\n Would not remove (might be manually added):\r\n [ .. snip .. ]\r\nProceed (y/n)? \r\n```\r\n\r\nThis should be a relatively simple fix, and I could drop a PR if desired!\r\n\r\nCheers", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/456/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 459627549, "node_id": "MDU6SXNzdWU0NTk2Mjc1NDk=", "number": 523, "title": "Show total/unfiltered row count when filtering", "user": {"value": 2657547, "label": "rixx"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2019-06-23T22:56:48Z", "updated_at": "2019-06-24T01:38:14Z", "closed_at": "2019-06-24T01:38:14Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "When I'm seeing a filtered view of a table, I'd like to be able to see something like '2 rows where status != \"closed\" (of 1000 total)' to have a context for the data I'm seeing \u2013 e.g. currently my database is being filled by an importer, so this information would be super helpful.\r\n\r\nSince this information would be a performance hit, maybe something like '12 rows where status != \"closed\" (of ??? total)' with lazy-loading on-click(?) could be applied (Or via a \"How many total?\" tooltip, or \u2026)", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/523/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 465731062, "node_id": "MDU6SXNzdWU0NjU3MzEwNjI=", "number": 555, "title": "Static mounts with relative paths not working", "user": {"value": 3243482, "label": "abdusco"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2019-07-09T11:38:35Z", "updated_at": "2019-07-11T16:13:22Z", "closed_at": "2019-07-11T16:13:22Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Datasette fails to serve files from static mounts that are created using relative paths `datasette --static mystatic:rel/path/to/static/dir`. \r\nI've explained the problem and the solution in the pull request: https://github.com/simonw/datasette/pull/554", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/555/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 471292050, "node_id": "MDU6SXNzdWU0NzEyOTIwNTA=", "number": 563, "title": "incorrect json url for row-level data?", "user": {"value": 10352819, "label": "rprimet"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 0, "created_at": "2019-07-22T19:59:38Z", "updated_at": "2019-10-21T02:03:09Z", "closed_at": "2019-10-21T02:03:09Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "While visiting [this example page](https://register-of-members-interests.datasettes.com/regmem-98dc8b7/people/uk.org.publicwhip%2Fperson%2F10001) (linked from Datasette documentation), manually clicking on [the link](https://register-of-members-interests.datasettes.com/regmem-98dc8b7/people/uk.org.publicwhip%2Fperson%2F10001?_format=json) (\"This data as .json\") to the json data results in an error 500 `data() got an unexpected keyword argument 'as_format'`\r\n\r\nThe [JSON page linked to from the documentation](https://register-of-members-interests.datasettes.com/regmem-d22c12c/people/uk.org.publicwhip%2Fperson%2F10001.json) however is correct (the page address ends in `.json` rather than using a query string `?format=json`)\r\n\r\nThis particular datasette demo page is now a few versions behind, but I was able to reproduce the issue using v0.29.2 and a downloaded copy of the demo database (and also with the current HEAD).\r\n\r\nHere is a stack trace:\r\n\r\n```\r\nTraceback (most recent call last):\r\n File \"/home/romain/miniconda3/envs/dsbug/lib/python3.7/site-packages/datasette/utils/asgi.py\", line 101, in __call__\r\n return await view(new_scope, receive, send)\r\n File \"/home/romain/miniconda3/envs/dsbug/lib/python3.7/site-packages/datasette/utils/asgi.py\", line 173, in view\r\n request, **scope[\"url_route\"][\"kwargs\"]\r\n File \"/home/romain/miniconda3/envs/dsbug/lib/python3.7/site-packages/datasette/views/base.py\", line 267, in get\r\n request, database, hash, correct_hash_provided, **kwargs\r\n File 
\"/home/romain/miniconda3/envs/dsbug/lib/python3.7/site-packages/datasette/views/base.py\", line 399, in view_get\r\n request, database, hash, **kwargs\r\nTypeError: data() got an unexpected keyword argument 'as_format'\r\n\r\n```", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/563/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 488293926, "node_id": "MDU6SXNzdWU0ODgyOTM5MjY=", "number": 58, "title": "Support enabling FTS on views", "user": {"value": 49260, "label": "amjith"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2019-09-02T18:56:36Z", "updated_at": "2020-10-16T18:39:36Z", "closed_at": "2020-10-16T18:39:31Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Right now enable_fts() is only implemented for Table(). Technically sqlite supports enabling fts on views. But it requires deeper thought since views don't have `rowid` and the current implementation of enable_fts() relies on the presence of `rowid` column. \r\n\r\nIt is possible to provide an alternative rowid using the `content_rowid` option to the FTS5() function. \r\n\r\nRef: https://sqlite.org/fts5.html#fts5_table_creation_and_initialization\r\n\r\n> The \"content_rowid\" option, used to set the rowid field of an external content table. \r\n\r\nThis will further complicate `enable_fts()` function by adding an extra argument. I'm wondering if that is outside the scope of this tool or should I work on that feature and send a PR? ", "repo": {"value": 140912432, "label": "sqlite-utils"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/sqlite-utils/issues/58/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 492153532, "node_id": "MDU6SXNzdWU0OTIxNTM1MzI=", "number": 573, "title": "Exposing Datasette via Jupyter-server-proxy", "user": {"value": 82988, "label": "psychemedia"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2019-09-11T10:32:36Z", "updated_at": "2020-03-26T09:41:30Z", "closed_at": "2020-03-26T09:41:30Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "It is possible to expose a running `datasette` service in a Jupyter environment such as a MyBinder environment using the [`jupyter-server-proxy`](https://github.com/jupyterhub/jupyter-server-proxy).\r\n\r\nFor example, using [this demo Binder](https://mybinder.org/v2/gh/binder-examples/r/master?filepath=index.ipynb), which has the server proxy installed, we can upload a simple test database from the notebook homepage, install datasette from a Jupyter terminal, set it running against the test db on e.g. port 8001, and then view it via the path `proxy/8001`.\r\n\r\nClicking links results in 404s though because the `datasette` links aren't relative to the current path?\r\n\r\n\r\n\r\n\r\n", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/573/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 518725064, "node_id": "MDU6SXNzdWU1MTg3MjUwNjQ=", "number": 29, "title": "`import` command fails on empty files", "user": {"value": 21148, "label": "jacobian"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 4, "created_at": "2019-11-06T20:34:26Z", "updated_at": "2019-11-09T20:33:38Z", "closed_at": "2019-11-09T19:36:36Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "If a file in the export is empty (in my case it was `account-suspensions.js`), `twitter-to-sqlite import` fails:\r\n\r\n```\r\n$ twitter-to-sqlite import twitter.db ~/Downloads/twitter-2019-11-06-926f4f3be4b3b1fcb1aa387c40cd14f7c8aaf9bbcdb2d78ac14d9989add501bb.zip\r\nTraceback (most recent call last):\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/bin/twitter-to-sqlite\", line 10, in <module>\r\n sys.exit(cli())\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 764, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 717, in main\r\n rv = self.invoke(ctx)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 1137, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 956, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 555, in invoke\r\n return callback(*args, **kwargs)\r\n File 
\"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/cli.py\", line 627, in import_\r\n archive.import_from_file(db, filename, content)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/archive.py\", line 224, in import_from_file\r\n db[table_name].upsert_all(rows, hash_id=\"pk\")\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/sqlite_utils/db.py\", line 1113, in upsert_all\r\n extracts=extracts,\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/sqlite_utils/db.py\", line 980, in insert_all\r\n first_record = next(records)\r\nStopIteration\r\n```\r\n\r\nThis appears to be because `db.upsert_all` is called with no rows -- I think? \r\n\r\nI hacked around this by modifying `import_from_file` to have an `if rows:` clause:\r\n\r\n```\r\n for table, rows in to_insert.items():\r\n if rows:\r\n table_name = \"archive_{}\".format(table.replace(\"-\", \"_\"))\r\n ...\r\n```\r\n\r\nI'm happy to work up a real PR if that's the right approach, but I'm not sure it is.", "repo": {"value": 206156866, "label": "twitter-to-sqlite"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/29/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 518739697, "node_id": "MDU6SXNzdWU1MTg3Mzk2OTc=", "number": 30, "title": "`followers` fails because `transform_user` is called twice", "user": {"value": 21148, "label": "jacobian"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2019-11-06T20:44:52Z", "updated_at": "2019-11-09T20:15:28Z", "closed_at": "2019-11-09T19:55:52Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Trying to run `twitter-to-sqlite followers` errors out:\r\n\r\n```\r\nTraceback (most recent call last):\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/bin/twitter-to-sqlite\", line 10, in <module>\r\n sys.exit(cli())\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 764, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 717, in main\r\n rv = self.invoke(ctx)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 1137, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 956, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/click/core.py\", line 555, in invoke\r\n return callback(*args, **kwargs)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/cli.py\", line 130, in followers\r\n go(bar.update)\r\n File 
\"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/cli.py\", line 116, in go\r\n utils.save_users(db, [profile])\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/utils.py\", line 302, in save_users\r\n transform_user(user)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/twitter_to_sqlite/utils.py\", line 181, in transform_user\r\n user[\"created_at\"] = parser.parse(user[\"created_at\"])\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/dateutil/parser/_parser.py\", line 1374, in parse\r\n return DEFAULTPARSER.parse(timestr, **kwargs)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/dateutil/parser/_parser.py\", line 646, in parse\r\n res, skipped_tokens = self._parse(timestr, **kwargs)\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/dateutil/parser/_parser.py\", line 725, in _parse\r\n l = _timelex.split(timestr) # Splits the timestr into tokens\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/dateutil/parser/_parser.py\", line 207, in split\r\n return list(cls(s))\r\n File \"/Users/jacob/Library/Caches/pypoetry/virtualenvs/jkm-dogsheep-ezLnyXZS-py3.7/lib/python3.7/site-packages/dateutil/parser/_parser.py\", line 76, in __init__\r\n '{itype}'.format(itype=instream.__class__.__name__))\r\nTypeError: Parser must be a string or character stream, not datetime\r\n```\r\n\r\nThis appears to be because https://github.com/dogsheep/twitter-to-sqlite/blob/master/twitter_to_sqlite/cli.py#L111 calls `transform_user`, and then 
https://github.com/dogsheep/twitter-to-sqlite/blob/master/twitter_to_sqlite/cli.py#L116 calls `transform_user` again, which fails because the user is already transformed.\r\n\r\nI was able to work around this by commenting out https://github.com/dogsheep/twitter-to-sqlite/blob/master/twitter_to_sqlite/cli.py#L116. \r\n\r\nShall I work up a patch for that, or is there a better approach?", "repo": {"value": 206156866, "label": "twitter-to-sqlite"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/30/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 527710055, "node_id": "MDU6SXNzdWU1Mjc3MTAwNTU=", "number": 640, "title": "Nicer error message for heroku publish name clash", "user": {"value": 82988, "label": "psychemedia"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2019-11-24T14:57:07Z", "updated_at": "2019-12-06T07:19:34Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "If you try to publish to Heroku using no set name (i.e. the default `datasette` name) and a project already exists under that name, you get a meaningful error report on the first line followed by Py error messages that drown it out:\r\n\r\n```\r\nCreating datasette... !\r\n \u25b8 Name datasette is already taken\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/datasette\", line 10, in <module>\r\n sys.exit(cli())\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 764, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 717, in main\r\n rv = self.invoke(ctx)\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 1137, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 1137, in invoke\r\n return _process_result(sub_ctx.command.invoke(sub_ctx))\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 956, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/usr/local/lib/python3.7/site-packages/click/core.py\", line 555, in invoke\r\n return callback(*args, **kwargs)\r\n File \"/Users/NNNNN/Library/Python/3.7/lib/python/site-packages/datasette/publish/heroku.py\", line 124, in heroku\r\n create_output = check_output(cmd).decode(\"utf8\")\r\n File \"/usr/local/Cellar/python/3.7.5/Frameworks/Python.framework/Versions/3.7/lib/python3.7/subprocess.py\", line 411, in check_output\r\n **kwargs).stdout\r\n 
File \"/usr/local/Cellar/python/3.7.5/Frameworks/Python.framework/Versions/3.7/lib/python3.7/subprocess.py\", line 512, in run\r\n output=stdout, stderr=stderr)\r\nsubprocess.CalledProcessError: Command '['heroku', 'apps:create', 'datasette', '--json']' returned non-zero exit status 1.\r\n```\r\n\r\nIt would be neater if:\r\n\r\n- the Py error message was caught;\r\n- the report suggested setting a project name using `-n` etc.\r\n\r\nIt may also be useful to provide a command to list the current names that are being used, which I assume is available via a Heroku call?", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/640/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 546073980, "node_id": "MDU6SXNzdWU1NDYwNzM5ODA=", "number": 74, "title": "Test failures on openSUSE 15.1: AssertionError: Explicit other_table and other_column", "user": {"value": 15092, "label": "jayvdb"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2020-01-07T04:35:50Z", "updated_at": "2020-01-12T07:21:17Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "openSUSE 15.1 is using python 3.6.5 and click-7.0 , however it has test failures while openSUSE Tumbleweed on py37 passes.\r\n\r\nMost fail on the cli exit code like\r\n```py\r\n[ 74s] =================================== FAILURES ===================================\r\n[ 74s] _________________________________ test_tables __________________________________\r\n[ 74s] \r\n[ 74s] db_path = '/tmp/pytest-of-abuild/pytest-0/test_tables0/test.db'\r\n[ 74s] \r\n[ 74s] def test_tables(db_path):\r\n[ 74s] result = CliRunner().invoke(cli.cli, [\"tables\", db_path])\r\n[ 74s] > assert '[{\"table\": \"Gosh\"},\\n {\"table\": \"Gosh2\"}]' == result.output.strip()\r\n[ 74s] E assert '[{\"table\": \"...e\": \"Gosh2\"}]' == ''\r\n[ 74s] E - [{\"table\": \"Gosh\"},\r\n[ 74s] E - {\"table\": \"Gosh2\"}]\r\n[ 74s] \r\n[ 74s] tests/test_cli.py:28: AssertionError\r\n```\r\n\r\npackaging project at https://build.opensuse.org/package/show/home:jayvdb:py-new/python-sqlite-utils\r\n\r\nI'll keep digging into this after I have github-to-sqlite working on Tumbleweed, as I'll need openSUSE Leap 15.1 working before I can submit this into the main python repo.", "repo": {"value": 140912432, "label": "sqlite-utils"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/sqlite-utils/issues/74/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, 
"state_reason": null}

{"id": 546961357, "node_id": "MDU6SXNzdWU1NDY5NjEzNTc=", "number": 656, "title": "Display of the column definitions", "user": {"value": 6371750, "label": "JBPressac"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2020-01-08T16:16:53Z", "updated_at": "2020-01-20T14:17:11Z", "closed_at": "2020-01-20T14:14:33Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Hello,\r\nIs the nice display of headers and definitions at the top of https://fivethirtyeight.datasettes.com/fivethirtyeight-ac35616/antiquities-act%2Factions_under_antiquities_act configured in the metadata.json file?\r\nThank you,", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/656/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 600120439, "node_id": "MDU6SXNzdWU2MDAxMjA0Mzk=", "number": 726, "title": "Foreign key : case of a link to the associated row not displayed", "user": {"value": 6371750, "label": "JBPressac"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2020-04-15T08:31:27Z", "updated_at": "2020-04-27T22:05:47Z", "closed_at": "2020-04-27T22:05:46Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Hello,\r\nI use Datasette to publish tsv files linked together by foreign keys declared with sqlite-utils. In one table, [prelib_personne](http://crbc-dataset.huma-num.fr/prelib/prelib_personne), the foreign keys are properly rendered as a link to the associated row (for instance ville_naissance_id is properly linked to prelib_ville). But every link to the foreign key prelib_oeuvre.id fails. For instance, [prelib_ecritoeuvre](http://crbc-dataset.huma-num.fr/prelib/prelib_ecritoeuvre) has links to prelib_personne but none to prelib_oeuvre, despite the schema:\r\n\r\nCREATE TABLE \"prelib_ecritoeuvre\" (\r\n\"id\" INTEGER,\r\n \"fonction_id\" INTEGER,\r\n \"oeuvre_id\" INTEGER,\r\n \"personne_id\" INTEGER\r\n ,PRIMARY KEY ([id]),\r\n FOREIGN KEY(fonction_id) REFERENCES prelib_fonctionecritoeuvre(id),\r\n FOREIGN KEY(personne_id) REFERENCES prelib_personne(id),\r\n FOREIGN KEY(oeuvre_id) REFERENCES prelib_oeuvre(id)\r\n); \r\n\r\nWould you have any clue as to the cause of this problem?\r\nThanks,", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/726/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 610517472, "node_id": "MDU6SXNzdWU2MTA1MTc0NzI=", "number": 103, "title": "sqlite3.OperationalError: too many SQL variables in insert_all when using rows with varying numbers of columns", "user": {"value": 32605365, "label": "b0b5h4rp13"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 8, "created_at": "2020-05-01T02:26:14Z", "updated_at": "2020-05-14T00:18:57Z", "closed_at": "2020-05-14T00:18:57Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "When using insert_all to insert 1000 rows of data with a varying number of columns, it fails with `sqlite3.OperationalError: too many SQL variables` if the number of columns is larger in later records (past the first row).\r\n\r\nI've reduced `SQLITE_MAX_VARS` by 100 to 899 at the top of `db.py` to add wiggle room, so that if the column count increases it won't go past SQLite's variable limit. The batch size is calculated by this line of code, based on the count of the first row's dict keys:\r\n\r\n batch_size = max(1, min(batch_size, SQLITE_MAX_VARS // num_columns))", "repo": {"value": 140912432, "label": "sqlite-utils"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/sqlite-utils/issues/103/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 620969465, "node_id": "MDU6SXNzdWU2MjA5Njk0NjU=", "number": 767, "title": "Allow to specify a URL fragment for canned queries", "user": {"value": 2657547, "label": "rixx"}, "state": "closed", "locked": 0, "assignee": null, "milestone": {"value": 5471110, "label": "Datasette 0.43"}, "comments": 2, "created_at": "2020-05-19T13:17:42Z", "updated_at": "2020-05-27T21:52:25Z", "closed_at": "2020-05-27T21:52:25Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Canned queries are very useful to direct users to prepared data and views. I like to use them with charts using datasette-vega a lot, because people get a direct impression at first glance.\r\n\r\ndatasette-vega doesn't show up by default though, and users have to click through to it. Also, datasette-vega does not always guess the best way to render columns, so it would be nice if I could specify a URL fragment in my canned queries to make sure people see what I want them to see.\r\n\r\nMy current workaround is to include a fragment link in ``description_html`` and ask people to reload the page, like [here](https://data.rixx.de/songs/show_by_bpm#g.mark=bar&g.x_column=bpm_floor&g.x_type=ordinal&g.y_column=bpm_count&g.y_type=quantitative), which is a bit hacky.", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/767/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 640330278, "node_id": "MDU6SXNzdWU2NDAzMzAyNzg=", "number": 851, "title": "Having trouble getting writable canned queries to work", "user": {"value": 3243482, "label": "abdusco"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2020-06-17T10:30:28Z", "updated_at": "2020-06-17T10:33:25Z", "closed_at": "2020-06-17T10:32:33Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Hey,\r\n\r\nI'm trying to get canned inserts to work. I have a DB with the following schema and metadata:\r\n\r\n```text\r\nsqlite> .mode line\r\n\r\nsqlite> select name, sql from sqlite_master where name like '%search%';\r\n name = search\r\n sql = CREATE TABLE \"search\" (\"id\" INTEGER NOT NULL PRIMARY KEY, \"name\" VARCHAR(255) NOT NULL, \"url\" VARCHAR(255) NOT NULL)\r\n```\r\n\r\n```yaml\r\n# ...\r\nqueries:\r\n add_search:\r\n sql: insert into search(name, url) VALUES (:name, :url),\r\n write: true\r\n```\r\nwhich renders a form as expected, but when I submit the form I get an `incomplete input` error.\r\n\r\nI've attached a debugger to see where the error comes from, because the `incomplete input` string doesn't appear in the datasette codebase.\r\n\r\nInside `datasette.database.Database.execute_write_fn` \r\n\r\nhttps://github.com/simonw/datasette/blob/4fa7cf68536628344356d3ef8c92c25c249067a0/datasette/database.py#L69\r\n\r\n```py\r\nresult = await reply_queue.async_q.get()\r\n```\r\n\r\nthis line raises an exception. \r\n\r\nThat led me to believe I had something wrong with my SQL. 
But running the command in `sqlite3` inserts the record just fine.\r\n\r\n```text\r\nsqlite> insert into search (name, url) values ('my name', 'my url');\r\nsqlite> SELECT last_insert_rowid();\r\nlast_insert_rowid() = 3\r\n```\r\n\r\nSo I'm a bit lost here.\r\n\r\n---\r\n- datasette, version 0.44\r\n- Python 3.8.1", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/851/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 642572841, "node_id": "MDU6SXNzdWU2NDI1NzI4NDE=", "number": 859, "title": "Database page loads too slowly with many large tables (due to table counts)", "user": {"value": 3243482, "label": "abdusco"}, "state": "open", "locked": 0, "assignee": null, "milestone": null, "comments": 21, "created_at": "2020-06-21T14:23:17Z", "updated_at": "2021-08-25T21:59:55Z", "closed_at": null, "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Hey,\r\nI have a database that I save in HTML from couple of web scrapers. There are around 200k+, 50+ rows in a couple of tables, with sqlite file weighing around 600MB.\r\n\r\nThe app runs on a VPS with 2 core CPU, 4GB RAM and refreshing database page regularly takes more than 10 seconds. I was suspecting that counting tables was the culprit, but manually running `select count(*) from table_name` for the largest table finishes under a second.\r\n\r\nI've looked at the source code. There's a check for index page for mutable databases larger than 100MB\r\nhttps://github.com/simonw/datasette/blob/799c5d53570d773203527f19530cf772dc2eeb24/datasette/views/index.py#L15\r\n\r\nbut this check is not performed for database page. 
\r\nI've manually crippled `Database::table_counts` method\r\n```py\r\nasync def table_counts(self, limit=10):\r\n if not self.is_mutable and self.cached_table_counts is not None:\r\n return self.cached_table_counts\r\n # Try to get counts for each table, $limit timeout for each count\r\n counts = {}\r\n for table in await self.table_names():\r\n try:\r\n # table_count = (\r\n # await self.execute(\r\n # \"select count(*) from [{}]\".format(table),\r\n # custom_time_limit=limit,\r\n # )\r\n # ).rows[0][0]\r\n counts[table] = 10 # table_count\r\n # In some cases I saw \"SQL Logic Error\" here in addition to\r\n # QueryInterrupted - so we catch that too:\r\n except (QueryInterrupted, sqlite3.OperationalError, sqlite3.DatabaseError):\r\n counts[table] = None\r\n if not self.is_mutable:\r\n self.cached_table_counts = counts\r\n return counts\r\n```\r\n\r\nnow the page loads in <100ms.\r\n\r\nIs it possible to apply size check on database page too?\r\n\r\n<details>\r\n<summary>\r\n/-/versions output\r\n</summary>\r\n<pre>\r\n{\r\n \"python\": {\r\n \"version\": \"3.8.0\",\r\n \"full\": \"3.8.0 (default, Oct 28 2019, 16:14:01) \\n[GCC 8.3.0]\"\r\n },\r\n \"datasette\": {\r\n \"version\": \"0.44\"\r\n },\r\n \"asgi\": \"3.0\",\r\n \"uvicorn\": \"0.11.5\",\r\n \"sqlite\": {\r\n \"version\": \"3.22.0\",\r\n \"fts_versions\": [\r\n \"FTS5\",\r\n \"FTS4\",\r\n \"FTS3\"\r\n ],\r\n \"extensions\": {\r\n \"json1\": null\r\n },\r\n \"compile_options\": [\r\n \"COMPILER=gcc-7.4.0\",\r\n \"ENABLE_COLUMN_METADATA\",\r\n \"ENABLE_DBSTAT_VTAB\",\r\n \"ENABLE_FTS3\",\r\n \"ENABLE_FTS3_PARENTHESIS\",\r\n \"ENABLE_FTS3_TOKENIZER\",\r\n \"ENABLE_FTS4\",\r\n \"ENABLE_FTS5\",\r\n \"ENABLE_JSON1\",\r\n \"ENABLE_LOAD_EXTENSION\",\r\n \"ENABLE_PREUPDATE_HOOK\",\r\n \"ENABLE_RTREE\",\r\n \"ENABLE_SESSION\",\r\n \"ENABLE_STMTVTAB\",\r\n \"ENABLE_UNLOCK_NOTIFY\",\r\n \"ENABLE_UPDATE_DELETE_LIMIT\",\r\n \"HAVE_ISNAN\",\r\n \"LIKE_DOESNT_MATCH_BLOBS\",\r\n \"MAX_SCHEMA_RETRY=25\",\r\n 
\"MAX_VARIABLE_NUMBER=250000\",\r\n \"OMIT_LOOKASIDE\",\r\n \"SECURE_DELETE\",\r\n \"SOUNDEX\",\r\n \"TEMP_STORE=1\",\r\n \"THREADSAFE=1\"\r\n ]\r\n }\r\n}\r\n</pre>\r\n</details>", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/859/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": null}

{"id": 649702801, "node_id": "MDU6SXNzdWU2NDk3MDI4MDE=", "number": 888, "title": "URLs in release notes point to 127.0.0.1", "user": {"value": 3243482, "label": "abdusco"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 1, "created_at": "2020-07-02T07:28:04Z", "updated_at": "2020-09-15T20:39:50Z", "closed_at": "2020-09-15T20:39:49Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Just a quick heads up:\r\n\r\nRelease notes for 0.45 include urls that point to localhost. \r\n\r\nhttps://github.com/simonw/datasette/releases/tag/0.45", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/888/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 649907676, "node_id": "MDU6SXNzdWU2NDk5MDc2NzY=", "number": 889, "title": "asgi_wrapper plugin hook is crashing at startup", "user": {"value": 49260, "label": "amjith"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 3, "created_at": "2020-07-02T12:53:13Z", "updated_at": "2020-09-15T20:40:52Z", "closed_at": "2020-09-15T20:40:52Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Steps to reproduce: \r\n\r\n1. Install datasette-media plugin \r\n`pip install datasette-media`\r\n2. Launch datasette\r\n`datasette databasename.db`\r\n3. Error\r\n\r\n```\r\nINFO: Started server process [927704]\r\nINFO: Waiting for application startup.\r\nERROR: Exception in 'lifespan' protocol\r\nTraceback (most recent call last):\r\n File \"/home/amjith/.virtualenvs/itsysearch/lib/python3.7/site-packages/uvicorn/lifespan/on.py\", line 48, in main\r\n await app(scope, self.receive, self.send)\r\n File \"/home/amjith/.virtualenvs/itsysearch/lib/python3.7/site-packages/uvicorn/middleware/proxy_headers.py\", line 45, in __call__\r\n return await self.app(scope, receive, send)\r\n File \"/home/amjith/.virtualenvs/itsysearch/lib/python3.7/site-packages/datasette_media/__init__.py\", line 9, in wrapped_app\r\n path = scope[\"path\"]\r\nKeyError: 'path'\r\nERROR: Application startup failed. Exiting.\r\n```", "repo": {"value": 107914493, "label": "datasette"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/datasette/issues/889/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 686978131, "node_id": "MDU6SXNzdWU2ODY5NzgxMzE=", "number": 139, "title": "insert_all(..., alter=True) should work for new columns introduced after the first 100 records", "user": {"value": 96218, "label": "simonwiles"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 7, "created_at": "2020-08-27T06:25:25Z", "updated_at": "2020-08-28T22:48:51Z", "closed_at": "2020-08-28T22:30:14Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "Is there a way to make `.insert_all()` work properly when new columns are introduced outside the first 100 records (with or without the `alter=True` argument)?\r\n\r\nI'm using `.insert_all()` to bulk insert ~3-4k records at a time and it is common for records to need to introduce new columns. However, if new columns are introduced after the first 100 records, `sqlite_utils` doesn't even raise the `OperationalError: table ... has no column named ...` exception; it just silently drops the extra data and moves on.\r\n\r\nIt took me a while to find this little snippet in the [documentation for `.insert_all()`](https://sqlite-utils.readthedocs.io/en/stable/python-api.html#bulk-inserts) (it's not mentioned under [Adding columns automatically on insert/update](https://sqlite-utils.readthedocs.io/en/stable/python-api.html#bulk-inserts)):\r\n\r\n> The column types used in the CREATE TABLE statement are automatically derived from the types of data in that first batch of rows. **_Any additional or missing columns in subsequent batches will be ignored._**\r\n\r\nI tried changing the `batch_size` argument to the total number of records, but it seems only to affect the number of rows that are committed at a time, and has no influence on this problem.\r\n\r\nIs there a way around this that you would suggest? It seems like it should raise an exception at least.", "repo": {"value": 140912432, "label": "sqlite-utils"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/sqlite-utils/issues/139/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}

{"id": 688659182, "node_id": "MDU6SXNzdWU2ODg2NTkxODI=", "number": 145, "title": "Bug when first record contains fewer columns than subsequent records", "user": {"value": 96218, "label": "simonwiles"}, "state": "closed", "locked": 0, "assignee": null, "milestone": null, "comments": 2, "created_at": "2020-08-30T05:44:44Z", "updated_at": "2020-09-08T23:21:23Z", "closed_at": "2020-09-08T23:21:23Z", "author_association": "CONTRIBUTOR", "pull_request": null, "body": "`insert_all()` selects the maximum batch size based on the number of fields in the first record. If the first record has fewer fields than subsequent records (and `alter=True` is passed), this can result in SQL statements with more than the maximum permitted number of host parameters. This situation is perhaps unlikely to occur, but could happen if the first record had, say, 10 columns, such that `batch_size` (based on `SQLITE_MAX_VARIABLE_NUMBER = 999`) would be 99. If the next 98 rows had 11 columns, the resulting SQL statement for the first batch would have `10 * 1 + 11 * 98 = 1088` host parameters (and subsequent batches, if the data were consistent from thereon out, would have `99 * 11 = 1089`).\r\n\r\nI suspect that this bug is masked somewhat by the fact that while:\r\n> [`SQLITE_MAX_VARIABLE_NUMBER`](https://www.sqlite.org/limits.html#max_variable_number) ... defaults to 999 for SQLite versions prior to 3.32.0 (2020-05-22) or 32766 for SQLite versions after 3.32.0.\r\n\r\nit is common that it is increased at compile time. Debian-based systems, for example, seem to ship with a version of sqlite compiled with `SQLITE_MAX_VARIABLE_NUMBER` set to 250,000, and I believe this is the case for homebrew installations too.\r\n\r\nA test for this issue might look like this:\r\n```python\r\ndef test_columns_not_in_first_record_should_not_cause_batch_to_be_too_large(fresh_db):\r\n # sqlite on homebrew and Debian/Ubuntu etc. 
is typically compiled with\r\n # SQLITE_MAX_VARIABLE_NUMBER set to 250,000, so we need to exceed this value to\r\n # trigger the error on these systems.\r\n THRESHOLD = 250000\r\n extra_columns = 1 + (THRESHOLD - 1) // 99\r\n records = [\r\n {\"c0\": \"first record\"}, # one column in first record -> batch_size = 100\r\n # fill out the batch with 99 records with enough columns to exceed THRESHOLD\r\n *[\r\n dict([(\"c{}\".format(i), j) for i in range(extra_columns)])\r\n for j in range(99)\r\n ]\r\n ]\r\n try:\r\n fresh_db[\"too_many_columns\"].insert_all(records, alter=True)\r\n except sqlite3.OperationalError:\r\n raise\r\n```\r\n\r\nThe best solution, I think, is simply to process all the records when determining columns, column types, and the batch size. In my tests this doesn't seem to be particularly costly at all, and cuts out a lot of complications (including obviating my implementation of #139 at #142). I'll raise a PR for your consideration.\r\n\r\n", "repo": {"value": 140912432, "label": "sqlite-utils"}, "type": "issue", "active_lock_reason": null, "performed_via_github_app": null, "reactions": "{\"url\": \"https://api.github.com/repos/simonw/sqlite-utils/issues/145/reactions\", \"total_count\": 0, \"+1\": 0, \"-1\": 0, \"laugh\": 0, \"hooray\": 0, \"confused\": 0, \"heart\": 0, \"rocket\": 0, \"eyes\": 0}", "draft": null, "state_reason": "completed"}