issues

22 rows where comments = 7, state = "open" and type = "issue" sorted by updated_at descending

This data as json, CSV (advanced)

Suggested facets: user, milestone, author_association, created_at (date), updated_at (date)

| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at ▲ | closed_at | author_association | pull_request | body | repo | type | active_lock_reason | performed_via_github_app | reactions | draft | state_reason |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1940346034 | I_kwDOBm6k_c5zp1Sy | 2199 | Detailed upgrade instructions for metadata.yaml -> datasette.yaml | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 7 | 2023-10-12T16:21:25Z | 2023-10-12T22:08:42Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/2190#issuecomment-1759947021 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2199/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1010112818 | I_kwDOBm6k_c48NRky | 1479 | Win32 "used by another process" error with datasette publish | kirajano 76450761 | open | 0 | 7 | 2021-09-28T19:12:00Z | 2023-09-07T02:14:16Z | NONE | I unfortunately was not successful to deploy to fly.io. Please see the details above of the three scenarios that I took. I am also new to datasette. Failed to deploy. Attaching logs:

1. Tried with an app created via Error error connecting to docker: An unknown error occured. Traceback (most recent call last): File "c:\users\grott\anaconda3\lib\runpy.py", line 193, in _run_module_as_main "main", mod_spec) File "c:\users\grott\anaconda3\lib\runpy.py", line 85, in _run_code exec(code, run_globals) File "C:\Users\grott\Anaconda3\Scripts\datasette.exe__main__.py", line 7, in <module> File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 829, in call return self.main(args, kwargs) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 782, in main rv = self.invoke(ctx) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1066, in invoke return ctx.invoke(self.callback, ctx.params) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 610, in invoke return callback(args, **kwargs) File "c:\users\grott\anaconda3\lib\site-packages\datasette_publish_fly__init__.py", line 156, in fly "--remote-only", File "c:\users\grott\anaconda3\lib\contextlib.py", line 119, in exit next(self.gen) File "c:\users\grott\anaconda3\lib\site-packages\datasette\utils__init__.py", line 451, in temporary_docker_directory tmp.cleanup() File "c:\users\grott\anaconda3\lib\tempfile.py", line 811, in cleanup _shutil.rmtree(self.name) File "c:\users\grott\anaconda3\lib\shutil.py", line 516, in rmtree return _rmtree_unsafe(path, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 395, in _rmtree_unsafe _rmtree_unsafe(fullname, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 404, in _rmtree_unsafe onerror(os.rmdir, path, sys.exc_info()) File "c:\users\grott\anaconda3\lib\shutil.py", line 402, in _rmtree_unsafe os.rmdir(path) PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\Users\grott\AppData\Local\Temp\tmpgcm8cz66\frosty-fog-8565' ```

Error not possible to validate configuration: server returned Post "https://api.fly.io/graphql": unexpected EOF Traceback (most recent call last):

File "c:\users\grott\anaconda3\lib\runpy.py", line 193, in _run_module_as_main These are also the contents of the generated .toml file in 2 scenario: ``` fly.toml file generated for dark-feather-168 on 2021-09-28T20:35:44+02:00app = "dark-feather-168" kill_signal = "SIGINT" kill_timeout = 5 processes = [] [env] [experimental] allowed_public_ports = [] auto_rollback = true [[services]] http_checks = [] internal_port = 8080 processes = ["app"] protocol = "tcp" script_checks = [] [services.concurrency] hard_limit = 25 soft_limit = 20 type = "connections" [[services.ports]] handlers = ["http"] port = 80 [[services.ports]] handlers = ["tls", "http"] port = 443 [[services.tcp_checks]] grace_period = "1s" interval = "15s" restart_limit = 6 timeout = "2s" ```

```[+] Building 147.3s (11/11) FINISHED => [internal] load build definition from Dockerfile 0.2s => => transferring dockerfile: 396B 0.0s => [internal] load .dockerignore 0.1s => => transferring context: 2B 0.0s => [internal] load metadata for docker.io/library/python:3.8 4.7s => [auth] library/python:pull token for registry-1.docker.io 0.0s => [internal] load build context 0.1s => => transferring context: 82.37kB 0.0s => [1/5] FROM docker.io/library/python:3.8@sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3 108.3s => => resolve docker.io/library/python:3.8@sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3b5 0.0s => => sha256:56182bcdf4d4283aa1f46944b4ef7ac881e28b4d5526720a4e9ba03a4730846a 2.22kB / 2.22kB 0.0s => => sha256:955615a668ce169f8a1443fc6b6e6215f43fe0babfb4790712a2d3171f34d366 54.93MB / 54.93MB 21.6s => => sha256:911ea9f2bd51e53a455297e0631e18a72a86d7e2c8e1807176e80f991bde5d64 10.87MB / 10.87MB 15.5s => => sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3b5c5b6 1.86kB / 1.86kB 0.0s => => sha256:ff08f08727e50193dcf499afc30594c47e70cc96f6fcfd1a01240524624264d0 8.65kB / 8.65kB 0.0s => => sha256:2756ef5f69a5190f4308619e0f446d95f5515eef4a814dbad0bcebbbbc7b25a8 5.15MB / 5.15MB 6.4s => => sha256:27b0a22ee906271a6ce9ddd1754fdd7d3b59078e0b57b6cc054c7ed7ac301587 54.57MB / 54.57MB 37.7s => => sha256:8584d51a9262f9a3a436dea09ba40fa50f85802018f9bd299eee1bf538481077 196.45MB / 196.45MB 82.3s => => sha256:524774b7d3638702fe9ae0ea3fcfb81b027dfd75cc2fc14f0119e764b9543d58 6.29MB / 6.29MB 26.6s => => extracting sha256:955615a668ce169f8a1443fc6b6e6215f43fe0babfb4790712a2d3171f34d366 5.4s => => sha256:9460f6b75036e38367e2f27bb15e85777c5d6cd52ad168741c9566186415aa26 16.81MB / 16.81MB 40.5s => => extracting sha256:2756ef5f69a5190f4308619e0f446d95f5515eef4a814dbad0bcebbbbc7b25a8 0.6s => => extracting sha256:911ea9f2bd51e53a455297e0631e18a72a86d7e2c8e1807176e80f991bde5d64 0.6s => => sha256:9bc548096c181514aa1253966a330134d939496027f92f57ab376cd236eb280b 232B / 232B 40.1s => => extracting sha256:27b0a22ee906271a6ce9ddd1754fdd7d3b59078e0b57b6cc054c7ed7ac301587 5.8s => => sha256:1d87379b86b89fd3b8bb1621128f00c8f962756e6aaaed264ec38db733273543 2.35MB / 2.35MB 41.8s => => extracting sha256:8584d51a9262f9a3a436dea09ba40fa50f85802018f9bd299eee1bf538481077 18.8s => => extracting sha256:524774b7d3638702fe9ae0ea3fcfb81b027dfd75cc2fc14f0119e764b9543d58 1.2s => => extracting sha256:9460f6b75036e38367e2f27bb15e85777c5d6cd52ad168741c9566186415aa26 2.9s => => extracting sha256:9bc548096c181514aa1253966a330134d939496027f92f57ab376cd236eb280b 0.0s => => extracting sha256:1d87379b86b89fd3b8bb1621128f00c8f962756e6aaaed264ec38db733273543 0.8s => [2/5] COPY . /app 2.3s => [3/5] WORKDIR /app 0.2s => [4/5] RUN pip install -U datasette 26.9s => [5/5] RUN datasette inspect covid.db --inspect-file inspect-data.json 3.1s => exporting to image 1.2s => => exporting layers 1.2s => => writing image sha256:b5db0c205cd3454c21fbb00ecf6043f261540bcf91c2dfc36d418f1a23a75d7a 0.0s Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them Traceback (most recent call last): "main", mod_spec) File "c:\users\grott\anaconda3\lib\runpy.py", line 85, in _run_code exec(code, run_globals) File "C:\Users\grott\Anaconda3\Scripts\datasette.exe__main__.py", line 7, in <module> File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 829, in call return self.main(args, kwargs) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 782, in main rv = self.invoke(ctx) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1066, in invoke return ctx.invoke(self.callback, ctx.params) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 610, in invoke return callback(args, **kwargs) File "c:\users\grott\anaconda3\lib\site-packages\datasette\cli.py", line 283, in package call(args) File "c:\users\grott\anaconda3\lib\contextlib.py", line 119, in exit next(self.gen) File "c:\users\grott\anaconda3\lib\site-packages\datasette\utils__init__.py", line 451, in temporary_docker_directory tmp.cleanup() File "c:\users\grott\anaconda3\lib\tempfile.py", line 811, in cleanup _shutil.rmtree(self.name) File "c:\users\grott\anaconda3\lib\shutil.py", line 516, in rmtree return _rmtree_unsafe(path, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 395, in _rmtree_unsafe _rmtree_unsafe(fullname, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 404, in _rmtree_unsafe onerror(os.rmdir, path, sys.exc_info()) File "c:\users\grott\anaconda3\lib\shutil.py", line 402, in _rmtree_unsafe os.rmdir(path) PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\Users\grott\AppData\Local\Temp\tmpkb27qid3\datasette'``` |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1479/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 323223872 | MDU6SXNzdWUzMjMyMjM4NzI= | 260 | Validate metadata.json on startup | simonw 9599 | open | 0 | 7 | 2018-05-15T13:42:56Z | 2023-06-21T12:51:22Z | OWNER | It's easy to misspell the name of a database or table and then be puzzled when the metadata settings silently fail. To avoid this, let's sanity check the provided metadata.json on startup and quit with a useful error message if we find any obvious mistakes. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/260/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1383646615 | I_kwDOCGYnMM5SeMWX | 491 | Ability to merge databases and tables | sgraaf 8904453 | open | 0 | 7 | 2022-09-23T11:10:55Z | 2023-06-14T22:14:24Z | NONE | Hi! Let me firstly say that I am a big fan of your work -- I follow your tweets and blog posts with great interest 😄. Now onto the matter at hand: I think it would be great if This could look something like this:

I imagine this is rather straightforward if all databases involved in the merge contain differently named tables (i.e. no chance of conflicts), but things get slightly more complicated if two or more of the databases to be merged contain tables with the same name. Not only do you have to "do something" with the primary key(s), but these tables could also simply have different schemas (and therefore be incompatible for concatenation to begin with). Anyhow, I would love your thoughts on this, and, if you are open to it, work together on the design and implementation! |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/491/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1430797211 | I_kwDOBm6k_c5VSDub | 1875 | Figure out design for JSON errors (consider RFC 7807) | simonw 9599 | open | 0 | Datasette 1.0a-next 8755003 | 7 | 2022-11-01T03:14:15Z | 2022-12-13T05:29:08Z | OWNER | https://datatracker.ietf.org/doc/draft-ietf-httpapi-rfc7807bis/ is a brand new standard. Since I need a neat, predictable format for my JSON errors, maybe I should use this one? |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1875/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1363552780 | I_kwDOBm6k_c5RRioM | 1805 | truncate_cells_html does not work for links? | CharlesNepote 562352 | open | 0 | 7 | 2022-09-06T16:41:29Z | 2022-10-03T09:18:06Z | NONE | We have many links inside our dataset (please don't blame us ;-). When I use Eg. https://images.openfoodfacts.org/images/products/000/000/000/088/nutrition_fr.5.200.jpg (87 chars) is not truncated:

IMHO It would make sense that links should be treated as HTML. The link should work of course, but Datasette could truncate it: https://images.openfoodfacts.org/images/products/00[...].jpg |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1805/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

reopened | |||||||

| 1384273985 | I_kwDOBm6k_c5SglhB | 1817 | Expose `sql` and `params` arguments to various plugin hooks | simonw 9599 | open | 0 | 7 | 2022-09-23T20:34:45Z | 2022-09-27T00:27:53Z | OWNER | On Discord: https://discord.com/channels/823971286308356157/996877076982415491/1022784534363787305

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1817/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1082651698 | I_kwDOCGYnMM5Ah_Qy | 358 | Support for CHECK constraints | luxint 11597658 | open | 0 | 7 | 2021-12-16T21:19:45Z | 2022-09-25T07:15:59Z | NONE | Hi, I noticed the |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/358/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 728905098 | MDU6SXNzdWU3Mjg5MDUwOTg= | 1048 | Documentation and unit tests for urls.row() urls.row_blob() methods | simonw 9599 | open | 0 | 7 | 2020-10-25T00:13:53Z | 2022-07-10T16:23:57Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1048/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 734777631 | MDU6SXNzdWU3MzQ3Nzc2MzE= | 1080 | "View all" option for facets, to provide a (paginated) list of ALL of the facet counts plus a link to view them | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 7 | 2020-11-02T19:55:06Z | 2022-02-04T06:25:18Z | OWNER | Can use |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1080/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1100015398 | I_kwDOBm6k_c5BkOcm | 1591 | Maybe let plugins define custom serve options? | simonw 9599 | open | 0 | 7 | 2022-01-12T08:18:47Z | 2022-01-15T11:56:59Z | OWNER | https://twitter.com/psychemedia/status/1481171650934714370

I've thought something like this might be useful for other plugins in the past, too. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1591/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 520681725 | MDU6SXNzdWU1MjA2ODE3MjU= | 621 | Syntax for ?_through= that works as a form field | simonw 9599 | open | 0 | 7 | 2019-11-11T00:19:03Z | 2021-12-18T01:42:33Z | OWNER | The current syntax for This means you can't target a form field at it. We should be able to support both - |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/621/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 972918533 | MDU6SXNzdWU5NzI5MTg1MzM= | 1438 | Query page .csv and .json links are not correctly URL-encoded on Vercel under unknown specific conditions | simonw 9599 | open | 0 | 7 | 2021-08-17T17:35:36Z | 2021-08-18T00:22:23Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette-publish-vercel/issues/48#issuecomment-900497579 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1438/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 722816436 | MDU6SXNzdWU3MjI4MTY0MzY= | 186 | .extract() shouldn't extract null values | simonw 9599 | open | 0 | 7 | 2020-10-16T02:41:08Z | 2021-08-12T12:32:14Z | OWNER | This almost works, but it creates a rogue |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/186/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 642388564 | MDU6SXNzdWU2NDIzODg1NjQ= | 858 | publish heroku does not work on Windows 10 | simonlau 870912 | open | 0 | 7 | 2020-06-20T14:40:28Z | 2021-06-10T17:44:09Z | NONE | When executing "datasette publish heroku schools.db" on Windows 10, I get the following error

to

as well as the other |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/858/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 672421411 | MDU6SXNzdWU2NzI0MjE0MTE= | 916 | Support reverse pagination (previous page, has-previous-items) | simonw 9599 | open | 0 | 7 | 2020-08-04T00:32:06Z | 2021-04-03T23:43:11Z | OWNER | I need this for

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/916/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 688670158 | MDU6SXNzdWU2ODg2NzAxNTg= | 147 | SQLITE_MAX_VARS maybe hard-coded too low | simonwiles 96218 | open | 0 | 7 | 2020-08-30T07:26:45Z | 2021-02-15T21:27:55Z | CONTRIBUTOR | I came across this while about to open an issue and PR against the documentation for As mentioned in #145, while:

it is common that it is increased at compile time. Debian-based systems, for example, seem to ship with a version of sqlite compiled with SQLITE_MAX_VARIABLE_NUMBER set to 250,000, and I believe this is the case for homebrew installations too. In working to understand what Unfortunately, it seems that Obviously this couldn't be relied upon in |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/147/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

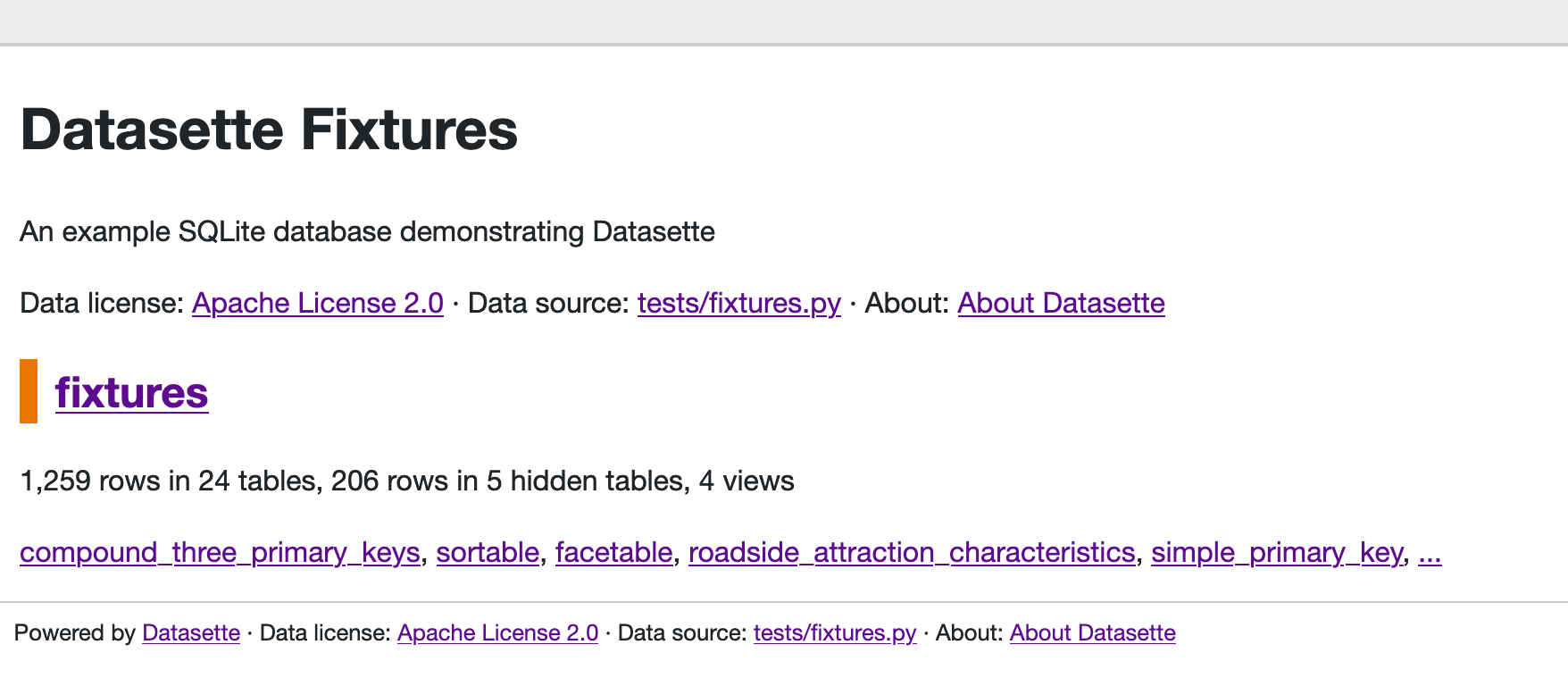

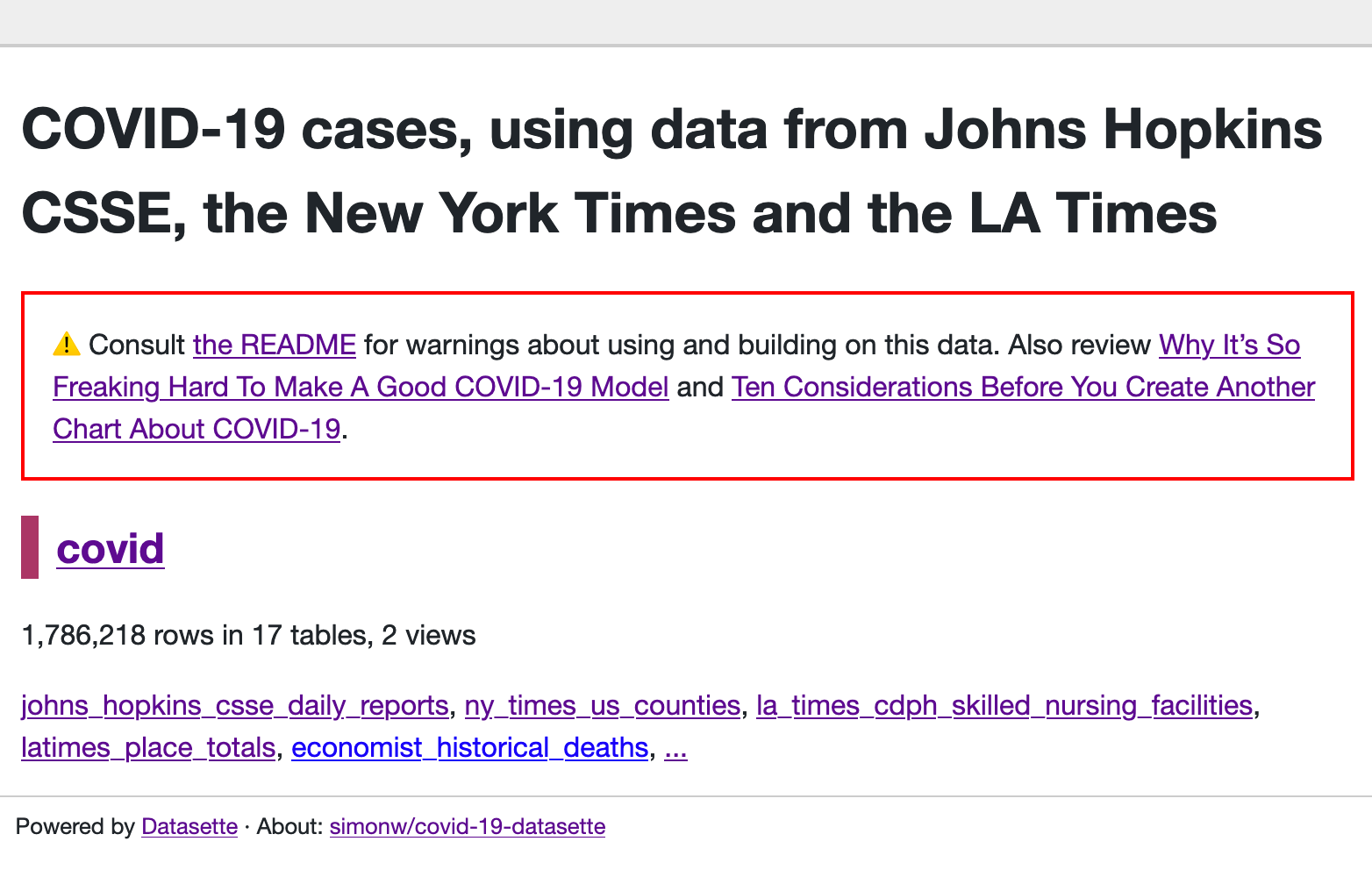

| 714377268 | MDU6SXNzdWU3MTQzNzcyNjg= | 991 | Redesign application homepage | simonw 9599 | open | 0 | 7 | 2020-10-04T18:48:45Z | 2021-01-26T19:06:36Z | OWNER | Most Datasette instances only host a single database, but the current homepage design assumes that it should leave plenty of space for multiple databases:

Reconsider this design - should the default show more information? The Covid-19 Datasette homepage looks particularly sparse I think: https://covid-19.datasettes.com/

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/991/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 712984738 | MDU6SXNzdWU3MTI5ODQ3Mzg= | 987 | Documented HTML hooks for JavaScript plugin authors | simonw 9599 | open | 0 | 7 | 2020-10-01T16:10:14Z | 2021-01-25T04:00:03Z | OWNER | In #981 I added |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/987/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 703218756 | MDU6SXNzdWU3MDMyMTg3NTY= | 50 | Commands for making authenticated API calls | simonw 9599 | open | 0 | 7 | 2020-09-17T02:39:07Z | 2020-10-19T05:01:29Z | MEMBER | Similar to |

github-to-sqlite 207052882 | issue | {

"url": "https://api.github.com/repos/dogsheep/github-to-sqlite/issues/50/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 705215230 | MDU6SXNzdWU3MDUyMTUyMzA= | 26 | Pagination | simonw 9599 | open | 0 | 7 | 2020-09-21T00:14:37Z | 2020-09-21T02:55:54Z | MEMBER | Useful for #16 (timeline view) since you can now filter to just the items on a specific day - but if there are more than 50 items you can't see them all. |

dogsheep-beta 197431109 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-beta/issues/26/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 327365110 | MDU6SXNzdWUzMjczNjUxMTA= | 294 | inspect should record column types | simonw 9599 | open | 0 | 7 | 2018-05-29T15:10:41Z | 2019-06-28T16:45:28Z | OWNER | For each table we want to know the columns, their order and what type they are. I'm going to break with SQLite defaults a little on this one and allow datasette to define additional types - to start with just a Possible JSON design: Refs #276 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/294/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Advanced export

JSON shape: default, array, newline-delimited, object

CREATE TABLE [issues] (

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[number] INTEGER,

[title] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[state] TEXT,

[locked] INTEGER,

[assignee] INTEGER REFERENCES [users]([id]),

[milestone] INTEGER REFERENCES [milestones]([id]),

[comments] INTEGER,

[created_at] TEXT,

[updated_at] TEXT,

[closed_at] TEXT,

[author_association] TEXT,

[pull_request] TEXT,

[body] TEXT,

[repo] INTEGER REFERENCES [repos]([id]),

[type] TEXT

, [active_lock_reason] TEXT, [performed_via_github_app] TEXT, [reactions] TEXT, [draft] INTEGER, [state_reason] TEXT);

CREATE INDEX [idx_issues_repo]

ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]

ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]

ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]

ON [issues] ([user]);