github

| html_url | issue_url | id | node_id | user | created_at | updated_at | author_association | body | reactions | issue | performed_via_github_app |
|---|---|---|---|---|---|---|---|---|---|---|---|

| https://github.com/dogsheep/github-to-sqlite/issues/79#issuecomment-1847317568 | https://api.github.com/repos/dogsheep/github-to-sqlite/issues/79 | 1847317568 | IC_kwDODFdgUs5uG9RA | 23789 | 2023-12-08T14:50:13Z | 2023-12-08T14:50:13Z | NONE | Adding `&per_page=100` would reduce the number of API requests by 3x. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1570375808 | |

| https://github.com/simonw/sqlite-utils/pull/604#issuecomment-1846560096 | https://api.github.com/repos/simonw/sqlite-utils/issues/604 | 1846560096 | IC_kwDOCGYnMM5uEEVg | 9599 | 2023-12-08T05:16:44Z | 2023-12-08T05:17:20Z | OWNER | Also tested this manually like so: ```bash sqlite-utils create-table strict.db strictint id integer size integer --strict sqlite-utils create-table strict.db notstrictint id integer size integer sqlite-utils install sqlite-utils-shell sqlite-utils shell strict.db ``` ``` Attached to strict.db Type 'exit' to exit. sqlite-utils> insert into strictint (size) values (4); 1 row affected sqlite-utils> insert into strictint (size) values ('four'); An error occurred: cannot store TEXT value in INTEGER column strictint.size sqlite-utils> insert into notstrictint (size) values ('four'); 1 row affected sqlite-utils> commit; Done ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2001006157 | |

| https://github.com/simonw/sqlite-utils/issues/603#issuecomment-1846555822 | https://api.github.com/repos/simonw/sqlite-utils/issues/603 | 1846555822 | IC_kwDOCGYnMM5uEDSu | 9599 | 2023-12-08T05:09:55Z | 2023-12-08T05:10:31Z | OWNER | I'm unable to replicate this issue. This is with a fresh install of `sqlite-utils==3.35.2`: ``` (base) ~ python3.12 Python 3.12.0 (v3.12.0:0fb18b02c8, Oct 2 2023, 09:45:56) [Clang 13.0.0 (clang-1300.0.29.30)] on darwin Type "help", "copyright", "credits" or "license" for more information. >>> import sqlite_utils >>> db = sqlite_utils.Database(memory=True) >>> db["foo"].insert({"bar": 1}) <Table foo (bar)> >>> import sys >>> sys.version '3.12.0 (v3.12.0:0fb18b02c8, Oct 2 2023, 09:45:56) [Clang 13.0.0 (clang-1300.0.29.30)]' ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1988525411 | |

| https://github.com/simonw/sqlite-utils/issues/605#issuecomment-1846554637 | https://api.github.com/repos/simonw/sqlite-utils/issues/605 | 1846554637 | IC_kwDOCGYnMM5uEDAN | 9599 | 2023-12-08T05:07:54Z | 2023-12-08T05:07:54Z | OWNER | Thanks for opening an issue - this should help future Google searchers figure out what's going on here. Another approach here could be to store large integers as `TEXT` in SQLite (or even as `BLOB`). Both storing as `REAL` and storing as `TEXT/BLOB` feel nasty to me, but it looks like SQLite has a hard upper limit of 9223372036854775807 for integers. | {

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2007893839 | |

| https://github.com/simonw/sqlite-utils/pull/604#issuecomment-1843585454 | https://api.github.com/repos/simonw/sqlite-utils/issues/604 | 1843585454 | IC_kwDOCGYnMM5t4uGu | 22429695 | 2023-12-06T19:48:26Z | 2023-12-08T05:05:03Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/sqlite-utils/pull/604?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`9286c1b`)](https://app.codecov.io/gh/simonw/sqlite-utils/commit/9286c1ba432e890b1bb4b2a1f847b15364c1fa18?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.77% compared to head [(`1698a9d`)](https://app.codecov.io/gh/simonw/sqlite-utils/pull/604?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.72%. > Report is 1 commits behind head on main. > :exclamation: Current head 1698a9d differs from pull request most recent head 61c6e26. Consider uploading reports for the commit 61c6e26 to get more accurate results <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #604 +/- ## ========================================== - Coverage 95.77% 95.72% -0.06% ========================================== Files 8 8 Lines 2842 2852 +10 ========================================== + Hits 2722 2730 +8 - Misses 120 122 +2 ``` </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/sqlite-utils/pull/604?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? [Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2001006157 | {

"id": 254,

"slug": "codecov",

"node_id": "MDM6QXBwMjU0",

"owner": {

"login": "codecov",

"id": 8226205,

"node_id": "MDEyOk9yZ2FuaXphdGlvbjgyMjYyMDU=",

"avatar_url": "https://avatars.githubusercontent.com/u/8226205?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/codecov",

"html_url": "https://github.com/codecov",

"followers_url": "https://api.github.com/users/codecov/followers",

"following_url": "https://api.github.com/users/codecov/following{/other_user}",

"gists_url": "https://api.github.com/users/codecov/gists{/gist_id}",

"starred_url": "https://api.github.com/users/codecov/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/codecov/subscriptions",

"organizations_url": "https://api.github.com/users/codecov/orgs",

"repos_url": "https://api.github.com/users/codecov/repos",

"events_url": "https://api.github.com/users/codecov/events{/privacy}",

"received_events_url": "https://api.github.com/users/codecov/received_events",

"type": "Organization",

"site_admin": false

},

"name": "Codecov",

"description": "Codecov provides highly integrated tools to group, merge, archive and compare coverage reports. Whether your team is comparing changes in a pull request or reviewing a single commit, Codecov will improve the code review workflow and quality.\r\n\r\n## Code coverage done right.\u00ae\r\n\r\n1. Upload coverage reports from your CI builds.\r\n2. Codecov merges all builds and languages into one beautiful coherent report.\r\n3. Get commit statuses, pull request comments and coverage overlay via our browser extension.\r\n\r\nWhen Codecov merges your uploads it keeps track of the CI provider (inc. build details) and user specified context, e.g. `#unittest` ~ `#smoketest` or `#oldcode` ~ `#newcode`. You can track the `#unittest` coverage independently of other groups. [Learn more here](\r\nhttp://docs.codecov.io/docs/flags)\r\n\r\nThrough **Codecov's Browser Extension** reports overlay directly in GitHub UI to assist in code review in [Chrome](https://chrome.google.com/webstore/detail/codecov/gedikamndpbemklijjkncpnolildpbgo) or Firefox (https://addons.mozilla.org/en-US/firefox/addon/codecov/)\r\n\r\n*Highly detailed* **pull request comments** and *customizable* **commit statuses** will improve your team's workflow and code coverage incrementally.\r\n\r\n**File backed configuration** all through the `codecov.yml`. \r\n\r\n## FAQ\r\n- Do you **merge multiple uploads** to the same commit? **Yes**\r\n- Do you **support multiple languages** in the same project? **Yes**\r\n- Can you **group coverage reports** by project and/or test type? **Yes**\r\n- How does **pricing** work? Only paid users can view reports and post statuses/comments. ",

"external_url": "https://codecov.io",

"html_url": "https://github.com/apps/codecov",

"created_at": "2016-09-25T14:18:27Z",

"updated_at": "2023-09-08T15:29:16Z",

"permissions": {

"administration": "read",

"checks": "write",

"contents": "read",

"emails": "read",

"issues": "read",

"members": "read",

"metadata": "read",

"pull_requests": "write",

"statuses": "write"

},

"events": [

"check_run",

"check_suite",

"create",

"delete",

"fork",

"member",

"membership",

"organization",

"public",

"pull_request",

"push",

"release",

"repository",

"status",

"team_add"

]

} |

| https://github.com/simonw/datasette/issues/2214#issuecomment-1844819002 | https://api.github.com/repos/simonw/datasette/issues/2214 | 1844819002 | IC_kwDOBm6k_c5t9bQ6 | 2874 | 2023-12-07T07:36:33Z | 2023-12-07T07:36:33Z | NONE | If I uncheck `expand labels` in the Advanced CSV export dialog, the error does not occur. Re-checking that box and re-running the export does cause the error to occur.  | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2029908157 | |

| https://github.com/simonw/sqlite-utils/pull/604#issuecomment-1843975536 | https://api.github.com/repos/simonw/sqlite-utils/issues/604 | 1843975536 | IC_kwDOCGYnMM5t6NVw | 16437338 | 2023-12-07T01:17:05Z | 2023-12-07T01:17:05Z | CONTRIBUTOR | Apologies - I pushed a fix that addresses the mypy failures. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2001006157 | |

| https://github.com/simonw/sqlite-utils/pull/604#issuecomment-1843586503 | https://api.github.com/repos/simonw/sqlite-utils/issues/604 | 1843586503 | IC_kwDOCGYnMM5t4uXH | 9599 | 2023-12-06T19:49:10Z | 2023-12-06T19:49:29Z | OWNER | This looks really great on first glance - design is good, implementation is solid, tests and documentation look great. Looks like a couple of `mypy` failures in the tests at the moment: ``` mypy sqlite_utils tests sqlite_utils/db.py:543: error: Incompatible types in assignment (expression has type "type[Table]", variable has type "type[View]") [assignment] tests/test_lookup.py:156: error: Name "test_lookup_new_table" already defined on line 5 [no-redef] Found 2 errors in 2 files (checked 54 source files) Error: Process completed with exit code 1. ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2001006157 | |

| https://github.com/simonw/sqlite-utils/issues/606#issuecomment-1843579184 | https://api.github.com/repos/simonw/sqlite-utils/issues/606 | 1843579184 | IC_kwDOCGYnMM5t4skw | 9599 | 2023-12-06T19:43:55Z | 2023-12-06T19:43:55Z | OWNER | Updated documentation: - https://sqlite-utils.datasette.io/en/latest/cli.html#cli-add-column - https://sqlite-utils.datasette.io/en/latest/cli-reference.html#add-column | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2029161033 | |

| https://github.com/simonw/sqlite-utils/issues/606#issuecomment-1843465748 | https://api.github.com/repos/simonw/sqlite-utils/issues/606 | 1843465748 | IC_kwDOCGYnMM5t4Q4U | 9599 | 2023-12-06T18:36:51Z | 2023-12-06T18:36:51Z | OWNER | I'll add `bytes` too - `float` already works. This makes sense because when you are working with the Python API you use `str` and `float` and `bytes` and `int` to specify column types. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2029161033 | |

| https://github.com/simonw/datasette/issues/2213#issuecomment-1843072926 | https://api.github.com/repos/simonw/datasette/issues/2213 | 1843072926 | IC_kwDOBm6k_c5t2w-e | 536941 | 2023-12-06T15:05:44Z | 2023-12-06T15:05:44Z | CONTRIBUTOR | it probably does not make sense to gzip large sqlite database files on the fly. it can take many seconds to gzip a large file and you either have to have this big thing in memory, or write it to disk, which some deployment environments will not like. i wonder if it would make sense to gzip the databases as part of the datasette publish process. it would be very cool to statically serve those as if they dynamically zipped (i.e. serve the filename example.db, not example.db.zip, and rely on the browser to expand). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

2028698018 | |

| https://github.com/simonw/datasette/issues/670#issuecomment-1816642044 | https://api.github.com/repos/simonw/datasette/issues/670 | 1816642044 | IC_kwDOBm6k_c5sR8H8 | 16142258 | 2023-11-17T15:32:20Z | 2023-11-17T15:32:20Z | NONE | Any progress on this? It would be very helpful on my end as well. Thanks! | {

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

564833696 | |

| https://github.com/simonw/sqlite-utils/issues/344#issuecomment-1815825863 | https://api.github.com/repos/simonw/sqlite-utils/issues/344 | 1815825863 | IC_kwDOCGYnMM5sO03H | 16437338 | 2023-11-17T06:44:49Z | 2023-11-17T06:44:49Z | CONTRIBUTOR | hello Simon, I've added more STRICT table support per https://github.com/simonw/sqlite-utils/issues/344#issuecomment-982014776 in changeset https://github.com/simonw/sqlite-utils/commit/e4b9b582cdb4e48430865f8739f341bc8017c1e4. It also fixes table.transform() to preserve STRICT mode. Please pull if you deem appropriate. Thanks! | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1066474200 | |

| https://github.com/simonw/datasette/pull/2209#issuecomment-1812753347 | https://api.github.com/repos/simonw/datasette/issues/2209 | 1812753347 | IC_kwDOBm6k_c5sDGvD | 22429695 | 2023-11-15T15:31:12Z | 2023-11-15T15:31:12Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/datasette/pull/2209?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`452a587`)](https://app.codecov.io/gh/simonw/datasette/commit/452a587e236ef642cbc6ae345b58767ea8420cb5?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69% compared to head [(`c88414b`)](https://app.codecov.io/gh/simonw/datasette/pull/2209?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69%. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #2209 +/- ## ======================================= Coverage 92.69% 92.69% ======================================= Files 40 40 Lines 6047 6047 ======================================= Hits 5605 5605 Misses 442 442 ``` </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/datasette/pull/2209?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? [Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1994861266 | {

"id": 254,

"slug": "codecov",

"node_id": "MDM6QXBwMjU0",

"owner": {

"login": "codecov",

"id": 8226205,

"node_id": "MDEyOk9yZ2FuaXphdGlvbjgyMjYyMDU=",

"avatar_url": "https://avatars.githubusercontent.com/u/8226205?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/codecov",

"html_url": "https://github.com/codecov",

"followers_url": "https://api.github.com/users/codecov/followers",

"following_url": "https://api.github.com/users/codecov/following{/other_user}",

"gists_url": "https://api.github.com/users/codecov/gists{/gist_id}",

"starred_url": "https://api.github.com/users/codecov/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/codecov/subscriptions",

"organizations_url": "https://api.github.com/users/codecov/orgs",

"repos_url": "https://api.github.com/users/codecov/repos",

"events_url": "https://api.github.com/users/codecov/events{/privacy}",

"received_events_url": "https://api.github.com/users/codecov/received_events",

"type": "Organization",

"site_admin": false

},

"name": "Codecov",

"description": "Codecov provides highly integrated tools to group, merge, archive and compare coverage reports. Whether your team is comparing changes in a pull request or reviewing a single commit, Codecov will improve the code review workflow and quality.\r\n\r\n## Code coverage done right.\u00ae\r\n\r\n1. Upload coverage reports from your CI builds.\r\n2. Codecov merges all builds and languages into one beautiful coherent report.\r\n3. Get commit statuses, pull request comments and coverage overlay via our browser extension.\r\n\r\nWhen Codecov merges your uploads it keeps track of the CI provider (inc. build details) and user specified context, e.g. `#unittest` ~ `#smoketest` or `#oldcode` ~ `#newcode`. You can track the `#unittest` coverage independently of other groups. [Learn more here](\r\nhttp://docs.codecov.io/docs/flags)\r\n\r\nThrough **Codecov's Browser Extension** reports overlay directly in GitHub UI to assist in code review in [Chrome](https://chrome.google.com/webstore/detail/codecov/gedikamndpbemklijjkncpnolildpbgo) or Firefox (https://addons.mozilla.org/en-US/firefox/addon/codecov/)\r\n\r\n*Highly detailed* **pull request comments** and *customizable* **commit statuses** will improve your team's workflow and code coverage incrementally.\r\n\r\n**File backed configuration** all through the `codecov.yml`. \r\n\r\n## FAQ\r\n- Do you **merge multiple uploads** to the same commit? **Yes**\r\n- Do you **support multiple languages** in the same project? **Yes**\r\n- Can you **group coverage reports** by project and/or test type? **Yes**\r\n- How does **pricing** work? Only paid users can view reports and post statuses/comments. ",

"external_url": "https://codecov.io",

"html_url": "https://github.com/apps/codecov",

"created_at": "2016-09-25T14:18:27Z",

"updated_at": "2023-09-08T15:29:16Z",

"permissions": {

"administration": "read",

"checks": "write",

"contents": "read",

"emails": "read",

"issues": "read",

"members": "read",

"metadata": "read",

"pull_requests": "write",

"statuses": "write"

},

"events": [

"check_run",

"check_suite",

"create",

"delete",

"fork",

"member",

"membership",

"organization",

"public",

"pull_request",

"push",

"release",

"repository",

"status",

"team_add"

]

} |

| https://github.com/simonw/datasette/pull/2209#issuecomment-1812750369 | https://api.github.com/repos/simonw/datasette/issues/2209 | 1812750369 | IC_kwDOBm6k_c5sDGAh | 198537 | 2023-11-15T15:29:37Z | 2023-11-15T15:29:37Z | CONTRIBUTOR | Looks like tests are passing now but there is an issue with yaml loading and/or cog. https://github.com/simonw/datasette/actions/runs/6879299298/job/18710911166?pr=2209 | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1994861266 | |

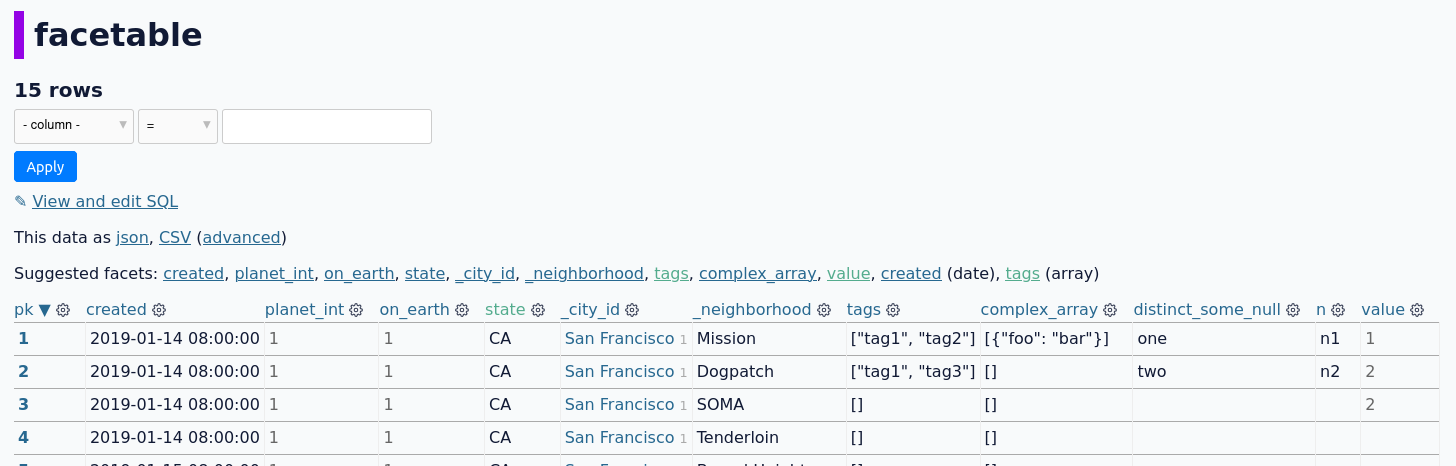

| https://github.com/simonw/datasette/pull/2209#issuecomment-1812623778 | https://api.github.com/repos/simonw/datasette/issues/2209 | 1812623778 | IC_kwDOBm6k_c5sCnGi | 198537 | 2023-11-15T14:22:42Z | 2023-11-15T15:24:09Z | CONTRIBUTOR | Whoops, looks like I forgot to check for other places where the 'facetable' table is used in the tests. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1994861266 | |

| https://github.com/simonw/datasette/issues/2208#issuecomment-1812617851 | https://api.github.com/repos/simonw/datasette/issues/2208 | 1812617851 | IC_kwDOBm6k_c5sClp7 | 198537 | 2023-11-15T14:18:58Z | 2023-11-15T14:18:58Z | CONTRIBUTOR | Without aliases:  The proposed fix in #2209 also works when the 'value' column is actually facetable (just added another value in the 'value' column).  | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1994857251 | |

| https://github.com/simonw/datasette/pull/2206#issuecomment-1801888957 | https://api.github.com/repos/simonw/datasette/issues/2206 | 1801888957 | IC_kwDOBm6k_c5rZqS9 | 22429695 | 2023-11-08T13:26:13Z | 2023-11-08T13:26:13Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/datasette/pull/2206?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`452a587`)](https://app.codecov.io/gh/simonw/datasette/commit/452a587e236ef642cbc6ae345b58767ea8420cb5?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69% compared to head [(`eec10df`)](https://app.codecov.io/gh/simonw/datasette/pull/2206?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69%. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #2206 +/- ## ======================================= Coverage 92.69% 92.69% ======================================= Files 40 40 Lines 6047 6047 ======================================= Hits 5605 5605 Misses 442 442 ``` </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/datasette/pull/2206?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? [Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1983600865 | {

"id": 254,

"slug": "codecov",

"node_id": "MDM6QXBwMjU0",

"owner": {

"login": "codecov",

"id": 8226205,

"node_id": "MDEyOk9yZ2FuaXphdGlvbjgyMjYyMDU=",

"avatar_url": "https://avatars.githubusercontent.com/u/8226205?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/codecov",

"html_url": "https://github.com/codecov",

"followers_url": "https://api.github.com/users/codecov/followers",

"following_url": "https://api.github.com/users/codecov/following{/other_user}",

"gists_url": "https://api.github.com/users/codecov/gists{/gist_id}",

"starred_url": "https://api.github.com/users/codecov/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/codecov/subscriptions",

"organizations_url": "https://api.github.com/users/codecov/orgs",

"repos_url": "https://api.github.com/users/codecov/repos",

"events_url": "https://api.github.com/users/codecov/events{/privacy}",

"received_events_url": "https://api.github.com/users/codecov/received_events",

"type": "Organization",

"site_admin": false

},

"name": "Codecov",

"description": "Codecov provides highly integrated tools to group, merge, archive and compare coverage reports. Whether your team is comparing changes in a pull request or reviewing a single commit, Codecov will improve the code review workflow and quality.\r\n\r\n## Code coverage done right.\u00ae\r\n\r\n1. Upload coverage reports from your CI builds.\r\n2. Codecov merges all builds and languages into one beautiful coherent report.\r\n3. Get commit statuses, pull request comments and coverage overlay via our browser extension.\r\n\r\nWhen Codecov merges your uploads it keeps track of the CI provider (inc. build details) and user specified context, e.g. `#unittest` ~ `#smoketest` or `#oldcode` ~ `#newcode`. You can track the `#unittest` coverage independently of other groups. [Learn more here](\r\nhttp://docs.codecov.io/docs/flags)\r\n\r\nThrough **Codecov's Browser Extension** reports overlay directly in GitHub UI to assist in code review in [Chrome](https://chrome.google.com/webstore/detail/codecov/gedikamndpbemklijjkncpnolildpbgo) or Firefox (https://addons.mozilla.org/en-US/firefox/addon/codecov/)\r\n\r\n*Highly detailed* **pull request comments** and *customizable* **commit statuses** will improve your team's workflow and code coverage incrementally.\r\n\r\n**File backed configuration** all through the `codecov.yml`. \r\n\r\n## FAQ\r\n- Do you **merge multiple uploads** to the same commit? **Yes**\r\n- Do you **support multiple languages** in the same project? **Yes**\r\n- Can you **group coverage reports** by project and/or test type? **Yes**\r\n- How does **pricing** work? Only paid users can view reports and post statuses/comments. ",

"external_url": "https://codecov.io",

"html_url": "https://github.com/apps/codecov",

"created_at": "2016-09-25T14:18:27Z",

"updated_at": "2023-09-08T15:29:16Z",

"permissions": {

"administration": "read",

"checks": "write",

"contents": "read",

"emails": "read",

"issues": "read",

"members": "read",

"metadata": "read",

"pull_requests": "write",

"statuses": "write"

},

"events": [

"check_run",

"check_suite",

"create",

"delete",

"fork",

"member",

"membership",

"organization",

"public",

"pull_request",

"push",

"release",

"repository",

"status",

"team_add"

]

} |

| https://github.com/simonw/datasette/pull/2202#issuecomment-1801876943 | https://api.github.com/repos/simonw/datasette/issues/2202 | 1801876943 | IC_kwDOBm6k_c5rZnXP | 49699333 | 2023-11-08T13:19:00Z | 2023-11-08T13:19:00Z | CONTRIBUTOR | Superseded by #2206. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1959278971 | {

"id": 29110,

"slug": "dependabot",

"node_id": "MDM6QXBwMjkxMTA=",

"owner": {

"login": "github",

"id": 9919,

"node_id": "MDEyOk9yZ2FuaXphdGlvbjk5MTk=",

"avatar_url": "https://avatars.githubusercontent.com/u/9919?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/github",

"html_url": "https://github.com/github",

"followers_url": "https://api.github.com/users/github/followers",

"following_url": "https://api.github.com/users/github/following{/other_user}",

"gists_url": "https://api.github.com/users/github/gists{/gist_id}",

"starred_url": "https://api.github.com/users/github/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/github/subscriptions",

"organizations_url": "https://api.github.com/users/github/orgs",

"repos_url": "https://api.github.com/users/github/repos",

"events_url": "https://api.github.com/users/github/events{/privacy}",

"received_events_url": "https://api.github.com/users/github/received_events",

"type": "Organization",

"site_admin": false

},

"name": "Dependabot",

"description": "",

"external_url": "https://dependabot-api.githubapp.com",

"html_url": "https://github.com/apps/dependabot",

"created_at": "2019-04-16T22:34:25Z",

"updated_at": "2023-10-12T13:35:09Z",

"permissions": {

"checks": "write",

"contents": "write",

"issues": "write",

"members": "read",

"metadata": "read",

"pull_requests": "write",

"statuses": "read",

"vulnerability_alerts": "read",

"workflows": "write"

},

"events": [

"check_suite",

"issues",

"issue_comment",

"label",

"pull_request",

"pull_request_review",

"pull_request_review_comment",

"repository"

]

} |

| https://github.com/simonw/datasette/issues/2205#issuecomment-1794054390 | https://api.github.com/repos/simonw/datasette/issues/2205 | 1794054390 | IC_kwDOBm6k_c5q7xj2 | 9599 | 2023-11-06T04:09:43Z | 2023-11-06T04:10:34Z | OWNER | That `keep_blank_values=True` is from https://github.com/simonw/datasette/commit/0934844c0b6d124163d0185fb6a41ba5a71433da Commit message: > request.post_vars() no longer discards empty values Relevant test: https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/tests/test_internals_request.py#L19-L27 | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1978023780 | |

| https://github.com/simonw/datasette/issues/2205#issuecomment-1794052079 | https://api.github.com/repos/simonw/datasette/issues/2205 | 1794052079 | IC_kwDOBm6k_c5q7w_v | 9599 | 2023-11-06T04:06:05Z | 2023-11-06T04:08:50Z | OWNER | It should return a `MultiParams`: https://github.com/simonw/datasette/blob/452a587e236ef642cbc6ae345b58767ea8420cb5/datasette/utils/__init__.py#L900-L917 Change needs to be made before 1.0. ```python return MultiParams(urllib.parse.parse_qs(body.decode("utf-8"))) ``` Need to remember why I was using `keep_blank_values=True` there and check that using `MultiParams` doesn't conflict with that reason. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1978023780 | |

| https://github.com/simonw/datasette/issues/2205#issuecomment-1793880738 | https://api.github.com/repos/simonw/datasette/issues/2205 | 1793880738 | IC_kwDOBm6k_c5q7HKi | 9599 | 2023-11-05T23:26:14Z | 2023-11-05T23:26:14Z | OWNER | I found this problem while trying to use WTForms with this pattern: ```python choices = [(col, col) for col in await db.table_columns(table)] class ConfigForm(Form): template = TextAreaField("Template") api_token = PasswordField("OpenAI API token") columns = MultiCheckboxField('Columns', choices=choices) ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1978023780 | |

| https://github.com/simonw/datasette/issues/1415#issuecomment-1793787454 | https://api.github.com/repos/simonw/datasette/issues/1415 | 1793787454 | IC_kwDOBm6k_c5q6wY- | 45269373 | 2023-11-05T16:44:49Z | 2023-11-05T16:46:59Z | NONE | thanks for documenting this @bendnorman! got stuck at exactly the same point `gcloud builds submit ... returned non-zero exit status 1`, without a clue why this was happening. i now managed to get the github action to deploy datasette by assigning the following roles to the service account: `roles/run.admin`, `roles/storage.admin`, `roles/cloudbuild.builds.builder`, `roles/viewer`, `roles/iam.serviceAccountUser`. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

959137143 | |

| https://github.com/simonw/sqlite-utils/pull/591#issuecomment-1793278279 | https://api.github.com/repos/simonw/sqlite-utils/issues/591 | 1793278279 | IC_kwDOCGYnMM5q40FH | 9599 | 2023-11-04T00:58:03Z | 2023-11-04T00:58:03Z | OWNER | I'm going to abandon this PR and ship the 3.12 testing change directly to `main`. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1884335789 | |

| https://github.com/simonw/sqlite-utils/pull/591#issuecomment-1708693020 | https://api.github.com/repos/simonw/sqlite-utils/issues/591 | 1708693020 | IC_kwDOCGYnMM5l2JYc | 22429695 | 2023-09-06T16:14:03Z | 2023-11-04T00:54:25Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/sqlite-utils/pull/591?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`347fdc8`)](https://app.codecov.io/gh/simonw/sqlite-utils/commit/347fdc865e91b8d3410f49a5c9d5b499fbb594c1?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.74% compared to head [(`1f14df1`)](https://app.codecov.io/gh/simonw/sqlite-utils/pull/591?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.74%. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #591 +/- ## ======================================= Coverage 95.74% 95.74% ======================================= Files 8 8 Lines 2842 2842 ======================================= Hits 2721 2721 Misses 121 121 ``` </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/sqlite-utils/pull/591?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? [Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1884335789 | |

| https://github.com/simonw/sqlite-utils/pull/596#issuecomment-1793274869 | https://api.github.com/repos/simonw/sqlite-utils/issues/596 | 1793274869 | IC_kwDOCGYnMM5q4zP1 | 9599 | 2023-11-04T00:47:55Z | 2023-11-04T00:47:55Z | OWNER | Thanks! | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1919296686 | |

| https://github.com/simonw/sqlite-utils/pull/598#issuecomment-1793274485 | https://api.github.com/repos/simonw/sqlite-utils/issues/598 | 1793274485 | IC_kwDOCGYnMM5q4zJ1 | 9599 | 2023-11-04T00:46:55Z | 2023-11-04T00:46:55Z | OWNER | Manually tested. Before:  After:  | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1926729132 | |

| https://github.com/simonw/sqlite-utils/issues/433#issuecomment-1793274350 | https://api.github.com/repos/simonw/sqlite-utils/issues/433 | 1793274350 | IC_kwDOCGYnMM5q4zHu | 9599 | 2023-11-04T00:46:30Z | 2023-11-04T00:46:30Z | OWNER | And a GIF of the fix after applying: - #598  | {

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1239034903 | |

| https://github.com/simonw/sqlite-utils/issues/433#issuecomment-1793273968 | https://api.github.com/repos/simonw/sqlite-utils/issues/433 | 1793273968 | IC_kwDOCGYnMM5q4zBw | 9599 | 2023-11-04T00:45:19Z | 2023-11-04T00:45:19Z | OWNER | Here's an animated GIF that demonstrates the bug:  | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1239034903 | |

| https://github.com/simonw/sqlite-utils/pull/598#issuecomment-1793272429 | https://api.github.com/repos/simonw/sqlite-utils/issues/598 | 1793272429 | IC_kwDOCGYnMM5q4ypt | 9599 | 2023-11-04T00:40:34Z | 2023-11-04T00:40:34Z | OWNER | Thanks! | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1926729132 | |

| https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793269219 | https://api.github.com/repos/simonw/sqlite-utils/issues/600 | 1793269219 | IC_kwDOCGYnMM5q4x3j | 9599 | 2023-11-04T00:34:33Z | 2023-11-04T00:34:33Z | OWNER | The GIS tests now pass in that container too: ```bash pytest tests/test_gis.py ``` ``` ======================== test session starts ========================= platform linux -- Python 3.10.12, pytest-7.4.3, pluggy-1.3.0 rootdir: /tmp/sqlite-utils plugins: hypothesis-6.88.1 collected 12 items tests/test_gis.py ............ [100%] ========================= 12 passed in 0.48s ========================= ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1977004379 | |

| https://github.com/simonw/sqlite-utils/issues/599#issuecomment-1793268750 | https://api.github.com/repos/simonw/sqlite-utils/issues/599 | 1793268750 | IC_kwDOCGYnMM5q4xwO | 9599 | 2023-11-04T00:33:25Z | 2023-11-04T00:33:25Z | OWNER | See details of how I tested this here: - https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793268126 Short version: having applied this fix, the following command (on simulated `aarch64`): ```bash sqlite-utils memory "select spatialite_version()" --load-extension=spatialite ``` Outputs: ```json [{"spatialite_version()": "5.0.1"}] ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1976986318 | |

| https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793268126 | https://api.github.com/repos/simonw/sqlite-utils/issues/600 | 1793268126 | IC_kwDOCGYnMM5q4xme | 9599 | 2023-11-04T00:31:34Z | 2023-11-04T00:31:34Z | OWNER | Testing this manually on macOS using Docker Desktop like this: ```bash docker run -it --rm arm64v8/ubuntu /bin/bash ``` Then inside the container: ```bash uname -m ``` Outputs: `aarch64` Then: ```bash apt install spatialite-bin libsqlite3-mod-spatialite git python3 python3-venv -y cd /tmp git clone https://github.com/simonw/sqlite-utils cd sqlite-utils python3 -m venv venv source venv/bin/activate pip install -e '.[test]' sqlite-utils memory "select spatialite_version()" --load-extension=spatialite ``` Which output: ``` Traceback (most recent call last): File "/tmp/sqlite-utils/venv/bin/sqlite-utils", line 33, in <module> sys.exit(load_entry_point('sqlite-utils', 'console_scripts', 'sqlite-utils')()) File "/tmp/sqlite-utils/venv/lib/python3.10/site-packages/click/core.py", line 1157, in __call__ return self.main(*args, **kwargs) File "/tmp/sqlite-utils/venv/lib/python3.10/site-packages/click/core.py", line 1078, in main rv = self.invoke(ctx) File "/tmp/sqlite-utils/venv/lib/python3.10/site-packages/click/core.py", line 1688, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "/tmp/sqlite-utils/venv/lib/python3.10/site-packages/click/core.py", line 1434, in invoke return ctx.invoke(self.callback, **ctx.params) File "/tmp/sqlite-utils/venv/lib/python3.10/site-packages/click/core.py", line 783, in invoke return __callback(*args, **kwargs) File "/tmp/sqlite-utils/sqlite_utils/cli.py", line 1959, in memory _load_extensions(db, load_extension) File "/tmp/sqlite-utils/sqlite_utils/cli.py", line 3232, in _load_extensions if ":" in ext: TypeError: argument of type 'NoneType' is not iterable ``` Then I ran this: ```bash git checkout -b MikeCoats-spatialite-paths-linux-arm main git pull 
https://github.com/MikeCoats/sqlite-utils.git spatialite-paths-linux-arm ``` And now: ```bash sqlite-utils memory "select spatialite_version()" --load-extension=spatialite ``` Outputs: ```json [{"spatialite_version()": "… | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1977004379 | |

| https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793264654 | https://api.github.com/repos/simonw/sqlite-utils/issues/600 | 1793264654 | IC_kwDOCGYnMM5q4wwO | 22429695 | 2023-11-04T00:22:07Z | 2023-11-04T00:27:29Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/sqlite-utils/pull/600?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`622c3a5`)](https://app.codecov.io/gh/simonw/sqlite-utils/commit/622c3a5a7dd53a09c029e2af40c2643fe7579340?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.77% compared to head [(`b1a6076`)](https://app.codecov.io/gh/simonw/sqlite-utils/pull/600?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 95.77%. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #600 +/- ## ======================================= Coverage 95.77% 95.77% ======================================= Files 8 8 Lines 2840 2840 ======================================= Hits 2720 2720 Misses 120 120 ``` | [Files](https://app.codecov.io/gh/simonw/sqlite-utils/pull/600?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) | Coverage Δ | | |---|---|---| | [sqlite\_utils/db.py](https://app.codecov.io/gh/simonw/sqlite-utils/pull/600?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison#diff-c3FsaXRlX3V0aWxzL2RiLnB5) | `97.22% <ø> (ø)` | | | 
[sqlite\_utils/utils.py](https://app.codecov.io/gh/simonw/sqlite-utils/pull/600?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison#diff-c3FsaXRlX3V0aWxzL3V0aWxzLnB5) | `94.56% <ø> (ø)` | | </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/… | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1977004379 | {

"id": 254,

"slug": "codecov",

"node_id": "MDM6QXBwMjU0",

"owner": {

"login": "codecov",

"id": 8226205,

"node_id": "MDEyOk9yZ2FuaXphdGlvbjgyMjYyMDU=",

"avatar_url": "https://avatars.githubusercontent.com/u/8226205?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/codecov",

"html_url": "https://github.com/codecov",

"followers_url": "https://api.github.com/users/codecov/followers",

"following_url": "https://api.github.com/users/codecov/following{/other_user}",

"gists_url": "https://api.github.com/users/codecov/gists{/gist_id}",

"starred_url": "https://api.github.com/users/codecov/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/codecov/subscriptions",

"organizations_url": "https://api.github.com/users/codecov/orgs",

"repos_url": "https://api.github.com/users/codecov/repos",

"events_url": "https://api.github.com/users/codecov/events{/privacy}",

"received_events_url": "https://api.github.com/users/codecov/received_events",

"type": "Organization",

"site_admin": false

},

"name": "Codecov",

"description": "Codecov provides highly integrated tools to group, merge, archive and compare coverage reports. Whether your team is comparing changes in a pull request or reviewing a single commit, Codecov will improve the code review workflow and quality.\r\n\r\n## Code coverage done right.\u00ae\r\n\r\n1. Upload coverage reports from your CI builds.\r\n2. Codecov merges all builds and languages into one beautiful coherent report.\r\n3. Get commit statuses, pull request comments and coverage overlay via our browser extension.\r\n\r\nWhen Codecov merges your uploads it keeps track of the CI provider (inc. build details) and user specified context, e.g. `#unittest` ~ `#smoketest` or `#oldcode` ~ `#newcode`. You can track the `#unittest` coverage independently of other groups. [Learn more here](\r\nhttp://docs.codecov.io/docs/flags)\r\n\r\nThrough **Codecov's Browser Extension** reports overlay directly in GitHub UI to assist in code review in [Chrome](https://chrome.google.com/webstore/detail/codecov/gedikamndpbemklijjkncpnolildpbgo) or Firefox (https://addons.mozilla.org/en-US/firefox/addon/codecov/)\r\n\r\n*Highly detailed* **pull request comments** and *customizable* **commit statuses** will improve your team's workflow and code coverage incrementally.\r\n\r\n**File backed configuration** all through the `codecov.yml`. \r\n\r\n## FAQ\r\n- Do you **merge multiple uploads** to the same commit? **Yes**\r\n- Do you **support multiple languages** in the same project? **Yes**\r\n- Can you **group coverage reports** by project and/or test type? **Yes**\r\n- How does **pricing** work? Only paid users can view reports and post statuses/comments. ",

"external_url": "https://codecov.io",

"html_url": "https://github.com/apps/codecov",

"created_at": "2016-09-25T14:18:27Z",

"updated_at": "2023-09-08T15:29:16Z",

"permissions": {

"administration": "read",

"checks": "write",

"contents": "read",

"emails": "read",

"issues": "read",

"members": "read",

"metadata": "read",

"pull_requests": "write",

"statuses": "write"

},

"events": [

"check_run",

"check_suite",

"create",

"delete",

"fork",

"member",

"membership",

"organization",

"public",

"pull_request",

"push",

"release",

"repository",

"status",

"team_add"

]

} |

| https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793265952 | https://api.github.com/repos/simonw/sqlite-utils/issues/600 | 1793265952 | IC_kwDOCGYnMM5q4xEg | 9599 | 2023-11-04T00:25:34Z | 2023-11-04T00:25:34Z | OWNER | The tests failed because they found a spelling mistake in a completely unrelated area of the code - not sure why that had not been caught before. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1977004379 | |

| https://github.com/simonw/sqlite-utils/pull/600#issuecomment-1793263638 | https://api.github.com/repos/simonw/sqlite-utils/issues/600 | 1793263638 | IC_kwDOCGYnMM5q4wgW | 9599 | 2023-11-04T00:19:58Z | 2023-11-04T00:19:58Z | OWNER | Thanks for this! | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1977004379 | |

| https://github.com/simonw/datasette/issues/949#issuecomment-1791911093 | https://api.github.com/repos/simonw/datasette/issues/949 | 1791911093 | IC_kwDOBm6k_c5qzmS1 | 9599 | 2023-11-03T05:28:09Z | 2023-11-03T05:28:58Z | OWNER | Datasette is using that now, see: - #1893 | {

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

684961449 | |

| https://github.com/simonw/datasette/issues/949#issuecomment-1791571572 | https://api.github.com/repos/simonw/datasette/issues/949 | 1791571572 | IC_kwDOBm6k_c5qyTZ0 | 498744 | 2023-11-02T21:36:24Z | 2023-11-02T21:36:24Z | NONE | FWIW, CodeMirror 6 now has this as standard, although if you want table-specific suggestions, you'd have to parse out which table the user is querying yourself. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

684961449 | |

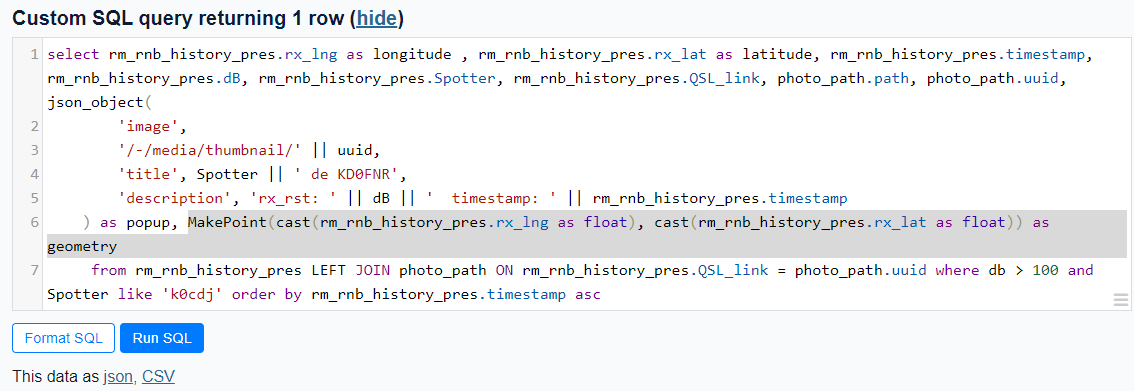

| https://github.com/simonw/datasette/issues/411#issuecomment-1779267468 | https://api.github.com/repos/simonw/datasette/issues/411 | 1779267468 | IC_kwDOBm6k_c5qDXeM | 363004 | 2023-10-25T13:23:04Z | 2023-10-25T13:23:04Z | NONE | Using the [Counties example](https://us-counties.datasette.io/counties/county_for_latitude_longitude?longitude=-122&latitude=37), I was able to pull out the MakePoint method as MakePoint(cast(rm_rnb_history_pres.rx_lng as float), cast(rm_rnb_history_pres.rx_lat as float)) as geometry which worked, giving me a geometry column.  gave  I believe it's the cast to float that does the trick. Prior to using the cast, I also received a 'wrong number of arguments' error. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

410384988 | |

| https://github.com/simonw/datasette/pull/2202#issuecomment-1777247375 | https://api.github.com/repos/simonw/datasette/issues/2202 | 1777247375 | IC_kwDOBm6k_c5p7qSP | 22429695 | 2023-10-24T13:49:27Z | 2023-10-24T13:49:27Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/datasette/pull/2202?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified and coverable lines are covered by tests :white_check_mark: > Comparison is base [(`452a587`)](https://app.codecov.io/gh/simonw/datasette/commit/452a587e236ef642cbc6ae345b58767ea8420cb5?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69% compared to head [(`be4d0f0`)](https://app.codecov.io/gh/simonw/datasette/pull/2202?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69%. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #2202 +/- ## ======================================= Coverage 92.69% 92.69% ======================================= Files 40 40 Lines 6047 6047 ======================================= Hits 5605 5605 Misses 442 442 ``` </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/datasette/pull/2202?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? [Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1959278971 | |

| https://github.com/simonw/datasette/pull/2200#issuecomment-1777228352 | https://api.github.com/repos/simonw/datasette/issues/2200 | 1777228352 | IC_kwDOBm6k_c5p7lpA | 49699333 | 2023-10-24T13:40:25Z | 2023-10-24T13:40:25Z | CONTRIBUTOR | Superseded by #2202. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1949756141 | |

| https://github.com/simonw/datasette/issues/1655#issuecomment-1767248394 | https://api.github.com/repos/simonw/datasette/issues/1655 | 1767248394 | IC_kwDOBm6k_c5pVhIK | 6262071 | 2023-10-17T21:53:17Z | 2023-10-17T21:53:17Z | NONE | @fgregg, I am happy to do that and just could not find a way to create issues at your fork repo. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1163369515 | |

| https://github.com/simonw/datasette/issues/1655#issuecomment-1767219901 | https://api.github.com/repos/simonw/datasette/issues/1655 | 1767219901 | IC_kwDOBm6k_c5pVaK9 | 536941 | 2023-10-17T21:29:03Z | 2023-10-17T21:29:03Z | CONTRIBUTOR | @yejiyang why don’t you move this discussion to my fork to spare simon’s notifications | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1163369515 | |

| https://github.com/simonw/datasette/issues/1655#issuecomment-1767133832 | https://api.github.com/repos/simonw/datasette/issues/1655 | 1767133832 | IC_kwDOBm6k_c5pVFKI | 6262071 | 2023-10-17T20:37:18Z | 2023-10-17T21:12:48Z | NONE | @fgregg Thanks for your reply. I tried to use your fork branch `datasette = {url = "https://github.com/fgregg/datasette/archive/refs/heads/no_limit_csv_publish.zip"}` and got error - TypeError: 'str' object is not callable. I used the same templates as in your branch [here ](https://github.com/labordata/warehouse/tree/main/templates). ``` INFO: 127.0.0.1:47232 - "GET /-/static/sql-formatter-2.3.3.min.js HTTP/1.1" 200 OK Traceback (most recent call last): File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/app.py", line 1632, in route_path response = await view(request, send) File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/app.py", line 1814, in async_view_fn response = await async_call_with_supported_arguments( File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/utils/__init__.py", line 1016, in async_call_with_supported_arguments return await fn(*call_with) File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/views/table.py", line 673, in table_view response = await table_view_traced(datasette, request) File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/views/table.py", line 822, in table_view_traced await datasette.render_template( File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/datasette/app.py", line 1307, in render_template return await template.render_async(template_context) File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/jinja2/environment.py", line 1324, in 
render_async return self.environment.handle_exception() File "/home/jiyang/github/global-chemical-inventory-database/.venv/lib/python3.10/site-packages/jinja2/environment.py", line 936, in handle_exception raise rewri… | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1163369515 | |

| https://github.com/simonw/datasette/issues/1655#issuecomment-1766994810 | https://api.github.com/repos/simonw/datasette/issues/1655 | 1766994810 | IC_kwDOBm6k_c5pUjN6 | 536941 | 2023-10-17T19:01:59Z | 2023-10-17T19:01:59Z | CONTRIBUTOR | hi @yejiyang, have you tried using my fork of datasette: https://github.com/fgregg/datasette/tree/no_limit_csv_publish | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1163369515 | |

| https://github.com/simonw/datasette/issues/1655#issuecomment-1761630595 | https://api.github.com/repos/simonw/datasette/issues/1655 | 1761630595 | IC_kwDOBm6k_c5pAFmD | 6262071 | 2023-10-13T14:37:48Z | 2023-10-13T14:37:48Z | NONE | Hi @fgregg, I came across this issue and found that your setup at labordata.bunkum.us could help me with a research project at https://database.zeropm.eu/. I really like the approach [here](https://labordata.bunkum.us/f7-06c761c?sql=select+*+from+f7) for a custom SQL query returning more than 1000 rows: 1) In the table on the HTML page, only the first 1000 rows are displayed; 2) When you click the "Download this data as a CSV Spreadsheet(All Rows)" button, a CSV with ALL ROWS (could be > 100 MB) gets downloaded. I am trying to reproduce the setup but have not been successful so far. What I tried: 1) copied the query.html & table.html templates from this [github repo](https://github.com/labordata/warehouse/tree/main/templates) and used them in my project; 2) used the same datasette version, 1.0a2. Do you know what else I should try to set it up correctly? I appreciate your help. @simonw I would like to use this opportunity to thank you for developing & maintaining such an amazing project. I have introduced Datasette to several projects in my institute. I am also interested in your cloud version. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1163369515 | |

| https://github.com/simonw/datasette/issues/2196#issuecomment-1760560526 | https://api.github.com/repos/simonw/datasette/issues/2196 | 1760560526 | IC_kwDOBm6k_c5o8AWO | 1892194 | 2023-10-13T00:07:07Z | 2023-10-13T00:07:07Z | NONE | That worked! | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1910269679 | |

| https://github.com/simonw/datasette/pull/2052#issuecomment-1760552652 | https://api.github.com/repos/simonw/datasette/issues/2052 | 1760552652 | IC_kwDOBm6k_c5o7-bM | 9599 | 2023-10-12T23:59:21Z | 2023-10-12T23:59:21Z | OWNER | I'm landing this despite the cog failures. I'll fix them on main if I have to. | {

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} |

1651082214 | |

| https://github.com/simonw/datasette/pull/2052#issuecomment-1632867333 | https://api.github.com/repos/simonw/datasette/issues/2052 | 1632867333 | IC_kwDOBm6k_c5hU5QF | 22429695 | 2023-07-12T16:38:27Z | 2023-10-12T23:52:24Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/datasette/pull/2052?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified lines are covered by tests :white_check_mark: > Comparison is base [(`3feed1f`)](https://app.codecov.io/gh/simonw/datasette/commit/3feed1f66e2b746f349ee56970a62246a18bb164?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.46% compared to head [(`8ae479c`)](https://app.codecov.io/gh/simonw/datasette/pull/2052?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69%. > Report is 112 commits behind head on main. <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #2052 +/- ## ========================================== + Coverage 92.46% 92.69% +0.22% ========================================== Files 38 40 +2 Lines 5750 6047 +297 ========================================== + Hits 5317 5605 +288 - Misses 433 442 +9 ``` [see 19 files with indirect coverage changes](https://app.codecov.io/gh/simonw/datasette/pull/2052/indirect-changes?src=pr&el=tree-more&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) </details> [:umbrella: View full report in Codecov by Sentry](https://app.codecov.io/gh/simonw/datasette/pull/2052?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). :loudspeaker: Have feedback on the report? 
[Share it here](https://about.codecov.io/codecov-pr-comment-feedback/?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison). | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1651082214 | |

| https://github.com/simonw/datasette/pull/2052#issuecomment-1760545012 | https://api.github.com/repos/simonw/datasette/issues/2052 | 1760545012 | IC_kwDOBm6k_c5o78j0 | 9599 | 2023-10-12T23:48:16Z | 2023-10-12T23:48:16Z | OWNER | Oh! I think I broke Cog on `main` and these tests are running against this branch rebased against main. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1651082214 | |

| https://github.com/simonw/datasette/pull/2052#issuecomment-1760542865 | https://api.github.com/repos/simonw/datasette/issues/2052 | 1760542865 | IC_kwDOBm6k_c5o78CR | 9599 | 2023-10-12T23:44:53Z | 2023-10-12T23:45:15Z | OWNER | Weird, the `cog` check is failing in CI. ``` Run cog --check docs/*.rst cog --check docs/*.rst shell: /usr/bin/bash -e {0} env: pythonLocation: /opt/hostedtoolcache/Python/3.9.18/x64 PKG_CONFIG_PATH: /opt/hostedtoolcache/Python/3.9.18/x64/lib/pkgconfig Python_ROOT_DIR: /opt/hostedtoolcache/Python/3.9.18/x64 Python2_ROOT_DIR: /opt/hostedtoolcache/Python/3.9.18/x64 Python3_ROOT_DIR: /opt/hostedtoolcache/Python/3.9.18/x64 LD_LIBRARY_PATH: /opt/hostedtoolcache/Python/3.9.18/x64/lib Check failed Checking docs/authentication.rst Checking docs/binary_data.rst Checking docs/changelog.rst Checking docs/cli-reference.rst Checking docs/configuration.rst (changed) ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1651082214 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760441535 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760441535 | IC_kwDOBm6k_c5o7jS_ | 9599 | 2023-10-12T22:08:42Z | 2023-10-12T22:08:42Z | OWNER | Pushed that incomplete code here: https://github.com/datasette/datasette-upgrade | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2196#issuecomment-1760417555 | https://api.github.com/repos/simonw/datasette/issues/2196 | 1760417555 | IC_kwDOBm6k_c5o7dcT | 9599 | 2023-10-12T21:54:52Z | 2023-10-12T21:54:52Z | OWNER | I can't replicate this bug. Closing, but please re-open if it's still happening. As an aside, the link I promote is https://datasette.io/discord which redirects: ``` curl -i 'https://datasette.io/discord' HTTP/2 301 location: https://discord.gg/ktd74dm5mw content-type: text/plain x-cloud-trace-context: 8dcfd08d3d1fa44f7ee78568e0f5305e;o=1 date: Thu, 12 Oct 2023 21:54:17 GMT server: Google Frontend content-length: 0 ``` ``` curl -i 'https://discord.gg/ktd74dm5mw' HTTP/2 301 date: Thu, 12 Oct 2023 21:54:28 GMT content-type: text/plain;charset=UTF-8 content-length: 0 location: https://discord.com/invite/ktd74dm5mw strict-transport-security: max-age=31536000; includeSubDomains; preload permissions-policy: interest-cohort=() x-content-type-options: nosniff x-frame-options: DENY x-robots-tag: noindex, nofollow, noarchive, nocache, noimageindex, noodp x-xss-protection: 1; mode=block report-to: {"endpoints":[{"url":"https:\/\/a.nel.cloudflare.com\/report\/v3?s=Dzzrf%2FgGkfFxtzSAQ46slMVDLcFjsH9fsvVkzHtgUUiZ891rXAa6LvTRpHK%2BdSMSQ54F57hS9z1mZXXklIbONZW1bfBuFjSK9J4XmjjLjsFUulMXvpjfCLkB6PI%3D"}],"group":"cf-nel","max_age":604800} nel: {"success_fraction":0,"report_to":"cf-nel","max_age":604800} server: cloudflare cf-ray: 815294ddff282511-SJC ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1910269679 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760413191 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760413191 | IC_kwDOBm6k_c5o7cYH | 9599 | 2023-10-12T21:52:25Z | 2023-10-12T21:52:25Z | OWNER | Demo of that logic: ``` $ datasette upgrade metadata-to-config ../datasette/metadata.json Upgrading ../datasette/metadata.json to new metadata.yaml format New metadata.yaml file will be written to metadata-new-1.yaml New datasette.yaml file will be written to datasette.yaml $ touch metadata-new-1.yaml $ datasette upgrade metadata-to-config ../datasette/metadata.json Upgrading ../datasette/metadata.json to new metadata.yaml format New metadata.yaml file will be written to metadata-new-2.yaml New datasette.yaml file will be written to datasette.yaml $ touch datasette.yaml $ datasette upgrade metadata-to-config ../datasette/metadata.json Upgrading ../datasette/metadata.json to new metadata.yaml format New metadata.yaml file will be written to metadata-new-2.yaml New datasette.yaml file will be written to datasette-new.yaml ``` | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760412424 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760412424 | IC_kwDOBm6k_c5o7cMI | 9599 | 2023-10-12T21:51:44Z | 2023-10-12T21:51:44Z | OWNER | Started playing with this plugin idea, now tearing myself away to work on something more important: ```python from datasette import hookimpl import click import pathlib @hookimpl def register_commands(cli): @cli.group() def upgrade(): """ Apply configuration upgrades to an existing Datasette instance """ pass @upgrade.command() @click.argument( "metadata", type=click.Path(exists=True) ) @click.option( "new_metadata", "-m", "--new-metadata", help="Path to new metadata.yaml file", type=click.Path(exists=False) ) @click.option( "new_datasette", "-c", "--new-datasette", help="Path to new datasette.yaml file", type=click.Path(exists=False) ) @click.option( "output_dir", "-e", "--output-dir", help="Directory to write new files to", type=click.Path(), default="." ) def metadata_to_config(metadata, new_metadata, new_datasette, output_dir): """ Upgrade an existing metadata.json/yaml file to the new metadata.yaml and datasette.yaml split introduced prior to Datasette 1.0. """ print("Upgrading {} to new metadata.yaml format".format(metadata)) output_dir = pathlib.Path(output_dir) if not new_metadata: # Pick a filename for the new metadata.yaml file that does not yet exist new_metadata = pick_filename("metadata", output_dir) if not new_datasette: new_datasette = pick_filename("datasette", output_dir) print("New metadata.yaml file will be written to {}".format(new_metadata)) print("New datasette.yaml file will be written to {}".format(new_datasette)) def pick_filename(base, output_dir): options = ["{}.yaml".format(base), "{}-new.yaml".format(base)] i = 0 while True: option = options.pop(0) option_path = output_dir / option if not option_path.exists(): return option_path # If we ran out … | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760411937 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760411937 | IC_kwDOBm6k_c5o7cEh | 9599 | 2023-10-12T21:51:16Z | 2023-10-12T21:51:16Z | OWNER | I think I'm OK with not preserving comments, just because it adds a level of complexity to the tool which I don't think is worth the value it provides. If people want to keep their comments I'm happy to leave them to copy those over by hand. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760401731 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760401731 | IC_kwDOBm6k_c5o7ZlD | 15178711 | 2023-10-12T21:41:42Z | 2023-10-12T21:41:42Z | CONTRIBUTOR | I dig it - I was thinking an Observable notebook where you paste your `metadata.json`/`metadata.yaml` and it would generate the new metadata + datasette.yaml files, but an extensible `datasette upgrade` plugin would be nice for future plugins. One thing to think about: If someone has comments in their original `metadata.yaml`, could we preserve them in the new files? tbh maybe not too important bc if people cared that much they could just copy + paste, and it might be too distracting | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1760396195 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1760396195 | IC_kwDOBm6k_c5o7YOj | 9599 | 2023-10-12T21:36:25Z | 2023-10-12T21:36:25Z | OWNER | Related idea: how about a `datasette-upgrade` plugin which adds a `datasette upgrade` command that can be used to automate this process? Maybe something like this: ```bash datasette install datasette-upgrade datasette upgrade metadata-to-config metadata.json ``` This would output two new files: `metadata.yaml` and `datasette.yaml`. If files with those names existed already in the current directory they would be called `metadata-new.yaml` and `datasette-new.yaml`. The command would tell you what it did: ``` Your metadata.json file has been rewritten as two files: metadata-new.yaml datasette.yaml Start Datasette like this to try them out: datasette -m metadata-new.yaml -c datasette.yaml ``` The command is `datasette upgrade metadata-to-config` because `metadata-to-config` is the name of the upgrade recipe. The first version of the plugin would only have that single recipe, but we could add more recipes in the future for other upgrades. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/issues/2199#issuecomment-1759952247 | https://api.github.com/repos/simonw/datasette/issues/2199 | 1759952247 | IC_kwDOBm6k_c5o5r13 | 9599 | 2023-10-12T16:23:10Z | 2023-10-12T16:23:10Z | OWNER | Some options for where this could go: - Directly in the release notes? I'm not sure about that, those are getting pretty long already. I think the release notes should link to relevant upgrade guides. - On a new page? We could have an "upgrade instructions" page in the documentation. - At the bottom of the new https://docs.datasette.io/en/latest/configuration.html page I'm leaning towards the third option at the moment. But... we may also need to provide upgrade instructions for plugin authors. Those could live in a separate area of the documentation though, since issues affecting end-users who configure Datasette and issues affecting plugin authors are unlikely to overlap much. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1940346034 | |

| https://github.com/simonw/datasette/pull/2190#issuecomment-1759948683 | https://api.github.com/repos/simonw/datasette/issues/2190 | 1759948683 | IC_kwDOBm6k_c5o5q-L | 9599 | 2023-10-12T16:20:41Z | 2023-10-12T16:20:41Z | OWNER | I'm going to land this and open a new issue for the upgrade instructions. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1901483874 | |

| https://github.com/simonw/datasette/pull/2190#issuecomment-1759947534 | https://api.github.com/repos/simonw/datasette/issues/2190 | 1759947534 | IC_kwDOBm6k_c5o5qsO | 9599 | 2023-10-12T16:19:59Z | 2023-10-12T16:19:59Z | OWNER | It would be nice if we could catch that and turn that into a less intimidating Click exception too. | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1901483874 | |

| https://github.com/simonw/datasette/pull/2190#issuecomment-1759947021 | https://api.github.com/repos/simonw/datasette/issues/2190 | 1759947021 | IC_kwDOBm6k_c5o5qkN | 9599 | 2023-10-12T16:19:38Z | 2023-10-12T16:19:38Z | OWNER | This looks good and works well. The error from this currently looks like: ``` datasette -m metadata.json -p 8844 Traceback (most recent call last): File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/bin/datasette", line 33, in <module> sys.exit(load_entry_point('datasette', 'console_scripts', 'datasette')()) File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/lib/python3.10/site-packages/click/core.py", line 1130, in __call__ return self.main(*args, **kwargs) File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/lib/python3.10/site-packages/click/core.py", line 1055, in main rv = self.invoke(ctx) File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/lib/python3.10/site-packages/click/core.py", line 1657, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/lib/python3.10/site-packages/click/core.py", line 1404, in invoke return ctx.invoke(self.callback, **ctx.params) File "/Users/simon/.local/share/virtualenvs/datasette-AWNrQs95/lib/python3.10/site-packages/click/core.py", line 760, in invoke return __callback(*args, **kwargs) File "/Users/simon/Dropbox/Development/datasette/datasette/cli.py", line 98, in wrapped return fn(*args, **kwargs) File "/Users/simon/Dropbox/Development/datasette/datasette/cli.py", line 546, in serve metadata_data = fail_if_plugins_in_metadata(parse_metadata(metadata.read())) File "/Users/simon/Dropbox/Development/datasette/datasette/utils/__init__.py", line 1282, in fail_if_plugins_in_metadata raise Exception( Exception: Datasette no longer accepts plugin configuration in --metadata. 
Move your "plugins" configuration blocks to a separate file - we suggest calling that datasette..json - and start Datasette with datasette -c datasette..json. See https://docs.datasette.io/en/latest/configuration.html for more details. ``` With wrapping: `Exception: Datasette no longer accepts plugin configu… | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1901483874 | |

| https://github.com/simonw/datasette/pull/2191#issuecomment-1724480716 | https://api.github.com/repos/simonw/datasette/issues/2191 | 1724480716 | IC_kwDOBm6k_c5myXzM | 22429695 | 2023-09-18T21:28:36Z | 2023-10-12T16:15:40Z | NONE | ## [Codecov](https://app.codecov.io/gh/simonw/datasette/pull/2191?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) Report All modified lines are covered by tests :white_check_mark: > Comparison is base [(`6ed7908`)](https://app.codecov.io/gh/simonw/datasette/commit/6ed7908580fa2ba9297c3225d85c56f8b08b9937?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.69% compared to head [(`0135e7c`)](https://app.codecov.io/gh/simonw/datasette/pull/2191?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) 92.68%. > Report is 14 commits behind head on main. > :exclamation: Current head 0135e7c differs from pull request most recent head 18b48f8. 
Consider uploading reports for the commit 18b48f8 to get more accurate results <details><summary>Additional details and impacted files</summary> ```diff @@ Coverage Diff @@ ## main #2191 +/- ## ========================================== - Coverage 92.69% 92.68% -0.02% ========================================== Files 40 40 Lines 6039 6042 +3 ========================================== + Hits 5598 5600 +2 - Misses 441 442 +1 ``` | [Files](https://app.codecov.io/gh/simonw/datasette/pull/2191?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison) | Coverage Δ | | |---|---|---| | [datasette/app.py](https://app.codecov.io/gh/simonw/datasette/pull/2191?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=Simon+Willison#diff-ZGF0YXNldHRlL2FwcC5weQ==) | `94.09% <100.00%> (-0.11%)` | :arrow_down: | | [datasette/default\_permissions.py](https://app.codecov.io/gh/simonw/datasette/pull/2191?src=pr&el=tree&utm_medi… | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1901768721 | |