issue_comments

10,495 rows sorted by updated_at descending

issue >30

- Port Datasette to ASGI 42

- Authentication (and permissions) as a core concept 40

- Ability to sort (and paginate) by column 31

- link_or_copy_directory() error - Invalid cross-device link 28

- Export to CSV 27

- base_url configuration setting 27

- Documentation with recommendations on running Datasette in production without using Docker 26

- Ability for a canned query to write to the database 26

- Proof of concept for Datasette on AWS Lambda with EFS 25

- Redesign register_output_renderer callback 24

- Datasette Plugins 22

- "flash messages" mechanism 20

- Handle spatialite geometry columns better 19

- Ability to ship alpha and beta releases 18

- Facets 16

- Support "allow" block on root, databases and tables, not just queries 16

- Bug: Sort by column with NULL in next_page URL 15

- Support cross-database joins 15

- The ".upsert()" method is misnamed 15

- --dirs option for scanning directories for SQLite databases 15

- Document (and reconsider design of) Database.execute() and Database.execute_against_connection_in_thread() 15

- latest.datasette.io is no longer updating 15

- Ability to customize presentation of specific columns in HTML view 14

- Allow plugins to define additional URL routes and views 14

- Mechanism for customizing the SQL used to select specific columns in the table view 14

- .execute_write() and .execute_write_fn() methods on Database 14

- Upload all my photos to a secure S3 bucket 14

- Canned query permissions mechanism 14

- Dockerfile should build more recent SQLite with FTS5 and spatialite support 13

- Fix all the places that currently use .inspect() data 13

- …

| id | html_url | issue_url | node_id | user | created_at | updated_at ▲ | author_association | body | reactions | issue | performed_via_github_app |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 646938984 | https://github.com/simonw/datasette/issues/687#issuecomment-646938984 | https://api.github.com/repos/simonw/datasette/issues/687 | MDEyOklzc3VlQ29tbWVudDY0NjkzODk4NA== | simonw 9599 | 2020-06-20T04:22:25Z | 2020-06-20T04:23:02Z | OWNER | I think I want the "Plugin hooks" page to be top-level, parallel to "Plugins" and "Internals for Plugins". It's the page of documentation I refer to most often so I don't want to have to click down a hierarchy from the side navigation to find it. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Expand plugins documentation to multiple pages 572896293 | |

| 646930455 | https://github.com/simonw/datasette/issues/687#issuecomment-646930455 | https://api.github.com/repos/simonw/datasette/issues/687 | MDEyOklzc3VlQ29tbWVudDY0NjkzMDQ1NQ== | simonw 9599 | 2020-06-20T03:22:21Z | 2020-06-20T03:22:21Z | OWNER | The tutorial can start by showing how to use the new cookiecutter template from #642. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Expand plugins documentation to multiple pages 572896293 | |

| 646930365 | https://github.com/simonw/datasette/issues/855#issuecomment-646930365 | https://api.github.com/repos/simonw/datasette/issues/855 | MDEyOklzc3VlQ29tbWVudDY0NjkzMDM2NQ== | simonw 9599 | 2020-06-20T03:21:48Z | 2020-06-20T03:21:48Z | OWNER | Maybe I should also refactor the plugin documentation, as contemplated in #687. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add instructions for using cookiecutter plugin template to plugin docs 642127307 | |

| 646930160 | https://github.com/simonw/datasette/issues/642#issuecomment-646930160 | https://api.github.com/repos/simonw/datasette/issues/642 | MDEyOklzc3VlQ29tbWVudDY0NjkzMDE2MA== | simonw 9599 | 2020-06-20T03:20:25Z | 2020-06-20T03:20:25Z | OWNER | Shipped this today! https://github.com/simonw/datasette-plugin is a cookiecutter template for creating new plugins. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Provide a cookiecutter template for creating new plugins 529429214 | |

| 646930059 | https://github.com/simonw/datasette/issues/642#issuecomment-646930059 | https://api.github.com/repos/simonw/datasette/issues/642 | MDEyOklzc3VlQ29tbWVudDY0NjkzMDA1OQ== | simonw 9599 | 2020-06-20T03:19:57Z | 2020-06-20T03:19:57Z | OWNER | @psychemedia sorry I missed your comment before. Niche Museums is definitely the best example of custom templates at the moment: https://github.com/simonw/museums/tree/master/templates I want to comprehensively document the variables made available to custom templates before shipping Datasette 1.0 - just filed that as #857. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Provide a cookiecutter template for creating new plugins 529429214 | |

| 646928638 | https://github.com/simonw/datasette/issues/855#issuecomment-646928638 | https://api.github.com/repos/simonw/datasette/issues/855 | MDEyOklzc3VlQ29tbWVudDY0NjkyODYzOA== | simonw 9599 | 2020-06-20T03:09:41Z | 2020-06-20T03:09:41Z | OWNER | I've shipped the cookiecutter template and used it to build https://github.com/simonw/datasette-saved-queries - it's ready to add to the official documentation. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add instructions for using cookiecutter plugin template to plugin docs 642127307 | |

| 646905073 | https://github.com/simonw/datasette/issues/852#issuecomment-646905073 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjkwNTA3Mw== | simonw 9599 | 2020-06-20T00:21:34Z | 2020-06-20T00:22:28Z | OWNER | New repo: https://github.com/simonw/datasette-saved-queries - which I created using the new cookiecutter template at https://github.com/simonw/datasette-plugin |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646760805 | https://github.com/simonw/datasette/issues/852#issuecomment-646760805 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0Njc2MDgwNQ== | simonw 9599 | 2020-06-19T17:07:45Z | 2020-06-19T17:07:45Z | OWNER | Plugin idea: Then it returns any queries from that table as additional canned queries. Bonus idea: it could write the user's actor_id to a column if they are signed in, and provide a link to see "just my saved queries" in that case. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646686493 | https://github.com/simonw/datasette/issues/849#issuecomment-646686493 | https://api.github.com/repos/simonw/datasette/issues/849 | MDEyOklzc3VlQ29tbWVudDY0NjY4NjQ5Mw== | simonw 9599 | 2020-06-19T15:04:51Z | 2020-06-19T15:04:51Z | OWNER | https://twitter.com/jaffathecake/status/1273983493006077952 concerns what happens to open pull requests - they will automatically close when you remove |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rename master branch to main 639072811 | |

| 646396772 | https://github.com/simonw/datasette/issues/852#issuecomment-646396772 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjM5Njc3Mg== | simonw 9599 | 2020-06-19T02:16:47Z | 2020-06-19T02:16:47Z | OWNER | I'll close this once I've built a plugin against it. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646396690 | https://github.com/simonw/datasette/issues/852#issuecomment-646396690 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjM5NjY5MA== | simonw 9599 | 2020-06-19T02:16:24Z | 2020-06-19T02:16:24Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | ||

| 646396499 | https://github.com/simonw/datasette/issues/852#issuecomment-646396499 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjM5NjQ5OQ== | simonw 9599 | 2020-06-19T02:15:49Z | 2020-06-19T02:15:58Z | OWNER | Released an alpha preview in https://github.com/simonw/datasette/releases/tag/0.45a1 Wrote about this here: https://simonwillison.net/2020/Jun/19/datasette-alphas/ |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646350530 | https://github.com/simonw/datasette/issues/852#issuecomment-646350530 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjM1MDUzMA== | simonw 9599 | 2020-06-18T23:13:57Z | 2020-06-18T23:14:11Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646329456 | https://github.com/simonw/datasette/issues/852#issuecomment-646329456 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NjMyOTQ1Ng== | simonw 9599 | 2020-06-18T22:07:09Z | 2020-06-18T22:07:37Z | OWNER | It would be neat if the queries returned by this hook could be restricted to specific users. I think I can do that by returning an "allow" block as part of the query. But... what if we allow users to save private queries and we might have thousands of users each with hundreds of saved queries? For that case it would be good if the plugin hook could take an optional This would also allow us to dynamically generate a canned query for "return the bookmarks belonging to this actor" or similar! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 646320237 | https://github.com/simonw/datasette/issues/807#issuecomment-646320237 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjMyMDIzNw== | simonw 9599 | 2020-06-18T21:41:16Z | 2020-06-18T21:41:16Z | OWNER | https://pypi.org/project/datasette/0.45a0/ is the release on PyPI. And in a fresh virtual environment:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646319315 | https://github.com/simonw/datasette/issues/807#issuecomment-646319315 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjMxOTMxNQ== | simonw 9599 | 2020-06-18T21:38:56Z | 2020-06-18T21:38:56Z | OWNER | This worked! https://pypi.org/project/datasette/#history

https://github.com/simonw/datasette/releases/tag/0.45a0 is my manually created GitHub prerelease. https://datasette.readthedocs.io/en/latest/changelog.html#a0-2020-06-18 has the release notes. A shame Read The Docs doesn't seem to build the docs for these releases - it's not showing the tag in the releases pane here:

Also the new tag isn't an option in the Build menu on https://readthedocs.org/projects/datasette/builds/ Not a big problem though since the "latest" tag on Read The Docs will still carry the in-development documentation. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646308467 | https://github.com/simonw/datasette/issues/835#issuecomment-646308467 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjMwODQ2Nw== | simonw 9599 | 2020-06-18T21:12:50Z | 2020-06-18T21:12:50Z | OWNER | Problem there is Login CSRF attacks: https://cheatsheetseries.owasp.org/cheatsheets/Cross-Site_Request_Forgery_Prevention_Cheat_Sheet.html#login-csrf - I still want to perform CSRF checks on login forms, even though the user may not yet have any cookies. Maybe I can turn off CSRF checks for cookie-free requests but allow login forms to specifically opt back in to CSRF protection? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646307083 | https://github.com/simonw/datasette/issues/835#issuecomment-646307083 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjMwNzA4Mw== | simonw 9599 | 2020-06-18T21:09:35Z | 2020-06-18T21:09:35Z | OWNER | So maybe one really easy fix here is to disable CSRF checks entirely for any request that doesn't have any cookies? Also suggested here: https://twitter.com/mrkurt/status/1273682965168603137 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646303240 | https://github.com/simonw/datasette/issues/807#issuecomment-646303240 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjMwMzI0MA== | simonw 9599 | 2020-06-18T21:00:41Z | 2020-06-18T21:00:41Z | OWNER | New documentation about the alpha/beta releases: https://datasette.readthedocs.io/en/latest/contributing.html#contributing-alpha-beta |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

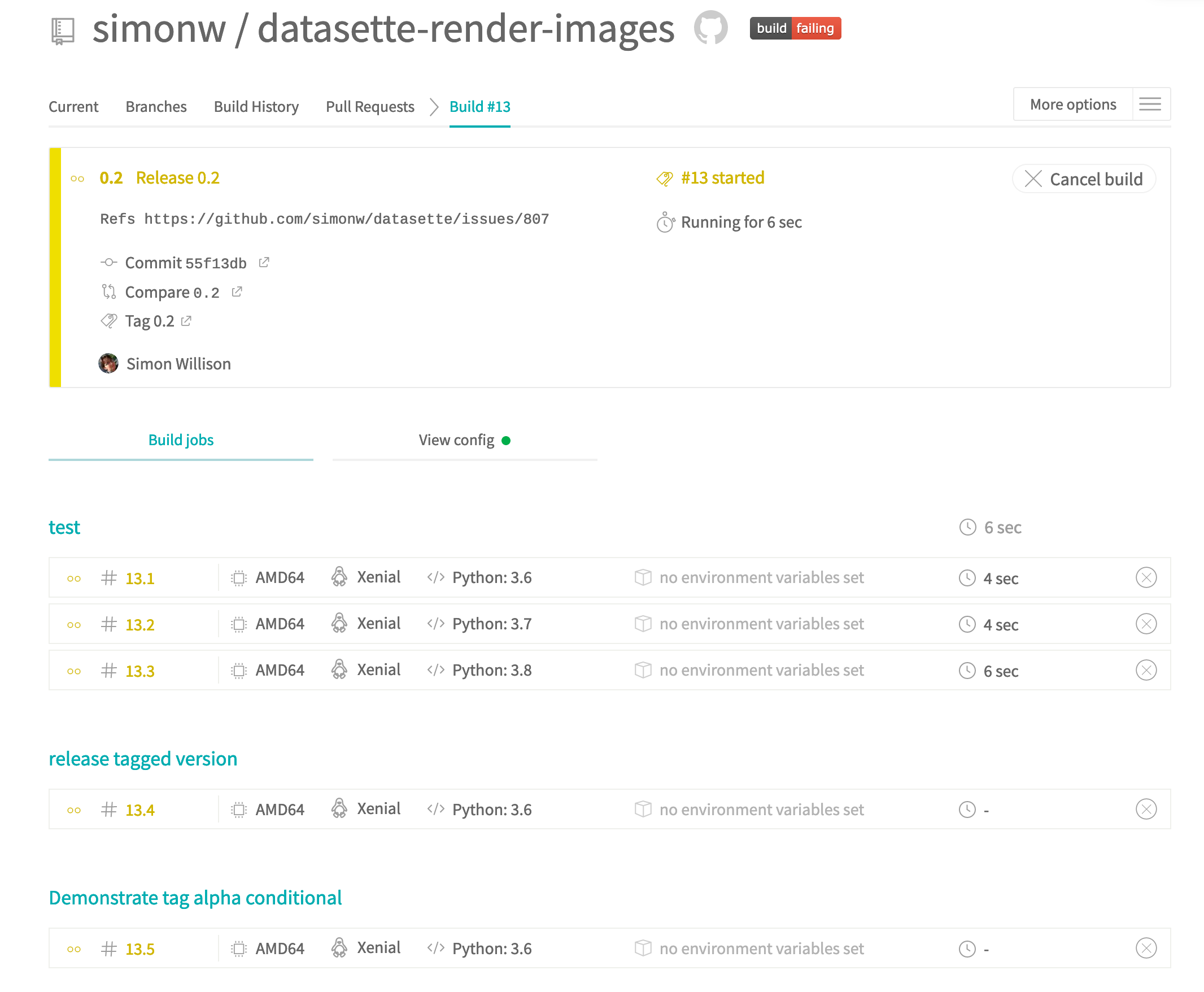

| 646302909 | https://github.com/simonw/datasette/issues/807#issuecomment-646302909 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjMwMjkwOQ== | simonw 9599 | 2020-06-18T21:00:02Z | 2020-06-18T21:00:02Z | OWNER | Alpha release is running through Travis now: https://travis-ci.org/github/simonw/datasette/builds/699864168 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

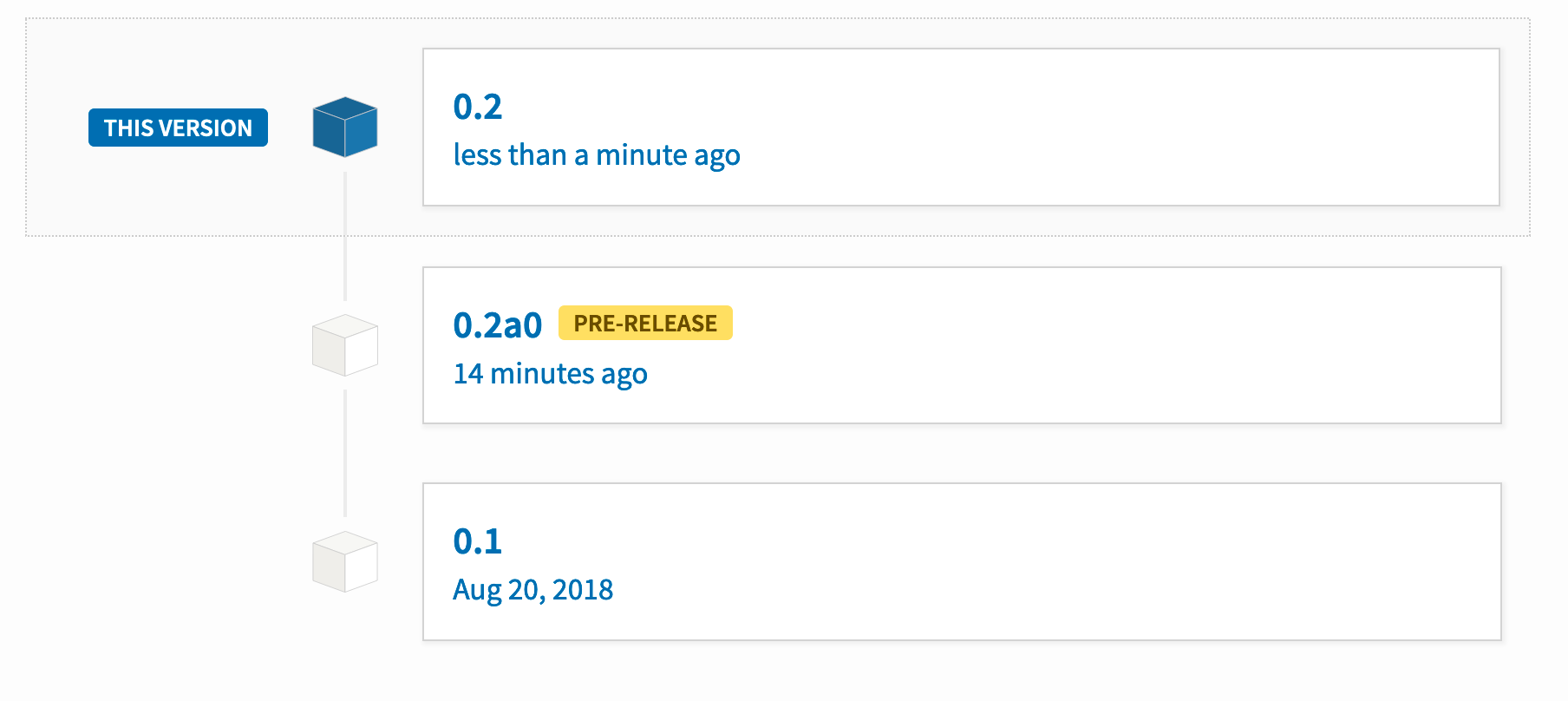

| 646293670 | https://github.com/simonw/datasette/issues/807#issuecomment-646293670 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI5MzY3MA== | simonw 9599 | 2020-06-18T20:38:50Z | 2020-06-18T20:38:50Z | OWNER | https://pypi.org/project/datasette-render-images/#history worked:

I'm now confident enough that I'll make these changes and ship an alpha of Datasette itself. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646293029 | https://github.com/simonw/datasette/issues/807#issuecomment-646293029 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI5MzAyOQ== | simonw 9599 | 2020-06-18T20:37:28Z | 2020-06-18T20:37:46Z | OWNER | Here's the Read The Docs documentation on versioned releases: https://docs.readthedocs.io/en/stable/versions.html It looks like they do the right thing:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646292578 | https://github.com/simonw/datasette/issues/807#issuecomment-646292578 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI5MjU3OA== | simonw 9599 | 2020-06-18T20:36:22Z | 2020-06-18T20:36:22Z | OWNER | https://travis-ci.com/github/simonw/datasette-render-images/builds/172118541 demonstrates that the alpha/beta conditional is working as intended:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646291309 | https://github.com/simonw/datasette/issues/807#issuecomment-646291309 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI5MTMwOQ== | simonw 9599 | 2020-06-18T20:33:31Z | 2020-06-18T20:33:31Z | OWNER | One more experiment: I'm going to ship In the alpha releasing run on Travis that echo statement did NOT execute: https://travis-ci.com/github/simonw/datasette-render-images/builds/172116625 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646290171 | https://github.com/simonw/datasette/issues/807#issuecomment-646290171 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI5MDE3MQ== | simonw 9599 | 2020-06-18T20:30:48Z | 2020-06-18T20:30:48Z | OWNER | OK, I just shipped 0.2a0 of

But this page does: https://pypi.org/project/datasette-render-images/#history

And https://pypi.org/project/datasette-render-images/0.2a0/ exists. In a fresh virtual environment

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646288146 | https://github.com/simonw/datasette/issues/835#issuecomment-646288146 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjI4ODE0Ng== | simonw 9599 | 2020-06-18T20:26:22Z | 2020-06-18T20:26:31Z | OWNER | Useful tip from Carlton Gibson: https://twitter.com/carltongibson/status/1273680590672453632

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646280134 | https://github.com/simonw/datasette/issues/807#issuecomment-646280134 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI4MDEzNA== | simonw 9599 | 2020-06-18T20:08:15Z | 2020-06-18T20:08:15Z | OWNER | https://github.com/simonw/datasette-render-images uses Travis and is low-risk for trying this out. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646279428 | https://github.com/simonw/datasette/issues/807#issuecomment-646279428 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3OTQyOA== | simonw 9599 | 2020-06-18T20:06:43Z | 2020-06-18T20:06:43Z | OWNER | I'm going to try this on a separate repository so I don't accidentally publish a Datasette release I didn't mean to publish! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646279280 | https://github.com/simonw/datasette/issues/807#issuecomment-646279280 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3OTI4MA== | simonw 9599 | 2020-06-18T20:06:24Z | 2020-06-18T20:06:24Z | OWNER | So maybe this condition is right? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646278801 | https://github.com/simonw/datasette/issues/807#issuecomment-646278801 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3ODgwMQ== | simonw 9599 | 2020-06-18T20:05:18Z | 2020-06-18T20:05:18Z | OWNER | Travis conditions documentation: https://docs.travis-ci.com/user/conditions-v1 These look useful:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646277680 | https://github.com/simonw/datasette/issues/807#issuecomment-646277680 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3NzY4MA== | simonw 9599 | 2020-06-18T20:02:42Z | 2020-06-18T20:02:42Z | OWNER | So I think if I push a tag of Except... I don't want to push alphas as Docker images - so I need to fix this code: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646277155 | https://github.com/simonw/datasette/issues/807#issuecomment-646277155 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3NzE1NQ== | simonw 9599 | 2020-06-18T20:01:31Z | 2020-06-18T20:01:31Z | OWNER | I thought I might have to update a regex (my CircleCI configs won't match on |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646276150 | https://github.com/simonw/datasette/issues/807#issuecomment-646276150 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3NjE1MA== | simonw 9599 | 2020-06-18T19:59:17Z | 2020-06-18T19:59:17Z | OWNER | Relevant PEP: https://www.python.org/dev/peps/pep-0440/ Django's implementation dates back 8 years: https://github.com/django/django/commit/40f0ecc56a23d35c2849f8e79276f6d8931412d1 From the PEP:

I'm going to habitually include the 0. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646273035 | https://github.com/simonw/datasette/issues/807#issuecomment-646273035 | https://api.github.com/repos/simonw/datasette/issues/807 | MDEyOklzc3VlQ29tbWVudDY0NjI3MzAzNQ== | simonw 9599 | 2020-06-18T19:52:28Z | 2020-06-18T19:52:28Z | OWNER | I'd like this soon, because I want to start experimenting with things like #852 and #842 without shipping those plugin hooks in a full stable release. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ability to ship alpha and beta releases 632843030 | |

| 646272627 | https://github.com/simonw/datasette/issues/842#issuecomment-646272627 | https://api.github.com/repos/simonw/datasette/issues/842 | MDEyOklzc3VlQ29tbWVudDY0NjI3MjYyNw== | simonw 9599 | 2020-06-18T19:51:32Z | 2020-06-18T19:51:32Z | OWNER | I'd be OK with the first version of this not including a plugin hook. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Magic parameters for canned queries 638212085 | |

| 646264051 | https://github.com/simonw/datasette/issues/842#issuecomment-646264051 | https://api.github.com/repos/simonw/datasette/issues/842 | MDEyOklzc3VlQ29tbWVudDY0NjI2NDA1MQ== | simonw 9599 | 2020-06-18T19:32:13Z | 2020-06-18T19:32:37Z | OWNER | If every magic parameter has a prefix and suffix, like But does it make sense for every magic parameter to be of form |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Magic parameters for canned queries 638212085 | |

| 646246062 | https://github.com/simonw/datasette/issues/842#issuecomment-646246062 | https://api.github.com/repos/simonw/datasette/issues/842 | MDEyOklzc3VlQ29tbWVudDY0NjI0NjA2Mg== | simonw 9599 | 2020-06-18T18:54:41Z | 2020-06-18T18:54:41Z | OWNER | The |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Magic parameters for canned queries 638212085 | |

| 646242172 | https://github.com/simonw/datasette/issues/842#issuecomment-646242172 | https://api.github.com/repos/simonw/datasette/issues/842 | MDEyOklzc3VlQ29tbWVudDY0NjI0MjE3Mg== | simonw 9599 | 2020-06-18T18:46:06Z | 2020-06-18T18:53:31Z | OWNER | Yes that can work - and using conn = sqlite3.connect(":memory:") class Magic(dict): def __missing__(self, key): return key.upper() conn.execute("select :name", Magic()).fetchall()

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Magic parameters for canned queries 638212085 | |

| 646238702 | https://github.com/simonw/datasette/issues/842#issuecomment-646238702 | https://api.github.com/repos/simonw/datasette/issues/842 | MDEyOklzc3VlQ29tbWVudDY0NjIzODcwMg== | simonw 9599 | 2020-06-18T18:39:07Z | 2020-06-18T18:39:07Z | OWNER | It would be nice if Datasette didn't have to do any additional work to find e.g. Could I do this with a custom class that implements |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Magic parameters for canned queries 638212085 | |

| 646218809 | https://github.com/simonw/datasette/issues/820#issuecomment-646218809 | https://api.github.com/repos/simonw/datasette/issues/820 | MDEyOklzc3VlQ29tbWVudDY0NjIxODgwOQ== | simonw 9599 | 2020-06-18T17:58:02Z | 2020-06-18T17:58:02Z | OWNER | I had the same idea again ten days later: #852. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Idea: Plugin hook for registering canned queries 635049296 | |

| 646217766 | https://github.com/simonw/datasette/issues/835#issuecomment-646217766 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjIxNzc2Ng== | simonw 9599 | 2020-06-18T17:55:54Z | 2020-06-18T17:56:04Z | OWNER | Idea: a mechanism where the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646216934 | https://github.com/simonw/datasette/issues/835#issuecomment-646216934 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjIxNjkzNA== | simonw 9599 | 2020-06-18T17:54:14Z | 2020-06-18T17:54:14Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646214158 | https://github.com/simonw/datasette/issues/835#issuecomment-646214158 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjIxNDE1OA== | simonw 9599 | 2020-06-18T17:48:45Z | 2020-06-18T17:48:45Z | OWNER | I wonder if it's safe to generically say "Don't do CSRF protection on any request that includes a |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646209520 | https://github.com/simonw/datasette/issues/835#issuecomment-646209520 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjIwOTUyMA== | simonw 9599 | 2020-06-18T17:39:30Z | 2020-06-18T17:40:53Z | OWNER |

Since |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646204308 | https://github.com/simonw/datasette/issues/835#issuecomment-646204308 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjIwNDMwOA== | simonw 9599 | 2020-06-18T17:32:41Z | 2020-06-18T17:32:41Z | OWNER | The only way I can think of for a view to opt-out of CSRF protection is for them to be able to reconfigure the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646175055 | https://github.com/simonw/datasette/issues/835#issuecomment-646175055 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjE3NTA1NQ== | simonw 9599 | 2020-06-18T17:00:45Z | 2020-06-18T17:00:45Z | OWNER | Here's the Rails pattern for this: https://gist.github.com/maxivak/a25957942b6c21a41acd |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646172200 | https://github.com/simonw/datasette/issues/835#issuecomment-646172200 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjE3MjIwMA== | simonw 9599 | 2020-06-18T16:57:45Z | 2020-06-18T16:57:45Z | OWNER | I think there are a couple of steps to this one. The nature of CSRF is that it's about hijacking existing authentication credentials. If your Datasette site runs without any authentication plugins at all CSRF protection isn't actually useful. Some POST endpoints should be able to opt-out of CSRF protection entirely. A writable canned query that accepts anonymous poll submissions for example might determine that CSRF is not needed. If a plugin adds This means I need two new mechanisms:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646151706 | https://github.com/simonw/datasette/issues/835#issuecomment-646151706 | https://api.github.com/repos/simonw/datasette/issues/835 | MDEyOklzc3VlQ29tbWVudDY0NjE1MTcwNg== | simonw 9599 | 2020-06-18T16:36:23Z | 2020-06-18T16:36:23Z | OWNER | Tweeted about this here: https://twitter.com/simonw/status/1273655053170077701 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for skipping CSRF checks on API posts 637363686 | |

| 646140022 | https://github.com/simonw/datasette/issues/853#issuecomment-646140022 | https://api.github.com/repos/simonw/datasette/issues/853 | MDEyOklzc3VlQ29tbWVudDY0NjE0MDAyMg== | simonw 9599 | 2020-06-18T16:21:53Z | 2020-06-18T16:21:53Z | OWNER | I have a test that demonstrates this working, but also demonstrates that the CSRF protection from #798 makes this really tricky to work with. I'd like to improve that. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Ensure register_routes() works for POST 640943441 | |

| 645785830 | https://github.com/simonw/datasette/issues/852#issuecomment-645785830 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NTc4NTgzMA== | simonw 9599 | 2020-06-18T05:37:00Z | 2020-06-18T05:37:00Z | OWNER | The easiest way to do this would be with a new plugin hook: Another approach would be to make the whole of I think I like the dedicated |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 645781482 | https://github.com/simonw/datasette/issues/852#issuecomment-645781482 | https://api.github.com/repos/simonw/datasette/issues/852 | MDEyOklzc3VlQ29tbWVudDY0NTc4MTQ4Mg== | simonw 9599 | 2020-06-18T05:24:55Z | 2020-06-18T05:25:00Z | OWNER | Question about this on Twitter: https://twitter.com/amjithr/status/1273440766862352384 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

canned_queries() plugin hook 640917326 | |

| 645599881 | https://github.com/dogsheep/twitter-to-sqlite/issues/47#issuecomment-645599881 | https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/47 | MDEyOklzc3VlQ29tbWVudDY0NTU5OTg4MQ== | simonw 9599 | 2020-06-17T20:13:48Z | 2020-06-17T20:13:48Z | MEMBER | I've now figured out how to compile specific SQLite versions to help replicate this problem: https://github.com/simonw/til/blob/master/sqlite/ld-preload.md Next step: replicate the problem! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Fall back to FTS4 if FTS5 is not available 639542974 | |

| 645515103 | https://github.com/dogsheep/twitter-to-sqlite/issues/47#issuecomment-645515103 | https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/47 | MDEyOklzc3VlQ29tbWVudDY0NTUxNTEwMw== | hpk42 73579 | 2020-06-17T17:30:01Z | 2020-06-17T17:30:01Z | NONE | It's the one with python3.7:: On Wed, Jun 17, 2020 at 10:24 -0700, Simon Willison wrote:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Fall back to FTS4 if FTS5 is not available 639542974 | |

| 645512127 | https://github.com/dogsheep/twitter-to-sqlite/issues/47#issuecomment-645512127 | https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/47 | MDEyOklzc3VlQ29tbWVudDY0NTUxMjEyNw== | simonw 9599 | 2020-06-17T17:24:22Z | 2020-06-17T17:24:22Z | MEMBER | That means your version of SQLite is old enough that it doesn't support the FTS5 extension. Could you share what operating system you're running, and what the output is that you get from running this? I can teach this tool to fall back on FTS4 if FTS5 isn't available. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Fall back to FTS4 if FTS5 is not available 639542974 | |

| 645293374 | https://github.com/simonw/datasette/issues/851#issuecomment-645293374 | https://api.github.com/repos/simonw/datasette/issues/851 | MDEyOklzc3VlQ29tbWVudDY0NTI5MzM3NA== | abdusco 3243482 | 2020-06-17T10:32:02Z | 2020-06-17T10:32:28Z | CONTRIBUTOR | Welp, I'm an idiot. Turns out I had a sneaky comma Correcting the SQL solved the issue. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Having trouble getting writable canned queries to work 640330278 | |

| 645068128 | https://github.com/simonw/datasette/issues/850#issuecomment-645068128 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2ODEyOA== | simonw 9599 | 2020-06-16T23:52:16Z | 2020-06-16T23:52:16Z | OWNER | https://aws.amazon.com/blogs/compute/announcing-http-apis-for-amazon-api-gateway/ looks very important here: AWS HTTP APIs were introduced in December 2019 and appear to be a third of the price of API Gateway. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645067611 | https://github.com/simonw/datasette/issues/236#issuecomment-645067611 | https://api.github.com/repos/simonw/datasette/issues/236 | MDEyOklzc3VlQ29tbWVudDY0NTA2NzYxMQ== | simonw 9599 | 2020-06-16T23:50:12Z | 2020-06-16T23:50:59Z | OWNER | As for your other questions:

Yes, exactly. I know this will limit the size of database that can be deployed (since Lambda has a 50MB total package limit as far as I can tell) but there are plenty of interesting databases that are small enough to fit there. The new EFS support for Lambda means that theoretically the size of database is now unlimited, which is really interesting. That's what got me inspired to take a look at a proof of concept in #850.

I personally like scale-to-zero because many of my projects are likely to receive very little traffic. So API GW first, and maybe ALB as an option later on for people operating at scale?

As you've found, the only native component is uvloop which is only needed if uvicorn is being used to serve requests.

For the eventual "datasette publish lambda" command I want whatever results in the smallest amount of inconvenience for users. I've been trying out Amazon SAM in #850 and it requires users to run Docker on their machines, which is a pretty huge barrier to entry! I don't have much experience with CloudFormation but it's probably a better bet, especially if you can "pip install" the dependencies needed to deploy with it. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

datasette publish lambda plugin 317001500 | |

| 645066486 | https://github.com/simonw/datasette/issues/236#issuecomment-645066486 | https://api.github.com/repos/simonw/datasette/issues/236 | MDEyOklzc3VlQ29tbWVudDY0NTA2NjQ4Ng== | simonw 9599 | 2020-06-16T23:45:45Z | 2020-06-16T23:45:45Z | OWNER | Hi Colin, Sorry I didn't see this sooner! I've just started digging into this myself, to try and play with the new EFS Lambda support: #850. Yes, uvloop is only needed because of uvicorn. I have a branch here that removes that dependency just for trying out Lambda: https://github.com/simonw/datasette/tree/no-uvicorn - so you can run I'm going to try out your |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

datasette publish lambda plugin 317001500 | |

| 645064332 | https://github.com/simonw/datasette/issues/850#issuecomment-645064332 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2NDMzMg== | simonw 9599 | 2020-06-16T23:37:34Z | 2020-06-16T23:37:34Z | OWNER | Just realized Colin Dellow reported an issue with Datasette and Mangum back in April - #719 - and has in fact been working on https://github.com/code402/datasette-lambda for a while! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645063386 | https://github.com/simonw/datasette/issues/850#issuecomment-645063386 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2MzM4Ng== | simonw 9599 | 2020-06-16T23:34:07Z | 2020-06-16T23:34:07Z | OWNER | Tried ``` Fetching lambci/lambda:python3.8 Docker container image...... Mounting /private/tmp/datasette-proof-of-concept/.aws-sam/build/HelloWorldFunction as /var/task:ro,delegated inside runtime container START RequestId: 7c04480b-5d42-168e-dec0-4e8bf34fa596 Version: $LATEST [INFO] 2020-06-16T23:33:27.24Z 7c04480b-5d42-168e-dec0-4e8bf34fa596 Waiting for application startup. [INFO] 2020-06-16T23:33:27.24Z 7c04480b-5d42-168e-dec0-4e8bf34fa596 LifespanCycleState.STARTUP: 'lifespan.startup.complete' event received from application. [INFO] 2020-06-16T23:33:27.24Z 7c04480b-5d42-168e-dec0-4e8bf34fa596 Application startup complete. [INFO] 2020-06-16T23:33:27.24Z 7c04480b-5d42-168e-dec0-4e8bf34fa596 Waiting for application shutdown. [INFO] 2020-06-16T23:33:27.24Z 7c04480b-5d42-168e-dec0-4e8bf34fa596 LifespanCycleState.SHUTDOWN: 'lifespan.shutdown.complete' event received from application. [ERROR] KeyError: 'requestContext' Traceback (most recent call last): File "/var/task/mangum/adapter.py", line 110, in __call__ return self.handler(event, context) File "/var/task/mangum/adapter.py", line 130, in handler if "eventType" in event["requestContext"]: END RequestId: 7c04480b-5d42-168e-dec0-4e8bf34fa596 REPORT RequestId: 7c04480b-5d42-168e-dec0-4e8bf34fa596 Init Duration: 1120.76 ms Duration: 7.08 ms Billed Duration: 100 ms Memory Size: 128 MB Max Memory Used: 47 MB {"errorType":"KeyError","errorMessage":"'requestContext'","stackTrace":[" File \"/var/task/mangum/adapter.py\", line 110, in __call__\n return self.handler(event, context)\n"," File \"/var/task/mangum/adapter.py\", line 130, in handler\n if \"eventType\" in event[\"requestContext\"]:\n"]} ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645062266 | https://github.com/simonw/datasette/issues/850#issuecomment-645062266 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2MjI2Ng== | simonw 9599 | 2020-06-16T23:30:12Z | 2020-06-16T23:33:12Z | OWNER | OK, changed |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645063058 | https://github.com/simonw/datasette/issues/850#issuecomment-645063058 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2MzA1OA== | simonw 9599 | 2020-06-16T23:32:57Z | 2020-06-16T23:32:57Z | OWNER | https://q7lymja3sj.execute-api.us-east-1.amazonaws.com/Prod/hello/ is now giving me a 500 internal server error. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645061088 | https://github.com/simonw/datasette/issues/850#issuecomment-645061088 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2MTA4OA== | simonw 9599 | 2020-06-16T23:25:41Z | 2020-06-16T23:25:41Z | OWNER | Someone else ran into this problem: https://github.com/iwpnd/fastapi-aws-lambda-example/issues/1 So I need to be able to pip install MOST of Datasette, but skip |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645060598 | https://github.com/simonw/datasette/issues/850#issuecomment-645060598 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA2MDU5OA== | simonw 9599 | 2020-06-16T23:24:01Z | 2020-06-16T23:24:01Z | OWNER | I changed datasette = Datasette([], memory=True)

lambda_handler = Mangum(datasette.app())

Fetching lambci/lambda:build-python3.8 Docker container image...... Mounting /private/tmp/datasette-proof-of-concept/hello_world as /tmp/samcli/source:ro,delegated inside runtime container Build Failed

Running PythonPipBuilder:ResolveDependencies

Error: PythonPipBuilder:ResolveDependencies - {uvloop==0.14.0(wheel)}

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645059663 | https://github.com/simonw/datasette/issues/850#issuecomment-645059663 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1OTY2Mw== | simonw 9599 | 2020-06-16T23:20:46Z | 2020-06-16T23:20:46Z | OWNER | I added an exclamation mark to hello world and ran Running |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645058947 | https://github.com/simonw/datasette/issues/850#issuecomment-645058947 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1ODk0Nw== | simonw 9599 | 2020-06-16T23:18:18Z | 2020-06-16T23:18:18Z | OWNER | https://q7lymja3sj.execute-api.us-east-1.amazonaws.com/Prod/hello/ That's a pretty ugly URL. I'm not sure how to get rid of the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645058617 | https://github.com/simonw/datasette/issues/850#issuecomment-645058617 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1ODYxNw== | simonw 9599 | 2020-06-16T23:17:09Z | 2020-06-16T23:17:09Z | OWNER | OK, ``` Configuring SAM deploy Initiating deployment Uploading to datasette-proof-of-concept/0c208b5656a7aeb6186d49bebc595237 535344 / 535344.0 (100.00%) HelloWorldFunction may not have authorization defined. Uploading to datasette-proof-of-concept/14bd9ce3e21f9c88634d13c0c9b377e4.template 1147 / 1147.0 (100.00%) Waiting for changeset to be created.. CloudFormation stack changeset Operation LogicalResourceId ResourceType

Changeset created successfully. arn:aws:cloudformation:us-east-1:462092780466:changeSet/samcli-deploy1592349262/d685f2de-87c1-4b8e-b13a-67b94f8fc928 2020-06-16 16:14:29 - Waiting for stack create/update to complete CloudFormation events from changeset ResourceStatus ResourceType LogicalResourceId ResourceStatusReason CREATE_IN_PROGRESS AWS::IAM::Role HelloWorldFunctionRole - CloudFormation outputs from deployed stack Outputs Key HelloWorldFunctionIamRole Key HelloWorldApi Key HelloWorldFunction Successfully created/updated stack - datasette-proof-of-concept in us-east-1 ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645056636 | https://github.com/simonw/datasette/issues/850#issuecomment-645056636 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1NjYzNg== | simonw 9599 | 2020-06-16T23:10:22Z | 2020-06-16T23:10:22Z | OWNER | Clicking that button generated me an access key ID / access key secret pair. Dropping those into |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645055200 | https://github.com/simonw/datasette/issues/850#issuecomment-645055200 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1NTIwMA== | simonw 9599 | 2020-06-16T23:05:48Z | 2020-06-16T23:05:48Z | OWNER | Logged in as

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645054206 | https://github.com/simonw/datasette/issues/850#issuecomment-645054206 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1NDIwNg== | simonw 9599 | 2020-06-16T23:02:54Z | 2020-06-16T23:04:59Z | OWNER | I think I need to sign in to the AWS console with this new ... for which I needed my root "account ID" - a 12 digit number - to use on the IAM login form. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645053923 | https://github.com/simonw/datasette/issues/850#issuecomment-645053923 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1MzkyMw== | simonw 9599 | 2020-06-16T23:01:49Z | 2020-06-16T23:01:49Z | OWNER | I used https://console.aws.amazon.com/billing/home?#/account and activated "IAM user/role access to billing information" - what a puzzling first step! I created a new user with AWS console access (which means access to the web UI) called

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645051972 | https://github.com/simonw/datasette/issues/850#issuecomment-645051972 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1MTk3Mg== | simonw 9599 | 2020-06-16T22:55:04Z | 2020-06-16T22:55:04Z | OWNER | ``` simon@Simons-MacBook-Pro datasette-proof-of-concept % sam deploy --guided Configuring SAM deploy Error: Failed to create managed resources: Unable to locate credentials ``` I need to get my AWS credentials sorted. I'm going to follow https://docs.aws.amazon.com/IAM/latest/UserGuide/getting-started_create-admin-group.html and https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-getting-started-set-up-credentials.html |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645051370 | https://github.com/simonw/datasette/issues/850#issuecomment-645051370 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1MTM3MA== | simonw 9599 | 2020-06-16T22:53:05Z | 2020-06-16T22:53:05Z | OWNER | ``` simon@Simons-MacBook-Pro datasette-proof-of-concept % sam local invoke Invoking app.lambda_handler (python3.8) Fetching lambci/lambda:python3.8 Docker container image.................................................................................................................................................................................................................................... Mounting /private/tmp/datasette-proof-of-concept/.aws-sam/build/HelloWorldFunction as /var/task:ro,delegated inside runtime container START RequestId: 4616ab43-6882-1627-e5e3-5a29730d52f9 Version: $LATEST END RequestId: 4616ab43-6882-1627-e5e3-5a29730d52f9 REPORT RequestId: 4616ab43-6882-1627-e5e3-5a29730d52f9 Init Duration: 140.84 ms Duration: 2.49 ms Billed Duration: 100 ms Memory Size: 128 MB Max Memory Used: 25 MB {"statusCode":200,"body":"{\"message\": \"hello world\"}"} simon@Simons-MacBook-Pro datasette-proof-of-concept % sam local invoke Invoking app.lambda_handler (python3.8) Fetching lambci/lambda:python3.8 Docker container image...... Mounting /private/tmp/datasette-proof-of-concept/.aws-sam/build/HelloWorldFunction as /var/task:ro,delegated inside runtime container START RequestId: 3189df2f-e9c0-1be4-b9ac-f329c5fcd067 Version: $LATEST END RequestId: 3189df2f-e9c0-1be4-b9ac-f329c5fcd067 REPORT RequestId: 3189df2f-e9c0-1be4-b9ac-f329c5fcd067 Init Duration: 87.22 ms Duration: 2.34 ms Billed Duration: 100 ms Memory Size: 128 MB Max Memory Used: 25 MB {"statusCode":200,"body":"{\"message\": \"hello world\"}"} ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645050948 | https://github.com/simonw/datasette/issues/850#issuecomment-645050948 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA1MDk0OA== | simonw 9599 | 2020-06-16T22:51:30Z | 2020-06-16T22:52:30Z | OWNER | ``` simon@Simons-MacBook-Pro datasette-proof-of-concept % sam build --use-container Starting Build inside a container Building function 'HelloWorldFunction' Fetching lambci/lambda:build-python3.8 Docker container image.......................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................... 
Mounting /private/tmp/datasette-proof-of-concept/hello_world as /tmp/samcli/source:ro,delegated inside runtime container Build Succeeded Built Artifacts : .aws-sam/build Built Template : .aws-sam/build/template.yaml Commands you can use next [*] Invoke Function: sam local invoke [*] Deploy: sam deploy --guided Running PythonPipBuilder:ResolveDependencies Running PythonPipBuilder:CopySource ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645048062 | https://github.com/simonw/datasette/issues/850#issuecomment-645048062 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA0ODA2Mg== | simonw 9599 | 2020-06-16T22:41:33Z | 2020-06-16T22:41:33Z | OWNER | ``` simon@Simons-MacBook-Pro /tmp % sam init Which template source would you like to use? 1 - AWS Quick Start Templates 2 - Custom Template Location Choice: 1 Which runtime would you like to use? 1 - nodejs12.x 2 - python3.8 3 - ruby2.7 4 - go1.x 5 - java11 6 - dotnetcore3.1 7 - nodejs10.x 8 - python3.7 9 - python3.6 10 - python2.7 11 - ruby2.5 12 - java8 13 - dotnetcore2.1 Runtime: 2 Project name [sam-app]: datasette-proof-of-concept Cloning app templates from https://github.com/awslabs/aws-sam-cli-app-templates.git AWS quick start application templates: 1 - Hello World Example 2 - EventBridge Hello World 3 - EventBridge App from scratch (100+ Event Schemas) 4 - Step Functions Sample App (Stock Trader) Template selection: 1 Generating application: Name: datasette-proof-of-concept Runtime: python3.8 Dependency Manager: pip Application Template: hello-world Output Directory: . Next steps can be found in the README file at ./datasette-proof-of-concept/README.md ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645047703 | https://github.com/simonw/datasette/issues/850#issuecomment-645047703 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA0NzcwMw== | simonw 9599 | 2020-06-16T22:40:19Z | 2020-06-16T22:40:19Z | OWNER | Installed SAM:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645045055 | https://github.com/simonw/datasette/issues/850#issuecomment-645045055 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA0NTA1NQ== | simonw 9599 | 2020-06-16T22:31:49Z | 2020-06-16T22:31:49Z | OWNER | It looks like SAM - AWS Serverless Application Model - is the currently recommended way to deploy Python apps to Lambda from the command-line: https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-getting-started-hello-world.html |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645042625 | https://github.com/simonw/datasette/issues/850#issuecomment-645042625 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA0MjYyNQ== | simonw 9599 | 2020-06-16T22:24:26Z | 2020-06-16T22:24:26Z | OWNER | From https://mangum.io/adapter/

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645041663 | https://github.com/simonw/datasette/issues/850#issuecomment-645041663 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTA0MTY2Mw== | simonw 9599 | 2020-06-16T22:21:44Z | 2020-06-16T22:21:44Z | OWNER | https://github.com/jordaneremieff/mangum looks like the best way to run an ASGI app on Lambda at the moment. ```python from mangum import Mangum async def app(scope, receive, send): await send( { "type": "http.response.start", "status": 200, "headers": [[b"content-type", b"text/plain; charset=utf-8"]], } ) await send({"type": "http.response.body", "body": b"Hello, world!"}) handler = Mangum(app) ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645032643 | https://github.com/simonw/datasette/issues/850#issuecomment-645032643 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTAzMjY0Mw== | simonw 9599 | 2020-06-16T21:57:10Z | 2020-06-16T21:57:10Z | OWNER | https://docs.aws.amazon.com/efs/latest/ug/wt1-getting-started.html is an EFS walk-through using the AWS CLI tool instead of clicking around in their web interface. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645031225 | https://github.com/simonw/datasette/issues/850#issuecomment-645031225 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTAzMTIyNQ== | simonw 9599 | 2020-06-16T21:53:25Z | 2020-06-16T21:53:25Z | OWNER | Easier solution to this might be to have two functions - a "read-only" one which is allowed to scale as much as it likes, and a "write-only" one which can write to the database files but is limited to running a maximum of one Lambda instance. https://docs.aws.amazon.com/lambda/latest/dg/invocation-scaling.html |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 645030262 | https://github.com/simonw/datasette/issues/850#issuecomment-645030262 | https://api.github.com/repos/simonw/datasette/issues/850 | MDEyOklzc3VlQ29tbWVudDY0NTAzMDI2Mg== | simonw 9599 | 2020-06-16T21:51:01Z | 2020-06-16T21:51:39Z | OWNER | File locking is interesting here. https://docs.aws.amazon.com/lambda/latest/dg/services-efs.html

SQLite can apparently work on NFS v4.1. I think I'd rather set things up so there's only ever one writer - so a Datasette instance could scale reads by running lots more lambda functions but only one function ever writes to a file at a time. Not sure if that's feasible with Lambda though - maybe by adding some additional shared state mechanism like Redis? |

{

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} |

Proof of concept for Datasette on AWS Lambda with EFS 639993467 | |

| 644987083 | https://github.com/simonw/datasette/issues/690#issuecomment-644987083 | https://api.github.com/repos/simonw/datasette/issues/690 | MDEyOklzc3VlQ29tbWVudDY0NDk4NzA4Mw== | simonw 9599 | 2020-06-16T20:11:35Z | 2020-06-16T20:11:35Z | OWNER | Twitter conversation about drop-down menu solutions that are accessible, fast loading and use minimal JavaScript: https://twitter.com/simonw/status/1272974294545395712 I really like the approach taken by GitHub Primer, which builds on top of HTML |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Mechanism for plugins to add action menu items for various things 573755726 | |

| 644584075 | https://github.com/simonw/datasette/issues/849#issuecomment-644584075 | https://api.github.com/repos/simonw/datasette/issues/849 | MDEyOklzc3VlQ29tbWVudDY0NDU4NDA3NQ== | simonw 9599 | 2020-06-16T07:24:08Z | 2020-06-16T07:24:08Z | OWNER | This guide is fantastic - I'll be following it closely: https://github.com/chancancode/branch-rename/blob/main/README.md - in particular the Action to mirror master and main for a while. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rename master branch to main 639072811 | |

| 644384787 | https://github.com/simonw/datasette/issues/849#issuecomment-644384787 | https://api.github.com/repos/simonw/datasette/issues/849 | MDEyOklzc3VlQ29tbWVudDY0NDM4NDc4Nw== | simonw 9599 | 2020-06-15T20:56:07Z | 2020-06-15T20:56:19Z | OWNER | The big question is how this impacts existing CI configuration. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rename master branch to main 639072811 | |

| 644384417 | https://github.com/simonw/datasette/issues/849#issuecomment-644384417 | https://api.github.com/repos/simonw/datasette/issues/849 | MDEyOklzc3VlQ29tbWVudDY0NDM4NDQxNw== | simonw 9599 | 2020-06-15T20:55:23Z | 2020-06-15T20:55:23Z | OWNER | I'm doing https://github.com/simonw/datasette-psutil first. In my local checkout:

Then in https://github.com/simonw/datasette-psutil/settings/branches I changed the default branch to

Links to these docs: https://help.github.com/en/github/administering-a-repository/setting-the-default-branch That worked! https://github.com/simonw/datasette-psutil One catch, which I think will impact my most widely used repos the most (like datasette) - linking to a specific file now looks like this: https://github.com/simonw/datasette-psutil/blob/main/datasette_psutil/__init__.py The old https://github.com/simonw/datasette-psutil/blob/master/datasette_psutil/__init__.py link is presumably frozen in time? I've definitely got links spread around the web to my "most recent version of this code" that would use the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rename master branch to main 639072811 | |

| 644322234 | https://github.com/simonw/datasette/issues/849#issuecomment-644322234 | https://api.github.com/repos/simonw/datasette/issues/849 | MDEyOklzc3VlQ29tbWVudDY0NDMyMjIzNA== | simonw 9599 | 2020-06-15T19:06:16Z | 2020-06-15T19:06:16Z | OWNER | I'll make this change on a few of my other repos first to make sure I haven't missed any tricky edge-cases. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Rename master branch to main 639072811 | |

| 643709037 | https://github.com/simonw/datasette/issues/691#issuecomment-643709037 | https://api.github.com/repos/simonw/datasette/issues/691 | MDEyOklzc3VlQ29tbWVudDY0MzcwOTAzNw== | amjith 49260 | 2020-06-14T02:35:16Z | 2020-06-14T02:35:16Z | CONTRIBUTOR | The server should reload in the Ref: #848 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

--reload should reload server if code in --plugins-dir changes 574021194 | |

| 643704730 | https://github.com/simonw/datasette/issues/847#issuecomment-643704730 | https://api.github.com/repos/simonw/datasette/issues/847 | MDEyOklzc3VlQ29tbWVudDY0MzcwNDczMA== | simonw 9599 | 2020-06-14T01:28:34Z | 2020-06-14T01:28:34Z | OWNER | Here's the plugin that adds those custom SQLite functions: ```python from datasette import hookimpl from coverage.numbits import register_sqlite_functions @hookimpl def prepare_connection(conn): register_sqlite_functions(conn) ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Take advantage of .coverage being a SQLite database 638259643 | |

| 643704565 | https://github.com/simonw/datasette/issues/847#issuecomment-643704565 | https://api.github.com/repos/simonw/datasette/issues/847 | MDEyOklzc3VlQ29tbWVudDY0MzcwNDU2NQ== | simonw 9599 | 2020-06-14T01:26:56Z | 2020-06-14T01:26:56Z | OWNER | On closer inspection, I don't know if there's that much useful stuff you can do with the data from Consider the following query against a

It looks like this tells me which lines of which files were executed during the test run. But... without the actual source code, I don't think I can calculate the coverage percentage for each file. I don't want to count comment lines or whitespace as untested for example, and I don't know how many lines were in the file. If I'm right that it's not possible to calculate percentage coverage from just the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Take advantage of .coverage being a SQLite database 638259643 | |

| 643702715 | https://github.com/simonw/datasette/issues/847#issuecomment-643702715 | https://api.github.com/repos/simonw/datasette/issues/847 | MDEyOklzc3VlQ29tbWVudDY0MzcwMjcxNQ== | simonw 9599 | 2020-06-14T01:03:30Z | 2020-06-14T01:03:40Z | OWNER | Filed a related issue with some ideas against |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Take advantage of .coverage being a SQLite database 638259643 | |

| 643699583 | https://github.com/simonw/datasette/issues/846#issuecomment-643699583 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY5OTU4Mw== | simonw 9599 | 2020-06-14T00:26:31Z | 2020-06-14T00:26:31Z | OWNER | That seems to have fixed the problem, at least for the moment. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643699063 | https://github.com/simonw/datasette/issues/846#issuecomment-643699063 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY5OTA2Mw== | simonw 9599 | 2020-06-14T00:22:32Z | 2020-06-14T00:22:32Z | OWNER | Idea: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643698790 | https://github.com/simonw/datasette/issues/846#issuecomment-643698790 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY5ODc5MA== | simonw 9599 | 2020-06-14T00:20:42Z | 2020-06-14T00:20:42Z | OWNER | Released a new plugin, |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643685669 | https://github.com/simonw/datasette/issues/846#issuecomment-643685669 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY4NTY2OQ== | simonw 9599 | 2020-06-13T22:24:22Z | 2020-06-13T22:24:22Z | OWNER | I tried this experiment:

Likewise:

```
In [11]: conn = sqlite3.connect("fixtures.db")
In [12]: psutil.Process().open_files()
In [13]: del conn
In [14]: psutil.Process().open_files()
```

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643685333 | https://github.com/simonw/datasette/issues/846#issuecomment-643685333 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY4NTMzMw== | simonw 9599 | 2020-06-13T22:19:38Z | 2020-06-13T22:19:38Z | OWNER | That's 91 open files but only 29 unique filenames. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643685207 | https://github.com/simonw/datasette/issues/846#issuecomment-643685207 | https://api.github.com/repos/simonw/datasette/issues/846 | MDEyOklzc3VlQ29tbWVudDY0MzY4NTIwNw== | simonw 9599 | 2020-06-13T22:18:01Z | 2020-06-13T22:18:01Z | OWNER | This shows currently open files (after I ran it inside

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Too many open files" error running tests 638241779 | |

| 643681747 | https://github.com/simonw/datasette/issues/841#issuecomment-643681747 | https://api.github.com/repos/simonw/datasette/issues/841 | MDEyOklzc3VlQ29tbWVudDY0MzY4MTc0Nw== | simonw 9599 | 2020-06-13T21:38:46Z | 2020-06-13T21:38:46Z | OWNER | Closing this because I've researched feasibility. I may start a milestone in the future to help me get to 100%. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research feasibility of 100% test coverage 638104520 | |

| 643681517 | https://github.com/simonw/datasette/pull/844#issuecomment-643681517 | https://api.github.com/repos/simonw/datasette/issues/844 | MDEyOklzc3VlQ29tbWVudDY0MzY4MTUxNw== | simonw 9599 | 2020-06-13T21:36:15Z | 2020-06-13T21:36:15Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Action to run tests and upload coverage report 638230433 | ||

| 643676314 | https://github.com/simonw/datasette/issues/843#issuecomment-643676314 | https://api.github.com/repos/simonw/datasette/issues/843 | MDEyOklzc3VlQ29tbWVudDY0MzY3NjMxNA== | simonw 9599 | 2020-06-13T20:47:37Z | 2020-06-13T20:47:37Z | OWNER | I can use this action: https://github.com/codecov/codecov-action |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Configure codecov.io 638229448 |

CREATE TABLE [issue_comments] (

[html_url] TEXT,

[issue_url] TEXT,

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[created_at] TEXT,

[updated_at] TEXT,

[author_association] TEXT,

[body] TEXT,

[reactions] TEXT,

[issue] INTEGER REFERENCES [issues]([id])

, [performed_via_github_app] TEXT);

CREATE INDEX [idx_issue_comments_issue]

ON [issue_comments] ([issue]);

CREATE INDEX [idx_issue_comments_user]

ON [issue_comments] ([user]);
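
The schema above can be exercised with a small sketch using Python's standard `sqlite3` module. It loads the schema into an in-memory database, inserts four of the rows shown on this page, and reproduces two things the page displays: the `updated_at` descending sort and the per-issue comment counts. The variable names (`latest`, `per_issue`, `totals`) are illustrative assumptions, and the `[users]` and `[issues]` tables are left out on the assumption that foreign key enforcement is off, which is SQLite's default.

```python
import json
import sqlite3

# Schema copied from the page. The referenced [users] and [issues] tables are
# not created here; SQLite accepts this while foreign keys are not enforced.
SCHEMA = """
CREATE TABLE [issue_comments] (
   [html_url] TEXT,
   [issue_url] TEXT,
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [created_at] TEXT,
   [updated_at] TEXT,
   [author_association] TEXT,
   [body] TEXT,
   [reactions] TEXT,
   [issue] INTEGER REFERENCES [issues]([id])
, [performed_via_github_app] TEXT);
CREATE INDEX [idx_issue_comments_issue] ON [issue_comments] ([issue]);
CREATE INDEX [idx_issue_comments_user] ON [issue_comments] ([user]);
"""

# Every row on this page carries the same all-zero reactions object.
NO_REACTIONS = json.dumps({
    "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0,
    "confused": 0, "heart": 0, "rocket": 0, "eyes": 0,
})

# (id, user, timestamp, author_association, body, issue), taken from the table.
ROWS = [
    (643699583, 9599, "2020-06-14T00:26:31Z", "OWNER",
     "That seems to have fixed the problem, at least for the moment.", 638241779),
    (643685333, 9599, "2020-06-13T22:19:38Z", "OWNER",
     "That's 91 open files but only 29 unique filenames.", 638241779),
    (643681747, 9599, "2020-06-13T21:38:46Z", "OWNER",
     "Closing this because I've researched feasibility. "
     "I may start a milestone in the future to help me get to 100%.", 638104520),
    (643676314, 9599, "2020-06-13T20:47:37Z", "OWNER",
     "I can use this action: https://github.com/codecov/codecov-action", 638229448),
]

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO issue_comments "
    "(id, user, created_at, updated_at, author_association, body, reactions, issue) "
    "VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
    # created_at and updated_at are identical for these four rows.
    [(i, u, ts, ts, assoc, body, NO_REACTIONS, issue)
     for (i, u, ts, assoc, body, issue) in ROWS],
)

# ISO 8601 UTC timestamps stored as TEXT sort correctly as plain strings.
latest = conn.execute(
    "SELECT id, updated_at FROM issue_comments ORDER BY updated_at DESC"
).fetchall()

# Comment counts per issue, similar to the issue facet at the top of the page.
per_issue = conn.execute(
    "SELECT issue, COUNT(*) AS n FROM issue_comments "
    "GROUP BY issue ORDER BY n DESC, issue"
).fetchall()

# The reactions column holds JSON text, so it is decoded in Python here.
totals = [
    json.loads(r)["total_count"]
    for (r,) in conn.execute("SELECT reactions FROM issue_comments")
]

print(latest[0])
print(per_issue)
```

Storing `reactions` as TEXT keeps the table simple, at the cost of decoding the JSON in application code (or with SQLite's JSON functions, where available) whenever individual counts are needed.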

user >30