issues

90 rows where comments = 2 and state = "open" sorted by updated_at descending

This data as json, CSV (advanced)

Suggested facets: milestone, author_association, created_at (date), updated_at (date)

repo 10

state 1

- open · 90 ✖

| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at ▲ | closed_at | author_association | pull_request | body | repo | type | active_lock_reason | performed_via_github_app | reactions | draft | state_reason |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1570375808 | I_kwDODFdgUs5dmgiA | 79 | Deploy demo job is failing due to rate limit | simonw 9599 | open | 0 | 2 | 2023-02-03T20:05:01Z | 2023-12-08T14:50:15Z | MEMBER | github-to-sqlite 207052882 | issue | {

"url": "https://api.github.com/repos/dogsheep/github-to-sqlite/issues/79/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 1865572575 | PR_kwDOBm6k_c5Yt2eO | 2155 | Fix hupper.start_reloader entry point | cadeef 79087 | open | 0 | 2 | 2023-08-24T17:14:08Z | 2023-09-27T18:44:02Z | FIRST_TIME_CONTRIBUTOR | simonw/datasette/pulls/2155 | Update hupper's entry point so that click commands are processed properly. Fixes #2123 :books: Documentation preview :books:: https://datasette--2155.org.readthedocs.build/en/2155/ |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2155/reactions",

"total_count": 2,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 2,

"eyes": 0

} |

0 | ||||||

| 1825007061 | I_kwDOBm6k_c5sx2XV | 2123 | datasette serve when invoked with --reload interprets the serve command as a file | cadeef 79087 | open | 0 | 2 | 2023-07-27T19:07:22Z | 2023-09-18T13:02:46Z | NONE | When running

If a 'serve' file is created it launches properly (albeit with an empty database called serve):

Version (running from HEAD on main):

This issue appears to have existed for awhile as https://github.com/simonw/datasette/issues/1380#issuecomment-953366110 mentions the error in a different context. I'm happy to debug and land a patch if it's welcome. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2123/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1900026059 | I_kwDOBm6k_c5xQBjL | 2188 | Plugin Hooks for "compile to SQL" languages | asg017 15178711 | open | 0 | 2 | 2023-09-18T01:37:15Z | 2023-09-18T06:58:53Z | CONTRIBUTOR | There's a ton of tools/languages that compile to SQL, which may be nice in Datasette. Some examples:

It would be cool if plugins could extend Datasette to use these languages, in both the code editor and API usage. A few things I'd imagine a

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2188/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1898927976 | I_kwDOBm6k_c5xL1do | 2186 | Mechanism for register_output_renderer hooks to access full count | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 2 | 2023-09-15T18:57:54Z | 2023-09-15T19:27:59Z | OWNER | The cause of this bug: - https://github.com/simonw/datasette-export-notebook/issues/17 Is that That field is no longer available by default - the It would be useful if plugins like this could access the total count on demand, should they need to. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2186/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1891614971 | I_kwDOCGYnMM5wv8D7 | 594 | Represent compound foreign keys in table.foreign_keys output | simonw 9599 | open | 0 | 2 | 2023-09-12T03:48:24Z | 2023-09-12T03:51:13Z | OWNER | Given this schema:

|

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/594/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1808215339 | I_kwDOBm6k_c5rxy0r | 2104 | Tables starting with an underscore should be treated as hidden | simonw 9599 | open | 0 | 2 | 2023-07-17T17:13:53Z | 2023-07-18T22:41:37Z | OWNER | Plugins can then take advantage of this pattern, for example: - https://github.com/simonw/datasette-auth-tokens/pull/8 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2104/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1765870617 | I_kwDOBm6k_c5pQQwZ | 2087 | `--settings settings.json` option | simonw 9599 | open | 0 | 2 | 2023-06-20T17:48:45Z | 2023-07-14T17:02:03Z | OWNER | https://discord.com/channels/823971286308356157/823971286941302908/1120705940728066080

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2087/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1795219865 | I_kwDOCGYnMM5rAOGZ | 566 | `--no-headers` doesn't work on most formats | zellyn 33625 | open | 0 | 2 | 2023-07-09T03:43:36Z | 2023-07-09T04:13:35Z | NONE | Version 3.33

|

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/566/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1781005740 | I_kwDOBm6k_c5qJ_2s | 2090 | Adopt ruff for linting | simonw 9599 | open | 0 | 2 | 2023-06-29T14:56:43Z | 2023-06-29T15:05:04Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2090/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 1708030220 | I_kwDOBm6k_c5lznkM | 2073 | Faceting doesn't work against integer columns in views | simonw 9599 | open | 0 | 2 | 2023-05-12T18:20:10Z | 2023-05-12T18:24:07Z | OWNER | Spotted this issue here: https://til.simonwillison.net/datasette/baseline I had to do this workaround:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2073/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1595340692 | I_kwDOCGYnMM5fFveU | 530 | add ability to configure "on delete" and "on update" attributes of foreign keys: | fgregg 536941 | open | 0 | 2 | 2023-02-22T15:44:14Z | 2023-05-08T20:39:01Z | CONTRIBUTOR | sqlite supports these, and it would be quite nice to be able to add them with sqlite-utils. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/530/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1617602868 | I_kwDOJHON9s5gaqk0 | 6 | Character encoding problem | simonw 9599 | open | 0 | 2 | 2023-03-09T16:44:34Z | 2023-04-14T15:22:09Z | MEMBER | I ran against a recent note with this in it:

And got back:

Pasting that into https://ftfy.vercel.app/?s=Actions+%E2%80%9A%C3%B6%C3%B4%C3%94%E2%88%8F%C3%A8+ gives this:

|

apple-notes-to-sqlite 611552758 | issue | {

"url": "https://api.github.com/repos/dogsheep/apple-notes-to-sqlite/issues/6/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1515883470 | I_kwDOC8tyDs5aWovO | 24 | DOC: xml.etree.ElementTree.ParseError due to healthkit version 12 | mmngreco 6231413 | open | 0 | 2 | 2023-01-01T23:00:38Z | 2023-03-30T10:17:31Z | NONE | Hi @simonw I hope you find this issue ok, the idea is provide some documentation to other users like me about how to solve this problem and save some time. Following the instructions from the

So, after debugging and searching on internet I found this useful link: https://discussions.apple.com/thread/254202523 (etresoft, the real hero). Which basically says that the xml given by the health app (healthkit version 12) has some bugs but fortunately, they can be solved with a couple of commads:

|

healthkit-to-sqlite 197882382 | issue | {

"url": "https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/24/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1613974869 | PR_kwDOBm6k_c5LgPS- | 2034 | remove an unused `app` var in cli.py | wenhoujx 4370201 | open | 0 | 2 | 2023-03-07T18:19:05Z | 2023-03-29T20:56:20Z | FIRST_TIME_CONTRIBUTOR | simonw/datasette/pulls/2034 | this var Feel free to ignore this PR if the deleted line actually does something. :books: Documentation preview :books:: https://datasette--2034.org.readthedocs.build/en/2034/ |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2034/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 1618249044 | I_kwDOBm6k_c5gdIVU | 2038 | Consider a `strict_templates` setting | simonw 9599 | open | 0 | 2 | 2023-03-10T02:09:13Z | 2023-03-10T02:11:06Z | OWNER | A setting which turns on Jinja strict mode, so any templates that access undefined variables raise a hard error. Prototype here:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2038/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1616429236 | I_kwDOJHON9s5gWMC0 | 4 | Support incremental updates | simonw 9599 | open | 0 | 2 | 2023-03-09T05:14:00Z | 2023-03-09T18:20:56Z | MEMBER | Running this script can take several hours against a large notes database. Would be neat if it could run against just the notes that have been modified since it last ran. Could pull the max Problem is I don't actually know what order it iterates over the notes in. |

apple-notes-to-sqlite 611552758 | issue | {

"url": "https://api.github.com/repos/dogsheep/apple-notes-to-sqlite/issues/4/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1186696202 | I_kwDOBm6k_c5Gu4wK | 1696 | Show foreign key label when filtering | simonw 9599 | open | 0 | 2 | 2022-03-30T16:18:54Z | 2023-01-29T20:56:20Z | OWNER | For example here:

3 corresponds to "Human Related: Other" - it would be neat to display this in this area of the page somehow. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1696/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1558644003 | I_kwDOBm6k_c5c5wUj | 2006 | Teach `datasette publish` to pin to `datasette<1.0` in a 0.x release | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 2 | 2023-01-26T19:17:40Z | 2023-01-26T19:20:53Z | OWNER | I just realized that when I ship Datasette 1.0 there may be automated deployments out there which could deploy the 1.0 version by accident, potentially breaking any customizations that aren't compatible with the 1.0 changes. I can hopefully help avoid that by shipping one last entry in the |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2006/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1551113681 | I_kwDOBm6k_c5cdB3R | 1998 | `datasette --version` should also show the SQLite version | simonw 9599 | open | 0 | 2 | 2023-01-20T16:11:30Z | 2023-01-20T18:19:06Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1998/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 1524867951 | I_kwDOBm6k_c5a46Nv | 1980 | "Cannot sort table by id" when sortable_columns is used | simonw 9599 | open | 0 | 2 | 2023-01-09T03:21:33Z | 2023-01-09T03:23:53Z | OWNER | I had an instance with this in

It sent me to

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1980/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 855296937 | MDU6SXNzdWU4NTUyOTY5Mzc= | 1295 | Errors should have links to further information | simonw 9599 | open | 0 | 2 | 2021-04-11T12:39:12Z | 2022-12-14T23:28:49Z | OWNER | Inspired by this tweet: https://twitter.com/willmcgugan/status/1381186384510255104

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1295/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1200649124 | I_kwDOBm6k_c5HkHOk | 1708 | Datasette 1.0 alpha upcoming release notes | simonw 9599 | open | 0 | Datasette 1.0a-next 8755003 | 2 | 2022-04-11T22:57:12Z | 2022-12-13T05:29:06Z | OWNER | I'm going to try writing the release notes first, to see if that helps unblock me. ⚠️ Any release notes in this issue are a draft, and should not be treated as the real thing ⚠️ |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1708/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1483250004 | I_kwDOBm6k_c5YaJlU | 1936 | Fix /db/table/-/upsert in the API explorer | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 2 | 2022-12-08T00:59:34Z | 2022-12-08T01:36:02Z | OWNER | Split from: - #1931 - #1878 This is a bit tricky because the code needs to figure out what the primary keys are for an item, and whether or not |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1936/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1455928469 | I_kwDOBm6k_c5Wx7SV | 1903 | Refactor all error classes into a datasette.exceptions module | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 2 | 2022-11-18T22:44:45Z | 2022-11-20T22:35:01Z | OWNER | While working on this issue: - #1896 I realized that Datasette has error classes scattered around a fair bit, including some in the I should clean these up. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1903/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1405196044 | PR_kwDOCGYnMM5AmYzy | 499 | feat: recreate fts triggers after table transform | chapmanjacobd 7908073 | open | 0 | 2 | 2022-10-11T20:35:39Z | 2022-10-26T17:54:51Z | CONTRIBUTOR | simonw/sqlite-utils/pulls/499 | https://github.com/simonw/sqlite-utils/pull/498 :books: Documentation preview :books:: https://sqlite-utils--499.org.readthedocs.build/en/499/ alternatively, |

sqlite-utils 140912432 | pull | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/499/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 923270900 | MDExOlB1bGxSZXF1ZXN0NjcyMDUzODEx | 65 | basic support for events | khimaros 231498 | open | 0 | 2 | 2021-06-17T00:51:30Z | 2022-10-03T22:35:03Z | FIRST_TIME_CONTRIBUTOR | dogsheep/github-to-sqlite/pulls/65 | a quick first pass at implementing the feature requested in https://github.com/dogsheep/github-to-sqlite/issues/64 testing instructions:

if the specified user is the authenticated user, it will also include private events. caveat: pagination appears to be broken (i don't see |

github-to-sqlite 207052882 | pull | {

"url": "https://api.github.com/repos/dogsheep/github-to-sqlite/issues/65/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 1122427321 | I_kwDOBm6k_c5C5uG5 | 1624 | Index page `/` has no CORS headers | simonw 9599 | open | 0 | 2 | 2022-02-02T21:56:10Z | 2022-09-28T16:54:22Z | OWNER | Compare the following: ``` % curl -I 'https://latest.datasette.io/fixtures' HTTP/1.1 200 OK link: https://latest.datasette.io/fixtures.json; rel="alternate"; type="application/json+datasette" cache-control: max-age=5 referrer-policy: no-referrer access-control-allow-origin: * access-control-allow-headers: Authorization access-control-expose-headers: Link content-type: text/html; charset=utf-8 x-databases: _memory, _internal, fixtures, extra_database Date: Wed, 02 Feb 2022 21:55:49 GMT Server: Google Frontend Transfer-Encoding: chunked % curl -I 'https://latest.datasette.io/' |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1624/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1339444565 | I_kwDOBm6k_c5P1k1V | 1783 | Better guidance as to what to do after you've installed Datasette | simonw 9599 | open | 0 | 2 | 2022-08-15T20:11:06Z | 2022-08-15T20:14:01Z | OWNER | Feedback from Discord:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1783/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1224112817 | I_kwDOCGYnMM5I9nqx | 430 | Document how to use `PRAGMA temp_store` to avoid errors when running VACUUM against huge databases | rayvoelker 9308268 | open | 0 | 2 | 2022-05-03T13:33:58Z | 2022-06-14T23:26:37Z | NONE | I'm trying to figure out a way to get the Here's the bit that's causing the error, and the resulting error output: ```python combine these columns into 1 table "bib_properties" :best_titlebib_level_codemat_typematerial_codebest_authordb["circ_trans"].extract( ["best_title", "bib_level_code", "mat_type", "material_code", "best_author"], table="bib_properties", fk_column="bib_properties_id" ) db["circ_trans"].extract( ["call_number"], table="call_number", fk_column="call_number_id", rename={"call_number": "value"} ) ``` ```pythonOperationalError Traceback (most recent call last) Input In [17], in <cell line: 7>() 1 # combine these columns into 1 table "bib_properties" : 2 # best_title 3 # bib_level_code 4 # mat_type 5 # material_code 6 # best_author ----> 7 db["circ_trans"].extract( 8 ["best_title", "bib_level_code", "mat_type", "material_code", "best_author"], 9 table="bib_properties", 10 fk_column="bib_properties_id" 11 ) 13 db["circ_trans"].extract( 14 ["call_number"], 15 table="call_number", 16 fk_column="call_number_id", 17 rename={"call_number": "value"} 18 ) File ~/jupyter/venv/lib/python3.10/site-packages/sqlite_utils/db.py:1764, in Table.extract(self, columns, table, fk_column, rename) 1761 column_order.append(c.name) 1763 # Drop the unnecessary columns and rename lookup column -> 1764 self.transform( 1765 drop=set(columns), 1766 rename={magic_lookup_column: fk_column}, 1767 column_order=column_order, 1768 ) 1770 # And add the foreign key constraint 1771 self.add_foreign_key(fk_column, table, "id") File ~/jupyter/venv/lib/python3.10/site-packages/sqlite_utils/db.py:1526, in Table.transform(self, types, rename, drop, pk, not_null, defaults, drop_foreign_keys, column_order) 1524 with self.db.conn: 1525 for sql in sqls: -> 1526 self.db.execute(sql) 1527 # Run the foreign_key_check before we commit 1528 if pragma_foreign_keys_was_on: File ~/jupyter/venv/lib/python3.10/site-packages/sqlite_utils/db.py:465, in Database.execute(self, sql, parameters) 463 return self.conn.execute(sql, parameters) 464 else: --> 465 return self.conn.execute(sql) OperationalError: database or disk is full ``` This database is about 17G in total size, so I'm assuming the error is coming from the vacuum ... where i'm assuming it's maybe trying to do the temp storage in a location that doesn't have sufficient room. The disk space is more than ample on the host in question (1.8T is free in the directory where the sqlite db resides) The I'm trying to think if there's a way to set the ```python SET the temp file store to be a file ...print(db.execute('PRAGMA temp_store').fetchall()) print(db.execute('PRAGMA temp_store=FILE').fetchall()) print(db.execute('PRAGMA temp_store').fetchall()) the users home directory ...print(db.execute("PRAGMA temp_store_directory='/home/plchuser/'").fetchall()) print(db.execute("PRAGMA sqlite3_temp_directory='/home/plchuser/'").fetchall()) print(db.execute("PRAGMA temp_store_directory").fetchall())

print(db.execute("PRAGMA sqlite3_temp_directory").fetchall())

Here's the docs on the Temporary File Storage Locations https://www.sqlite.org/tempfiles.html |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/430/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1221849746 | I_kwDOBm6k_c5I0_KS | 1732 | Custom page variables aren't decoded | tannewt 52649 | open | 0 | 2 | 2022-04-30T14:55:46Z | 2022-05-03T01:50:45Z | NONE | I have a page Datasette should unescape the url component before passing them into the template. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1732/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1129052172 | I_kwDOBm6k_c5DS_gM | 1633 | base_url or prefix does not work with _exact match | henrikek 6613091 | open | 0 | 2 | 2022-02-09T21:45:07Z | 2022-04-28T09:12:56Z | NONE | When i hit "Apply" button to search with "_exact" for a column syntax the URL prefix is removed from the url.

And the result is:

If I add the marked row to url_builder.py it seams to work:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1633/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1182227211 | I_kwDOBm6k_c5Gd1sL | 1692 | [plugins][feature request]: Support additional script tag attributes when loading custom JS | hydrosquall 9020979 | open | 0 | 2 | 2022-03-27T01:16:03Z | 2022-03-30T06:14:51Z | CONTRIBUTOR | Motivation

To be able to support legacy browsers without slowing down users with modern browsers, I would like to be able to set additional HTML attributes on the tag fallback script, ```html <script type="module" src="/index.my-es-module-bundle.js"></script> <script src="/index.my-legacy-fallback-bundle.js" nomodule="" defer></script>``` ProposalTo achieve this, I propose additional optional properties to the API accepted by the Under this API, I'd write something like this to get the above HTML rendered in Datasette.

Resources

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1692/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1185868354 | I_kwDOBm6k_c5GrupC | 1695 | Option to un-filter facet not shown for `?col__exact=value` | simonw 9599 | open | 0 | 2 | 2022-03-30T04:44:02Z | 2022-03-30T04:46:18Z | OWNER | Spotted this on a page with

With

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1695/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1182141761 | I_kwDOBm6k_c5Gdg1B | 1690 | Idea: `datasette.set_actor_cookie(response, actor)` | simonw 9599 | open | 0 | 2 | 2022-03-26T22:41:52Z | 2022-03-26T22:43:00Z | OWNER | I just wrote this code in a plugin and it felt like it could benefit from an abstraction: https://github.com/simonw/datasette-auth0/blob/152e6eb21e96e9b73bd9c205f9749a1297d0ef0b/datasette_auth0/init.py#L79-L92

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1690/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1177101697 | I_kwDOBm6k_c5GKSWB | 1681 | Potential bug in numeric handling where_clause for filters | simonw 9599 | open | 0 | 2 | 2022-03-22T17:43:50Z | 2022-03-22T17:49:09Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/1671#issuecomment-1075432283 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1681/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 910088936 | MDU6SXNzdWU5MTAwODg5MzY= | 1355 | datasette --get should efficiently handle streaming CSV | simonw 9599 | open | 0 | 2 | 2021-06-03T04:40:40Z | 2022-03-20T22:38:53Z | OWNER | It would be great if you could use Current implementation looks like it loads the entire result into memory first: https://github.com/simonw/datasette/blob/f78ebdc04537a6102316d6dbbf6c887565806078/datasette/cli.py#L546-L552 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1355/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 626593402 | MDU6SXNzdWU2MjY1OTM0MDI= | 780 | Internals documentation for datasette.metadata() method | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 2 | 2020-05-28T15:14:22Z | 2022-03-15T20:50:34Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/780/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1161937073 | I_kwDOBm6k_c5FQcCx | 1653 | Mechanism to default a table to sorting by multiple columns | simonw 9599 | open | 0 | 2 | 2022-03-07T21:20:11Z | 2022-03-07T21:23:39Z | OWNER | Discussed in https://github.com/simonw/datasette/discussions/1652

<sup>Originally posted by **zaneselvans** March 7, 2022</sup>

It's easy to tell datasette to sort tables using a single column, as [described in the docs](https://docs.datasette.io/en/stable/metadata.html#setting-a-default-sort-order):

```yaml

databases:

ferc1:

tables:

f1_edcfu_epda:

sort: created_time

```

But is there some way to tell it to sort using a composite key, like you would in an `ORDER BY` clause instead? For example, the way it's being done **[in this query](https://data.catalyst.coop/ferc1?sql=select%0D%0A++rowid%2C%0D%0A++respondent_id%2C%0D%0A++report_year%2C%0D%0A++spplmnt_num%2C%0D%0A++row_number%2C%0D%0A++row_seq%2C%0D%0A++row_prvlg%2C%0D%0A++acct_num%2C%0D%0A++depr_plnt_base%2C%0D%0A++est_avg_srvce_lf%2C%0D%0A++net_salvage%2C%0D%0A++apply_depr_rate%2C%0D%0A++mrtlty_crv_typ%2C%0D%0A++avg_remaining_lf%2C%0D%0A++report_prd%0D%0Afrom%0D%0A++f1_edcfu_epda%0D%0Awhere%0D%0A++respondent_id+%3D+210%0D%0A++AND+report_year+%3D+2020%0D%0Aorder+by%0D%0A++report_year%2C+report_prd%2C+respondent_id%2C+spplmnt_num%2C+row_number%0D%0Alimit%0D%0A++1000)** on our Datasette?

```sql

SELECT

respondent_id,

report_year,

spplmnt_num,

row_number,

row_seq,

row_prvlg,

acct_num,

depr_plnt_base,

est_avg_srvce_lf,

net_salvage,

apply_depr_rate,

mrtlty_crv_typ,

avg_remaining_lf,

report_prd

FROM

f1_edcfu_epda

WHERE

respondent_id = 210

AND report_year = 2020

ORDER BY

report_year, report_prd, respondent_id, spplmnt_num, row_number

LIMIT

1000

```

The problem here is that by default it's using `rowid` (the SQLite assigned autoincrementing integer key) to order the records, but the table **should** have a natural composite primary key, but the original database that this data is being migrated from doesn't enforce unique primary keys, so there are dupes, and we don't want to drop those rows, and the records are somehow getting jumbled in the database (the `rowid` ordering isn't lined up with the expected ordering based on the composite primary key, though it's close) and this jumbling is confusing to users that expect to see the data ordered based on the natural primary key.

I've tried setting the `sort` metadata parameter to a list of column names, a tuple of column names, a quoted string of comma-separated column names, a quoted string of a tuple of column names...

```yaml

databases:

ferc1:

tables:

f1_edcfu_epda:

sort: "(report_year, report_prd, respondent_id, spplmnt_num, row_number)"

```

and they all give me server errors like:

```

Cannot sort table by (report_year, report_prd, respondent_id, spplmnt_num, row_number)

``` |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1653/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1148725876 | I_kwDOBm6k_c5EeCp0 | 1640 | Support static assets where file length may change, e.g. logs | broccolihighkicks 57859326 | open | 0 | 2 | 2022-02-24T00:34:42Z | 2022-03-05T01:19:25Z | NONE | This is a bit of an oxymoron. I am serving a log.txt file for a background process using the Datasette --static CLI. This is useful as I can observe a background process from the web UI to see any errors that occur (instead of spelunking the logs via docker exec/ssh etc). I get this error, which I think is because Datasette assumes that the size of the content does not change (but appending new log lines means the content length changes).

Thanks, I am finding Datasette very useful. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1640/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 712202333 | MDU6SXNzdWU3MTIyMDIzMzM= | 982 | SQL editor should allow execution of write queries, if you have permission | simonw 9599 | open | 0 | 2 | 2020-09-30T19:04:35Z | 2022-01-13T22:21:29Z | OWNER | The UI concept: if you have write permission then the existing SQL editor gets an "execute write" checkbox underneath it. JavaScript can spot if you appear to be trying to execute an UPDATE or INSERT or DELETE query and check that checkbox for you. If you link to a query page with a non-SELECT then that query will be displayed in the box ready for you to POST submit it. The page will also then get "cannot be embedded" headers to protect against clickjacking. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/982/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1077628073 | I_kwDOBm6k_c5AO0yp | 1550 | Research option for returning all rows from arbitrary query | simonw 9599 | open | 0 | 2 | 2021-12-11T19:31:11Z | 2021-12-11T23:43:24Z | OWNER | Inspired by thinking about #1549 - returning ALL rows from an arbitrary query is a lot easier if you just run that query and keep iterating over the cursor. I've avoided doing that in the past because it could tie up a connection for a long time - but in private instances this wouldn't be such a problem. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1550/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 950664971 | MDU6SXNzdWU5NTA2NjQ5NzE= | 1401 | unordered list is not rendering bullet points in description_html on database page | fgregg 536941 | open | 0 | 2 | 2021-07-22T13:24:18Z | 2021-10-23T13:09:10Z | CONTRIBUTOR | Thanks for this tremendous package, @simonw! In the However, on the database page on the deployed site, it is not rendering this as a bulleted list.

Page here: https://labordata-warehouse.herokuapp.com/nlrb-9da4ae5 The documentation gives an example of using an unordered list in a |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1401/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 602533481 | MDU6SXNzdWU2MDI1MzM0ODE= | 3 | Import EXIF data into SQLite - lens used, ISO, aperture etc | simonw 9599 | open | 0 | Apple Photos online and securely browsable 5324096 | 2 | 2020-04-18T19:24:31Z | 2021-10-05T12:38:24Z | MEMBER | dogsheep-photos 256834907 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-photos/issues/3/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 999902754 | I_kwDOBm6k_c47mU4i | 1473 | base logo link visits `undefined` rather than href url | mroswell 192568 | open | 0 | 2 | 2021-09-18T04:17:04Z | 2021-09-19T00:45:32Z | CONTRIBUTOR | I have two connected sites: http://www.SaferOrToxic.org (a Hugo website) and: http://disinfectants.SaferOrToxic.org/disinfectants/listN (a datasette table page) The latter is linked as "The List" in the former's menu. (I'd love a prettier URL, but that's what I've got.) On: http://disinfectants.SaferOrToxic.org/disinfectants/listN ... all the other menu links should point back to: https://www.SaferOrToxic.org And they do! But the logo, for some reason--though it has an href pointing to: https://www.SaferOrToxic.org Keeps going to this instead: https://disinfectants.saferortoxic.org/disinfectants/undefined What is causing that? How can I fix it? In #1284 back in March, I was doing battle with the index.html template, in a still unresolved issue. (I wanted only a single table page at the root.) But I thought, well, if I can't resolve that, at least I could just point the main website to the datasette page ("The List,") and then have the List point back to the home website. The menu hrefs to https://www.SaferOrToxic.org work just fine, exactly as they should, from the datasette page. Even the Home link works properly. But the logo link keeps rewriting to: https://disinfectants.saferortoxic.org/disinfectants/undefined This is the HTML:

Is this somehow related to cloudflare? Or something in the datasette code? I'm starting to think it's a cloudflare issue.

Can I at least rule out it being a datasette issue? My repository is here: https://github.com/mroswell/list-N (BTW, I couldn't figure out how to reference a local image, either, on the datasette side, which is why I'm using the image from the www home page.) |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1473/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 602585497 | MDU6SXNzdWU2MDI1ODU0OTc= | 7 | Integrate image content hashing | simonw 9599 | open | 0 | 2 | 2020-04-19T00:36:58Z | 2021-08-26T02:01:01Z | MEMBER | To spot duplicate images (where the file content differs such that the sha256 is no longer a match) it would be useful to calculate and store perceptual hashes of some sort. |

dogsheep-photos 256834907 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-photos/issues/7/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} |

||||||||

| 975166271 | MDU6SXNzdWU5NzUxNjYyNzE= | 20 | Add index on workout_points.date | simonw 9599 | open | 0 | 2 | 2021-08-20T01:08:04Z | 2021-08-20T01:12:48Z | MEMBER | Sorting that by date makes sense for seeing most recent points, and my DB has 2.5m points in so it's an expensive sort! |

healthkit-to-sqlite 197882382 | issue | {

"url": "https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/20/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 974067156 | MDU6SXNzdWU5NzQwNjcxNTY= | 318 | Research: handle gzipped CSV directly | simonw 9599 | open | 0 | 2 | 2021-08-18T21:23:04Z | 2021-08-18T21:25:30Z | OWNER | Would it be worthwhile for the Maybe add |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/318/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 969548935 | MDU6SXNzdWU5Njk1NDg5MzU= | 1429 | UI for setting `?_size=max` on table page | simonw 9599 | open | 0 | 2 | 2021-08-12T20:52:09Z | 2021-08-13T04:37:41Z | OWNER | It defaults to 100 per page, but you can increase that to 1000 per page using But... that's only available to people who know how to hack URLs. Solution: add a link that sets that option to the pagination block at the bottom of the table:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1429/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 961008507 | MDU6SXNzdWU5NjEwMDg1MDc= | 308 | Add an interactive tutorial as a Jupyter notebook | simonw 9599 | open | 0 | 2 | 2021-08-04T20:34:22Z | 2021-08-04T21:30:59Z | OWNER | Can show people how to open this up in Binder. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/308/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 957302085 | MDU6SXNzdWU5NTczMDIwODU= | 1408 | Review places in codebase that use os.chdir(), in particularly relating to tests | simonw 9599 | open | 0 | 2 | 2021-07-31T18:57:06Z | 2021-07-31T19:00:32Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/1406#issuecomment-890390198 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1408/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 951185411 | MDU6SXNzdWU5NTExODU0MTE= | 1402 | feature request: social meta tags | fgregg 536941 | open | 0 | 2 | 2021-07-23T01:57:23Z | 2021-07-26T19:31:41Z | CONTRIBUTOR | it would be very nice if the twitter, slack, and other social media could make rich cards when people post a link to a datasette instance |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1402/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 792652391 | MDU6SXNzdWU3OTI2NTIzOTE= | 1199 | Experiment with PRAGMA mmap_size=N | simonw 9599 | open | 0 | 2 | 2021-01-23T21:24:09Z | 2021-07-17T17:39:17Z | OWNER | https://sqlite.org/mmap.html - SQLite supports memory-mapped I/O but it's disabled by default. The It would be very interesting to understand the impact this could have on Datasette performance for various different shapes of data. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1199/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 736365306 | MDU6SXNzdWU3MzYzNjUzMDY= | 1083 | Advanced CSV export for arbitrary queries | simonw 9599 | open | 0 | 2 | 2020-11-04T19:23:05Z | 2021-06-17T18:12:31Z | OWNER | There's no link to download the CSV file - the table page has that as an advanced export option, but this is missing from the query page. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1083/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 913809802 | MDU6SXNzdWU5MTM4MDk4MDI= | 1366 | Get rid of this `restore_working_directory` hack entirely | simonw 9599 | open | 0 | 2 | 2021-06-07T18:01:21Z | 2021-06-07T18:03:03Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/1361#issuecomment-855308811 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1366/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 904598267 | MDExOlB1bGxSZXF1ZXN0NjU1NzQxNDI4 | 1348 | DRAFT: add test and scan for docker images | blairdrummond 10801138 | open | 0 | 2 | 2021-05-28T03:02:12Z | 2021-05-28T03:06:16Z | CONTRIBUTOR | simonw/datasette/pulls/1348 | NOTE: I don't think this PR is ready, since the arm/v6 and arm/v7 images are failing pytest due to missing dependencies (gcc and friends). But it's pretty close. Closes https://github.com/simonw/datasette/issues/1344 . Using a build-matrix for the platforms and this test, we test all the platforms in parallel. I also threw in container scanning. Switch

|

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1348/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 842862708 | MDU6SXNzdWU4NDI4NjI3MDg= | 1280 | Ability to run CI against multiple SQLite versions | simonw 9599 | open | 0 | 2 | 2021-03-28T23:54:50Z | 2021-05-10T19:07:46Z | OWNER | Issue #1276 happened because I didn't run tests against a SQLite version prior to 3.16.0 (released 2017-01-02). Glitch is a deployment target and runs SQLite 3.11.0 from 2016-02-15. If CI ran against that version of SQLite this bug could have been avoided. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1280/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 281110295 | MDU6SXNzdWUyODExMTAyOTU= | 173 | I18n and L10n support | janimo 50138 | open | 0 | 2 | 2017-12-11T17:49:58Z | 2021-04-26T12:10:01Z | NONE | It would be less geeky and more user friendly if the display strings in the filter menu and possibly other parts could be localized. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/173/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 791237799 | MDU6SXNzdWU3OTEyMzc3OTk= | 1196 | Access Denied Error in Windows | QAInsights 2826376 | open | 0 | 2 | 2021-01-21T15:40:40Z | 2021-04-14T19:28:38Z | NONE | I am trying to publish a db to vercel. But while issuing the below command throwing I am using PyCharm and Python 3.9. I have reinstalled both and launched PyCharm as Admin in Windows 10. But still the issue persists. Issued command PS: localhost is working fine. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1196/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 826700095 | MDU6SXNzdWU4MjY3MDAwOTU= | 1255 | Facets timing out but work when filtering | robroc 1219001 | open | 0 | 2 | 2021-03-09T22:01:39Z | 2021-04-02T20:50:08Z | NONE | System info: Windows 10 Datasette 0.55 installed via pip Python 3.8.5 in a conda environment I'm getting the message |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1255/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 617323873 | MDU6SXNzdWU2MTczMjM4NzM= | 766 | Enable wildcard-searches by default | clausjuhl 2181410 | open | 0 | 2 | 2020-05-13T10:14:48Z | 2021-03-05T16:35:21Z | NONE | Hi Simon. It seems that datasette currently has wildcard-searches disabled by default (along with the boolean search-options, NEAR-queries and more, and despite the docs). If I try out the search-url provided in the docs (https://fara.datasettes.com/fara/FARA_All_ShortForms?_search=manafort), it does not handle wildcard-searches, and I'm unable to make it work on my datasette-instance. I would argue that wildcard-searches is such a standard query, that it should be enabled by default. Requiring "_searchmode=raw" when using prefix-searches seems unnecessary. Plus: What happens to non-ascii searches when using "_searchmode=raw"? Is the "escape_fts"-function from datasette.utils ignored? Thanks! /Claus |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/766/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 792890765 | MDU6SXNzdWU3OTI4OTA3NjU= | 1200 | ?_size=10 option for the arbitrary query page would be useful | simonw 9599 | open | 0 | 2 | 2021-01-24T20:55:35Z | 2021-02-11T03:13:59Z | OWNER | https://latest.datasette.io/fixtures?sql=select+*+from+compound_three_primary_keys&_size=10 - Would also be good if it persisted in a hidden form field. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1200/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 803929694 | MDU6SXNzdWU4MDM5Mjk2OTQ= | 1219 | Try profiling Datasette using scalene | simonw 9599 | open | 0 | 2 | 2021-02-08T20:37:06Z | 2021-02-08T22:13:00Z | OWNER | https://github.com/emeryberger/scalene looks like an interesting profiling tool. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1219/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 801780625 | MDU6SXNzdWU4MDE3ODA2MjU= | 9 | SSL Error | jfeiwell 12669260 | open | 0 | 2 | 2021-02-05T02:12:56Z | 2021-02-07T18:45:04Z | NONE | Here's the error I get when running

Does this require python 3? |

pocket-to-sqlite 213286752 | issue | {

"url": "https://api.github.com/repos/dogsheep/pocket-to-sqlite/issues/9/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 782708469 | MDU6SXNzdWU3ODI3MDg0Njk= | 1183 | Take advantage of sqlite-utils cached table counts, if available | simonw 9599 | open | 0 | 2 | 2021-01-09T23:51:48Z | 2021-01-12T02:42:08Z | OWNER | sqlite-utils 3.2 now has a mechanism for creating a https://sqlite-utils.datasette.io/en/stable/python-api.html#cached-table-counts-using-triggers |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1183/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 780767542 | MDU6SXNzdWU3ODA3Njc1NDI= | 1180 | Lazily evaluated arguments for call_with_supported_arguments | simonw 9599 | open | 0 | 2 | 2021-01-06T18:43:34Z | 2021-01-07T18:56:24Z | OWNER | While building https://github.com/simonw/datasette-export-notebook I thought it would be nice to be able to show a count of exported records on the page "This will stream 10,422 records to your notebook". None of the documented arguments on https://docs.datasette.io/en/0.53/plugin_hooks.html#register-output-renderer-datasette expose the count. The closest is So, idea: if your defined render function takes a To implement this I would need to teach the |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1180/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

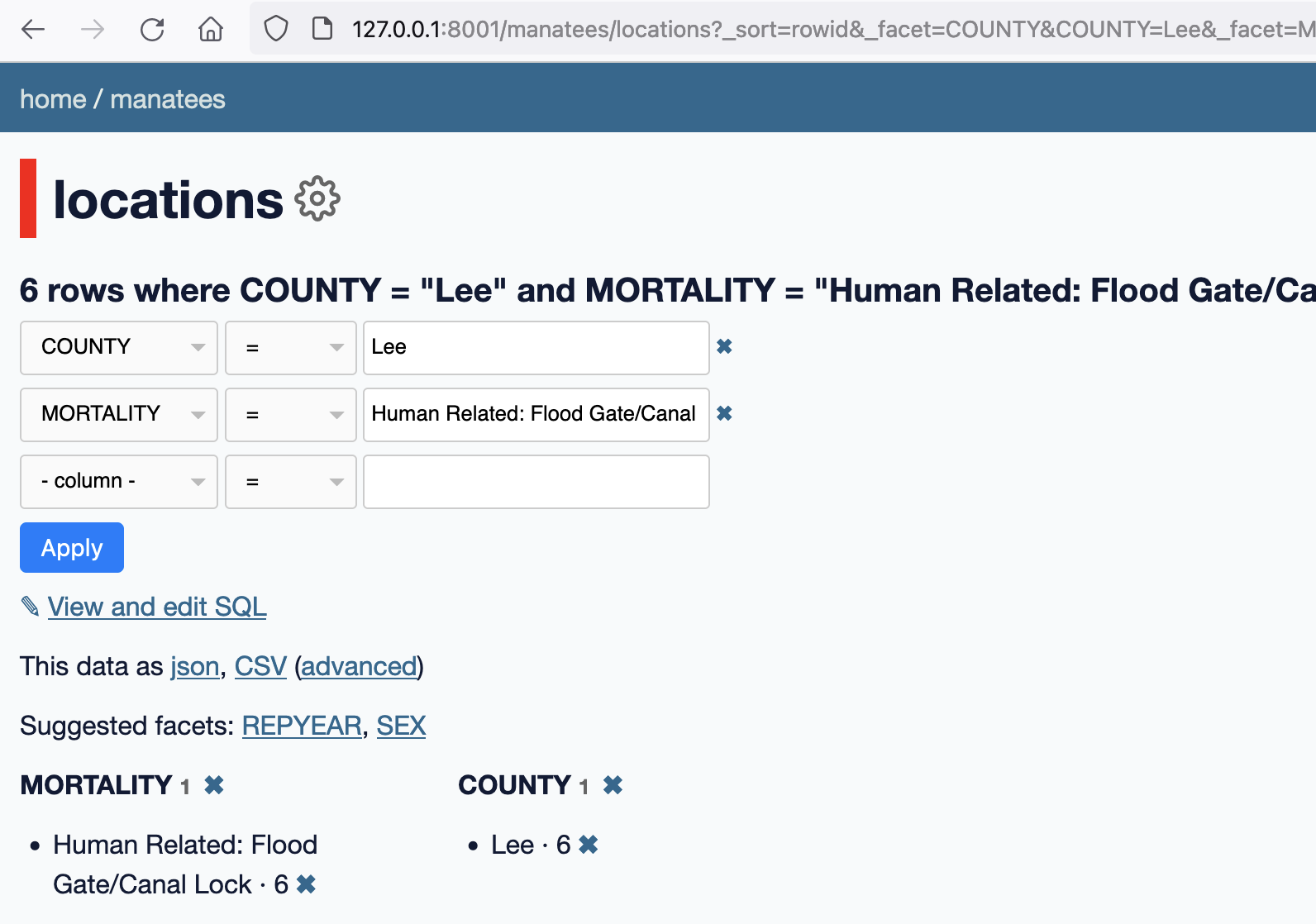

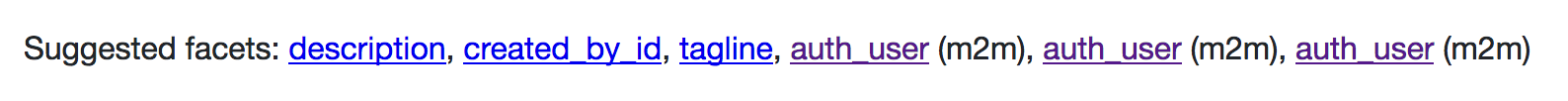

| 450032134 | MDU6SXNzdWU0NTAwMzIxMzQ= | 495 | facet_m2m gets confused by multiple relationships | simonw 9599 | open | 0 | 2 | 2019-05-29T21:37:28Z | 2020-12-17T05:08:22Z | OWNER | I got this for a database I was playing with:

I think this is because of these three tables:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/495/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 769376447 | MDU6SXNzdWU3NjkzNzY0NDc= | 2 | killed by oomkiller on large location-history | khimaros 231498 | open | 0 | 2 | 2020-12-17T00:32:24Z | 2020-12-17T00:48:32Z | NONE | memory seems to grow unbounded and is oom-killed after about 20GB memory usage. this is happening while loading a ~1GB uncompressed location history. |

google-takeout-to-sqlite 206649770 | issue | {

"url": "https://api.github.com/repos/dogsheep/google-takeout-to-sqlite/issues/2/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 628572716 | MDU6SXNzdWU2Mjg1NzI3MTY= | 791 | Tutorial: building a something-interesting with writable canned queries | simonw 9599 | open | 0 | 2 | 2020-06-01T16:32:05Z | 2020-10-10T23:34:42Z | OWNER | Initial idea: TODO list, as a tutorial for #698 writable canned queries. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/791/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 698791218 | MDU6SXNzdWU2OTg3OTEyMTg= | 50 | favorites --stop_after=N stops after min(N, 200) | mikepqr 370930 | open | 0 | 2 | 2020-09-11T03:38:14Z | 2020-09-13T05:11:14Z | CONTRIBUTOR | For any number greater than 200, |

twitter-to-sqlite 206156866 | issue | {

"url": "https://api.github.com/repos/dogsheep/twitter-to-sqlite/issues/50/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 649429772 | MDU6SXNzdWU2NDk0Mjk3NzI= | 886 | Reconsider how _actor_X magic parameter deals with missing values | simonw 9599 | open | 0 | 2 | 2020-07-02T00:00:38Z | 2020-09-11T21:35:26Z | OWNER | I had to build a custom @hookimpl

def register_magic_parameters():

return [

("actorornull", actorornull),

]

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/886/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 696908389 | MDU6SXNzdWU2OTY5MDgzODk= | 961 | Verification checks for metadata.json on startup | simonw 9599 | open | 0 | 2 | 2020-09-09T15:21:53Z | 2020-09-09T15:24:31Z | OWNER | I lost a bunch of time yesterday trying to figure out why a Datasette instance wasn't starting up - it turned out it was because I had a Catching these on startup would be good. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/961/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 695556681 | MDU6SXNzdWU2OTU1NTY2ODE= | 19 | Figure out incremental re-indexing | simonw 9599 | open | 0 | 2 | 2020-09-08T05:23:31Z | 2020-09-08T05:27:07Z | MEMBER | As tables get bigger reindexing everything on a schedule (essentially recreating the entire index from scratch) will start to become a performance bottleneck. |

dogsheep-beta 197431109 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-beta/issues/19/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 695553522 | MDU6SXNzdWU2OTU1NTM1MjI= | 18 | Deleted records stay in the search index | simonw 9599 | open | 0 | 2 | 2020-09-08T05:14:23Z | 2020-09-08T05:15:51Z | MEMBER | That should probably do |

dogsheep-beta 197431109 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-beta/issues/18/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 695441530 | MDU6SXNzdWU2OTU0NDE1MzA= | 154 | OperationalError: cannot change into wal mode from within a transaction | simonw 9599 | open | 0 | 2 | 2020-09-07T23:42:44Z | 2020-09-07T23:47:10Z | OWNER | I'm getting this error when running:

I'm worried that maybe that's because of this new code from #152: |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/154/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 659873662 | MDU6SXNzdWU2NTk4NzM2NjI= | 898 | datasette.utils.testing module | simonw 9599 | open | 0 | 2 | 2020-07-18T03:53:24Z | 2020-07-18T03:57:46Z | OWNER | The unit tests for plugins could benefit from reusing code from Datasette's own testing fixtures, e.g.:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/898/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 628156527 | MDU6SXNzdWU2MjgxNTY1Mjc= | 789 | Mechanism for enabling pluggy tracing | simonw 9599 | open | 0 | 2 | 2020-06-01T05:10:14Z | 2020-06-01T05:11:03Z | OWNER | Could be useful for debugging plugins: https://pluggy.readthedocs.io/en/latest/#call-tracing I tried this out by adding these two lines in Added these:pm.trace.root.setwriter(print)

pm.enable_tracing()

finish actor_from_request --> [] [hook] extra_body_script [hook] template: show_json.html database: None table: None view_name: json_data datasette: <datasette.app.Datasette object at 0x106277ad0> finish extra_body_script --> [] [hook] extra_template_vars [hook] template: show_json.html database: None table: None view_name: json_data request: <datasette.utils.asgi.Request object at 0x1065504d0> datasette: <datasette.app.Datasette object at 0x106277ad0> finish extra_template_vars --> [] [hook] extra_css_urls [hook] template: show_json.html database: None table: None datasette: <datasette.app.Datasette object at 0x106277ad0> finish extra_css_urls --> [] [hook] extra_js_urls [hook] template: show_json.html database: None table: None datasette: <datasette.app.Datasette object at 0x106277ad0> finish extra_js_urls --> [] [hook] INFO: 127.0.0.1:52724 - "GET /-/actor HTTP/1.1" 200 OK actor_from_request [hook] datasette: <datasette.app.Datasette object at 0x106277ad0> request: <datasette.utils.asgi.Request object at 0x1065500d0> finish actor_from_request --> [] [hook] ``` |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/789/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 374953006 | MDU6SXNzdWUzNzQ5NTMwMDY= | 369 | Interface should show same JSON shape options for custom SQL queries | gfrmin 416374 | open | 0 | Datasette 1.0 3268330 | 2 | 2018-10-29T10:39:15Z | 2020-05-30T17:24:06Z | CONTRIBUTOR | At the moment the page returning a custom SQL query shows the JSON and CSV APIs, but not the multiple JSON shapes. However, adding the |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/369/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 607888367 | MDU6SXNzdWU2MDc4ODgzNjc= | 13 | Also upload movie files | simonw 9599 | open | 0 | 2 | 2020-04-27T22:11:25Z | 2020-04-28T00:39:45Z | MEMBER | The Need to cover movies taken by my phone and DSLR too. |

dogsheep-photos 256834907 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-photos/issues/13/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 539204432 | MDU6SXNzdWU1MzkyMDQ0MzI= | 70 | Implement ON DELETE and ON UPDATE actions for foreign keys | LucasElArruda 26292069 | open | 0 | 2 | 2019-12-17T17:19:10Z | 2020-02-27T04:18:53Z | NONE | Hi! I did not find any mention on the library about ON DELETE and ON UPDATE actions for foreign keys. Are those expected to be implemented? If not, it would be a nice thing to include! |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/70/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 550293770 | MDU6SXNzdWU1NTAyOTM3NzA= | 658 | How do I use the app.css as style sheet? | null92 49656826 | open | 0 | 2 | 2020-01-15T16:27:57Z | 2020-02-07T00:29:50Z | NONE | Simon, I'm trying to use the app.css (in static folder) as style sheet but the datasette on Heroku simply ignore it! I read everything about customization here and on readthedocs but still can't. Is this possible? Thanks! |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/658/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 464987783 | MDExOlB1bGxSZXF1ZXN0Mjk1MTI3MjEz | 546 | Facet by delimiter | simonw 9599 | open | 0 | 2 | 2019-07-07T20:06:05Z | 2019-11-18T23:46:01Z | OWNER | simonw/datasette/pulls/546 | Refs #510 |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/546/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 510076368 | MDU6SXNzdWU1MTAwNzYzNjg= | 605 | Support queries at the table level | bsilverm 12617395 | open | 0 | 2 | 2019-10-21T15:58:30Z | 2019-10-30T18:55:37Z | NONE | Per the issue described in issue #588, it was determined queries are not supported at the table level. Per my last comment in the issue, I'd like to request support for this as it would help eliminate errors in the event certain tables are not present in the database. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/605/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 465003070 | MDU6SXNzdWU0NjUwMDMwNzA= | 551 | Ship many-to-many faceting support (and facet-by-delimiter) | simonw 9599 | open | 0 | 2 | 2019-07-07T23:11:45Z | 2019-07-08T15:45:23Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/551/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 275755475 | MDU6SXNzdWUyNzU3NTU0NzU= | 140 | Heatmap visualization plugin | simonw 9599 | open | 0 | 2 | 2017-11-21T15:34:23Z | 2019-05-13T18:33:51Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/140/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 288438570 | MDU6SXNzdWUyODg0Mzg1NzA= | 179 | More metadata options for template authors | simonw 9599 | open | 0 | 2 | 2018-01-14T20:51:04Z | 2019-05-13T18:33:33Z | OWNER | See this thread on Twitter: https://twitter.com/simonw/status/952637152797458432 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/179/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 411257981 | MDU6SXNzdWU0MTEyNTc5ODE= | 412 | Linked Data(sette) | sfkeller 43340 | open | 0 | 2 | 2019-02-18T00:38:14Z | 2019-03-19T10:09:46Z | NONE | I've a radical feature idea (possible first as an extension in order to experiment?): I'd like to link to a remote table from a remote database, e.g. with a function "linked_datasette()". So one could do following query:

There's a foundation in the SQL Standard called SQL/MED (https://rhaas.blogspot.com/2011/01/why-sqlmed-is-cool.html ). And here's an implementation from me in Postgres FDW to connect another Postgres "endpoint": https://pastebin.com/Fz2v64Cz . |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/412/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 330826972 | MDU6SXNzdWUzMzA4MjY5NzI= | 308 | Support extra Heroku apps:create options - region, space, team | annapowellsmith 78156 | open | 0 | 2 | 2018-06-08T23:08:33Z | 2018-09-21T14:09:28Z | NONE | It would be useful to document how to pass Heroku CLI options on |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/308/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 326599525 | MDU6SXNzdWUzMjY1OTk1MjU= | 286 | Database hash should include current datasette version | simonw 9599 | open | 0 | 2 | 2018-05-25T17:03:42Z | 2018-05-25T17:07:36Z | OWNER | Right now deploying a new version of datasette doesn't invalidate existing URLs, so users may still see a cached copy of the old templates. We can fix this by including the current datasette version in the input to the hash function (which currently just the database file contents). |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/286/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 316621102 | MDU6SXNzdWUzMTY2MjExMDI= | 235 | Add limit on the size in KB of data returned from a single query | simonw 9599 | open | 0 | 2 | 2018-04-22T23:01:15Z | 2018-04-24T00:30:02Z | OWNER | Datasette limits the number of rows returned to 1,000 and limits the time spent executing a SQL query to 1000ms - and both of these limits can be customized. It does not have a limit on the size of the response returned. It's possible to compose maliciously large SQL responses in a small number of rows using mechanisms like the I think the easiest place to implement that is here: Currently we use The bigger challenge here is understanding how well this approach works and what impact it will have on overall Datasette performance. I think I need #33 for this. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/235/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Advanced export

JSON shape: default, array, newline-delimited, object

CREATE TABLE [issues] (

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[number] INTEGER,

[title] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[state] TEXT,

[locked] INTEGER,

[assignee] INTEGER REFERENCES [users]([id]),

[milestone] INTEGER REFERENCES [milestones]([id]),

[comments] INTEGER,

[created_at] TEXT,

[updated_at] TEXT,

[closed_at] TEXT,

[author_association] TEXT,

[pull_request] TEXT,

[body] TEXT,

[repo] INTEGER REFERENCES [repos]([id]),

[type] TEXT

, [active_lock_reason] TEXT, [performed_via_github_app] TEXT, [reactions] TEXT, [draft] INTEGER, [state_reason] TEXT);

CREATE INDEX [idx_issues_repo]

ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]

ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]

ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]

ON [issues] ([user]);