issues

78 rows where comments = 7 sorted by updated_at descending




| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at ▲ | closed_at | author_association | pull_request | body | repo | type | active_lock_reason | performed_via_github_app | reactions | draft | state_reason |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1940346034 | I_kwDOBm6k_c5zp1Sy | 2199 | Detailed upgrade instructions for metadata.yaml -> datasette.yaml | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 7 | 2023-10-12T16:21:25Z | 2023-10-12T22:08:42Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/2190#issuecomment-1759947021 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/2199/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1426379903 | PR_kwDOBm6k_c5BtJNn | 1870 | don't use immutable=1, only mode=ro | fgregg 536941 | open | 0 | 7 | 2022-10-27T23:33:04Z | 2023-10-03T19:12:37Z | CONTRIBUTOR | simonw/datasette/pulls/1870 | Opening db files in immutable mode sometimes leads to the file being mutated, which causes duplication in the docker image layers: see #1836, #1480 That this happens in "immutable" mode is surprising, because the sqlite docs say that setting this should open the database as read only. https://www.sqlite.org/c3ref/open.html

Perhaps this is a bug in sqlite? :books: Documentation preview :books:: https://datasette--1870.org.readthedocs.build/en/1870/ |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1870/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 954546309 | MDExOlB1bGxSZXF1ZXN0Njk4NDIzNjY3 | 8 | Add Gmail takeout mbox import (v2) | maxhawkins 28565 | open | 0 | 7 | 2021-07-28T07:05:32Z | 2023-09-08T01:22:49Z | FIRST_TIME_CONTRIBUTOR | dogsheep/google-takeout-to-sqlite/pulls/8 | WIP This PR builds on #5 to continue implementing gmail import support. Building on @UtahDave's work, these commits add a few performance and bug fixes:

I will send more commits to fix any errors I encounter as I run the importer on my personal takeout data. |

google-takeout-to-sqlite 206649770 | pull | {

"url": "https://api.github.com/repos/dogsheep/google-takeout-to-sqlite/issues/8/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 1010112818 | I_kwDOBm6k_c48NRky | 1479 | Win32 "used by another process" error with datasette publish | kirajano 76450761 | open | 0 | 7 | 2021-09-28T19:12:00Z | 2023-09-07T02:14:16Z | NONE | Unfortunately, I was not able to deploy to fly.io. Please see the details above of the three scenarios that I tried. I am also new to Datasette. Failed to deploy. Attaching logs:

1. Tried with an app created via Error error connecting to docker: An unknown error occured. Traceback (most recent call last): File "c:\users\grott\anaconda3\lib\runpy.py", line 193, in _run_module_as_main "main", mod_spec) File "c:\users\grott\anaconda3\lib\runpy.py", line 85, in _run_code exec(code, run_globals) File "C:\Users\grott\Anaconda3\Scripts\datasette.exe__main__.py", line 7, in <module> File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 829, in call return self.main(args, kwargs) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 782, in main rv = self.invoke(ctx) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1066, in invoke return ctx.invoke(self.callback, ctx.params) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 610, in invoke return callback(args, **kwargs) File "c:\users\grott\anaconda3\lib\site-packages\datasette_publish_fly__init__.py", line 156, in fly "--remote-only", File "c:\users\grott\anaconda3\lib\contextlib.py", line 119, in exit next(self.gen) File "c:\users\grott\anaconda3\lib\site-packages\datasette\utils__init__.py", line 451, in temporary_docker_directory tmp.cleanup() File "c:\users\grott\anaconda3\lib\tempfile.py", line 811, in cleanup _shutil.rmtree(self.name) File "c:\users\grott\anaconda3\lib\shutil.py", line 516, in rmtree return _rmtree_unsafe(path, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 395, in _rmtree_unsafe _rmtree_unsafe(fullname, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 404, in _rmtree_unsafe onerror(os.rmdir, path, sys.exc_info()) File "c:\users\grott\anaconda3\lib\shutil.py", line 402, in _rmtree_unsafe 
os.rmdir(path) PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\Users\grott\AppData\Local\Temp\tmpgcm8cz66\frosty-fog-8565' ```

Error not possible to validate configuration: server returned Post "https://api.fly.io/graphql": unexpected EOF Traceback (most recent call last):

File "c:\users\grott\anaconda3\lib\runpy.py", line 193, in _run_module_as_main

These are also the contents of the generated .toml file in 2 scenario:

```
# fly.toml file generated for dark-feather-168 on 2021-09-28T20:35:44+02:00

app = "dark-feather-168"

kill_signal = "SIGINT"
kill_timeout = 5
processes = []

[env]

[experimental]
  allowed_public_ports = []
  auto_rollback = true

[[services]]
  http_checks = []
  internal_port = 8080
  processes = ["app"]
  protocol = "tcp"
  script_checks = []

  [services.concurrency]
    hard_limit = 25
    soft_limit = 20
    type = "connections"

  [[services.ports]]
    handlers = ["http"]
    port = 80

  [[services.ports]]
    handlers = ["tls", "http"]
    port = 443

  [[services.tcp_checks]]
    grace_period = "1s"
    interval = "15s"
    restart_limit = 6
    timeout = "2s"
```

```[+] Building 147.3s (11/11) FINISHED => [internal] load build definition from Dockerfile 0.2s => => transferring dockerfile: 396B 0.0s => [internal] load .dockerignore 0.1s => => transferring context: 2B 0.0s => [internal] load metadata for docker.io/library/python:3.8 4.7s => [auth] library/python:pull token for registry-1.docker.io 0.0s => [internal] load build context 0.1s => => transferring context: 82.37kB 0.0s => [1/5] FROM docker.io/library/python:3.8@sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3 108.3s => => resolve docker.io/library/python:3.8@sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3b5 0.0s => => sha256:56182bcdf4d4283aa1f46944b4ef7ac881e28b4d5526720a4e9ba03a4730846a 2.22kB / 2.22kB 0.0s => => sha256:955615a668ce169f8a1443fc6b6e6215f43fe0babfb4790712a2d3171f34d366 54.93MB / 54.93MB 21.6s => => sha256:911ea9f2bd51e53a455297e0631e18a72a86d7e2c8e1807176e80f991bde5d64 10.87MB / 10.87MB 15.5s => => sha256:530de807b46a11734e2587a784573c12c5034f2f14025f838589e6c0e3b5c5b6 1.86kB / 1.86kB 0.0s => => sha256:ff08f08727e50193dcf499afc30594c47e70cc96f6fcfd1a01240524624264d0 8.65kB / 8.65kB 0.0s => => sha256:2756ef5f69a5190f4308619e0f446d95f5515eef4a814dbad0bcebbbbc7b25a8 5.15MB / 5.15MB 6.4s => => sha256:27b0a22ee906271a6ce9ddd1754fdd7d3b59078e0b57b6cc054c7ed7ac301587 54.57MB / 54.57MB 37.7s => => sha256:8584d51a9262f9a3a436dea09ba40fa50f85802018f9bd299eee1bf538481077 196.45MB / 196.45MB 82.3s => => sha256:524774b7d3638702fe9ae0ea3fcfb81b027dfd75cc2fc14f0119e764b9543d58 6.29MB / 6.29MB 26.6s => => extracting sha256:955615a668ce169f8a1443fc6b6e6215f43fe0babfb4790712a2d3171f34d366 5.4s => => sha256:9460f6b75036e38367e2f27bb15e85777c5d6cd52ad168741c9566186415aa26 16.81MB / 16.81MB 40.5s => => extracting sha256:2756ef5f69a5190f4308619e0f446d95f5515eef4a814dbad0bcebbbbc7b25a8 0.6s => => extracting sha256:911ea9f2bd51e53a455297e0631e18a72a86d7e2c8e1807176e80f991bde5d64 0.6s => => 
sha256:9bc548096c181514aa1253966a330134d939496027f92f57ab376cd236eb280b 232B / 232B 40.1s => => extracting sha256:27b0a22ee906271a6ce9ddd1754fdd7d3b59078e0b57b6cc054c7ed7ac301587 5.8s => => sha256:1d87379b86b89fd3b8bb1621128f00c8f962756e6aaaed264ec38db733273543 2.35MB / 2.35MB 41.8s => => extracting sha256:8584d51a9262f9a3a436dea09ba40fa50f85802018f9bd299eee1bf538481077 18.8s => => extracting sha256:524774b7d3638702fe9ae0ea3fcfb81b027dfd75cc2fc14f0119e764b9543d58 1.2s => => extracting sha256:9460f6b75036e38367e2f27bb15e85777c5d6cd52ad168741c9566186415aa26 2.9s => => extracting sha256:9bc548096c181514aa1253966a330134d939496027f92f57ab376cd236eb280b 0.0s => => extracting sha256:1d87379b86b89fd3b8bb1621128f00c8f962756e6aaaed264ec38db733273543 0.8s => [2/5] COPY . /app 2.3s => [3/5] WORKDIR /app 0.2s => [4/5] RUN pip install -U datasette 26.9s => [5/5] RUN datasette inspect covid.db --inspect-file inspect-data.json 3.1s => exporting to image 1.2s => => exporting layers 1.2s => => writing image sha256:b5db0c205cd3454c21fbb00ecf6043f261540bcf91c2dfc36d418f1a23a75d7a 0.0s Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them Traceback (most recent call last): "main", mod_spec) File "c:\users\grott\anaconda3\lib\runpy.py", line 85, in _run_code exec(code, run_globals) File "C:\Users\grott\Anaconda3\Scripts\datasette.exe__main__.py", line 7, in <module> File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 829, in call return self.main(args, kwargs) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 782, in main rv = self.invoke(ctx) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1259, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 1066, in invoke return ctx.invoke(self.callback, ctx.params) File "c:\users\grott\anaconda3\lib\site-packages\click\core.py", line 610, in invoke return 
callback(args, **kwargs) File "c:\users\grott\anaconda3\lib\site-packages\datasette\cli.py", line 283, in package call(args) File "c:\users\grott\anaconda3\lib\contextlib.py", line 119, in exit next(self.gen) File "c:\users\grott\anaconda3\lib\site-packages\datasette\utils__init__.py", line 451, in temporary_docker_directory tmp.cleanup() File "c:\users\grott\anaconda3\lib\tempfile.py", line 811, in cleanup _shutil.rmtree(self.name) File "c:\users\grott\anaconda3\lib\shutil.py", line 516, in rmtree return _rmtree_unsafe(path, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 395, in _rmtree_unsafe _rmtree_unsafe(fullname, onerror) File "c:\users\grott\anaconda3\lib\shutil.py", line 404, in _rmtree_unsafe onerror(os.rmdir, path, sys.exc_info()) File "c:\users\grott\anaconda3\lib\shutil.py", line 402, in _rmtree_unsafe os.rmdir(path) PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\Users\grott\AppData\Local\Temp\tmpkb27qid3\datasette'``` |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1479/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 449886319 | MDU6SXNzdWU0NDk4ODYzMTk= | 493 | Rename metadata.json to config.json | simonw 9599 | closed | 0 | Datasette 1.0 3268330 | 7 | 2019-05-29T15:48:03Z | 2023-08-23T01:29:21Z | 2023-08-23T01:29:20Z | OWNER | It is increasingly being used for configuration options, when it started out as purely metadata. Could cause confusion with the |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/493/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 1855894222 | I_kwDOCGYnMM5unrLO | 585 | CLI equivalents to `transform(add_foreign_keys=)` | simonw 9599 | closed | 0 | 7 | 2023-08-18T01:07:15Z | 2023-08-18T01:51:16Z | 2023-08-18T01:51:15Z | OWNER | The new options added in: - #577 Deserve consideration in the CLI as well. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/585/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 771511344 | MDExOlB1bGxSZXF1ZXN0NTQzMDE1ODI1 | 31 | Update for Big Sur | RhetTbull 41546558 | open | 0 | 7 | 2020-12-20T04:36:45Z | 2023-08-08T15:52:52Z | CONTRIBUTOR | dogsheep/dogsheep-photos/pulls/31 | Refactored out the SQL for extracting aesthetic scores to use osxphotos -- adds compatibility for Big Sur via osxphotos which has been updated for new table names in Big Sur. Have not yet refactored the SQL for extracting labels which is still compatible with Big Sur. |

dogsheep-photos 256834907 | pull | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-photos/issues/31/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 1816851056 | I_kwDOCGYnMM5sSvJw | 568 | table.create(..., replace=True) | simonw 9599 | closed | 0 | 7 | 2023-07-22T18:12:22Z | 2023-07-22T19:25:35Z | 2023-07-22T19:15:44Z | OWNER | Found myself using this pattern to quickly prototype a schema:

```python
import sqlite_utils

db = sqlite_utils.Database(memory=True)

print(db["answers_chunks"].create({
    "id": int,
    "content": str,
    "embedding_type_id": int,
    "embedding": bytes,
    "embedding_content_md5": str,
    "source": str,
}, pk="id", transform=True).schema)
```

Using |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/568/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 323223872 | MDU6SXNzdWUzMjMyMjM4NzI= | 260 | Validate metadata.json on startup | simonw 9599 | open | 0 | 7 | 2018-05-15T13:42:56Z | 2023-06-21T12:51:22Z | OWNER | It's easy to misspell the name of a database or table and then be puzzled when the metadata settings silently fail. To avoid this, let's sanity check the provided metadata.json on startup and quit with a useful error message if we find any obvious mistakes. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/260/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1383646615 | I_kwDOCGYnMM5SeMWX | 491 | Ability to merge databases and tables | sgraaf 8904453 | open | 0 | 7 | 2022-09-23T11:10:55Z | 2023-06-14T22:14:24Z | NONE | Hi! Let me firstly say that I am a big fan of your work -- I follow your tweets and blog posts with great interest 😄. Now onto the matter at hand: I think it would be great if This could look something like this:

I imagine this is rather straightforward if all databases involved in the merge contain differently named tables (i.e. no chance of conflicts), but things get slightly more complicated if two or more of the databases to be merged contain tables with the same name. Not only do you have to "do something" with the primary key(s), but these tables could also simply have different schemas (and therefore be incompatible for concatenation to begin with). Anyhow, I would love your thoughts on this, and, if you are open to it, work together on the design and implementation! |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/491/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1114543475 | I_kwDOCGYnMM5CbpVz | 388 | Link to stable docs from older versions | simonw 9599 | closed | 0 | 7 | 2022-01-26T01:55:46Z | 2023-03-26T23:43:12Z | 2022-01-26T02:00:22Z | OWNER | https://sqlite-utils.datasette.io/en/2.14.1/ isn't showing a link to the stable release right now. I should also apply the same fix I used for Datasette in: - https://github.com/simonw/datasette/issues/1608 TIL: https://til.simonwillison.net/readthedocs/link-from-latest-to-stable |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/388/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1616354999 | I_kwDOJHON9s5gV563 | 2 | First working version | simonw 9599 | closed | 0 | 7 | 2023-03-09T03:53:00Z | 2023-03-09T05:10:22Z | 2023-03-09T05:10:22Z | MEMBER | It's going to shell out to I'm going with that option because https://appscript.sourceforge.io/status.html warns against the other potential methods:

But |

apple-notes-to-sqlite 611552758 | issue | {

"url": "https://api.github.com/repos/dogsheep/apple-notes-to-sqlite/issues/2/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 743384829 | MDExOlB1bGxSZXF1ZXN0NTIxMjg3OTk0 | 203 | changes to allow for compound foreign keys | drkane 1049910 | open | 0 | 7 | 2020-11-16T00:30:10Z | 2023-01-25T18:47:18Z | FIRST_TIME_CONTRIBUTOR | simonw/sqlite-utils/pulls/203 | Add support for compound foreign keys, as per issue #117 Not sure if this is the right approach. In particular I'm unsure about:

The PR also contains a minor related change that columns and tables are always quoted in foreign key definitions. |

sqlite-utils 140912432 | pull | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/203/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 1,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | ||||||

| 1430797211 | I_kwDOBm6k_c5VSDub | 1875 | Figure out design for JSON errors (consider RFC 7807) | simonw 9599 | open | 0 | Datasette 1.0a-next 8755003 | 7 | 2022-11-01T03:14:15Z | 2022-12-13T05:29:08Z | OWNER | https://datatracker.ietf.org/doc/draft-ietf-httpapi-rfc7807bis/ is a brand new standard. Since I need a neat, predictable format for my JSON errors, maybe I should use this one? |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1875/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1473481262 | I_kwDOBm6k_c5X04ou | 1928 | Hacker News Datasette write demo | simonw 9599 | closed | 0 | 7 | 2022-12-02T21:17:41Z | 2022-12-02T23:47:11Z | 2022-12-02T21:43:19Z | OWNER | Idea is to have my existing scraper at https://github.com/simonw/scrape-hacker-news-by-domain also write to my private Datasette Cloud account, then create an atom feed from it. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1928/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1422915587 | I_kwDOBm6k_c5Uz_gD | 1853 | Upgrade Datasette Docker to Python 3.11 | simonw 9599 | closed | 0 | 7 | 2022-10-25T18:44:31Z | 2022-10-25T19:28:56Z | 2022-10-25T19:05:16Z | OWNER | Related: - #1768 I think this base image looks right: 3.11.0-slim-bullseye |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1853/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1363552780 | I_kwDOBm6k_c5RRioM | 1805 | truncate_cells_html does not work for links? | CharlesNepote 562352 | open | 0 | 7 | 2022-09-06T16:41:29Z | 2022-10-03T09:18:06Z | NONE | We have many links inside our dataset (please don't blame us ;-). When I use Eg. https://images.openfoodfacts.org/images/products/000/000/000/088/nutrition_fr.5.200.jpg (87 chars) is not truncated:

IMHO It would make sense that links should be treated as HTML. The link should work of course, but Datasette could truncate it: https://images.openfoodfacts.org/images/products/00[...].jpg |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1805/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

reopened | |||||||

| 1384273985 | I_kwDOBm6k_c5SglhB | 1817 | Expose `sql` and `params` arguments to various plugin hooks | simonw 9599 | open | 0 | 7 | 2022-09-23T20:34:45Z | 2022-09-27T00:27:53Z | OWNER | On Discord: https://discord.com/channels/823971286308356157/996877076982415491/1022784534363787305

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1817/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1082651698 | I_kwDOCGYnMM5Ah_Qy | 358 | Support for CHECK constraints | luxint 11597658 | open | 0 | 7 | 2021-12-16T21:19:45Z | 2022-09-25T07:15:59Z | NONE | Hi, I noticed the |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/358/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1366512990 | PR_kwDOCGYnMM4-nBs9 | 486 | progressbar for inserts/upserts of all fileformats, closes #485 | MischaU8 99098079 | closed | 0 | 7 | 2022-09-08T14:58:02Z | 2022-09-15T20:40:03Z | 2022-09-15T20:37:51Z | CONTRIBUTOR | simonw/sqlite-utils/pulls/486 | :books: Documentation preview :books:: https://sqlite-utils--486.org.readthedocs.build/en/486/ |

sqlite-utils 140912432 | pull | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/486/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 728905098 | MDU6SXNzdWU3Mjg5MDUwOTg= | 1048 | Documentation and unit tests for urls.row() urls.row_blob() methods | simonw 9599 | open | 0 | 7 | 2020-10-25T00:13:53Z | 2022-07-10T16:23:57Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1048/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||||

| 1239008850 | I_kwDOBm6k_c5J2cZS | 1744 | `--nolock` feature for opening locked databases | simonw 9599 | closed | 0 | 7 | 2022-05-17T18:25:16Z | 2022-05-17T19:46:38Z | 2022-05-17T19:40:30Z | OWNER | The getting started docs currently suggest you try this to browse your Chrome history: But if Chrome is running you will likely get this error: Turns out there's a workaround for this which I just spotted on the SQLite forum:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1744/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1175648453 | I_kwDOBm6k_c5GEvjF | 1675 | Extract out `check_permissions()` from `BaseView | simonw 9599 | closed | 0 | 7 | 2022-03-21T16:39:46Z | 2022-03-21T17:14:31Z | 2022-03-21T17:13:21Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette/issues/1660#issuecomment-1074136176 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1675/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1173017980 | PR_kwDOBm6k_c40oRq- | 1664 | Remove hashed URL mode | simonw 9599 | closed | 0 | 7 | 2022-03-17T23:19:10Z | 2022-03-19T00:12:04Z | 2022-03-19T00:12:04Z | OWNER | simonw/datasette/pulls/1664 | Refs #1661. |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1664/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 1166731361 | I_kwDOCGYnMM5Fiuhh | 414 | I forgot to include the changelog in the 3.25.1 release | simonw 9599 | closed | 0 | 7 | 2022-03-11T18:32:36Z | 2022-03-11T18:40:39Z | 2022-03-11T18:40:39Z | OWNER | I pushed a release for https://github.com/simonw/sqlite-utils/releases/tag/3.25.1 but forgot to include the release notes in This means https://sqlite-utils.datasette.io/en/stable/changelog.html isn't showing them. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/414/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1160432941 | PR_kwDOBm6k_c4z_p6S | 1648 | Use dash encoding for table names and row primary keys in URLs | simonw 9599 | closed | 0 | 7 | 2022-03-05T19:50:45Z | 2022-03-07T15:38:30Z | 2022-03-07T15:38:30Z | OWNER | simonw/datasette/pulls/1648 | Refs #1439.

|

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1648/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 1138948786 | PR_kwDOCGYnMM4y3yW0 | 407 | Add SpatiaLite helpers to CLI | eyeseast 25778 | closed | 0 | 7 | 2022-02-15T16:50:17Z | 2022-02-16T01:49:40Z | 2022-02-16T00:58:08Z | CONTRIBUTOR | simonw/sqlite-utils/pulls/407 | Closes #398 This adds SpatiaLite helpers to the CLI.

```sh
# init spatialite when creating a database
sqlite-utils create database.db --enable-wal --init-spatialite

# add geometry columns
# needs a database, table, geometry column name, type, with optional SRID and not-null
# this will throw an error if the table doesn't already exist
sqlite-utils add-geometry-column database.db table-name geometry --srid 4326 --not-null

# spatial index an existing table/column
# this will throw an error if the table and column don't exist
sqlite-utils create-spatial-index database.db table-name geometry
```

Docs and tests are included. |

sqlite-utils 140912432 | pull | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/407/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 688622148 | MDU6SXNzdWU2ODg2MjIxNDg= | 957 | Simplify imports of common classes | simonw 9599 | closed | 0 | Datasette 1.0 3268330 | 7 | 2020-08-29T23:44:04Z | 2022-02-06T06:36:41Z | 2022-02-06T06:34:37Z | OWNER | There are only a few classes that plugins need to import. It would be nice if these imports were as short and memorable as possible. For example:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/957/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 734777631 | MDU6SXNzdWU3MzQ3Nzc2MzE= | 1080 | "View all" option for facets, to provide a (paginated) list of ALL of the facet counts plus a link to view them | simonw 9599 | open | 0 | Datasette 1.0 3268330 | 7 | 2020-11-02T19:55:06Z | 2022-02-04T06:25:18Z | OWNER | Can use |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1080/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

|||||||

| 1100015398 | I_kwDOBm6k_c5BkOcm | 1591 | Maybe let plugins define custom serve options? | simonw 9599 | open | 0 | 7 | 2022-01-12T08:18:47Z | 2022-01-15T11:56:59Z | OWNER | https://twitter.com/psychemedia/status/1481171650934714370

I've thought something like this might be useful for other plugins in the past, too. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1591/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1059555791 | I_kwDOBm6k_c4_J4nP | 1527 | Columns starting with an underscore behave poorly in filters | simonw 9599 | closed | 0 | Datasette 0.60 7571612 | 7 | 2021-11-22T01:01:36Z | 2022-01-14T00:57:08Z | 2022-01-14T00:57:08Z | OWNER | Similar bug to #1525 (and #1506 before it). Start on https://latest.datasette.io/fixtures/facetable?_facet=_neighborhood - then select a neighborhood - then try to remove that filter using the little "x" and submitting the form again.

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1527/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 1087931918 | I_kwDOBm6k_c5A2IYO | 1579 | `.execute_write(... block=True)` should be the default behaviour | simonw 9599 | closed | 0 | Datasette 0.60 7571612 | 7 | 2021-12-23T18:54:28Z | 2022-01-13T22:28:08Z | 2021-12-23T19:18:26Z | OWNER | Every single piece of code I've written against the write APIs has used the Without that, it instead fires the write into the queue but then continues even before it has finished executing.

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1579/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 520681725 | MDU6SXNzdWU1MjA2ODE3MjU= | 621 | Syntax for ?_through= that works as a form field | simonw 9599 | open | 0 | 7 | 2019-11-11T00:19:03Z | 2021-12-18T01:42:33Z | OWNER | The current syntax for This means you can't target a form field at it. We should be able to support both - |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/621/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 1077243232 | I_kwDOCGYnMM5ANW1g | 354 | Test failure in test_rebuild_fts | simonw 9599 | closed | 0 | 7 | 2021-12-10T21:27:55Z | 2021-12-11T01:08:46Z | 2021-12-11T01:08:46Z | OWNER | Not sure why this has only just started failing, but I'm getting this: https://github.com/simonw/sqlite-utils/runs/4488687639 ``` E sqlite3.DatabaseError: database disk image is malformed sqlite_utils/db.py:425: DatabaseError ___ test_rebuild_fts[searchable_fts] ___ fresh_db = <Database \<sqlite3.Connection object at 0x1084ea9d0>> table_to_fix = 'searchable_fts'

|

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/354/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1058196641 | I_kwDOCGYnMM4_Esyh | 342 | Extra options to `lookup()` which get passed to `insert()` | simonw 9599 | closed | 0 | 7 | 2021-11-19T06:53:03Z | 2021-11-19T07:26:54Z | 2021-11-19T07:26:54Z | OWNER | For https://github.com/simonw/git-history/issues/12 I found myself wanting to pass extra options to |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/342/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 1055402144 | PR_kwDOBm6k_c4unfnq | 1512 | New pattern for async view classes | simonw 9599 | closed | 0 | 7 | 2021-11-16T21:55:44Z | 2021-11-17T01:39:54Z | 2021-11-17T01:39:44Z | OWNER | simonw/datasette/pulls/1512 | Refs #878 - starting out with the new |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1512/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

1 | |||||

| 597671518 | MDU6SXNzdWU1OTc2NzE1MTg= | 98 | Only set .last_rowid and .last_pk for single update/inserts, not for .insert_all()/.upsert_all() with multiple records | simonw 9599 | closed | 0 | 7 | 2020-04-10T03:19:40Z | 2021-09-28T04:38:44Z | 2020-04-13T03:29:15Z | OWNER | sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/98/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||||

| 972918533 | MDU6SXNzdWU5NzI5MTg1MzM= | 1438 | Query page .csv and .json links are not correctly URL-encoded on Vercel under unknown specific conditions | simonw 9599 | open | 0 | 7 | 2021-08-17T17:35:36Z | 2021-08-18T00:22:23Z | OWNER |

Originally posted by @simonw in https://github.com/simonw/datasette-publish-vercel/issues/48#issuecomment-900497579 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1438/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 722816436 | MDU6SXNzdWU3MjI4MTY0MzY= | 186 | .extract() shouldn't extract null values | simonw 9599 | open | 0 | 7 | 2020-10-16T02:41:08Z | 2021-08-12T12:32:14Z | OWNER | This almost works, but it creates a rogue |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/186/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 925410305 | MDU6SXNzdWU5MjU0MTAzMDU= | 285 | Introspection property for telling if a table is a rowid table | simonw 9599 | closed | 0 | 7 | 2021-06-19T14:56:16Z | 2021-06-19T15:12:33Z | 2021-06-19T15:12:33Z | OWNER | Originally posted by @simonw in https://github.com/simonw/sqlite-utils/issues/284#issuecomment-864416785 |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/285/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 924990677 | MDU6SXNzdWU5MjQ5OTA2Nzc= | 279 | sqlite-utils memory should handle TSV and JSON in addition to CSV | simonw 9599 | closed | 0 | 7 | 2021-06-18T15:02:54Z | 2021-06-19T03:11:59Z | 2021-06-19T03:11:59Z | OWNER |

Follow-on from #272 |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/279/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 642388564 | MDU6SXNzdWU2NDIzODg1NjQ= | 858 | publish heroku does not work on Windows 10 | simonlau 870912 | open | 0 | 7 | 2020-06-20T14:40:28Z | 2021-06-10T17:44:09Z | NONE | When executing "datasette publish heroku schools.db" on Windows 10, I get the following error

to

as well as the other |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/858/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 904071938 | MDU6SXNzdWU5MDQwNzE5Mzg= | 1345 | ?_nocol= does not interact well with default facets | simonw 9599 | closed | 0 | 7 | 2021-05-27T18:39:55Z | 2021-05-31T02:40:44Z | 2021-05-31T02:31:21Z | OWNER | Clicking "Hide this column" on https://covid-19.datasettes.com/covid/ny_times_us_counties?_nocol=fips

The reason is that https://covid-19.datasettes.com/-/metadata sets up the following:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1345/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 898904402 | MDU6SXNzdWU4OTg5MDQ0MDI= | 1337 | "More" link for facets that shows _facet_size=max results | simonw 9599 | closed | 0 | 7 | 2021-05-23T00:08:51Z | 2021-05-27T16:14:14Z | 2021-05-27T16:01:03Z | OWNER | Original title: "More" link for facets that shows the full set of results The simplest way to do this will be to have it link to a generated SQL query. Originally posted by @simonw in https://github.com/simonw/datasette/issues/1332#issuecomment-846479062 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1337/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 672421411 | MDU6SXNzdWU2NzI0MjE0MTE= | 916 | Support reverse pagination (previous page, has-previous-items) | simonw 9599 | open | 0 | 7 | 2020-08-04T00:32:06Z | 2021-04-03T23:43:11Z | OWNER | I need this for

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/916/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 841456306 | MDU6SXNzdWU4NDE0NTYzMDY= | 1276 | Invalid SQL: "no such table: pragma_database_list" on database page | justinallen 1314318 | closed | 0 | 7 | 2021-03-26T00:03:53Z | 2021-03-31T16:27:27Z | 2021-03-28T23:52:31Z | NONE | Don't think this has been covered here yet. I'm a little stumped with this one and can't tell if it's a bug or I have something misconfigured. Oddly, when running locally the usual list of tables populates (i.e. at /charts a list of tables in charts.db). But when on the web server it throws an Invalid SQL error and "no such table: pragma_database_list" below. All the url endpoints seem to work fine aside from this - individual tables (/charts/chart_one), as well as stored queries (/charts/query_one). Not sure if this has anything to do with upgrading to Datasette 0.55, or something to do with our setup, which uses a metadata build script similar to the one for the 538 server, or something else. |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1276/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 842881221 | MDU6SXNzdWU4NDI4ODEyMjE= | 1281 | Latest Datasette tags missing from Docker Hub | simonw 9599 | closed | 0 | 7 | 2021-03-29T00:58:30Z | 2021-03-29T01:41:48Z | 2021-03-29T01:41:48Z | OWNER | Spotted this while testing https://github.com/simonw/datasette/issues/1249#issuecomment-808998719 https://hub.docker.com/r/datasetteproject/datasette/tags?page=1&ordering=last_updated isn't showing the tags for any version more recent than 0.54.1 - we are up to 0.56 now. But the |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1281/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 816560819 | MDU6SXNzdWU4MTY1NjA4MTk= | 240 | table.pks_and_rows_where() method returning primary keys along with the rows | simonw 9599 | closed | 0 | 7 | 2021-02-25T15:49:28Z | 2021-02-25T16:39:23Z | 2021-02-25T16:28:23Z | OWNER | Original title: Easier way to update a row returned from .rows Here's a surprisingly hard problem I ran into while trying to implement #239 - given a row returned by The problem is that the Instead, currently, you need to introspect the table and, if A utility mechanism to make this easier would be very welcome. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/240/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 688670158 | MDU6SXNzdWU2ODg2NzAxNTg= | 147 | SQLITE_MAX_VARS maybe hard-coded too low | simonwiles 96218 | open | 0 | 7 | 2020-08-30T07:26:45Z | 2021-02-15T21:27:55Z | CONTRIBUTOR | I came across this while about to open an issue and PR against the documentation for As mentioned in #145, while:

it is common that it is increased at compile time. Debian-based systems, for example, seem to ship with a version of sqlite compiled with SQLITE_MAX_VARIABLE_NUMBER set to 250,000, and I believe this is the case for homebrew installations too. In working to understand what Unfortunately, it seems that Obviously this couldn't be relied upon in |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/147/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

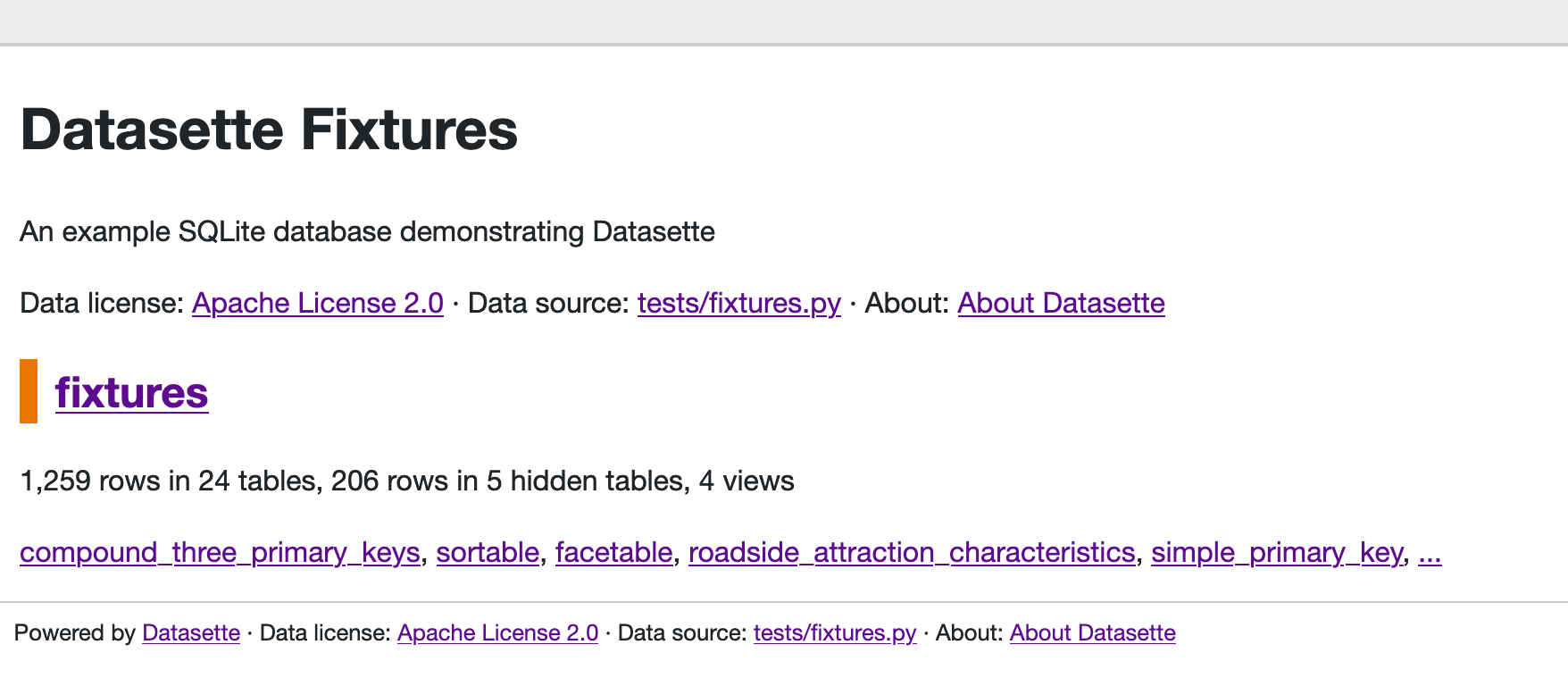

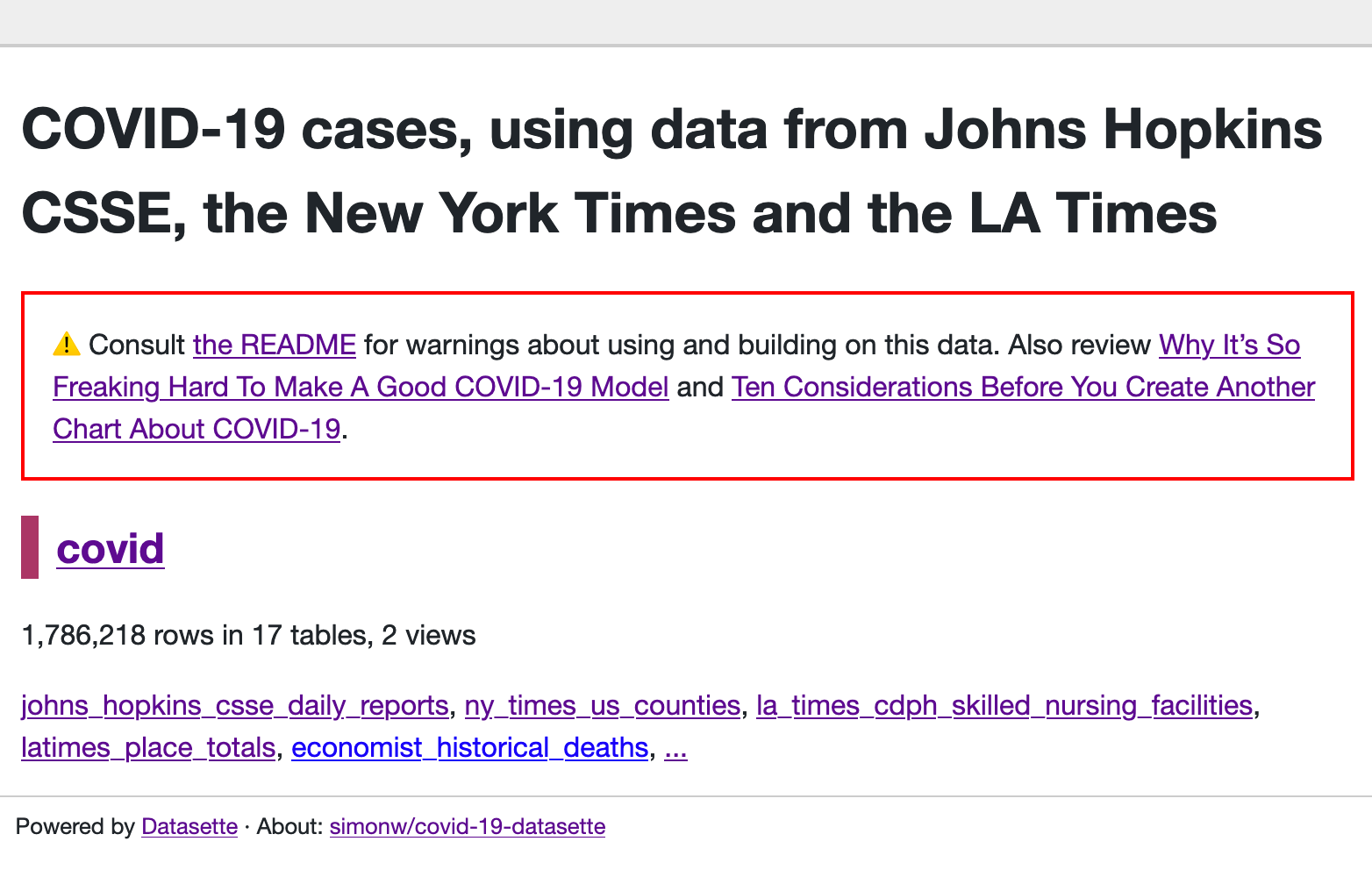

| 714377268 | MDU6SXNzdWU3MTQzNzcyNjg= | 991 | Redesign application homepage | simonw 9599 | open | 0 | 7 | 2020-10-04T18:48:45Z | 2021-01-26T19:06:36Z | OWNER | Most Datasette instances only host a single database, but the current homepage design assumes that it should leave plenty of space for multiple databases:

Reconsider this design - should the default show more information? The Covid-19 Datasette homepage looks particularly sparse I think: https://covid-19.datasettes.com/

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/991/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 712984738 | MDU6SXNzdWU3MTI5ODQ3Mzg= | 987 | Documented HTML hooks for JavaScript plugin authors | simonw 9599 | open | 0 | 7 | 2020-10-01T16:10:14Z | 2021-01-25T04:00:03Z | OWNER | In #981 I added |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/987/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 612151767 | MDU6SXNzdWU2MTIxNTE3Njc= | 15 | Expose scores from ZCOMPUTEDASSETATTRIBUTES | simonw 9599 | closed | 0 | 7 | 2020-05-04T20:36:07Z | 2020-12-20T04:44:22Z | 2020-05-05T00:11:45Z | MEMBER | The Apple Photos database has a

|

dogsheep-photos 256834907 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-photos/issues/15/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 721050815 | MDU6SXNzdWU3MjEwNTA4MTU= | 1019 | "Edit SQL" button on canned queries | jsfenfen 639012 | closed | 0 | 0.51 6026070 | 7 | 2020-10-14T00:51:39Z | 2020-10-23T19:44:06Z | 2020-10-14T03:44:23Z | CONTRIBUTOR | Feature request: Would it be possible to add an "edit this query" button on canned queries? Clicking it would open the canned query as an editable sql query. I think the intent is to have named parameters to allow this, but sometimes you just gotta rewrite it? |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1019/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 722724086 | MDU6SXNzdWU3MjI3MjQwODY= | 1025 | Fix last remaining links to "/" that do not respect base_url | simonw 9599 | closed | 0 | 0.51 6026070 | 7 | 2020-10-15T22:46:38Z | 2020-10-23T19:44:06Z | 2020-10-20T05:21:29Z | OWNER | Refs #1023

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/1025/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 703218756 | MDU6SXNzdWU3MDMyMTg3NTY= | 50 | Commands for making authenticated API calls | simonw 9599 | open | 0 | 7 | 2020-09-17T02:39:07Z | 2020-10-19T05:01:29Z | MEMBER | Similar to |

github-to-sqlite 207052882 | issue | {

"url": "https://api.github.com/repos/dogsheep/github-to-sqlite/issues/50/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 723838331 | MDU6SXNzdWU3MjM4MzgzMzE= | 11 | export.xml file name varies with different language settings | jarib 572 | closed | 0 | 7 | 2020-10-17T20:07:18Z | 2020-10-17T21:39:15Z | 2020-10-17T21:14:10Z | NONE | The XML file exported from my phone has a Norwegian file name – I can work around this by unpacking the zip and using Perhaps this could be solved by |

healthkit-to-sqlite 197882382 | issue | {

"url": "https://api.github.com/repos/dogsheep/healthkit-to-sqlite/issues/11/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 440134714 | MDU6SXNzdWU0NDAxMzQ3MTQ= | 446 | Define mechanism for plugins to return structured data | simonw 9599 | closed | 0 | Datasette 1.0 3268330 | 7 | 2019-05-03T17:00:16Z | 2020-10-02T00:08:54Z | 2020-10-02T00:08:47Z | OWNER | Several plugin hooks now expect plugins to return data in a specific shape - notably the new output format hook and the custom facet hook. These use Python dictionaries right now but that's quite error prone: it would be good to have a mechanism that supported a more structured format. Full list of current hooks is here: https://datasette.readthedocs.io/en/latest/plugins.html#plugin-hooks |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/446/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 707944044 | MDExOlB1bGxSZXF1ZXN0NDkyMjU3NDA1 | 174 | Much, much faster extract() implementation | simonw 9599 | closed | 0 | 7 | 2020-09-24T07:52:31Z | 2020-09-24T15:44:00Z | 2020-09-24T15:43:56Z | OWNER | simonw/sqlite-utils/pulls/174 | Takes my test down from ten minutes to four seconds. Refs #172. |

sqlite-utils 140912432 | pull | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/174/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 705827457 | MDU6SXNzdWU3MDU4Mjc0NTc= | 971 | Support the dbstat table | simonw 9599 | closed | 0 | 7 | 2020-09-21T18:38:53Z | 2020-09-21T19:00:02Z | 2020-09-21T18:59:52Z | OWNER |

| name | path | pageno | pagetype | ncell | payload | unused | mx_payload | pgoffset | pgsize |
|---|---|---|---|---|---|---|---|---|---|
| bachelorette/bachelorette | / | 89 | internal | 13 | 0 | 3981 | 0 | 360448 | 4096 |
| bachelorette/bachelorette | /000/ | 91 | leaf | 66 | 3792 | 32 | 74 | 368640 | 4096 |
| bachelorette/bachelorette | /001/ | 92 | leaf | 67 | 3800 | 14 | 74 | 372736 | 4096 |
| bachelorette/bachelorette | /002/ | 93 | leaf | 65 | 3717 | 46 | 70 | 376832 | 4096 |
| bachelorette/bachelorette | /003/ | 94 | leaf | 68 | 3742 | 6 | 71 | 380928 | 4096 |
| bachelorette/bachelorette | /004/ | 95 | leaf | 70 | 3696 | 42 | 66 | 385024 | 4096 |
| bachelorette/bachelorette | /005/ | 96 | leaf | 69 | 3721 | 22 | 71 | 389120 | 4096 |
| bachelorette/bachelorette | /006/ | 97 | leaf | 70 | 3737 | 1 | 72 | 393216 | 4096 |
| bachelorette/bachelorette | /007/ | 98 | leaf | 69 | 3728 | 15 | 69 | 397312 | 4096 |
| bachelorette/bachelorette | /008/ | 99 | leaf | 73 | 3715 | 8 | 64 | 401408 | 4096 |
| bachelorette/bachelorette | /009/ | 100 | leaf | 73 | 3705 | 18 | 62 | 405504 | 4096 |
| bachelorette/bachelorette | /00a/ | 101 | leaf | 75 | 3681 | 32 | 62 | 409600 | 4096 |
| bachelorette/bachelorette | /00b/ | 102 | leaf | 77 | 3694 | 9 | 62 | 413696 | 4096 |
| bachelorette/bachelorette | /00c/ | 103 | leaf | 74 | 3673 | 45 | 62 | 417792 | 4096 |
| bachelorette/bachelorette | /00d/ | 104 | leaf | 5 | 228 | 3835 | 48 | 421888 | 4096 |

Other than direct It would be cool if https://fivethirtyeight.datasettes.com/fivethirtyeight/dbstat didn't 404 (on databases for which that table was available). |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/971/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 705215230 | MDU6SXNzdWU3MDUyMTUyMzA= | 26 | Pagination | simonw 9599 | open | 0 | 7 | 2020-09-21T00:14:37Z | 2020-09-21T02:55:54Z | MEMBER | Useful for #16 (timeline view) since you can now filter to just the items on a specific day - but if there are more than 50 items you can't see them all. |

dogsheep-beta 197431109 | issue | {

"url": "https://api.github.com/repos/dogsheep/dogsheep-beta/issues/26/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 688427751 | MDU6SXNzdWU2ODg0Mjc3NTE= | 956 | Push to Docker Hub failed - but it shouldn't run for alpha releases anyway | simonw 9599 | closed | 0 | 7 | 2020-08-29T01:09:12Z | 2020-09-15T20:46:41Z | 2020-09-15T20:36:34Z | OWNER | https://github.com/simonw/datasette/runs/1043709494?check_suite_focus=true

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/956/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 686978131 | MDU6SXNzdWU2ODY5NzgxMzE= | 139 | insert_all(..., alter=True) should work for new columns introduced after the first 100 records | simonwiles 96218 | closed | 0 | 7 | 2020-08-27T06:25:25Z | 2020-08-28T22:48:51Z | 2020-08-28T22:30:14Z | CONTRIBUTOR | Is there a way to make I'm using It took me a while to find this little snippet in the documentation for

I tried changing the Is there a way around this that you would suggest? It seems like it should raise an exception at least. |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/139/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 677926613 | MDU6SXNzdWU2Nzc5MjY2MTM= | 931 | Docker container is no longer being pushed (it's stuck on 0.45) | simonw 9599 | closed | 0 | 7 | 2020-08-12T19:33:03Z | 2020-08-12T21:36:20Z | 2020-08-12T21:36:20Z | OWNER | e.g. https://travis-ci.org/github/simonw/datasette/jobs/717123725 Here's how it broke:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/931/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 651159727 | MDU6SXNzdWU2NTExNTk3Mjc= | 41 | Demo is failing to deploy | simonw 9599 | closed | 0 | 7 | 2020-07-05T22:40:33Z | 2020-07-06T01:07:03Z | 2020-07-06T01:07:02Z | MEMBER | https://github.com/dogsheep/github-to-sqlite/runs/837714622?check_suite_focus=true

```
Creating Revision.........................................................................................................................................failed
Deployment failed
ERROR: (gcloud.run.deploy) Cloud Run error: Container failed to start. Failed to start and then listen on the port defined by the PORT environment variable. Logs for this revision might contain more information.
Traceback (most recent call last):
  File "/opt/hostedtoolcache/Python/3.8.3/x64/bin/datasette", line 8, in <module>
    sys.exit(cli())
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 829, in __call__
    return self.main(*args, **kwargs)
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 782, in main
    rv = self.invoke(ctx)
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 1259, in invoke
    return _process_result(sub_ctx.command.invoke(sub_ctx))
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 1259, in invoke
    return _process_result(sub_ctx.command.invoke(sub_ctx))
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 1066, in invoke
    return ctx.invoke(self.callback, **ctx.params)
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/click/core.py", line 610, in invoke
    return callback(*args, **kwargs)
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/site-packages/datasette/publish/cloudrun.py", line 138, in cloudrun
    check_call(
  File "/opt/hostedtoolcache/Python/3.8.3/x64/lib/python3.8/subprocess.py", line 364, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command 'gcloud run deploy --allow-unauthenticated --platform=managed --image gcr.io/datasette-222320/datasette github-to-sqlite' returned non-zero exit status 1.
[error]Process completed with exit code 1.
```

|

github-to-sqlite 207052882 | issue | {

"url": "https://api.github.com/repos/dogsheep/github-to-sqlite/issues/41/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 581339961 | MDU6SXNzdWU1ODEzMzk5NjE= | 92 | .columns_dict doesn't work for all possible column types | simonw 9599 | closed | 0 | 7 | 2020-03-14T19:30:35Z | 2020-03-15T18:37:43Z | 2020-03-14T20:04:14Z | OWNER | Got this error:

|

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/92/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 577578306 | MDU6SXNzdWU1Nzc1NzgzMDY= | 697 | index.html is not reliably loaded from a plugin | simonw 9599 | closed | 0 | 7 | 2020-03-08T22:37:55Z | 2020-03-08T23:33:28Z | 2020-03-08T23:11:27Z | OWNER | Lots of detail in https://github.com/simonw/datasette-search-all/issues/2 - short version is that I have a plugin with its own Related:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/697/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 576711589 | MDU6SXNzdWU1NzY3MTE1ODk= | 695 | Update SQLite bundled with Docker container | simonw 9599 | closed | 0 | 7 | 2020-03-06T05:42:12Z | 2020-03-08T23:33:23Z | 2020-03-06T06:15:27Z | OWNER | It's 3.26.0 at the moment: https://github.com/simonw/datasette/blob/af9cd4ca64652fae262e6f7b5d201f6e0adc989b/Dockerfile#L9-L11 Most recent release is 3.31.1: https://www.sqlite.org/releaselog/3_31_1.html |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/695/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 564579430 | MDU6SXNzdWU1NjQ1Nzk0MzA= | 86 | Problem with square bracket in CSV column name | foscoj 8149512 | closed | 0 | 7 | 2020-02-13T10:19:57Z | 2020-02-27T04:16:08Z | 2020-02-27T04:16:07Z | NONE | testing some data from european power information (entsoe.eu), the title of the csv contains square brackets. as I am playing with glitch, sqlite-utils are used for creating the db. Traceback (most recent call last): File "/app/.local/bin/sqlite-utils", line 8, in <module> File "/app/.local/lib/python3.7/site-packages/click/core.py", line 764, in `__call__` File "/app/.local/lib/python3.7/site-packages/click/core.py", line 717, in main File "/app/.local/lib/python3.7/site-packages/click/core.py", line 1137, in invoke File "/app/.local/lib/python3.7/site-packages/click/core.py", line 956, in invoke File "/app/.local/lib/python3.7/site-packages/click/core.py", line 555, in invoke File "/app/.local/lib/python3.7/site-packages/sqlite_utils/cli.py", line 434, in insert File "/app/.local/lib/python3.7/site-packages/sqlite_utils/cli.py", line 384, in insert_upsert_implementation File "/app/.local/lib/python3.7/site-packages/sqlite_utils/db.py", line 997, in insert_all File "/app/.local/lib/python3.7/site-packages/sqlite_utils/db.py", line 618, in create File "/app/.local/lib/python3.7/site-packages/sqlite_utils/db.py", line 310, in create_table sqlite3.OperationalError: unrecognized token: "]" entsoe_2016.csv renamed to txt for uploading compatibility code is remixed directly from your https://glitch.com/edit/#!/datasette-csvs repo |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/86/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 497171390 | MDU6SXNzdWU0OTcxNzEzOTA= | 577 | Utility mechanism for plugins to render templates | simonw 9599 | closed | 0 | Datasette 1.0 3268330 | 7 | 2019-09-23T15:30:36Z | 2020-02-04T20:26:20Z | 2020-02-04T20:26:19Z | OWNER | Sometimes a plugin will need to render a template for some custom UI. We need a documented API for doing this, which ensures that everything will work correctly if you extend base.html etc. See also #576. This could be a |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/577/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||

| 289425975 | MDExOlB1bGxSZXF1ZXN0MTYzNTYxODMw | 181 | add "format sql" button to query page, uses sql-formatter | bsmithgall 1957344 | closed | 0 | 7 | 2018-01-17T21:50:04Z | 2019-11-11T03:08:25Z | 2019-11-11T03:08:25Z | NONE | simonw/datasette/pulls/181 | Cool project! This fixes #136 using the suggested sql formatter library. I included the minified version in the bundle and added the relevant scripts to the codemirror includes instead of adding new files, though I could also add new files. I wanted to keep it all together, since the result of the format needs access to the editor in order to properly update the codemirror instance. |

datasette 107914493 | pull | {

"url": "https://api.github.com/repos/simonw/datasette/issues/181/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

0 | |||||

| 327365110 | MDU6SXNzdWUzMjczNjUxMTA= | 294 | inspect should record column types | simonw 9599 | open | 0 | 7 | 2018-05-29T15:10:41Z | 2019-06-28T16:45:28Z | OWNER | For each table we want to know the columns, their order and what type they are. I'm going to break with SQLite defaults a little on this one and allow datasette to define additional types - to start with just a Possible JSON design: Refs #276 |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/294/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

||||||||

| 309033998 | MDU6SXNzdWUzMDkwMzM5OTg= | 187 | Windows installation error | robmarkcole 11855322 | closed | 0 | 7 | 2018-03-27T16:04:37Z | 2019-06-15T21:44:23Z | 2019-06-15T21:44:23Z | NONE | On attempting install on a Win 7 PC with py 3.6.2 (Anaconda dist) I get the error:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/187/reactions",

"total_count": 4,

"+1": 4,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 432636432 | MDU6SXNzdWU0MzI2MzY0MzI= | 429 | ?_where=sql-fragment parameter for table views | simonw 9599 | closed | 0 | 7 | 2019-04-12T15:58:51Z | 2019-04-15T10:48:01Z | 2019-04-13T01:37:25Z | OWNER | Only available if arbitrary SQL is enabled (the default).

Allows any table (or view) page to have arbitrary additional This would be extremely useful for building JavaScript applications against the Datasette API that only need one extra tiny bit of SQL but still want to benefit from other table view features like faceting. Would be nice if this could take |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/429/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 413868452 | MDU6SXNzdWU0MTM4Njg0NTI= | 17 | Improve and document foreign_keys=... argument to insert/create/etc | simonw 9599 | closed | 0 | 7 | 2019-02-24T21:09:11Z | 2019-02-24T23:45:48Z | 2019-02-24T23:45:48Z | OWNER | The It is not yet documented. It also requires you to specify the SQLite type of each column, even though this can be detected by introspecting the referenced table: Relates to #2 |

sqlite-utils 140912432 | issue | {

"url": "https://api.github.com/repos/simonw/sqlite-utils/issues/17/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 336465018 | MDU6SXNzdWUzMzY0NjUwMTg= | 329 | Travis should push tagged images to Docker Hub for each release | simonw 9599 | closed | 0 | 7 | 2018-06-28T04:01:31Z | 2018-11-05T06:54:10Z | 2018-11-05T06:53:28Z | OWNER | datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/329/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | |||||||

| 273127694 | MDU6SXNzdWUyNzMxMjc2OTQ= | 57 | Ship a Docker image of the whole thing | simonw 9599 | closed | 0 | 7 | 2017-11-11T07:51:28Z | 2018-06-28T04:01:51Z | 2018-06-28T04:01:38Z | OWNER | The generated Docker images can then just inherit from that. This will speed up deploys as no need to

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/57/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

| 269731374 | MDU6SXNzdWUyNjk3MzEzNzQ= | 44 | ?_group_count=country - return counts by specific column(s) | simonw 9599 | closed | 0 | 7 | 2017-10-30T19:50:32Z | 2018-04-26T15:09:58Z | 2018-04-26T15:09:58Z | OWNER | Imagine if this: Turned into this: This would involve introducing a new precedent of query string arguments that start with an _ having special meanings. While we're at it, could try adding _fields=x,y,z Tasks:

|

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/44/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | ||||||

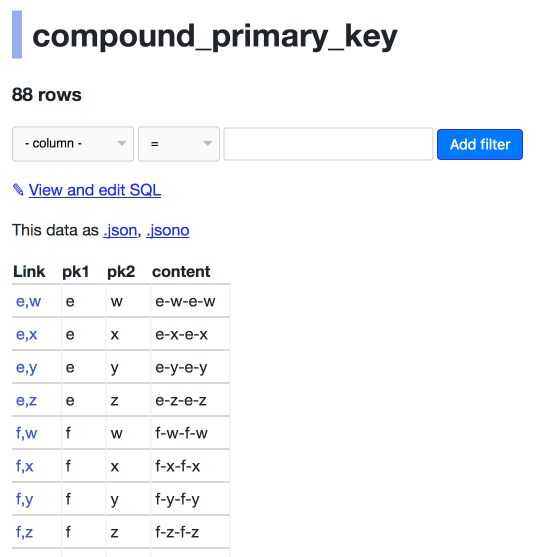

| 309558826 | MDU6SXNzdWUzMDk1NTg4MjY= | 190 | Keyset pagination doesn't work correctly for compound primary keys | simonw 9599 | closed | 0 | 7 | 2018-03-28T22:45:06Z | 2018-03-30T06:31:15Z | 2018-03-30T06:26:28Z | OWNER | Consider https://datasette-issue-190-compound-pks.now.sh/compound-pks-9aafe8f/compound_primary_key

The next= link is to But that page starts with:

The next key in the sequence should be |

datasette 107914493 | issue | {

"url": "https://api.github.com/repos/simonw/datasette/issues/190/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed |

CREATE TABLE [issues] (
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [number] INTEGER,
   [title] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [state] TEXT,
   [locked] INTEGER,
   [assignee] INTEGER REFERENCES [users]([id]),
   [milestone] INTEGER REFERENCES [milestones]([id]),
   [comments] INTEGER,
   [created_at] TEXT,
   [updated_at] TEXT,
   [closed_at] TEXT,
   [author_association] TEXT,
   [pull_request] TEXT,
   [body] TEXT,
   [repo] INTEGER REFERENCES [repos]([id]),
   [type] TEXT,
   [active_lock_reason] TEXT,
   [performed_via_github_app] TEXT,
   [reactions] TEXT,
   [draft] INTEGER,
   [state_reason] TEXT
);

CREATE INDEX [idx_issues_repo]
   ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]
   ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]
   ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]
   ON [issues] ([user]);
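
The filter behind this listing (comments = 7, sorted by updated_at descending) can likely be reproduced against a local copy of the table. The following is a minimal sketch, not the way Datasette itself builds the page. It assumes an in-memory SQLite database with only a subset of the columns above, two rows copied from the listing, and one hypothetical row (id 1) added purely to show the filter excluding it. The `reactions_json` helper is also hypothetical. Because `reactions` is stored as TEXT holding JSON, it is decoded with `json.loads` after the query.

```python
import json
import sqlite3

# In-memory database with a trimmed-down issues table
# (assumption: only the columns this sketch needs).
conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row
conn.execute(
    """
    CREATE TABLE issues (
        id INTEGER PRIMARY KEY,
        number INTEGER,
        title TEXT,
        comments INTEGER,
        updated_at TEXT,
        reactions TEXT
    )
    """
)


def reactions_json(total_count, plus_one=0):
    """Hypothetical helper: build a reduced reactions payload as JSON text."""
    return json.dumps({"total_count": total_count, "+1": plus_one})


sample_rows = [
    # Two rows taken from the listing above.
    (309033998, 187, "Windows installation error", 7,
     "2019-06-15T21:44:23Z", reactions_json(4, 4)),
    (432636432, 429, "?_where=sql-fragment parameter for table views", 7,
     "2019-04-15T10:48:01Z", reactions_json(0)),
    # Hypothetical row, not in the source data: it has 3 comments,
    # so the filter below should exclude it.
    (1, 1, "Hypothetical issue with fewer comments", 3,
     "2021-01-01T00:00:00Z", reactions_json(0)),
]
conn.executemany("INSERT INTO issues VALUES (?, ?, ?, ?, ?, ?)", sample_rows)

# ISO 8601 timestamps in one consistent format sort chronologically
# when compared as plain text, so ORDER BY on the TEXT column works.
rows = conn.execute(
    "SELECT number, title, updated_at, reactions "
    "FROM issues WHERE comments = ? ORDER BY updated_at DESC",
    (7,),
).fetchall()

# Decode the JSON stored in the reactions TEXT column.
reaction_totals = {
    row["number"]: json.loads(row["reactions"])["total_count"] for row in rows
}

for row in rows:
    print(row["number"], row["updated_at"], row["title"],
          reaction_totals[row["number"]])
```

None of the indexes listed above covers `comments` or `updated_at`, so on the real table this query would presumably scan every row. That is usually acceptable for a table of this size.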