issue_comments

10,495 rows sorted by updated_at descending

This data as json, CSV (advanced)

issue >30

- Show column metadata plus links for foreign keys on arbitrary query results 50

- Redesign default .json format 48

- Rethink how .ext formats (v.s. ?_format=) works before 1.0 48

- JavaScript plugin hooks mechanism similar to pluggy 47

- Updated Dockerfile with SpatiaLite version 5.0 45

- Complete refactor of TableView and table.html template 45

- Port Datasette to ASGI 42

- Authentication (and permissions) as a core concept 40

- Deploy a live instance of demos/apache-proxy 34

- await datasette.client.get(path) mechanism for executing internal requests 33

- Maintain an in-memory SQLite table of connected databases and their tables 32

- Ability to sort (and paginate) by column 31

- link_or_copy_directory() error - Invalid cross-device link 28

- Export to CSV 27

- base_url configuration setting 27

- Documentation with recommendations on running Datasette in production without using Docker 27

- Optimize all those calls to index_list and foreign_key_list 27

- Support cross-database joins 26

- Ability for a canned query to write to the database 26

- table.transform() method for advanced alter table 26

- New pattern for views that return either JSON or HTML, available for plugins 26

- Proof of concept for Datasette on AWS Lambda with EFS 25

- WIP: Add Gmail takeout mbox import 25

- Redesign register_output_renderer callback 24

- Make it easier to insert geometries, with documentation and maybe code 24

- "datasette insert" command and plugin hook 23

- Datasette Plugins 22

- .json and .csv exports fail to apply base_url 22

- Idea: import CSV to memory, run SQL, export in a single command 22

- Plugin hook for dynamic metadata 22

- …

| id | html_url | issue_url | node_id | user | created_at | updated_at ▲ | author_association | body | reactions | issue | performed_via_github_app |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1110219185 | https://github.com/simonw/datasette/issues/1715#issuecomment-1110219185 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CLJmx | simonw 9599 | 2022-04-26T20:28:40Z | 2022-04-26T20:56:48Z | OWNER | The refactor I did in #1719 pretty much clashes with all of the changes in https://github.com/simonw/datasette/commit/5053f1ea83194ecb0a5693ad5dada5b25bf0f7e6 so I'll probably need to start my Using a new |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1110239536 | https://github.com/simonw/datasette/issues/1715#issuecomment-1110239536 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CLOkw | simonw 9599 | 2022-04-26T20:54:53Z | 2022-04-26T20:54:53Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1110238896 | https://github.com/simonw/datasette/issues/1715#issuecomment-1110238896 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CLOaw | simonw 9599 | 2022-04-26T20:53:59Z | 2022-04-26T20:53:59Z | OWNER | I'm going to rename |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1110229319 | https://github.com/simonw/datasette/issues/1715#issuecomment-1110229319 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CLMFH | simonw 9599 | 2022-04-26T20:41:32Z | 2022-04-26T20:44:38Z | OWNER | This time I'm not going to bother with the Most importantly: I want that |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1110212021 | https://github.com/simonw/datasette/issues/1720#issuecomment-1110212021 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CLH21 | simonw 9599 | 2022-04-26T20:20:27Z | 2022-04-26T20:20:27Z | OWNER | Closing this because I have a good enough idea of the design for now - the details of the parameters can be figured out when I implement this. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109309683 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109309683 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHrjz | simonw 9599 | 2022-04-26T04:12:39Z | 2022-04-26T04:12:39Z | OWNER | I think the rough shape of the three plugin hooks is right. The detailed decisions that are needed concern what the parameters should be, which I think will mainly happen as part of:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109306070 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109306070 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHqrW | simonw 9599 | 2022-04-26T04:05:20Z | 2022-04-26T04:05:20Z | OWNER | The proposed plugin for annotations - allowing users to attach comments to database tables, columns and rows - would be a great application for all three of those |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109305184 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109305184 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHqdg | simonw 9599 | 2022-04-26T04:03:35Z | 2022-04-26T04:03:35Z | OWNER | I bet there's all kinds of interesting potential extras that could be calculated by loading the results of the query into a Pandas DataFrame. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109200774 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109200774 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHQ-G | simonw 9599 | 2022-04-26T01:25:43Z | 2022-04-26T01:26:15Z | OWNER | Had a thought: if a custom HTML template is going to make use of stuff generated using these extras, it will need a way to tell Datasette to execute those extras even in the absence of the Is that necessary? Or should those kinds of plugins use the existing Or maybe the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109200335 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109200335 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHQ3P | simonw 9599 | 2022-04-26T01:24:47Z | 2022-04-26T01:24:47Z | OWNER | Sketching out a ```python from datasette import hookimpl @hookimpl def register_table_extras(datasette): return [statistics] async def statistics(datasette, query, columns, sql): # ... need to figure out which columns are integer/floats # then build and execute a SQL query that calculates sum/avg/etc for each column ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109190401 | https://github.com/simonw/sqlite-utils/issues/428#issuecomment-1109190401 | https://api.github.com/repos/simonw/sqlite-utils/issues/428 | IC_kwDOCGYnMM5CHOcB | simonw 9599 | 2022-04-26T01:05:29Z | 2022-04-26T01:05:29Z | OWNER | Django makes extensive use of savepoints for nested transactions: https://docs.djangoproject.com/en/4.0/topics/db/transactions/#savepoints |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Research adding support for savepoints 1215216249 | |

| 1109174715 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109174715 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHKm7 | simonw 9599 | 2022-04-26T00:40:13Z | 2022-04-26T00:43:33Z | OWNER | Some of the things I'd like to use

Looking at https://github-to-sqlite.dogsheep.net/github/commits.json?_labels=on&_shape=objects for inspiration. I think there's a separate potential mechanism in the future that lets you add custom columns to a table. This would affect |

{

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109171871 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109171871 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHJ6f | simonw 9599 | 2022-04-26T00:34:48Z | 2022-04-26T00:34:48Z | OWNER | Let's try sketching out a The first idea I came up with suggests adding new fields to the individual row records that come back - my mental model for extras so far has been that they add new keys to the root object. So if a table result looked like this:

Here's a plugin idea I came up with that would probably justify adding to the individual row objects instead:

This could also work by adding a I think I need some better plugin concepts before committing to this new hook. There's overlap between this and how I want the enrichments mechanism (see here) to work. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109165411 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109165411 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHIVj | simonw 9599 | 2022-04-26T00:22:42Z | 2022-04-26T00:22:42Z | OWNER | Passing |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109164803 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109164803 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHIMD | simonw 9599 | 2022-04-26T00:21:40Z | 2022-04-26T00:21:40Z | OWNER | What would the existing https://latest.datasette.io/fixtures/simple_primary_key/1.json?_extras=foreign_key_tables feature look like if it was re-imagined as a Rough sketch, copying most of the code from https://github.com/simonw/datasette/blob/579f59dcec43a91dd7d404e00b87a00afd8515f2/datasette/views/row.py#L98 ```python from datasette import hookimpl @hookimpl def register_row_extras(datasette): return [foreign_key_tables] async def foreign_key_tables(datasette, database, table, pk_values): if len(pk_values) != 1: return [] db = datasette.get_database(database) all_foreign_keys = await db.get_all_foreign_keys() foreign_keys = all_foreign_keys[table]["incoming"] if len(foreign_keys) == 0: return [] ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109162123 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109162123 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHHiL | simonw 9599 | 2022-04-26T00:16:42Z | 2022-04-26T00:16:51Z | OWNER | Actually I'm going to imitate the existing

So I'm going to call the new hooks:

They'll return a list of |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109160226 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109160226 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHHEi | simonw 9599 | 2022-04-26T00:14:11Z | 2022-04-26T00:14:11Z | OWNER | There are four existing plugin hooks that include the word "extra" but use it to mean something else - to mean additional CSS/JS/variables to be injected into the page:

I think |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109159307 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109159307 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHG2L | simonw 9599 | 2022-04-26T00:12:28Z | 2022-04-26T00:12:28Z | OWNER | I'm going to keep table and row separate. So I think I need to add three new plugin hooks:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1109158903 | https://github.com/simonw/datasette/issues/1720#issuecomment-1109158903 | https://api.github.com/repos/simonw/datasette/issues/1720 | IC_kwDOBm6k_c5CHGv3 | simonw 9599 | 2022-04-26T00:11:42Z | 2022-04-26T00:11:42Z | OWNER | Places this plugin hook (or hooks?) should be able to affect:

I'm going to combine those last two, which means there are three places. But maybe I can combine the table one and the row one as well? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Design plugin hook for extras 1215174094 | |

| 1108907238 | https://github.com/simonw/datasette/issues/1719#issuecomment-1108907238 | https://api.github.com/repos/simonw/datasette/issues/1719 | IC_kwDOBm6k_c5CGJTm | simonw 9599 | 2022-04-25T18:34:21Z | 2022-04-25T18:34:21Z | OWNER | Well this refactor turned out to be pretty quick and really does greatly simplify both the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor `RowView` and remove `RowTableShared` 1214859703 | |

| 1108890170 | https://github.com/simonw/datasette/issues/262#issuecomment-1108890170 | https://api.github.com/repos/simonw/datasette/issues/262 | IC_kwDOBm6k_c5CGFI6 | simonw 9599 | 2022-04-25T18:17:09Z | 2022-04-25T18:18:39Z | OWNER | I spotted in https://github.com/simonw/datasette/issues/1719#issuecomment-1108888494 that there's actually already an undocumented implementation of I added that feature all the way back in November 2017! https://github.com/simonw/datasette/commit/a30c5b220c15360d575e94b0e67f3255e120b916 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add ?_extra= mechanism for requesting extra properties in JSON 323658641 | |

| 1108888494 | https://github.com/simonw/datasette/issues/1719#issuecomment-1108888494 | https://api.github.com/repos/simonw/datasette/issues/1719 | IC_kwDOBm6k_c5CGEuu | simonw 9599 | 2022-04-25T18:15:42Z | 2022-04-25T18:15:42Z | OWNER | Here's an undocumented feature I forgot existed: https://latest.datasette.io/fixtures/simple_primary_key/1.json?_extras=foreign_key_tables

It's even covered by the tests: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor `RowView` and remove `RowTableShared` 1214859703 | |

| 1108884171 | https://github.com/simonw/datasette/issues/1719#issuecomment-1108884171 | https://api.github.com/repos/simonw/datasette/issues/1719 | IC_kwDOBm6k_c5CGDrL | simonw 9599 | 2022-04-25T18:10:46Z | 2022-04-25T18:12:45Z | OWNER | It looks like the only class method from that shared class needed by Which I've been wanting to refactor to provide to

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor `RowView` and remove `RowTableShared` 1214859703 | |

| 1108875068 | https://github.com/simonw/datasette/issues/1715#issuecomment-1108875068 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CGBc8 | simonw 9599 | 2022-04-25T18:03:13Z | 2022-04-25T18:06:33Z | OWNER | The I'm going to split the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1108877454 | https://github.com/simonw/datasette/issues/1715#issuecomment-1108877454 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5CGCCO | simonw 9599 | 2022-04-25T18:04:27Z | 2022-04-25T18:04:27Z | OWNER | Pushed my WIP on this to the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1107873311 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107873311 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCM4f | simonw 9599 | 2022-04-24T16:24:14Z | 2022-04-24T16:24:14Z | OWNER | Wrote up what I learned in a TIL: https://til.simonwillison.net/sphinx/blacken-docs |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107873271 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107873271 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCM33 | simonw 9599 | 2022-04-24T16:23:57Z | 2022-04-24T16:23:57Z | OWNER | Turns out I didn't need that Submitted a documentation PR to that project instead: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107870788 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107870788 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCMRE | simonw 9599 | 2022-04-24T16:09:23Z | 2022-04-24T16:09:23Z | OWNER | One more attempt at testing the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107869884 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107869884 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCMC8 | simonw 9599 | 2022-04-24T16:04:03Z | 2022-04-24T16:04:03Z | OWNER | OK, I'm expecting this one to fail at the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107869556 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107869556 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCL90 | simonw 9599 | 2022-04-24T16:02:27Z | 2022-04-24T16:02:27Z | OWNER | Looking at that first error it appears to be a place where I had deliberately omitted the body of the function: I can use Fixing those warnings actually helped me spot a couple of bugs, so I'm glad this happened. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107868585 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107868585 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCLup | simonw 9599 | 2022-04-24T15:57:10Z | 2022-04-24T15:57:19Z | OWNER | The tests failed there because of what I thought were warnings but turn out to be treated as errors:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107867281 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107867281 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCLaR | simonw 9599 | 2022-04-24T15:49:23Z | 2022-04-24T15:49:23Z | OWNER | I'm going to push the first commit with a deliberate missing formatting to check that the tests fail. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107866013 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107866013 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCLGd | simonw 9599 | 2022-04-24T15:42:07Z | 2022-04-24T15:42:07Z | OWNER | In the absence of |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107865493 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107865493 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCK-V | simonw 9599 | 2022-04-24T15:39:02Z | 2022-04-24T15:39:02Z | OWNER | There's no |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107863924 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107863924 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCKl0 | simonw 9599 | 2022-04-24T15:30:03Z | 2022-04-24T15:30:03Z | OWNER | On the one hand, I'm not crazy about some of the indentation decisions Black made here - in particular this one, which I had indented deliberately for readability:

Also: I've been mentally trying to keep the line lengths a bit shorter to help them be more readable on mobile devices. I'll try a different line length using I like this more - here's the result for that example:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107863365 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107863365 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCKdF | simonw 9599 | 2022-04-24T15:26:41Z | 2022-04-24T15:26:41Z | OWNER | Tried this:

Note that you need to pass @@ -412,12 +408,16 @@ To include an expiry, add a

The resulting cookie will encode data that looks something like this: diff --git a/docs/spatialite.rst b/docs/spatialite.rst index d1b300b..556bad8 100644 --- a/docs/spatialite.rst +++ b/docs/spatialite.rst @@ -58,19 +58,22 @@ Here's a recipe for taking a table with existing latitude and longitude columns, .. code-block:: python

Querying polygons using within()

diff --git a/docs/writing_plugins.rst b/docs/writing_plugins.rst

index bd60a4b..5af01f6 100644

--- a/docs/writing_plugins.rst

+++ b/docs/writing_plugins.rst

@@ -18,9 +18,10 @@ The quickest way to start writing a plugin is to create a + @hookimpl def prepare_connection(conn): - conn.create_function('hello_world', 0, lambda: 'Hello world!') + conn.create_function("hello_world", 0, lambda: "Hello world!") If you save this in @@ -60,22 +61,18 @@ The example consists of two files: a

And a Python module file,

Having built a plugin in this way you can turn it into an installable package using the following command:: @@ -123,11 +120,13 @@ To bundle the static assets for a plugin in the package that you publish to PyPI .. code-block:: python

Where @@ -152,11 +151,13 @@ Templates should be bundled for distribution using the same .. code-block:: python

You can also use wildcards here such as |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | |

| 1107862882 | https://github.com/simonw/datasette/issues/1718#issuecomment-1107862882 | https://api.github.com/repos/simonw/datasette/issues/1718 | IC_kwDOBm6k_c5CCKVi | simonw 9599 | 2022-04-24T15:23:56Z | 2022-04-24T15:23:56Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Code examples in the documentation should be formatted with Black 1213683988 | ||

| 1107848097 | https://github.com/simonw/datasette/pull/1717#issuecomment-1107848097 | https://api.github.com/repos/simonw/datasette/issues/1717 | IC_kwDOBm6k_c5CCGuh | simonw 9599 | 2022-04-24T14:02:37Z | 2022-04-24T14:02:37Z | OWNER | This is a neat feature, thanks! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add timeout option to Cloudrun build 1213281044 | |

| 1107459446 | https://github.com/simonw/datasette/pull/1717#issuecomment-1107459446 | https://api.github.com/repos/simonw/datasette/issues/1717 | IC_kwDOBm6k_c5CAn12 | codecov[bot] 22429695 | 2022-04-23T11:56:36Z | 2022-04-23T11:56:36Z | NONE | Codecov Report

```diff @@ Coverage Diff @@ main #1717 +/-=======================================

Coverage 91.75% 91.75% | Impacted Files | Coverage Δ | |

|---|---|---|

| datasette/publish/cloudrun.py | Continue to review full report at Codecov.

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add timeout option to Cloudrun build 1213281044 | |

| 1106989581 | https://github.com/simonw/datasette/issues/1715#issuecomment-1106989581 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5B-1IN | simonw 9599 | 2022-04-22T23:03:29Z | 2022-04-22T23:03:29Z | OWNER | I'm having second thoughts about injecting |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1106947168 | https://github.com/simonw/datasette/issues/1715#issuecomment-1106947168 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5B-qxg | simonw 9599 | 2022-04-22T22:25:57Z | 2022-04-22T22:26:06Z | OWNER | ```python async def database(request: Request, datasette: Datasette) -> Database: database_route = tilde_decode(request.url_vars["database"]) try: return datasette.get_database(route=database_route) except KeyError: raise NotFound("Database not found: {}".format(database_route)) async def table_name(request: Request) -> str: return tilde_decode(request.url_vars["table"]) ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1106945876 | https://github.com/simonw/datasette/issues/1715#issuecomment-1106945876 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5B-qdU | simonw 9599 | 2022-04-22T22:24:29Z | 2022-04-22T22:24:29Z | OWNER | Looking at the start of I'm going to resolve |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1106923258 | https://github.com/simonw/datasette/issues/1716#issuecomment-1106923258 | https://api.github.com/repos/simonw/datasette/issues/1716 | IC_kwDOBm6k_c5B-k76 | simonw 9599 | 2022-04-22T22:02:07Z | 2022-04-22T22:02:07Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Configure git blame to ignore Black commit 1212838949 | ||

| 1106908642 | https://github.com/simonw/datasette/issues/1715#issuecomment-1106908642 | https://api.github.com/repos/simonw/datasette/issues/1715 | IC_kwDOBm6k_c5B-hXi | simonw 9599 | 2022-04-22T21:47:55Z | 2022-04-22T21:47:55Z | OWNER | I need a Something like this perhaps:

One thing I could do: break out is the code that turns a request into a list of pairs extracted from the request - this code here: https://github.com/simonw/datasette/blob/8338c66a57502ef27c3d7afb2527fbc0663b2570/datasette/views/table.py#L442-L449 I could turn that into a typed dependency injection function like this:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Refactor TableView to use asyncinject 1212823665 | |

| 1105642187 | https://github.com/simonw/datasette/issues/1101#issuecomment-1105642187 | https://api.github.com/repos/simonw/datasette/issues/1101 | IC_kwDOBm6k_c5B5sLL | eyeseast 25778 | 2022-04-21T18:59:08Z | 2022-04-21T18:59:08Z | CONTRIBUTOR | Ha! That was your idea (and a good one). But it's probably worth measuring to see what overhead it adds. It did require both passing in the database and making the whole thing Just timing the queries themselves:

Looking at the network panel:

I'm not sure how best to time the GeoJSON generation, but it would be interesting to check. Maybe I'll write a plugin to add query times to response headers. The other thing to consider with async streaming is that it might be well-suited for a slower response. When I have to get the whole result and send a response in a fixed amount of time, I need the most efficient query possible. If I can hang onto a connection and get things one chunk at a time, maybe it's ok if there's some overhead. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

register_output_renderer() should support streaming data 749283032 | |

| 1105615625 | https://github.com/simonw/datasette/issues/1101#issuecomment-1105615625 | https://api.github.com/repos/simonw/datasette/issues/1101 | IC_kwDOBm6k_c5B5lsJ | simonw 9599 | 2022-04-21T18:31:41Z | 2022-04-21T18:32:22Z | OWNER | The ```python

My PostgreSQL/MySQL engineering brain says that this would be better handled by doing a chunk of these (maybe 100) at once, to avoid the per-query-overhead - but with SQLite that might not be necessary. At any rate, this is one of the reasons I'm interested in "iterate over this sequence of chunks of 100 rows at a time" as a potential option here. Of course, a better solution would be for |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

register_output_renderer() should support streaming data 749283032 | |

| 1105608964 | https://github.com/simonw/datasette/issues/1101#issuecomment-1105608964 | https://api.github.com/repos/simonw/datasette/issues/1101 | IC_kwDOBm6k_c5B5kEE | simonw 9599 | 2022-04-21T18:26:29Z | 2022-04-21T18:26:29Z | OWNER | I'm questioning if the mechanisms should be separate at all now - a single response rendering is really just a case of a streaming response that only pulls the first N records from the iterator. It probably needs to be an This actually gets a fair bit more complicated due to the work I'm doing right now to improve the default JSON API:

I want to do things like make faceting results optionally available to custom renderers - which is a separate concern from streaming rows. I'm going to poke around with a bunch of prototypes and see what sticks. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

register_output_renderer() should support streaming data 749283032 | |

| 1105588651 | https://github.com/simonw/datasette/issues/1101#issuecomment-1105588651 | https://api.github.com/repos/simonw/datasette/issues/1101 | IC_kwDOBm6k_c5B5fGr | eyeseast 25778 | 2022-04-21T18:15:39Z | 2022-04-21T18:15:39Z | CONTRIBUTOR | What if you split rendering and streaming into two things:

That way current plugins still work, and streaming is purely additive. A |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

register_output_renderer() should support streaming data 749283032 | |

| 1105571003 | https://github.com/simonw/datasette/issues/1101#issuecomment-1105571003 | https://api.github.com/repos/simonw/datasette/issues/1101 | IC_kwDOBm6k_c5B5ay7 | simonw 9599 | 2022-04-21T18:10:38Z | 2022-04-21T18:10:46Z | OWNER | Maybe the simplest design for this is to add an optional

Or it could use the existing |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

register_output_renderer() should support streaming data 749283032 | |

| 1105474232 | https://github.com/dogsheep/github-to-sqlite/issues/72#issuecomment-1105474232 | https://api.github.com/repos/dogsheep/github-to-sqlite/issues/72 | IC_kwDODFdgUs5B5DK4 | simonw 9599 | 2022-04-21T17:02:15Z | 2022-04-21T17:02:15Z | MEMBER | That's interesting - yeah it looks like the number of pages can be derived from the https://docs.github.com/en/rest/guides/traversing-with-pagination |

{

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

feature: display progress bar when downloading multi-page responses 1211283427 | |

| 1105464661 | https://github.com/simonw/datasette/pull/1574#issuecomment-1105464661 | https://api.github.com/repos/simonw/datasette/issues/1574 | IC_kwDOBm6k_c5B5A1V | dholth 208018 | 2022-04-21T16:51:24Z | 2022-04-21T16:51:24Z | NONE | tfw you have more ephemeral storage than upstream bandwidth ``` FROM python:3.10-slim AS base RUN apt update && apt -y install zstd ENV DATASETTE_SECRET 'sosecret' RUN --mount=type=cache,target=/root/.cache/pip pip install -U datasette datasette-pretty-json datasette-graphql ENV PORT 8080 EXPOSE 8080 FROM base AS pack COPY . /app WORKDIR /app RUN datasette inspect --inspect-file inspect-data.json RUN zstd --rm *.db FROM base AS unpack COPY --from=pack /app /app WORKDIR /app CMD ["/bin/bash", "-c", "shopt -s nullglob && zstd --rm -d .db.zst && datasette serve --host 0.0.0.0 --cors --inspect-file inspect-data.json --metadata metadata.json --create --port $PORT .db"] ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

introduce new option for datasette package to use a slim base image 1084193403 | |

| 1103312860 | https://github.com/simonw/datasette/issues/1713#issuecomment-1103312860 | https://api.github.com/repos/simonw/datasette/issues/1713 | IC_kwDOBm6k_c5Bwzfc | fgregg 536941 | 2022-04-20T00:52:19Z | 2022-04-20T00:52:19Z | CONTRIBUTOR | feels related to #1402 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Datasette feature for publishing snapshots of query results 1203943272 | |

| 1101594549 | https://github.com/simonw/sqlite-utils/issues/425#issuecomment-1101594549 | https://api.github.com/repos/simonw/sqlite-utils/issues/425 | IC_kwDOCGYnMM5BqP-1 | simonw 9599 | 2022-04-18T17:36:14Z | 2022-04-18T17:36:14Z | OWNER | Releated: - #408 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

`sqlite3.NotSupportedError`: deterministic=True requires SQLite 3.8.3 or higher 1203842656 | |

| 1100243987 | https://github.com/simonw/datasette/pull/1159#issuecomment-1100243987 | https://api.github.com/repos/simonw/datasette/issues/1159 | IC_kwDOBm6k_c5BlGQT | lovasoa 552629 | 2022-04-15T17:24:43Z | 2022-04-15T17:24:43Z | NONE | @simonw : do you think this could be merged ? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Improve the display of facets information 774332247 | |

| 1099540225 | https://github.com/simonw/datasette/issues/1713#issuecomment-1099540225 | https://api.github.com/repos/simonw/datasette/issues/1713 | IC_kwDOBm6k_c5BiacB | eyeseast 25778 | 2022-04-14T19:09:57Z | 2022-04-14T19:09:57Z | CONTRIBUTOR | I wonder if this overlaps with what I outlined in #1605. You could run something like this:

And maybe that does what you need. Of course, that plugin isn't built yet. But that's the idea. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Datasette feature for publishing snapshots of query results 1203943272 | |

| 1099443468 | https://github.com/simonw/datasette/issues/1713#issuecomment-1099443468 | https://api.github.com/repos/simonw/datasette/issues/1713 | IC_kwDOBm6k_c5BiC0M | rayvoelker 9308268 | 2022-04-14T17:26:27Z | 2022-04-14T17:26:27Z | NONE | What would be an awesome feature as a plugin would be to be able to save a query (and possibly even results) to a github gist. Being able to share results that way would be super fantastic. Possibly even in Jupyter Notebook format (since github and github gists nicely render those)! I know there's the handy datasette-saved-queries plugin, but a button that could export stuff out and then even possibly import stuff back in (I'm sort of thinking the way that Google Colab allows you to save to github, and then pull the notebook back in is a really great workflow

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Datasette feature for publishing snapshots of query results 1203943272 | |

| 1098628334 | https://github.com/simonw/datasette/issues/1713#issuecomment-1098628334 | https://api.github.com/repos/simonw/datasette/issues/1713 | IC_kwDOBm6k_c5Be7zu | simonw 9599 | 2022-04-14T01:43:00Z | 2022-04-14T01:43:13Z | OWNER | Current workaround for fast publishing to S3: |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Datasette feature for publishing snapshots of query results 1203943272 | |

| 1098548931 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098548931 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5BeobD | simonw 9599 | 2022-04-13T22:41:59Z | 2022-04-13T22:41:59Z | OWNER | I'm going to close this ticket since it looks like this is a bug in the way the Dockerfile builds Python, but I'm going to ship a fix for that issue I found so the |

{

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1098548090 | https://github.com/simonw/sqlite-utils/issues/424#issuecomment-1098548090 | https://api.github.com/repos/simonw/sqlite-utils/issues/424 | IC_kwDOCGYnMM5BeoN6 | simonw 9599 | 2022-04-13T22:40:15Z | 2022-04-13T22:40:15Z | OWNER | New error: ```pycon

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Better error message if you try to create a table with no columns 1200866134 | |

| 1098545390 | https://github.com/simonw/sqlite-utils/issues/425#issuecomment-1098545390 | https://api.github.com/repos/simonw/sqlite-utils/issues/425 | IC_kwDOCGYnMM5Benju | simonw 9599 | 2022-04-13T22:34:52Z | 2022-04-13T22:34:52Z | OWNER | That broke Python 3.7 because it doesn't support

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

`sqlite3.NotSupportedError`: deterministic=True requires SQLite 3.8.3 or higher 1203842656 | |

| 1098537000 | https://github.com/simonw/sqlite-utils/issues/425#issuecomment-1098537000 | https://api.github.com/repos/simonw/sqlite-utils/issues/425 | IC_kwDOCGYnMM5Belgo | simonw 9599 | 2022-04-13T22:18:22Z | 2022-04-13T22:18:22Z | OWNER | I figured out a workaround in https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098535531 The current This alternative implementation worked in the environment where that failed:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

`sqlite3.NotSupportedError`: deterministic=True requires SQLite 3.8.3 or higher 1203842656 | |

| 1098535531 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098535531 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5BelJr | simonw 9599 | 2022-04-13T22:15:48Z | 2022-04-13T22:15:48Z | OWNER | Trying this alternative implementation of the

countries idx_countries_country_name 0 1 country 0 BINARY 1 countries idx_countries_country_name 1 2 name 0 BINARY 1 ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1098532220 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098532220 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5BekV8 | simonw 9599 | 2022-04-13T22:09:52Z | 2022-04-13T22:09:52Z | OWNER | That error is weird - it's not supposed to happen according to this code here: https://github.com/simonw/sqlite-utils/blob/95522ad919f96eb6cc8cd3cd30389b534680c717/sqlite_utils/db.py#L389-L400 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1098531354 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098531354 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5BekIa | simonw 9599 | 2022-04-13T22:08:20Z | 2022-04-13T22:08:20Z | OWNER | OK I figured out what's going on here. First I added an extra Error: near "(": syntax error

Then I checked the version that So the problem here is that the Python in that Docker image is running a very old version of SQLite. I tried using the trick in https://til.simonwillison.net/sqlite/ld-preload as a workaround, and it almost worked:

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1098295517 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098295517 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5Bdqjd | simonw 9599 | 2022-04-13T17:16:20Z | 2022-04-13T17:16:20Z | OWNER | Aha! I was able to replicate the bug using your To build your |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1098288158 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1098288158 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5Bdowe | simonw 9599 | 2022-04-13T17:07:53Z | 2022-04-13T17:07:53Z | OWNER | I can't replicate the bug I'm afraid:

```

% wget "https://github.com/wri/global-power-plant-database/blob/232a6666/output_database/global_power_plant_database.csv?raw=true" % sqlite-utils extract global.db power_plants country country_long \

--table countries \

--fk-column country_id \

--rename country_long name

% sqlite-utils indexes global.db --table countries idx_countries_country_name 0 1 country 0 BINARY 1 countries idx_countries_country_name 1 2 name 0 BINARY 1 ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

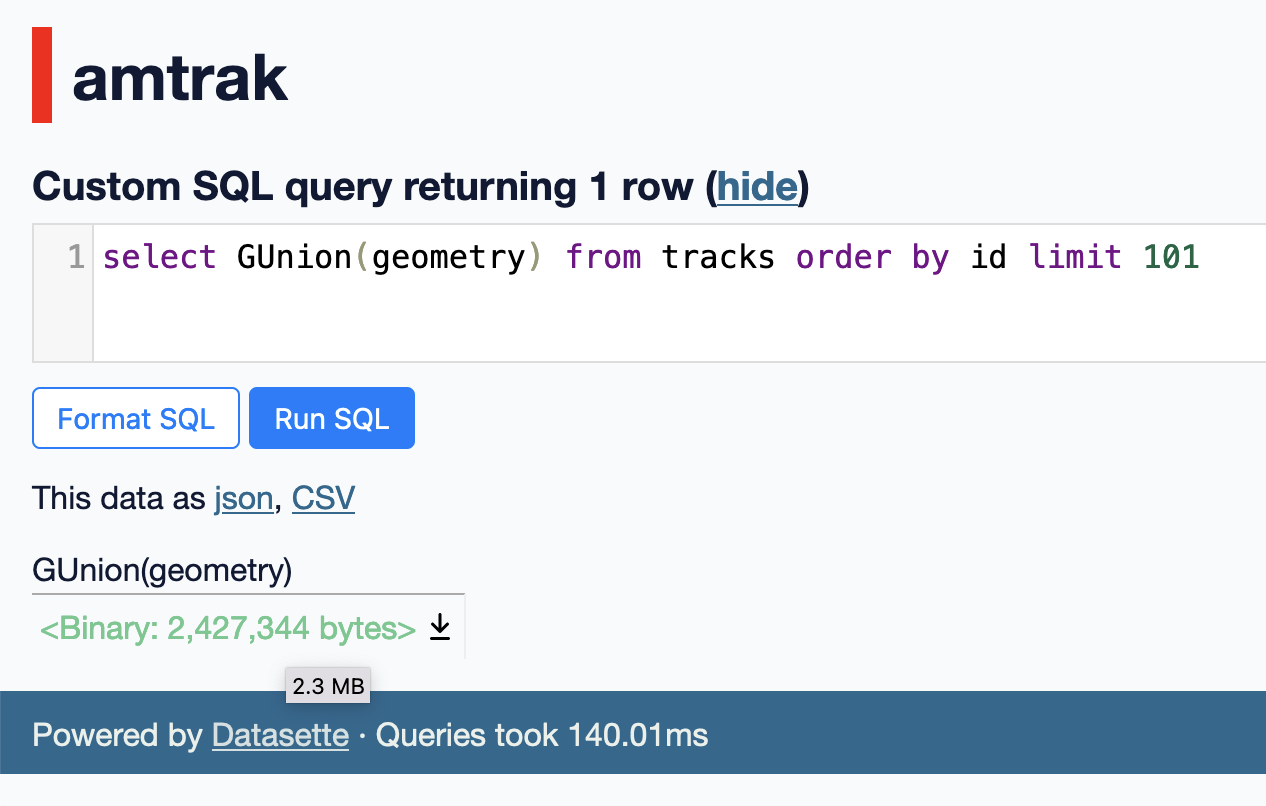

| 1097115034 | https://github.com/simonw/datasette/issues/1712#issuecomment-1097115034 | https://api.github.com/repos/simonw/datasette/issues/1712 | IC_kwDOBm6k_c5BZKWa | simonw 9599 | 2022-04-12T19:12:21Z | 2022-04-12T19:12:21Z | OWNER | Got a TIL out of this too: https://til.simonwillison.net/spatialite/gunion-to-combine-geometries |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Make "<Binary: 2427344 bytes>" easier to read 1202227104 | |

| 1097076622 | https://github.com/simonw/datasette/issues/1712#issuecomment-1097076622 | https://api.github.com/repos/simonw/datasette/issues/1712 | IC_kwDOBm6k_c5BZA-O | simonw 9599 | 2022-04-12T18:42:04Z | 2022-04-12T18:42:04Z | OWNER | I'm not going to show the tooltip if the formatted number is in bytes. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Make "<Binary: 2427344 bytes>" easier to read 1202227104 | |

| 1097068474 | https://github.com/simonw/datasette/issues/1712#issuecomment-1097068474 | https://api.github.com/repos/simonw/datasette/issues/1712 | IC_kwDOBm6k_c5BY--6 | simonw 9599 | 2022-04-12T18:38:18Z | 2022-04-12T18:38:18Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Make "<Binary: 2427344 bytes>" easier to read 1202227104 | |

| 1095687566 | https://github.com/simonw/datasette/issues/1708#issuecomment-1095687566 | https://api.github.com/repos/simonw/datasette/issues/1708 | IC_kwDOBm6k_c5BTt2O | simonw 9599 | 2022-04-11T23:24:30Z | 2022-04-11T23:24:30Z | OWNER | Redesigned template contextWarning: if you use any custom templates with your Datasette instance they are likely to break when you upgrade to 1.0. The template context has been redesigned to be based on the documented JSON API. This means that the template context can be considered stable going forward, so any custom templates you implement should continue to work when you upgrade Datasette in the future. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Datasette 1.0 alpha upcoming release notes 1200649124 | |

| 1095673947 | https://github.com/simonw/datasette/issues/1705#issuecomment-1095673947 | https://api.github.com/repos/simonw/datasette/issues/1705 | IC_kwDOBm6k_c5BTqhb | simonw 9599 | 2022-04-11T23:03:49Z | 2022-04-11T23:03:49Z | OWNER | I'll also encourage testing against both Datasette 0.x and Datasette 1.0 using a GitHub Actions matrix. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

How to upgrade your plugin for 1.0 documentation 1197926598 | |

| 1095673670 | https://github.com/simonw/datasette/issues/1710#issuecomment-1095673670 | https://api.github.com/repos/simonw/datasette/issues/1710 | IC_kwDOBm6k_c5BTqdG | simonw 9599 | 2022-04-11T23:03:25Z | 2022-04-11T23:03:25Z | OWNER | Dupe of: - #1705 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Guide for plugin authors to upgrade their plugins for 1.0 1200649889 | |

| 1095671940 | https://github.com/simonw/datasette/issues/1709#issuecomment-1095671940 | https://api.github.com/repos/simonw/datasette/issues/1709 | IC_kwDOBm6k_c5BTqCE | simonw 9599 | 2022-04-11T23:00:39Z | 2022-04-11T23:01:41Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Redesigned JSON API with ?_extra= parameters 1200649502 | |

| 1095672127 | https://github.com/simonw/datasette/issues/1711#issuecomment-1095672127 | https://api.github.com/repos/simonw/datasette/issues/1711 | IC_kwDOBm6k_c5BTqE_ | simonw 9599 | 2022-04-11T23:00:58Z | 2022-04-11T23:00:58Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Template context powered entirely by the JSON API format 1200650491 | |

| 1095277937 | https://github.com/simonw/datasette/issues/1707#issuecomment-1095277937 | https://api.github.com/repos/simonw/datasette/issues/1707 | IC_kwDOBm6k_c5BSJ1x | simonw 9599 | 2022-04-11T16:32:31Z | 2022-04-11T16:33:00Z | OWNER | That's a really interesting idea! That page is one of the least developed at the moment. There's plenty of room for it to grow new useful features. I like this suggestion because it feels like a good opportunity to introduce some unobtrusive JavaScript. Could use a details/summary element that uses Could even do something with the |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[feature] expanded detail page 1200224939 | |

| 1094453751 | https://github.com/simonw/datasette/issues/1699#issuecomment-1094453751 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BPAn3 | eyeseast 25778 | 2022-04-11T01:32:12Z | 2022-04-11T01:32:12Z | CONTRIBUTOR | Was looking through old issues and realized a bunch of this got discussed in #1101 (including by me!), so sorry to rehash all this. Happy to help with whatever piece of it I can. Would be very excited to be able to use format plugins with exports. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1094152642 | https://github.com/simonw/datasette/issues/1706#issuecomment-1094152642 | https://api.github.com/repos/simonw/datasette/issues/1706 | IC_kwDOBm6k_c5BN3HC | simonw 9599 | 2022-04-10T01:11:54Z | 2022-04-10T01:11:54Z | OWNER | This relates to this much larger vision: - #417 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[feature] immutable mode for a directory, not just individual sqlite file 1198822563 | |

| 1094152173 | https://github.com/simonw/datasette/issues/1706#issuecomment-1094152173 | https://api.github.com/repos/simonw/datasette/issues/1706 | IC_kwDOBm6k_c5BN2_t | simonw 9599 | 2022-04-10T01:08:50Z | 2022-04-10T01:08:50Z | OWNER | This is a good idea - it matches the way |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[feature] immutable mode for a directory, not just individual sqlite file 1198822563 | |

| 1093454899 | https://github.com/simonw/datasette/pull/1693#issuecomment-1093454899 | https://api.github.com/repos/simonw/datasette/issues/1693 | IC_kwDOBm6k_c5BLMwz | simonw 9599 | 2022-04-08T23:07:04Z | 2022-04-08T23:07:04Z | OWNER | Tests failed here due to this issue: - https://github.com/psf/black/pull/2987 A future Black release should fix that. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Bump black from 22.1.0 to 22.3.0 1184850337 | |

| 1092850719 | https://github.com/simonw/datasette/pull/1703#issuecomment-1092850719 | https://api.github.com/repos/simonw/datasette/issues/1703 | IC_kwDOBm6k_c5BI5Qf | codecov[bot] 22429695 | 2022-04-08T13:18:04Z | 2022-04-08T13:18:04Z | NONE | Codecov Report

```diff @@ Coverage Diff @@ main #1703 +/-=======================================

Coverage 91.75% 91.75% Continue to review full report at Codecov.

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Update beautifulsoup4 requirement from <4.11.0,>=4.8.1 to >=4.8.1,<4.12.0 1197298420 | |

| 1092386254 | https://github.com/simonw/datasette/issues/1699#issuecomment-1092386254 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BHH3O | eyeseast 25778 | 2022-04-08T02:39:25Z | 2022-04-08T02:39:25Z | CONTRIBUTOR | And just to think this through a little more, here's what

Alternately, that could be an async generator, like this:

Not sure which makes more sense, but I think this pattern would open up a lot of possibility. If you had your stream_indented_json function, you could do |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1092370880 | https://github.com/simonw/datasette/issues/1699#issuecomment-1092370880 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BHEHA | eyeseast 25778 | 2022-04-08T02:07:40Z | 2022-04-08T02:07:40Z | CONTRIBUTOR | So maybe

And stream gets an iterator, instead of a list of rows, so it can efficiently handle large queries. Maybe it also gets passed a destination stream, or it returns an iterator. I'm not sure what makes more sense. Either way, that might cover both CLI exports and streaming responses. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1092361727 | https://github.com/simonw/datasette/issues/1699#issuecomment-1092361727 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BHB3_ | simonw 9599 | 2022-04-08T01:47:43Z | 2022-04-08T01:47:43Z | OWNER | A render mode for that plugin hook that writes to a stream is exactly what I have in mind: - #1062 |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1092357672 | https://github.com/simonw/datasette/issues/1699#issuecomment-1092357672 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BHA4o | eyeseast 25778 | 2022-04-08T01:39:40Z | 2022-04-08T01:39:40Z | CONTRIBUTOR |

That's my thinking, too. It's really the thing I've been wanting since writing

I think this probably needs either a new plugin hook separate from |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1092321966 | https://github.com/simonw/datasette/issues/1699#issuecomment-1092321966 | https://api.github.com/repos/simonw/datasette/issues/1699 | IC_kwDOBm6k_c5BG4Ku | simonw 9599 | 2022-04-08T00:20:32Z | 2022-04-08T00:20:56Z | OWNER | If we do this I'm keen to have it be more than just an alternative to the existing My best thought on how to differentiate them so far is plugins: if Datasette plugins that provide alternative outputs - like One way that could work: a |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Proposal: datasette query 1193090967 | |

| 1087428593 | https://github.com/simonw/datasette/issues/1549#issuecomment-1087428593 | https://api.github.com/repos/simonw/datasette/issues/1549 | IC_kwDOBm6k_c5A0Nfx | fgregg 536941 | 2022-04-04T11:17:13Z | 2022-04-04T11:17:13Z | CONTRIBUTOR | another way to get the behavior of downloading the file is to use the download attribute of the anchor tag https://developer.mozilla.org/en-US/docs/Web/HTML/Element/a#attr-download |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Redesign CSV export to improve usability 1077620955 | |

| 1086784547 | https://github.com/simonw/datasette/issues/1698#issuecomment-1086784547 | https://api.github.com/repos/simonw/datasette/issues/1698 | IC_kwDOBm6k_c5AxwQj | simonw 9599 | 2022-04-03T06:10:24Z | 2022-04-03T06:10:24Z | OWNER | Warning added here: https://docs.datasette.io/en/latest/publish.html#publishing-to-google-cloud-run |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Add a warning about bots and Cloud Run 1190828163 | |

| 1085323192 | https://github.com/simonw/datasette/issues/1697#issuecomment-1085323192 | https://api.github.com/repos/simonw/datasette/issues/1697 | IC_kwDOBm6k_c5AsLe4 | simonw 9599 | 2022-04-01T02:01:51Z | 2022-04-01T02:01:51Z | OWNER | Huh, turns out |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

`Request.fake(..., url_vars={})` 1189113609 | |

| 1084216224 | https://github.com/simonw/datasette/pull/1574#issuecomment-1084216224 | https://api.github.com/repos/simonw/datasette/issues/1574 | IC_kwDOBm6k_c5An9Og | fs111 33631 | 2022-03-31T07:45:25Z | 2022-03-31T07:45:25Z | NONE | @simonw I like that you want to go "slim by default". Do you want another PR for that or should I just wait? |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

introduce new option for datasette package to use a slim base image 1084193403 | |

| 1083351437 | https://github.com/simonw/datasette/issues/1696#issuecomment-1083351437 | https://api.github.com/repos/simonw/datasette/issues/1696 | IC_kwDOBm6k_c5AkqGN | simonw 9599 | 2022-03-30T16:20:49Z | 2022-03-30T16:21:02Z | OWNER | Maybe like this:

```html 283 rows where dcode = 3 (Human Related: Other)``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Show foreign key label when filtering 1186696202 | |

| 1082663746 | https://github.com/simonw/datasette/issues/1692#issuecomment-1082663746 | https://api.github.com/repos/simonw/datasette/issues/1692 | IC_kwDOBm6k_c5AiCNC | simonw 9599 | 2022-03-30T06:14:39Z | 2022-03-30T06:14:51Z | OWNER | I like your design, though I think it should be I think |

{

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[plugins][feature request]: Support additional script tag attributes when loading custom JS 1182227211 | |

| 1082661795 | https://github.com/simonw/datasette/issues/1692#issuecomment-1082661795 | https://api.github.com/repos/simonw/datasette/issues/1692 | IC_kwDOBm6k_c5AiBuj | simonw 9599 | 2022-03-30T06:11:41Z | 2022-03-30T06:11:41Z | OWNER | This is a good idea. |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[plugins][feature request]: Support additional script tag attributes when loading custom JS 1182227211 | |

| 1082617386 | https://github.com/simonw/datasette/issues/1695#issuecomment-1082617386 | https://api.github.com/repos/simonw/datasette/issues/1695 | IC_kwDOBm6k_c5Ah24q | simonw 9599 | 2022-03-30T04:46:18Z | 2022-03-30T04:46:18Z | OWNER |

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Option to un-filter facet not shown for `?col__exact=value` 1185868354 | |

| 1082617241 | https://github.com/simonw/datasette/issues/1695#issuecomment-1082617241 | https://api.github.com/repos/simonw/datasette/issues/1695 | IC_kwDOBm6k_c5Ah22Z | simonw 9599 | 2022-03-30T04:45:55Z | 2022-03-30T04:45:55Z | OWNER | {

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Option to un-filter facet not shown for `?col__exact=value` 1185868354 | ||

| 1082476727 | https://github.com/simonw/sqlite-utils/issues/420#issuecomment-1082476727 | https://api.github.com/repos/simonw/sqlite-utils/issues/420 | IC_kwDOCGYnMM5AhUi3 | strada 770231 | 2022-03-29T23:52:38Z | 2022-03-29T23:52:38Z | NONE | @simonw Thanks for looking into it and documenting the solution! |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Document how to use a `--convert` function that runs initialization code first 1178546862 | |

| 1081860312 | https://github.com/simonw/datasette/pull/1694#issuecomment-1081860312 | https://api.github.com/repos/simonw/datasette/issues/1694 | IC_kwDOBm6k_c5Ae-DY | codecov[bot] 22429695 | 2022-03-29T13:17:30Z | 2022-03-29T13:17:30Z | NONE | Codecov Report

```diff @@ Coverage Diff @@ main #1694 +/-=======================================

Coverage 91.74% 91.74% Continue to review full report at Codecov.

|

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Update click requirement from <8.1.0,>=7.1.1 to >=7.1.1,<8.2.0 1184850675 | |

| 1081079506 | https://github.com/simonw/sqlite-utils/issues/421#issuecomment-1081079506 | https://api.github.com/repos/simonw/sqlite-utils/issues/421 | IC_kwDOCGYnMM5Ab_bS | learning4life 24938923 | 2022-03-28T19:58:55Z | 2022-03-28T20:05:57Z | NONE | Sure, it is from the documentation example: Extracting columns into a separate table sqlite-utils insert global.db power_plants \ 'global_power_plant_database.csv?raw=true' --csv Extract those columns:sqlite-utils extract global.db power_plants country country_long \ --table countries \ --fk-column country_id \ --rename country_long name ``` |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

"Error: near "(": syntax error" when using sqlite-utils indexes CLI 1180427792 | |

| 1081047053 | https://github.com/simonw/sqlite-utils/issues/420#issuecomment-1081047053 | https://api.github.com/repos/simonw/sqlite-utils/issues/420 | IC_kwDOCGYnMM5Ab3gN | simonw 9599 | 2022-03-28T19:22:37Z | 2022-03-28T19:22:37Z | OWNER | Wrote about this in my weeknotes: https://simonwillison.net/2022/Mar/28/datasette-auth0/#new-features-as-documentation |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Document how to use a `--convert` function that runs initialization code first 1178546862 | |

| 1080141111 | https://github.com/simonw/sqlite-utils/issues/420#issuecomment-1080141111 | https://api.github.com/repos/simonw/sqlite-utils/issues/420 | IC_kwDOCGYnMM5AYaU3 | simonw 9599 | 2022-03-28T03:25:57Z | 2022-03-28T03:54:37Z | OWNER | So now this should solve your problem: ``` echo '[{"name": "notaword"}, {"name": "word"}] ' | python3 -m sqlite_utils insert listings.db listings - --convert ' import enchant d = enchant.Dict("en_US") def convert(row): global d row["is_dictionary_word"] = d.check(row["name"]) ' ``` |

{

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 1,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

Document how to use a `--convert` function that runs initialization code first 1178546862 | |

| 1079806857 | https://github.com/simonw/datasette/issues/1688#issuecomment-1079806857 | https://api.github.com/repos/simonw/datasette/issues/1688 | IC_kwDOBm6k_c5AXIuJ | hydrosquall 9020979 | 2022-03-27T01:01:14Z | 2022-03-27T01:01:14Z | CONTRIBUTOR | Thank you! I went through the cookiecutter template, and published my first package here: https://github.com/hydrosquall/datasette-nteract-data-explorer |

{

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

[plugins][documentation] Is it possible to serve per-plugin static folders when writing one-off (single file) plugins? 1181432624 |

Advanced export

JSON shape: default, array, newline-delimited, object

CREATE TABLE [issue_comments] (

[html_url] TEXT,

[issue_url] TEXT,

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[created_at] TEXT,

[updated_at] TEXT,

[author_association] TEXT,

[body] TEXT,

[reactions] TEXT,

[issue] INTEGER REFERENCES [issues]([id])

, [performed_via_github_app] TEXT);

CREATE INDEX [idx_issue_comments_issue]

ON [issue_comments] ([issue]);

CREATE INDEX [idx_issue_comments_user]

ON [issue_comments] ([user]);

user >30